rtabmap holonomic rotational odometry accuracy issue


Hello,

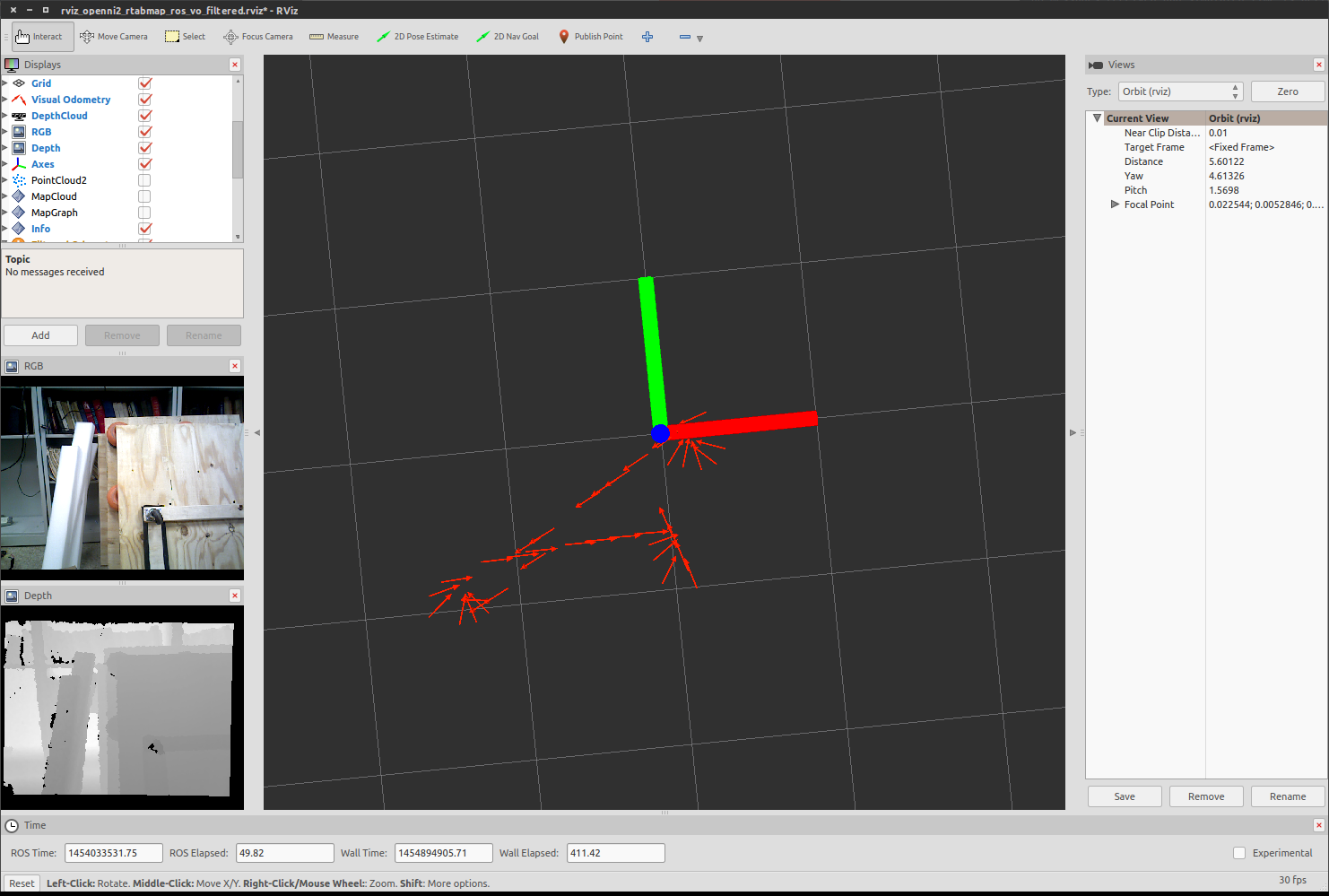

I'm trying to use a PrimeSense, rtabmap (with RGB-D odometry), and robot_localization for robot localization. I'm using a holonomic robot base (DataSpeed mobility base for Baxter). I have everything working, but rtabmap's rgbd_odometry handles rotations very poorly. Because the base is holonomic, a rotation should occur essentially in place (mostly an adjustment of the yaw of the base).

The screenshot actually has a zero transform between camera_link and base_link. I know I need a proper transform from base_link to camera_link, but the behavior I'm seeing is inconsistent regardless. It appears rtabmap thinks the camera is "behind" the robot.

It might be helpful to think about the behavior in terms of concave and convex arcs (think lenses, not optimization). When the robot rotates, the odometry reports a "swing" with the tails of the odometry arrows placed on a convex arc (so the arrows during rotation point inward, towards each other). The expected swing should place the tails on a concave arc (so the odometry arrows point outward, away from each other). I can provide a further visualization if necessary. Conceptually, it's as if the odometry frame is off by a transform: it thinks the robot's base is in front of the camera (so the camera is behind the robot).

Has anyone experienced similar behavior? I can naively apply transforms to mitigate the issue, but that causes problems with the map's location relative to the robot (everything ends up closer to the robot than it should be, because I'm correcting the swing through a transform that brings the scene closer to the robot).

Any help/guidance would be appreciated! Here's a screenshot of a demo; you can see the swing (the arrows point towards each other).

Administrator

Hi,

Do you also have an identity transform between /camera_link and the frame_id contained in the image topics? For example, with freenect_launch:

$ rosrun tf tf_echo /camera_link /camera_rgb_optical_frame

At time 1454949606.123

- Translation: [0.000, -0.045, 0.000]

- Rotation: in Quaternion [-0.500, 0.500, -0.500, 0.500]

in RPY (radian) [-1.571, -0.000, -1.571]

in RPY (degree) [-90.000, -0.000, -90.000]

If it is identity, the odometry may think the camera is looking up. If you rotate only the camera in place, does it still swing? Indeed, if the TF base_link->camera_link doesn't match reality, there will be a constant odometry error.

cheers, Mathieu
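As a quick sanity check on the tf_echo output above, the quaternion and the RPY lines can be compared by hand. The sketch below is plain Python with no ROS dependency. It assumes the [x, y, z, w] ordering that tf_echo prints and the fixed-axis roll/pitch/yaw convention; the function name `quaternion_to_rpy` is only illustrative.

```python
import math

def quaternion_to_rpy(x, y, z, w):
    """Convert a unit quaternion (x, y, z, w) to fixed-axis roll, pitch, yaw in radians."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to guard against rounding slightly outside [-1, 1].
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw

# Rotation reported for camera_link -> camera_rgb_optical_frame in this thread.
optical = quaternion_to_rpy(-0.5, 0.5, -0.5, 0.5)
print([round(math.degrees(a), 3) for a in optical])

# An identity rotation, the case being asked about.
identity = quaternion_to_rpy(0.0, 0.0, 0.0, 1.0)
print([round(math.degrees(a), 3) for a in identity])
```

For the quaternion in this thread, the result should agree with the reported roll and yaw of -90 degrees with zero pitch, whereas an identity transform would give zeros for all three angles.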


Hi Mathieu,

Indeed, I have the same camera_link to camera_rgb_optical_frame transformation as you:

$ rosrun tf tf_echo camera_link camera_rgb_optical_frame

At time 1454033510.146

- Translation: [0.000, -0.045, 0.000]

- Rotation: in Quaternion [-0.500, 0.500, -0.500, 0.500]

in RPY (radian) [-1.571, -0.000, -1.571]

in RPY (degree) [-90.000, -0.000, -90.000]

And the transform from camera_link to camera_depth_optical_frame:

At time 1454033523.169

- Translation: [0.000, -0.020, 0.000]

- Rotation: in Quaternion [-0.500, 0.500, -0.500, 0.500]

in RPY (radian) [-1.571, -0.000, -1.571]

in RPY (degree) [-90.000, -0.000, -90.000]

I've also rotated the camera in place, completely separated from the robot, and I observe the same swing (so this isn't just about the transform from base_link to camera_link).

Thanks for your help.

Mark

Administrator

Hi Mark,

I can reproduce a problem similar to yours: a consistent translation, always to the same side, when rotating in place (i.e., when turning left, the camera translates left). When turning left and then right, the camera still comes back to the initial pose. It seems to be a fixed-transform issue. On a Kinect360, the optical lens of the camera is not on the 'z' rotation axis of the casing. By adding a translation of about 2 cm on the +x axis, the translation is much smaller. Both the x and y components therefore influence this problem.

cheers, Mathieu
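The fixed-transform effect described above can be illustrated with a little 2D geometry. This is a minimal sketch, not rtabmap's implementation: it assumes a perfect visual estimate of the camera pose, a pure yaw about the casing axis, and a hypothetical helper `apparent_base_drift`. If the lens really sits at `true_offset` from the rotation axis but the TF claims `assumed_offset`, the leftover lever arm is rotated with the casing and shows up as base translation.

```python
import math

def apparent_base_drift(theta, true_offset, assumed_offset):
    """Apparent base translation (x, y) in metres after an in-place yaw of theta radians.

    true_offset / assumed_offset: (x, y) of the camera relative to the rotation axis,
    as it really is and as the TF tree claims (both are illustrative inputs).
    """
    ex = true_offset[0] - assumed_offset[0]
    ey = true_offset[1] - assumed_offset[1]
    c, s = math.cos(theta), math.sin(theta)
    # Residual lever arm rotated by theta, minus its value at theta = 0.
    return (c * ex - s * ey - ex, s * ex + c * ey - ey)

quarter_turn = math.pi / 2

# Lens 2 cm ahead of the axis, but the TF says it sits on the axis.
wrong_tf = apparent_base_drift(quarter_turn, (0.02, 0.0), (0.0, 0.0))
# Same lens, with the 2 cm offset declared in the TF.
right_tf = apparent_base_drift(quarter_turn, (0.02, 0.0), (0.02, 0.0))

print("drift with zero offset in TF:", math.hypot(*wrong_tf))
print("drift with 2 cm offset in TF:", math.hypot(*right_tf))
```

Because the drift depends only on the rotation angle, it returns to zero when the camera turns back to its starting heading, which is consistent with the pose coming back to the initial position after turning left and then right.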


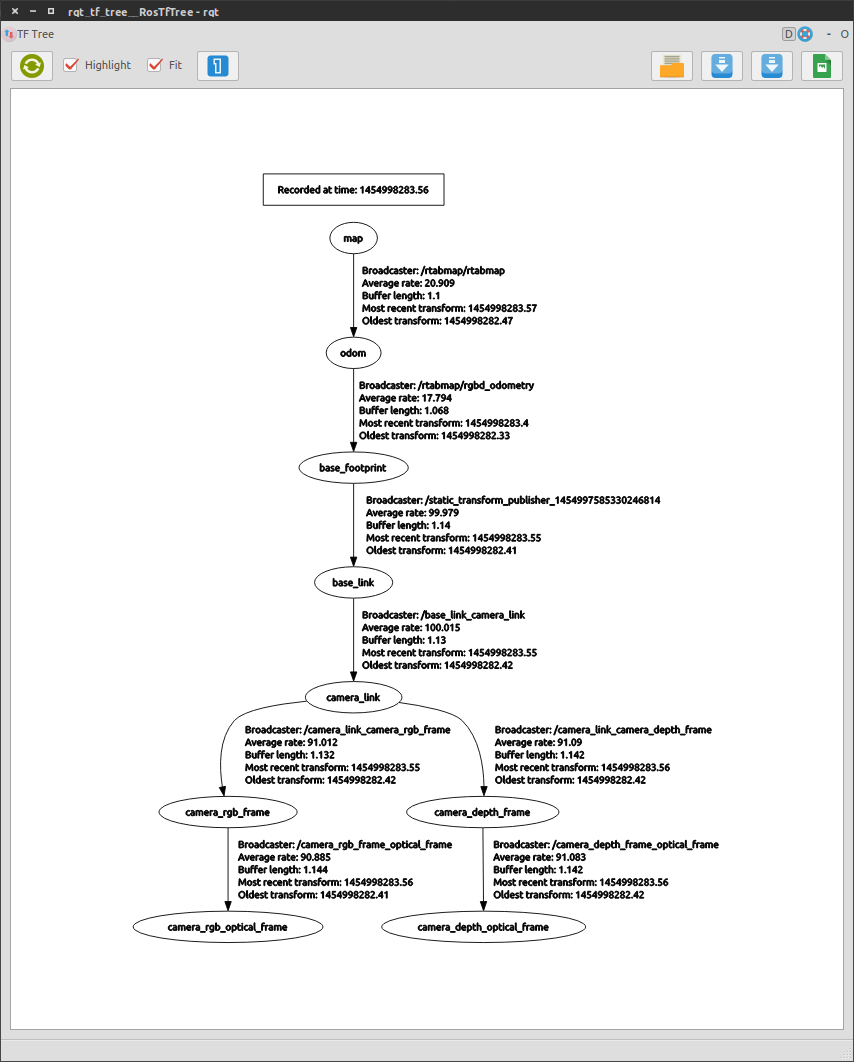

Mathieu,

Thanks for the reply. I actually thought the TFs in openni2 might be the source of the problem, so I recreated them according to the actual locations of the camera's sensors. However, I'm still seeing extremely similar and problematic behavior from rtabmap. I've uploaded two videos, one showing the odometry swing and another showing the TFs. The TFs lead me to believe the problem comes from the rgbd_odometry node (since rtabmap is the only one publishing the odom -> base_link transform).

Here are the two videos: https://www.youtube.com/watch?v=L80IYc6m5p4 https://www.youtube.com/watch?v=EYWjETxFDcw

I've also included my current openni2 launch file and my rtabmap launch file: rtabmap.launch openni2.launch

And here's my tf tree.

I'm on a bit of a time crunch to get this figured out; if anyone has any fixes, please let me know! I'm more than willing to provide more information and thoroughly document any fix.

Thanks!

Administrator

Hi Mark,

Did you compare at different distances from the background/objects? Which PrimeSense sensor are you using? The Carmine 1.09 has an operating range of 0.35 – 1.4 meters and the 1.08 has 0.8 – 3.5 meters. With this kind of sensor, farther features have less reliable depth for good odometry.

I tried your launch files with rtabmap 0.10.12. I only changed in rtabmap.launch "base_footprint" to "base_link" and in openni2.launch I used "debayer_processing=true" and "device.xml.launch" of freenect_launch instead of openni2_launch (I am using a Kinect360):

$ roslaunch openni2.launch depth_registration:=true

$ roslaunch rtabmap.launch

$ rosrun rviz rviz

I've recorded this video with in-place rotations: https://youtu.be/I_sC1AoIYDU

Part 1: Odometry looks OK; the objects are under 2 meters away.

Part 2: I held the Kinect in the air over the workspaces so that the background is farther away. Odometry has more errors and shows a swing behavior. Note how the depth beyond 4 meters is less precise (it looks like depth lines). When most features are tracked at this depth, the odometry may swing because the features are "translating" on the "same" depth value. Note also how shaky the odometry is with farther tracked features.

Part 3: Same as Part 2 but with a closer view. This time the odometry seems to have picked up more good close features than in Part 2, so the camera swings less.

I've also done a comparison with a Kinect v2, for which the depth is more precise at longer distances: https://youtu.be/d3YjHvYJZj0. The default base_link used is the color camera, which is on the right of the Kinect; this explains why there is a "correct" swing behavior at the start. When holding the Kinect over the workspaces as in Parts 2-3 of the previous video, the swing does not increase (thanks to precise features under 10 meters).

cheers, Mathieu
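The loss of depth precision with distance can be approximated with the usual triangulation relation, where the smallest resolvable depth change grows with the square of the range. The sketch below is only a rough model: the focal length, baseline and disparity step are illustrative assumptions for a Kinect360-class sensor, not values taken from this thread or from a datasheet, and `depth_step` is a hypothetical helper.

```python
def depth_step(z_m, focal_px=580.0, baseline_m=0.075, disparity_step_px=0.125):
    """Approximate depth resolution (metres) at range z_m for a triangulation-based sensor.

    The default parameter values are assumed for illustration only.
    """
    return (z_m ** 2) * disparity_step_px / (focal_px * baseline_m)

for z in (1.0, 2.0, 4.0):
    print(f"range {z:.1f} m -> depth step about {depth_step(z) * 100:.1f} cm")
```

Under these assumptions the step at 4 meters is 16 times the step at 1 meter, so distant features tend to fall on a few discrete depth values, which would fit the "depth lines" visible in Part 2 of the video.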


Hi Mathieu,

It was a crazy couple of weeks, but I'm back working on this project. I think your analysis is correct, and the Kinect v1 results I've obtained agree with it. The translation is significantly improved when using a camera with a better depth sensor, and the room is large enough that the PrimeSense (3 m depth) doesn't have enough range.

For anyone else reading this, you can see this effect in the video I posted: the turn at the plywood with the orange donuts is accurate at first (within 1 m), and then translates poorly as the depth of the nearest object increases. I think Mathieu's response and analysis are correct.

Thank you for your help and detailed responses; I really appreciate it!

Mark
