rtabmap + orbbec reconstrution failure

rtabmap + orbbec reconstrution failure

|

This post was updated on .

Hi, dear friends,

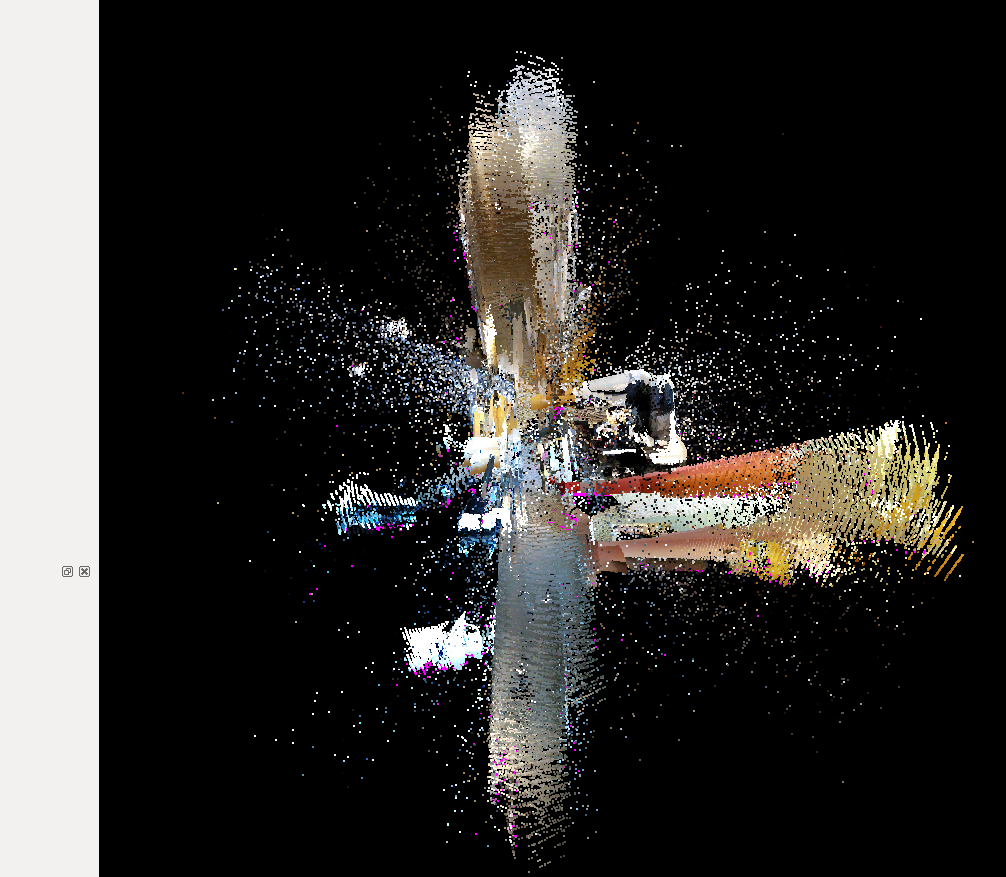

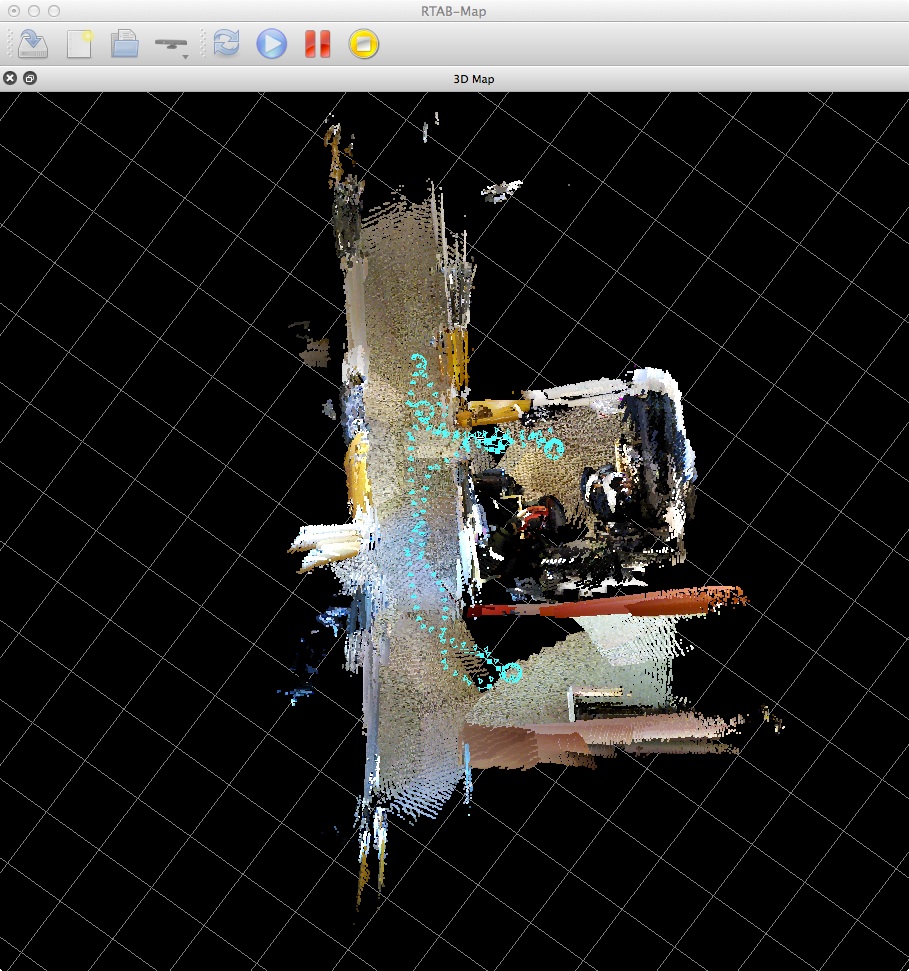

I'm newbie to rtabmap and 3d SLAM reconstruction. I encounter a problem when using rtabmap_ros + orbbec astra pro camera to reconstruct 3d map in my office. Here are the details for my setting: 1) Devices: Turtlebot + Orbbec be Astra Pro camera 2) ROS Version: Indigo 3) rtabmap for reconsturction: ros-indigo-rtabmap-ros + demo_turtlebot_mapping.launch Here is the result I get.  The reconstruction result is quite noisy. What should I do to get cleaner and better reconstruction? I've uploaded by .db file here: https://drive.google.com/file/d/0B1WOOizQRMEkR2E2cUhwZ25Ibm8/view?usp=sharing I've tried to use Tango device Rtabmap to reconstruct the same area and get much cleaner and better result. Any idea on potential problem in my turtlebot case? Besides, I'm mounting my camera on top of the robot. Is it necessary to get the correct TF between base_link and camera_link in order to make rtabmap work properly? Does rtabmap algorithm depend on the robot odometry, since there may be odometry error due to the control precision? And, when I tried to use rtabmap stand-alone version to detect loop closure, I get the following error: [FATAL] DBDriverSqlite3.cpp:4970::stepKeypoint() Condition (rc == SQLITE_OK) not met! [DB error (0.11.8): bind or column index out of range] Any idea on why this occurs? Thank you! |

|

Administrator

|

Hi,

I am using the latest version of rtabmap, but the parameters should look the same. Open Preferences->3D rendering:   With only a kinect-like sensor, don't use ICP for registration. Set Reg/Strategy to 0. Set also Vis/MinInliers to 25 to get more accurate loop closures (and reject bad ones):  Yes it is necessary to have an accurate TF between base_link and camera_link, in particular when odometry is not computed from the camera frame (e.g., robot/wheel odometry). If the TF is not accurate, the point clouds won't be correctly aligned against odometry poses. Yes, RTAB-Map depends on odometry, so there will be always some drift. The difference with Tango, is that visual inertial odometry approach of Tango may be more accurate than turtlebot wheel odometry. However, in this case I don't think the odometry of turtlebot is that bad, maybe it is just the TF between base frame and camera frame that is not accurate enough. For example, if you do a 360 degrees rotation and odometry tells you also that you did 360 degrees rotation and the point clouds are not correctly aligned, this is a TF problem between base and camera. For the error, I didn't encountered this problem while replaying the database. If you can describe the exact steps you did with the database above, I may be able to reproduce the bug. cheers, Mathieu |

Re: rtabmap + orbbec reconstrution failure

|

In reply to this post by daerduocarey

Thank you so much for the detailed reply, Mattieu. The information you provided is really helpful!

Could you simply teach me how can I get the precise TF between camera_link and base_link? I don't mount my camera at the standard place for Kinect. I mount it higher on top of my turtlebot. I can measure the rough TF for x, y and z translation. Is it necessary to make it more accurate? I plot out the simulated laser scan from my orbbec camera and rotate my robot for 360 degree. I find that there is some slight drift but the accumulated scan can delineate the rough layout for my office. I want to know how accurate does the TF need to be? Thank you! Bests, Kaichun |

|

Administrator

|

Hi Kaichun,

I generally measures roughly the position of the kinect and then with RVIZ, I project the point cloud and make sure roll/pitch orientation and z position is good (ground cloud is superposing perfectly the plane xy). Adjust TF or move the camera iteratively. For yaw rotation and y position, I can put a tape on the ground in middle of the robot and make sure that in RVIZ it is also straight in the middle. For x position, you may put the robot in front of a wall at known distance and see if the cloud corresponds in RVIZ (using the grid). An automatic approach: http://wiki.ros.org/openni/Contests/ROS%203D/Automatic%20Calibration%20of%20Extrinsic%20Parameters cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |