rtabmap_ros outdoor 3D mapping

|

Dear people.

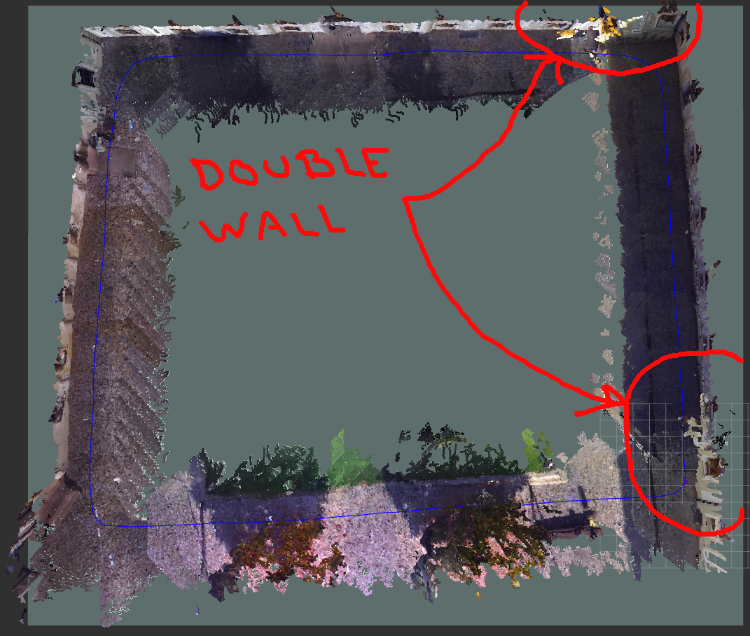

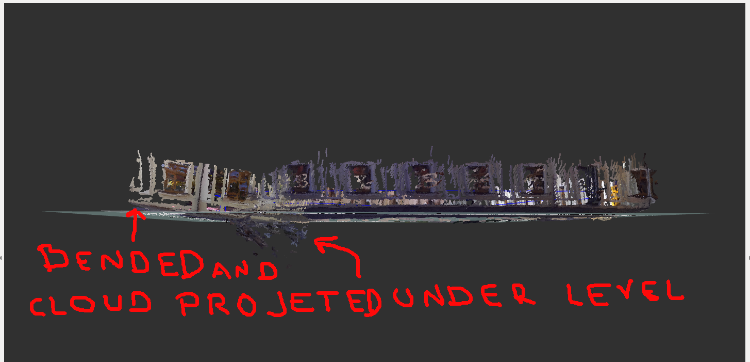

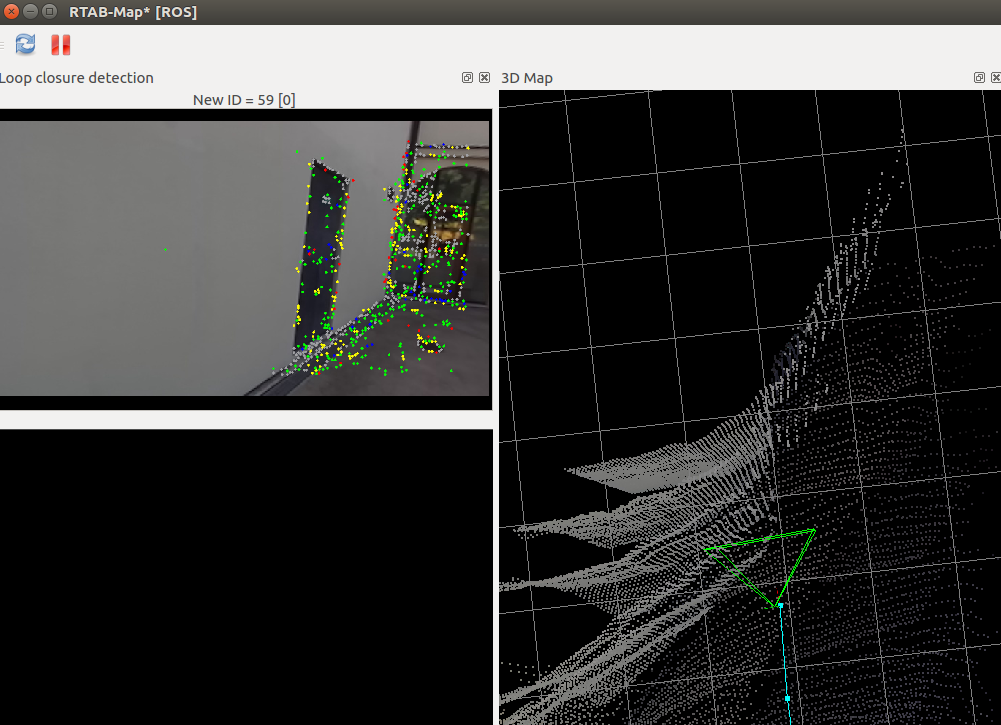

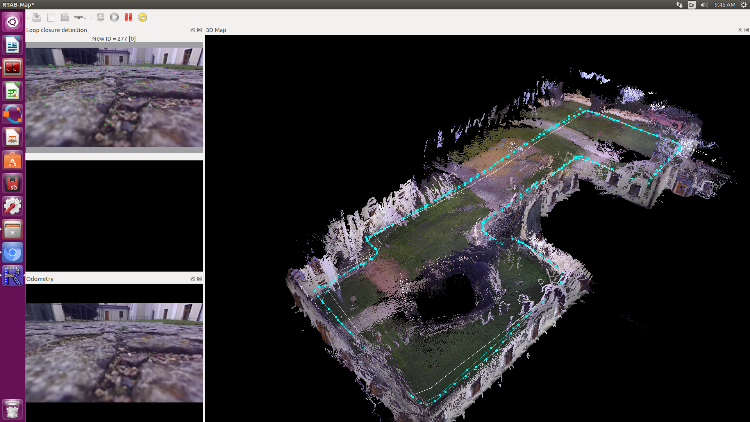

I have a ZED camera and a Jetson TX2 mounted on a drone. I carried the drone by hand to map an outdoor place with the rtabmap_ros node, and I ran into some problems. I have taken two pictures of the point cloud in RViz. In the first picture, the individual point clouds of the wall are not aligned with each other. In the next picture, the wall is bent. Do you have any clue how to solve these issues? I am looking forward.

|

Administrator

|

Hi,

For the double wall, it is difficult to see. But when there is a double wall, it means there is some drift, or there is an overlap between the walls seen from different orientations or distances. If loop closures are detected, they would reduce the error. If you have a database to share, it would be easier to look at.

For the bending, it is caused by roll/pitch errors in odometry. Which odometry do you use? Is it rtabmap's visual odometry or the ZED's odometry? Is it a ZED or a ZED-M camera? To reduce that bending in 6DoF mapping, we need to inject gravity constraints into the graph (through the drone IMU topic, for example). See http://official-rtab-map-forum.206.s1.nabble.com/Subscribe-to-imu-topic-td6099.html#a6102 for more info.

cheers,
Mathieu

|

Hi Mathieu,

Unfortunately I do not have a dataset; I deleted it, sorry for that. I have just a ZED camera. However:

* I have recalibrated the ZED camera with Matlab.
* I have tilted the camera towards the floor by about 18 degrees.
* I tried rtabmap's visual odometry but did not get good results, so I tried the ZED's odometry and got better results.
* I have used the ZED odometry.
* I have remapped the same place, well, half of it.
* I can get IMU data from the Pixhawk 4 Mini, but as far as I know it needs to be synchronized, right? Or how can I set up the IMU if I use the ZED odometry?
* I will map another place, and if I get bending or alignment problems I will share the database this time.

As you can see, the wall bending has been reduced, and so has the misalignment of the walls, but a bit of both remains.

Thank you and cheers

|

Hi Mathieu,

I have mapped another outdoor scenario. I got two problems:

* The wall that is not aligned
* The detected sunlight

I have also uploaded a rtabmap.db for you to take a look :) http://www.fit.vutbr.cz/~plascencia/rtabmap.db

By the way, how do you play back a rtabmap_ros database?

You can see the pictures:

Thank you.

|

Administrator

|

Hi,

If you are using rtabmap.launch, set the imu_topic argument to the topic of your IMU. It can be roughly synchronized with the ZED. If the rtabmap node is used directly, add a remap for the "imu" topic. Make sure the TF between the IMU and the ZED is accurate (at least for orientation). Then, to use the gravity constraints in the graph, add the parameter "--Optimizer/GravitySigma 0.3". On loop closures, it will optimize the graph to be aligned with gravity.

To run a database again like a rosbag:

    $ roslaunch rtabmap_ros rtabmap.launch \
        args:="-d --Rtabmap/DetectionRate 0" \
        visual_odometry:=false \
        rgb_topic:=/rgb/image \
        depth_topic:=/depth_registered/image \
        camera_info_topic:=/rgb/camera_info \
        odom_frame_id:=odom \
        frame_id:=base_link \
        use_sim_time:=true

    $ rosrun rtabmap_ros data_player \
        _database:=~/Downloads/rtabmap.db \
        _rate:=1 \
        --clock

The "rate" argument can be used to play the database faster (>1) than real-time. Note that odometry is not recomputed; the one from the real experiment is used directly. That is why a rosbag is usually the preferred way to record data.

The misaligned wall is a depth computation issue: the ZED algorithm interpolates a lot on featureless surfaces. I think the pose is good, but the wall is very distorted. There may be parameters on the ZED side to avoid creating depth on featureless surfaces like that.

What kind of problem is the sunlight causing? We cannot remove sunlight from the map: if it is in the RGB image, it will appear in the map.

cheers,
Mathieu

|

Hi Mathieu,

Thank you very much for the answer. However, I have some doubts about what you have written :)

> If you are using rtabmap.launch, set imu_topic argument to topic of your IMU. It can be roughly synchronized with the zed. If rtabmap node is used directly, add the remap for "imu" topic. Make sure TF between imu and the zed is accurate (at least for orientation).

* I guess this is to make the ZED odometry rotations more robust, right? And how do I obtain the TF between the IMU and the ZED? :)
* And why use TF if I am using the rtabmap node, but not if I am using rtabmap.launch?

> Then to use the gravity constraints in the graph, add parameter "--Optimizer/GravitySigma 0.3". On loop closures, it will optimize the graph to be aligned with gravity.

* What does it mean for the graph to be aligned with gravity? :)

> The not aligned wall is a depth computation issue, the zed algorithm interpolates a lot on featureless surfaces. I think the pose is good, but the wall is very distorted. There are maybe parameters on zed side to avoid creating depth on featureless surfaces like that.

* How can interpolation on featureless surfaces cause multiple walls?
* I have sent a mail to Stereolabs about the issue of featureless surfaces and I got the following reply:

> The ZED camera uses stereo vision to estimate the depth. This technique detects details of objects in the left and right images to compare their position to estimate their depth. Homogeneous surfaces are textureless so the depth algorithm tries to estimate them based on nearby objects. As this is less precise, the depth can vary in these areas which result in duplicated walls in your scan. The ZED SDK has no option to filter this out but I've reported your suggestion and pictures to the development team. I'll keep you updated if any solution gets available for testing.

Based on the previous answer, what can be done to solve that issue?

Thank you and looking forward to your reply.

|

Administrator

|

Depending on the frames of the ZED and the IMU, you can set up a static_transform_publisher with the correct orientation between those frames.

TF is used in both cases (that remark applies to the two previous sentences).

Aligned with gravity means that the orientation of the point clouds created will be aligned with the horizon. For example, if the ground is flat, it should appear flat in the resulting map.

If the depth estimation is poor on textureless surfaces, this could create your double-wall effect. As I said in the previous post, it is possible to try another disparity estimation approach on the rtabmap side with cv::StereoBM. To do that, you should use the stereo mode of rtabmap with the left/right images of the ZED, not the rgb/depth images. See the stereo demo with the ZED here.
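To make the link between disparity and the double-wall effect concrete, here is a minimal sketch of the usual pinhole stereo relation Z = f * B / d and its first-order sensitivity to a disparity error. The focal length and baseline below are illustrative assumptions, not values read from this camera's calibration, and the functions are hypothetical helpers that are not part of rtabmap or the ZED SDK.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo model: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_error_px):
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    FOCAL_PX = 700.0   # assumed focal length in pixels (illustrative only)
    BASELINE_M = 0.12  # assumed stereo baseline in metres (illustrative only)
    for depth in (2.0, 5.0, 10.0):
        err = depth_error(depth, FOCAL_PX, BASELINE_M, disparity_error_px=1.0)
        print(f"depth {depth:4.1f} m: about {err:.2f} m per pixel of disparity error")
```

Because the error grows with the square of the distance, a wall whose disparity is interpolated slightly differently from two viewpoints can land at two noticeably different depths, which would show up as two walls in the assembled cloud.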

|

Hi Mathieu

Thank you for your reply. I know about the static transformation, but do you have or know of an example of how to get that transformation? :)

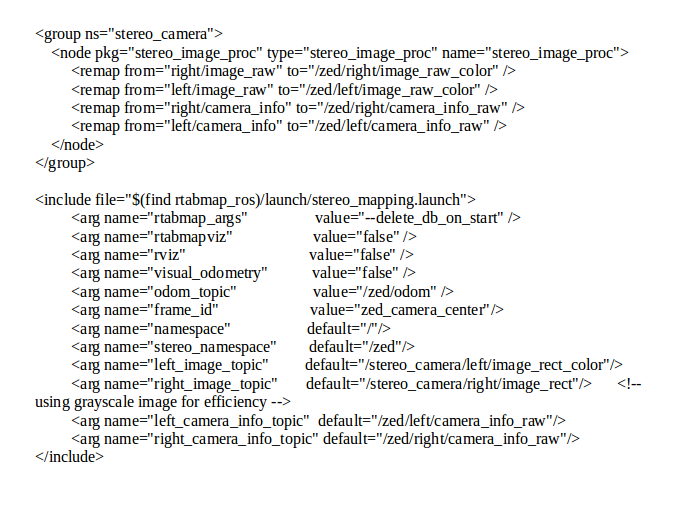

I have taken a look at the stereo_mapping demo as you suggested, and I have created a launch file with the main script as follows:

I have run the launch file in two scenarios. The first one is outdoor and the second one is indoor.

Outdoor: http://www.fit.vutbr.cz/~plascencia/rtabmap_sq1.db

Indoor: http://www.fit.vutbr.cz/~plascencia/rtabmap_ind.db

I am looking forward to your reply.

|

Administrator

|

Hi,

For the static transform, I would use a ruler :P

The outdoor database is empty, but I did take a look at the indoor one. The artifacts under the floor are caused by reflections on the surfaces. I think you would see similar behavior with the ZED depth estimation (in particular on reflective floors). Lights reflecting on the ground can also create points under the ground. Note that reflective surfaces are quite an issue with almost all kinds of sensors (stereo, RGB-D, lidar).

For the double-wall effect indoor (as well as the shifted door), it looks like it is caused by some odometry drift. For outdoor, it seems that the depth estimation was not that accurate on that kind of surface for some reason (are there windows?).

cheers,
Mathieu

|

So this is more an issue of the ZED driver, right? There is nothing to do, right? Or how can I avoid these underground points? I have used the ZED odometry as well as the rtabmap odometry, but I get the same result with multiple walls. Yes, where I tried outdoor, there are windows.

I received a suggestion from Stereolabs, and I have done what they suggested using cv_bridge. When I created the depth image with NaN values and then converted the cv image to a ROS image with cv_bridge, the NaN values were converted to black. I tried with that image but I got the same result.

To be honest, I do not know what I can do to get good 3D indoor as well as outdoor maps using rtabmap_ros. I am looking forward to your reply.

|

Administrator

|

For the reflections on the floor, one option, assuming that we are always on the same floor, would be to filter points under the xy plane. With Grid/NormalsSegmentation=false and Grid/MaxGroundHeight=0.05, we could get /rtabmap/cloud_obstacles with only points more than 5 cm above the floor.
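As an illustration of the idea only (this is not how rtabmap implements its Grid parameters), a height filter on an already assembled cloud could look like the sketch below. It assumes the points are expressed in a gravity-aligned map frame with z up and the floor at z = 0; `remove_points_below` is a hypothetical helper name.

```python
import numpy as np


def remove_points_below(points_xyz, min_height_m=0.05):
    """Keep only the points whose z coordinate is above min_height_m.

    points_xyz: (N, 3) array of x, y, z in a frame where z points up
    and the floor lies on the xy plane (an assumption of this sketch).
    """
    points_xyz = np.asarray(points_xyz, dtype=float)
    keep = points_xyz[:, 2] > min_height_m
    return points_xyz[keep]


if __name__ == "__main__":
    cloud = np.array([
        [0.0, 0.0, -0.30],  # reflection artifact under the floor
        [1.0, 0.0, 0.02],   # floor point
        [1.0, 1.0, 0.50],   # obstacle
    ])
    print(remove_points_below(cloud))
```

Such a filter only makes sense if the map really is level; if the map is tilted or bent, a fixed z threshold will also cut away valid points.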

For the Stereolabs answer, it seems a good idea to filter their depth image. As explained in this previous post, re-creating the depth image with OpenCV can be another option too. I am not sure which option would need more processing power from OpenCV: edge detection or disparity computation.

To have a good indoor 3D reconstruction, a lidar or an RGB-D camera is recommended (the ZED is a stereo camera). For outdoor, either a lidar or a stereo camera. In your case with the ZED, you should filter the depth images to remove depth values in textureless areas, so you would get a relatively accurate 3D reconstruction with some holes (on textureless surfaces).

cheers,
Mathieu
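One possible way to do that filtering is sketched below with NumPy only: depth is set to NaN wherever the local intensity variation of the grayscale image falls below a threshold. This is a sketch under assumptions, not the method suggested by Stereolabs (which is not reproduced in this thread). The window size and threshold are arbitrary starting values, `mask_textureless_depth` is a hypothetical helper, and it assumes the consumer of a 32-bit float depth image treats NaN as "no measurement".

```python
import numpy as np


def mask_textureless_depth(gray, depth, window=7, min_std=4.0):
    """Return a copy of depth with NaN where the image has little texture.

    gray:    (H, W) grayscale image aligned with the depth image.
    depth:   (H, W) depth image in metres.
    window:  odd side length of the square neighbourhood, in pixels.
    min_std: minimum local standard deviation of intensity to keep depth.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    gray = np.asarray(gray, dtype=np.float64)
    out = np.array(depth, dtype=np.float32)  # copy, float so it can hold NaN
    if gray.shape != out.shape:
        raise ValueError("gray and depth must have the same shape")

    pad = window // 2
    padded = np.pad(gray, pad, mode="edge")
    # View of shape (H, W, window, window): one neighbourhood per pixel.
    # Note: simple but memory-hungry on full-resolution images.
    neighbourhoods = np.lib.stride_tricks.sliding_window_view(
        padded, (window, window))
    local_std = neighbourhoods.std(axis=(-2, -1))

    out[local_std < min_std] = np.nan
    return out


if __name__ == "__main__":
    rows, cols = np.indices((20, 20))
    gray = np.zeros((20, 20))
    # Left half is flat, right half is a checkerboard (strong texture).
    gray[:, 10:] = 255.0 * ((rows[:, 10:] + cols[:, 10:]) % 2)
    depth = np.ones((20, 20), dtype=np.float32)
    filtered = mask_textureless_depth(gray, depth)
    print("kept pixels:", int(np.isfinite(filtered).sum()))
```

The holes this leaves on plain walls are the trade-off mentioned above: fewer points, but the remaining ones come from regions where stereo matching had something to match.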

|

Hi Mathieu,

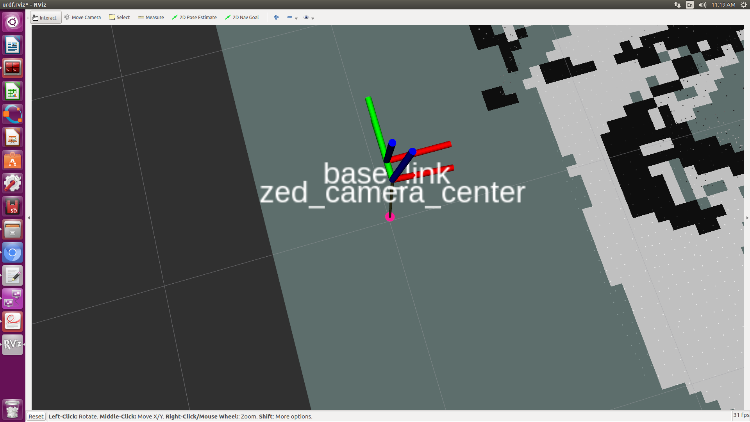

I have used Kalibr to calibrate the IMU topic /mavros/imu/data from the Pixhawk 4 Mini against the /stereo_camera/left/image_mono and /stereo_camera/right/image_mono topics from the ZED camera. I obtained the following two transformation matrices.

T_cam_imu for rostopic /stereo_camera/left/image_mono:

    - [-0.01172298941818703, -0.9917474694886735, 0.12766960594406557, 0.0009733116714396002]
    - [-0.2241398884968312, -0.12182314042441611, -0.9669128362172881, -0.001738025514640773]
    - [0.9744864708674659, -0.039950960188021634, -0.22086203584212016, 0.0017137757927055978]
    - [0.0, 0.0, 0.0, 1.0]

T_cam_imu for rostopic /stereo_camera/right/image_mono:

    - [-0.0030002316421554875, -0.9920830562172235, 0.12554763309910266, -0.11063708293669737]
    - [-0.22017184308270676, -0.1218120127551974, -0.9678254972163587, -0.0018464550183625145]
    - [0.9754564870478116, -0.030545754454934293, -0.21806329072340788, 0.0019577491538307953]
    - [0.0, 0.0, 0.0, 1.0]

I used the rotation part of the left cam_imu matrix and the 3D Rotation Converter to obtain the [x y z w] quaternion. Then I used the ROS getRPY() function to obtain the roll, pitch and yaw angles: Roll: -3.00242, Pitch: -1.34878, Yaw: -1.58442. Then I inserted them into the zed.urdf file, as you can see:

    <robot name="zed_camera">
      <link name="zed_camera_center">
        <visual>
          <origin xyz="0 0 0" rpy="0 0 0"/>
          <geometry>
            <mesh filename="package://zed_wrapper/urdf/ZED.stl" />
          </geometry>
          <material name="light_grey">
            <color rgba="0.8 0.8 0.8 0.8"/>
          </material>
        </visual>
      </link>
      <link name="base_link" />
      <joint name="zed_base_link_camera_center_joint" type="fixed">
        <parent link="zed_left_camera_optical_frame"/>
        <child link="base_link"/>
        <origin xyz="0 0 0" rpy="-3.00242 -1.34878 -1.58442 " />
      </joint>

I have also added the IMU topic and Optimizer/GravitySigma, as you can see:

    <include file="$(find rtabmap_ros)/launch/rtabmap.launch">
      <arg name="rtabmap_args" value="--delete_db_on_start --Optimizer/GravitySigma 0.3" />
      <arg name="imu_topic" value="/mavros/imu/data"/>

You can also see the two frames in RViz.

My question is whether what I have done is correct. What do you think?

cheers
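The matrix-to-RPY step can be cross-checked offline with a short script. The sketch below assumes the fixed-axis convention used by URDF `rpy` attributes, R = Rz(yaw) * Ry(pitch) * Rx(roll). It also assumes that Kalibr's T_cam_imu maps IMU-frame coordinates into the camera frame, which is worth confirming in the Kalibr documentation, because a joint origin in the opposite direction needs the inverse transform instead. The helper names are hypothetical.

```python
import math

import numpy as np


def rpy_to_rotation(roll, pitch, yaw):
    """Build R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (fixed-axis convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return rz @ ry @ rx


def rotation_to_rpy(rotation):
    """Recover (roll, pitch, yaw) from a 3x3 rotation matrix."""
    rotation = np.asarray(rotation, dtype=float)
    sin_pitch = max(-1.0, min(1.0, -rotation[2, 0]))
    if abs(sin_pitch) > 1.0 - 1e-9:
        raise ValueError("pitch is +/-90 degrees: roll and yaw are coupled")
    pitch = math.asin(sin_pitch)
    roll = math.atan2(rotation[2, 1], rotation[2, 2])
    yaw = math.atan2(rotation[1, 0], rotation[0, 0])
    return roll, pitch, yaw


def invert_transform(transform):
    """Invert a 4x4 rigid transform: [R t]^-1 = [R^T  -R^T t]."""
    transform = np.asarray(transform, dtype=float)
    rotation, translation = transform[:3, :3], transform[:3, 3]
    inverse = np.eye(4)
    inverse[:3, :3] = rotation.T
    inverse[:3, 3] = -rotation.T @ translation
    return inverse


if __name__ == "__main__":
    # Left-camera T_cam_imu as posted above.
    t_cam_imu = np.array([
        [-0.01172298941818703, -0.9917474694886735, 0.12766960594406557, 0.0009733116714396002],
        [-0.2241398884968312, -0.12182314042441611, -0.9669128362172881, -0.001738025514640773],
        [0.9744864708674659, -0.039950960188021634, -0.22086203584212016, 0.0017137757927055978],
        [0.0, 0.0, 0.0, 1.0],
    ])
    print("rpy of T_cam_imu:", rotation_to_rpy(t_cam_imu[:3, :3]))
    t_imu_cam = invert_transform(t_cam_imu)
    print("rpy of the inverse:", rotation_to_rpy(t_imu_cam[:3, :3]))
```

Printing both directions makes it easier to see which set of angles matches the frames drawn in RViz before committing one of them to the URDF.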

|

In reply to this post by matlabbe

Hi Mathieu,

I am using rtabmap.launch and the ZED odometry.

    <include file="$(find rtabmap_ros)/launch/rtabmap.launch">
    <remap from="odom" to="/zed/odom"/>

I have done the following:

1. I have set the imu_topic argument to the topic of my IMU.

        <arg name="imu_topic" value="/mavros/imu/data"/>

2. I have added the gravity constraint parameter "--Optimizer/GravitySigma 0.3" for the graph.

        <arg name="rtabmap_args" value="--delete_db_on_start --Optimizer/GravitySigma 0.3" />

3. I have set the TF between the IMU and the ZED camera.

        <joint name="zed_base_link_camera_center_joint" type="fixed">

|

Administrator

|

For the TF between the IMU and the camera, do the frames in RViz make sense compared to the real setup?

The grid parameters can be set in rtabmap.launch with:

    args:="--Grid/NormalsSegmentation false --Grid/MaxGroundHeight 0.05"

Note however that this assumes that we are using a ground robot moving on a plane. This would only be used for occupancy grid generation. For 3D point cloud generation (File->Export 3D clouds...) in rtabmapviz or MapCloud in RViz, those parameters won't have any effect.

For the points under the ground, it is because the depth image has wrong disparity when the camera is close to the ground.

For the bending, you can try setting Optimizer/GravitySigma to 0.1 to force the graph to be even more aligned with gravity. Be cautious: if the IMU-to-camera transform is wrong, it may not correctly align the ground with the xy plane.

To avoid large deformations between two poses during graph optimization, you should add covariance to the odometry links. This can be done by setting the arguments odom_tf_angular_variance and odom_tf_linear_variance to around 0.01 and 0.0004 respectively. Set odom_frame_id to "odom" (well, the odom frame used by the ZED) to use TF for odometry instead of the topic.

cheers,
Mathieu
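To get a feel for those two numbers, a variance can be converted to a standard deviation by taking its square root. The short sketch below does that, assuming the usual ROS units for odometry covariance (rad² for angles, m² for positions); `sigma_from_variance` is a hypothetical helper, not an rtabmap function.

```python
import math


def sigma_from_variance(variance):
    """Standard deviation is the square root of the variance."""
    if variance < 0:
        raise ValueError("variance must be non-negative")
    return math.sqrt(variance)


if __name__ == "__main__":
    angular_sigma_rad = sigma_from_variance(0.01)   # odom_tf_angular_variance
    linear_sigma_m = sigma_from_variance(0.0004)    # odom_tf_linear_variance
    print(f"angular sigma: {angular_sigma_rad:.3f} rad "
          f"({math.degrees(angular_sigma_rad):.2f} deg)")
    print(f"linear sigma:  {linear_sigma_m:.3f} m")
```

Under that reading, the suggested values correspond to roughly 0.1 rad and 2 cm of uncertainty per odometry link, which gives a reference point when scaling them up or down.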

|

Hi Mathieu,

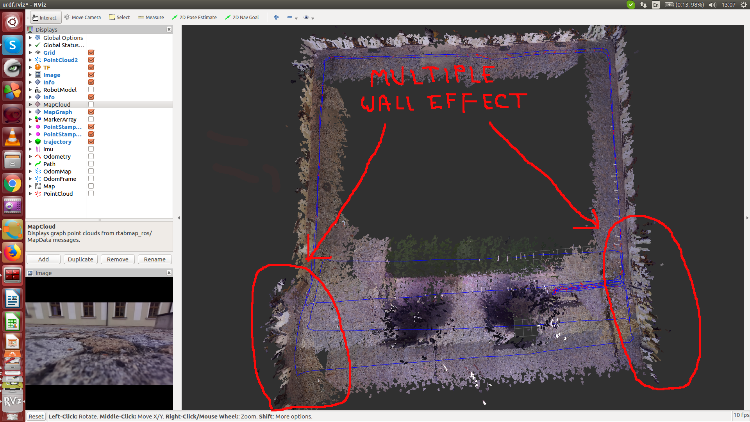

I have done more or less what you suggested. However, when the drone turns either left or right, it creates a multiple-wall effect, as can be seen in the picture, even though I have created a node that removes the textureless areas.

Here is the rtabmap.launch:

Here are the .bag and .db files:

http://www.fit.vutbr.cz/~plascencia/YardHalf.db
http://www.fit.vutbr.cz/~plascencia/FitYardHalf.bag

Any advice is appreciated, thank you :)

|

Administrator

|

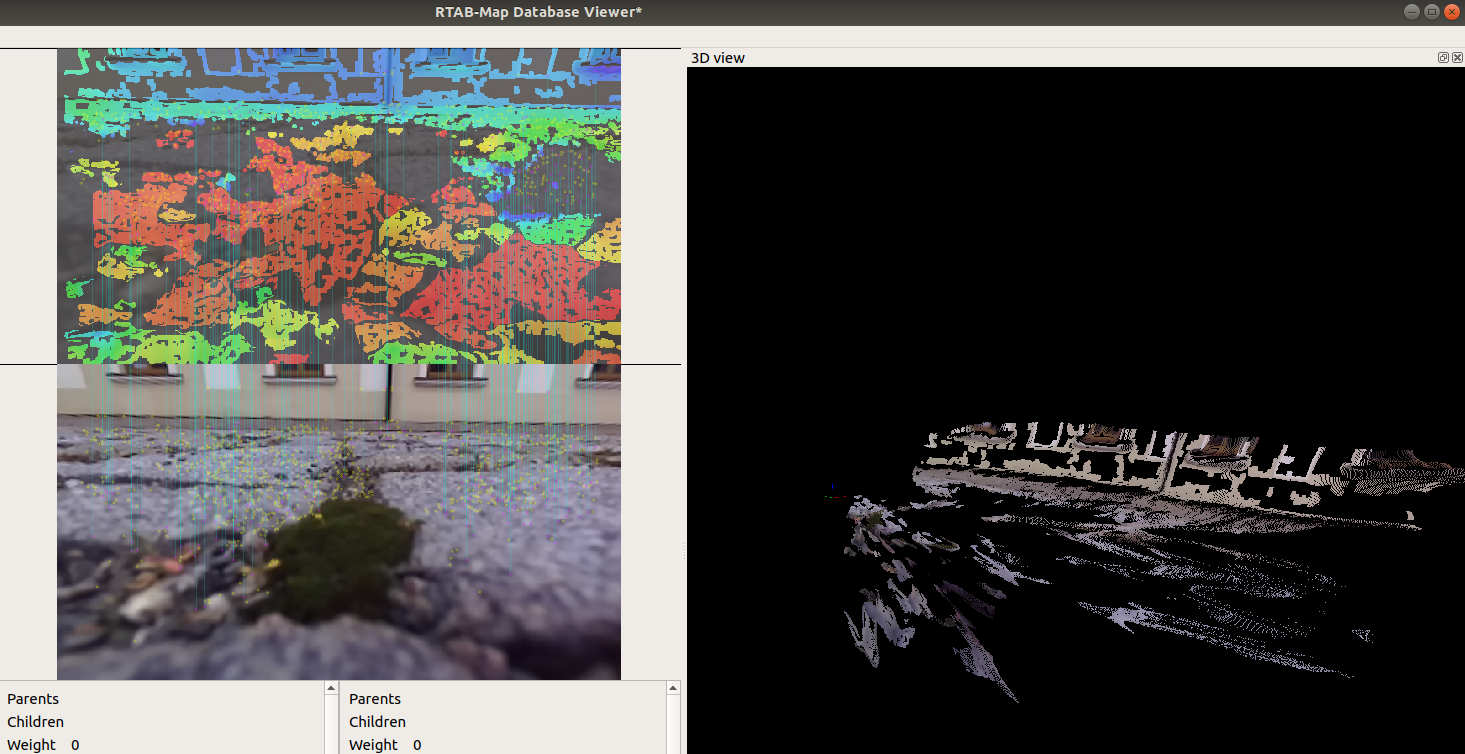

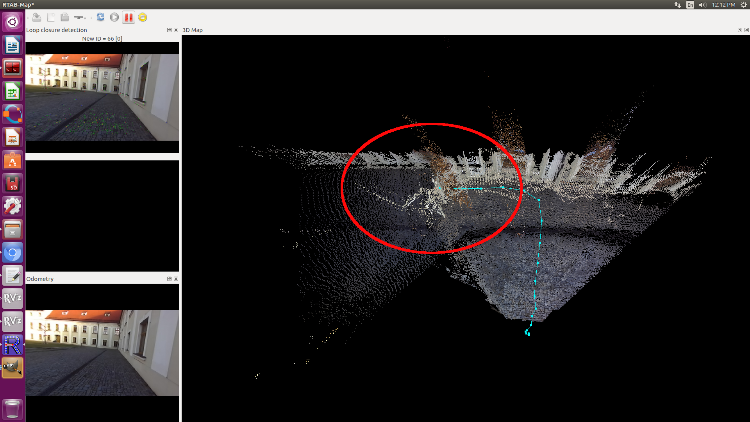

Hi,

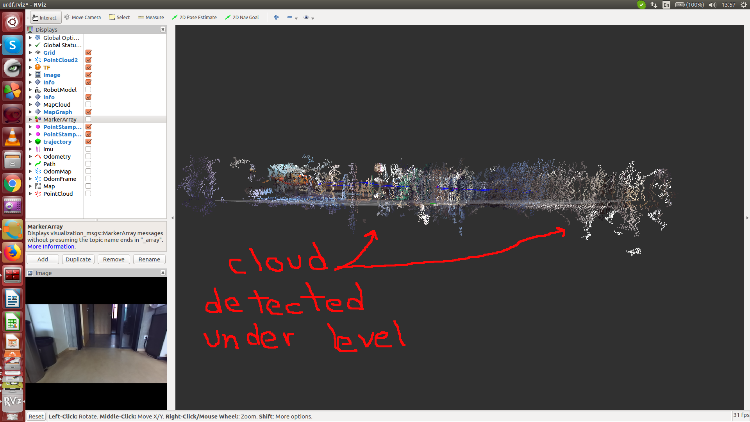

Not sure I have the right database, but beware that when the drone/camera is on the ground, the depth values on the ground seem wrong:

Using Optimizer/GravitySigma=0.3 seems to add more errors than it removes. I think it is because the TF between the mavros IMU and the camera is wrong, or not accurate enough. With Optimizer/GravitySigma=0 (gravity optimization disabled), the map looks a lot better but is not aligned with gravity.

Please copy/paste the launch file between "raw" xml tags instead of an image; I won't re-write it manually to test the bag.

cheers,
Mathieu

|

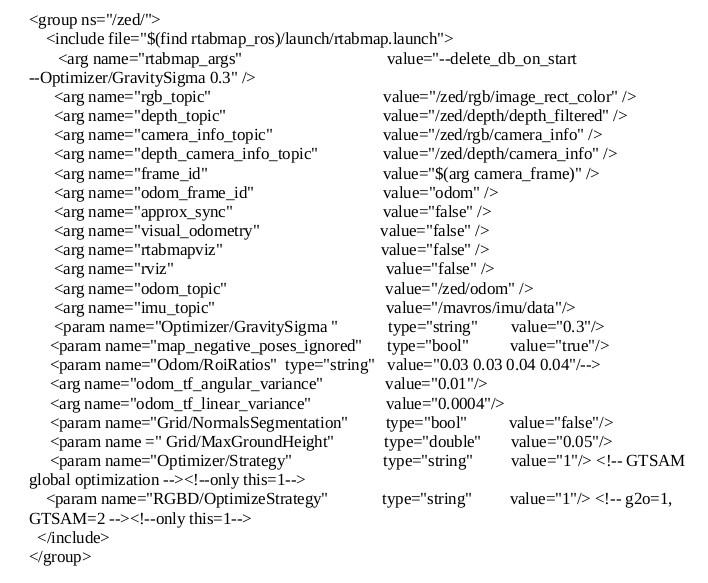

Hi Mathieu

Well, yes, the drone starts on the floor and I do not fly it; I just carry it in my hand. I am sorry to say that I now remember the camera was moved and the map was taken with the old IMU-camera calibration, and I also remember that Optimizer/GravitySigma was probably disabled. I have made a new calibration between the IMU and the camera. I will run a new test and post it here :)

Yes, you are right on that point, sorry for that :). I just put parentheses around (param) because otherwise those lines are not posted, I do not know why.

    <arg name="camera_frame" default="zed_camera_center" />
    <group ns="/zed/">
      <include file="$(find rtabmap_ros)/launch/rtabmap.launch">
        <arg name="rtabmap_args" value="--delete_db_on_start --Optimizer/GravitySigma 0.3" />
        <arg name="rgb_topic" value="/zed/rgb/image_rect_color" />
        <arg name="depth_topic" value="/zed/depth/depth_filtered" />
        <arg name="camera_info_topic" value="/zed/rgb/camera_info" />
        <arg name="depth_camera_info_topic" value="/zed/depth/camera_info" />
        <arg name="frame_id" value="$(arg camera_frame)" />
        <arg name="odom_frame_id" value="odom" />
        <arg name="approx_sync" value="false" />
        <arg name="visual_odometry" value="false" />
        <arg name="rtabmapviz" value="false" />
        <arg name="rviz" value="false" />
        <arg name="odom_topic" value="/zed/odom" />
        <arg name="imu_topic" value="/mavros/imu/data"/>
        <(param) name="Optimizer/GravitySigma " type="string" value="0.3"/>
        <arg name="odom_tf_angular_variance" value="0.01"/>
        <arg name="odom_tf_linear_variance" value="0.0004"/>
        <(param) name="Grid/NormalsSegmentation" type="bool" value="false"/>
        <(param) name =" Grid/MaxGroundHeight" type="double" value="0.05"/>
        <(param) name="Optimizer/Strategy" type="string" value="1"/>
        <(param) name="RGBD/OptimizeStrategy" type="string" value="1"/>
      </include>
    </group>

|

Hi Mathieu

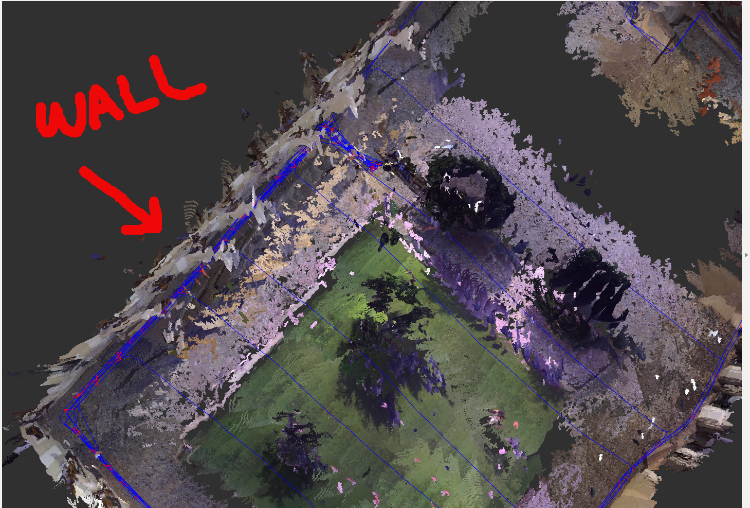

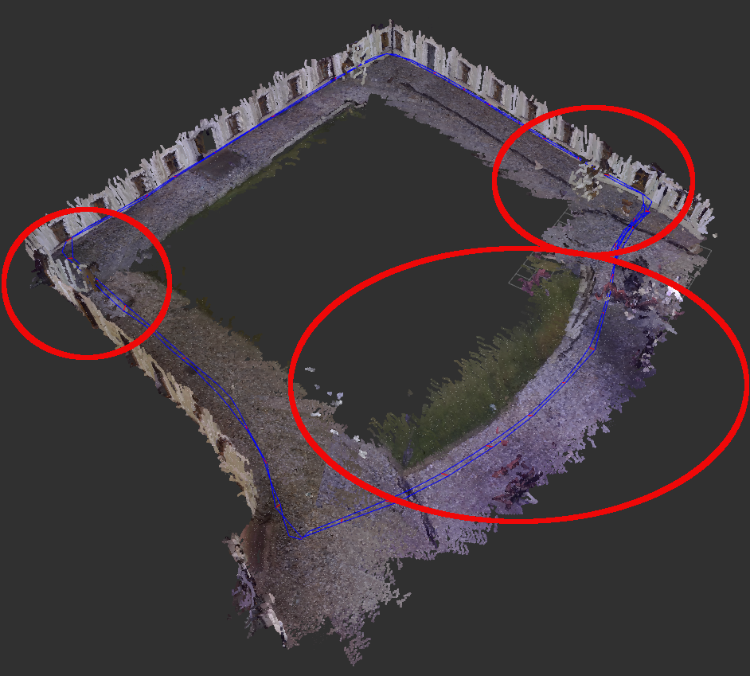

I have calibrated the IMU and the ZED camera. Here are the .bag and .db files made with the new calibration and the rtabmap.launch posted previously:

http://www.fit.vutbr.cz/~plascencia/Yard.db
http://www.fit.vutbr.cz/~plascencia/Half.bag

The problems can be seen within the circles. For instance:

- Multiple walls
- The path gets twisted even with loop closure
- Points are detected under the ground at the initial position
- The tree is detected multiple times

I have a question: is it possible to change parameters and run rtabmap.launch again with the recorded rtabmap.db, to see whether the new parameter value helps or not? If it is possible, how can I do it? Any help is appreciated.

Hi Mathieu, I just recorded a rosbag that can be run with the rtabmap.launch script posted previously:

http://www.fit.vutbr.cz/~plascencia/YardBag.bag

I just disabled the following tags:

    <arg name="odom_tf_angular_variance" value="0.01"/>
    <arg name="odom_tf_linear_variance" value="0.0004"/>

The twisted map disappeared, but I still have the problem of the wall drifting out when the drone is turning, and of points detected under the ground, even though I have the following parameter:

    <(param) name =" Grid/MaxGroundHeight" type="double" value="0.05"/>

As you can see in the picture.

Thank you :)

|

Administrator

|

Hi,

The Yard.db does not seem to be the right one: it is indoor and not moving. The bag is too large to download; the connection is very slow and fails many times. Can you use Google Drive or Dropbox instead?

The easiest way to replay a rtabmap.db is to do it in the standalone version. Launch:

    $ rtabmap Yard.db

Say yes to reuse the same parameters, then click on close database. Click on New, then in Source options, select the database type. Select Yard.db as the input database path in the Source panel in Preferences. Then press start. To change rtabmap parameters, just close/clear the current session, change the parameters, and click start again. The images and odometry contained in the database will be republished.

Try comparing with Optimizer/GravitySigma set or not; the gravity links may be the cause of bad graph optimizations (because the IMU and camera TF is not accurate, or there are some time synchronization issues between them).

For points under the ground, I explained it in my previous post: the disparity algorithm is failing with surfaces too close to the camera.

cheers,
Mathieu

|

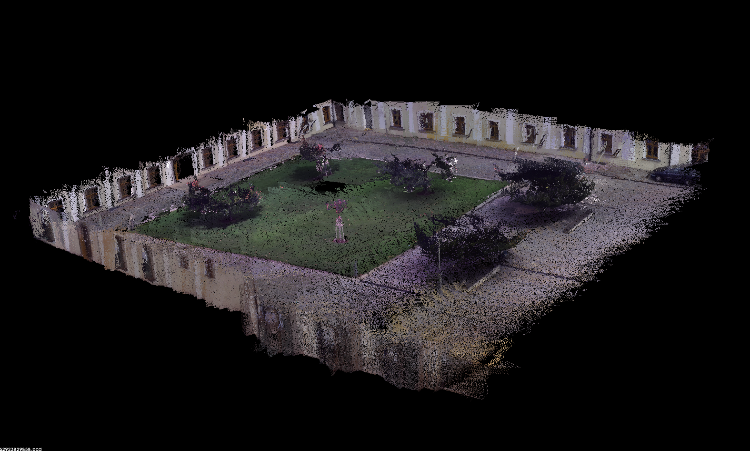

Hello Mathieu

It seems that the Yard.db is corrupted, because I could not make it run, and it is already deleted from the system. I do not have Google Drive or Dropbox; I may sign up to get an account. But for the time being I have uploaded the following .db file to the university server. I have loaded and tested it, and it seems to be OK, as you can see in the picture.

http://www.fit.vutbr.cz/~plascencia/Back.db

I do have some questions:

Did you manage to load the YardBag.bag and run it with rtabmap.launch?

How can I change the rtabmap parameters when I run rtabmap Back.db?

As you can see in the picture, the walls are not quite aligned, as in the previous picture from the previous simulation posted here. Also, when running rtabmap InnerYard.db, I can see that when the camera goes towards the wall, the wall gets twisted.

http://www.fit.vutbr.cz/~plascencia/InnerYard.db

I do not know what is causing this problem. Any help is appreciated. Thank you

|
