scanning parameters

|

Hi,

I try to scan area and I don't get accuracy and robust result. I'm using realsense L515 and the commands: roslaunch realsense2_camera rs_camera.launch align_depth:=true unite_imu_method:="linear_interpolation" enable_gyro:=true enable_accel:=true rosrun imu_filter_madgwick imu_filter_node \ _use_mag:=false \ _publish_tf:=false \ _world_frame:="enu" \ /imu/data_raw:=/camera/imu \ /imu/data:=/rtabmap/imu roslaunch rtabmap_ros rtabmap.launch\ rtabmap_args:="--delete_db_on_start --Optimizer/GravitySigma 0.3" \ depth_topic:=/camera/aligned_depth_to_color/image_raw \ rgb_topic:=/camera/color/image_raw \ camera_info_topic:=/camera/color/camera_info \ approx_sync:=false \ wait_imu_to_init:=true \ imu_topic:=/rtabmap/imu 1. When I am scanning, I almost never have a green screen that show match id (sometimes yellow or red). Is it say, there is not have a good match between pictures? 2. I am moving in x-y-theta and the scanning direction is to Z (like the robot scan the ceiling). There is a parameter like 'Reg/Force3DoF' to set this motion? 3. I tried change some parameters: RGBD/LoopClosureReextractFeatures true, Kp/DetectorStrategy 6, Kp/MaxFeatures 0, Kp/MaxFeatures 1000, RGBD/LinearUpdate 0.01. But there is no a big difference, I still have a bad scanning. How can I change the numbers of features per image? Where can I find a list of parameters that should be changed for a better scan? Can I set the maximum depth? 4. In addition, there is an option to save database, change parameters and see demo of the scanning that use the same database pictures and the new parameters? To see if the updated parameter get a better scanning and we can see green screen and a better match between pictures. Thanks a lot, Orya |

|

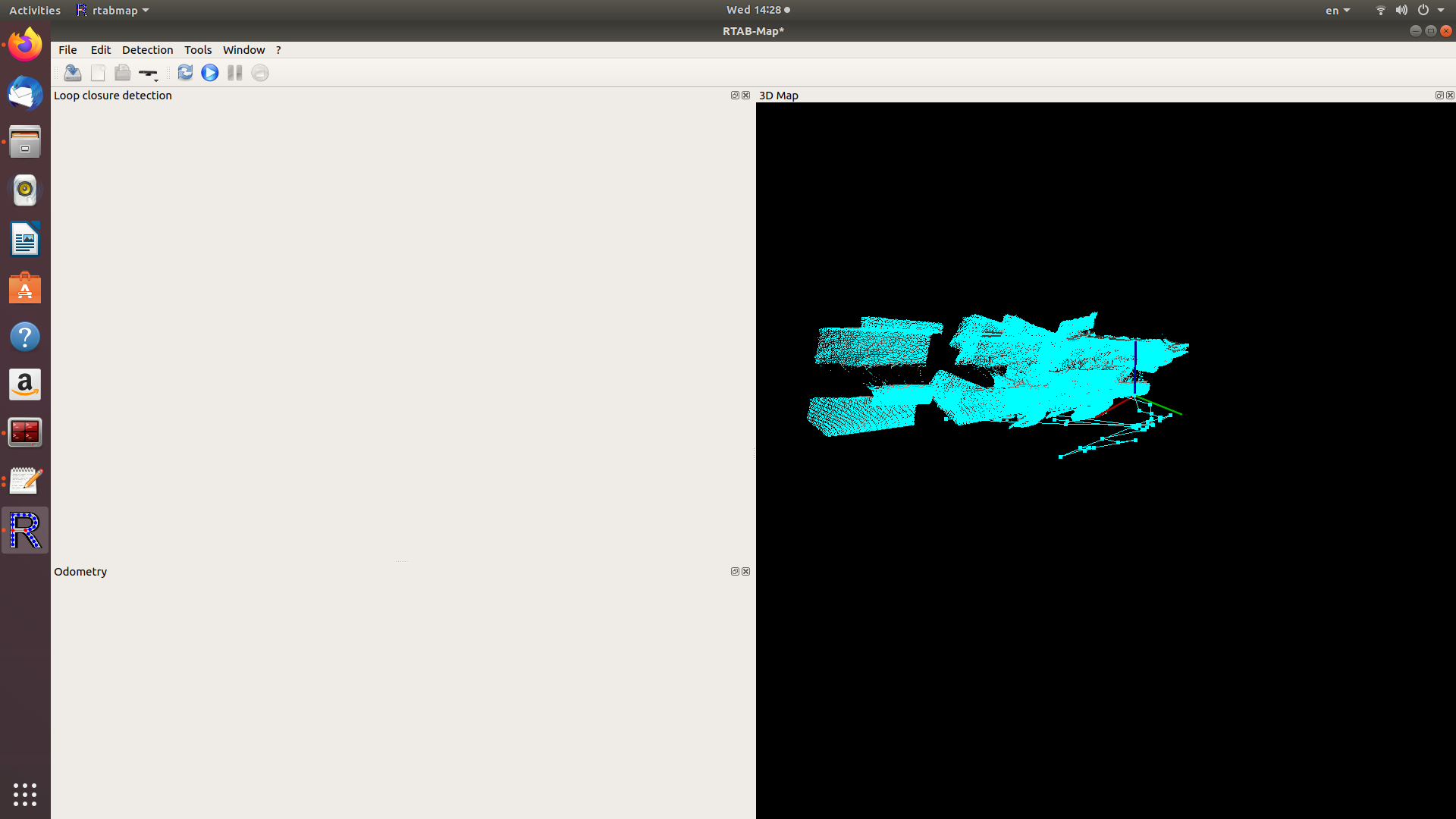

map.png

I'm adding the an example of a database and picture to show the offset I got, even I scan the same area (forward and backward) https://gofile.io/d/cgrhba https://gofile.io/d/cgrhba Thanks a lot, Orya |

|

Administrator

|

This post was updated on .

The database links are not working.

With L515, visual odometry is quite poor because the sync issues between RGB and tof camera, and that the RGB camera has low field of view and has a lot of motion blur when moving. The best scanning I had alone yet with L515 is by using icp_odometry instead. See example here: http://official-rtab-map-forum.206.s1.nabble.com/Kinect-For-Azure-L515-ICP-lighting-invariant-mapping-td7187.html A ros example based on the standalone example would be like this:

roslaunch realsense2_camera rs_camera.launch\

align_depth:=true \

unite_imu_method:="linear_interpolation" \

enable_gyro:=true \

enable_accel:=true

rosrun imu_filter_madgwick imu_filter_node\

_use_mag:=false\

_publish_tf:=false\

_world_frame:="enu"\

/imu/data_raw:=/camera/imu\

/imu/data:=/rtabmap/imu

rosrun nodelet nodelet standalone rtabmap_ros/point_cloud_xyz \

_approx_sync:=false \

/depth/image:=/camera/depth/image_rect_raw \

/depth/camera_info:=/camera/depth/camera_info \

_decimation:=4

roslaunch rtabmap_ros rtabmap.launch\

rtabmap_args:="\

--delete_db_on_start \

--Icp/VoxelSize 0.05 \

--Icp/PointToPlaneRadius 0 \

--Icp/PointToPlaneK 20 \

--Icp/CorrespondenceRatio 0.2 \

--Icp/PMOutlierRatio 0.65 \

--Icp/Epsilon 0.005 \

--Icp/PointToPlaneMinComplexity 0 \

--Odom/ScanKeyFrameThr 0.7 \

--OdomF2M/ScanMaxSize 15000 \

--Optimizer/GravitySigma 0.3 \

--RGBD/ProximityPathMaxNeighbors 1 \

--Reg/Strategy 1" \

icp_odometry:=true \

scan_cloud_topic:=/cloud \

subscribe_scan_cloud:=true \

depth_topic:=/camera/aligned_depth_to_color/image_raw \

rgb_topic:=/camera/color/image_raw \

camera_info_topic:=/camera/color/camera_info \

approx_sync:=false \

wait_imu_to_init:=true \

imu_topic:=/rtabmap/imu

|

|

Hello,

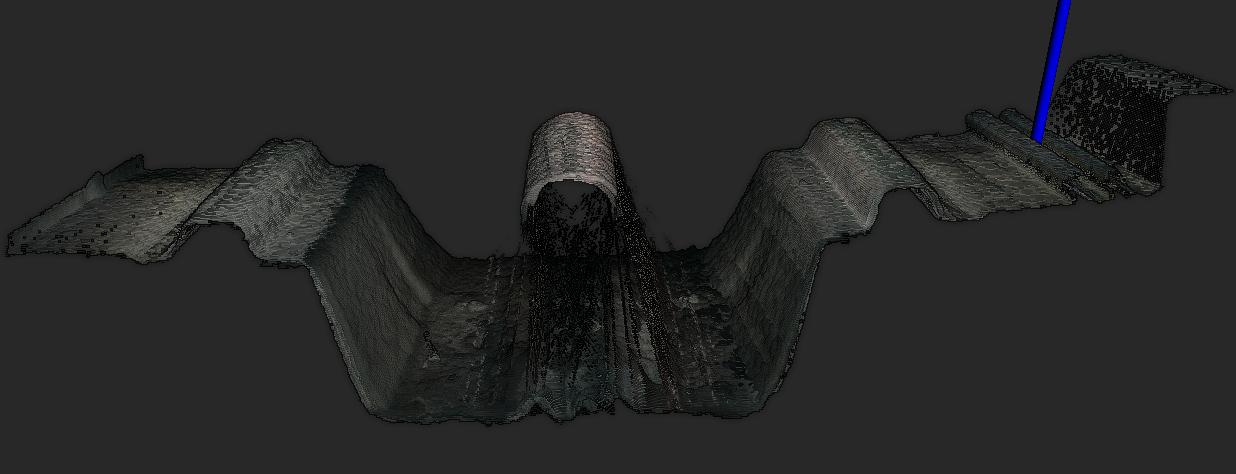

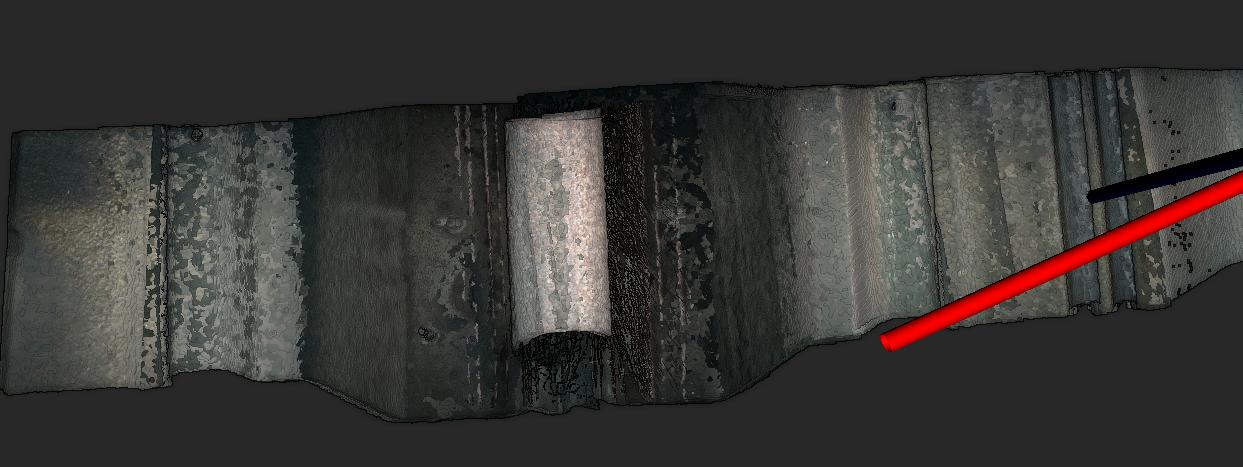

Thank a lot your answer and the example. I try to use the example, but actually, my result even worst. The map I get is almost random, every picture is in another position, even I moved only a little bit. I will note, we are close to the object we scan, which affects the field of view and maybe also the quality of the scan. Link for the current database (I hope it will work): https://www.jumbomail.me/j/T0G3dChPjEqgXC2 Link previous DB: https://www.jumbomail.me/j/20mE4t5jlEKF5Vm the current scan:  previous scan (RGBD):  |

|

Administrator

|

Hi,

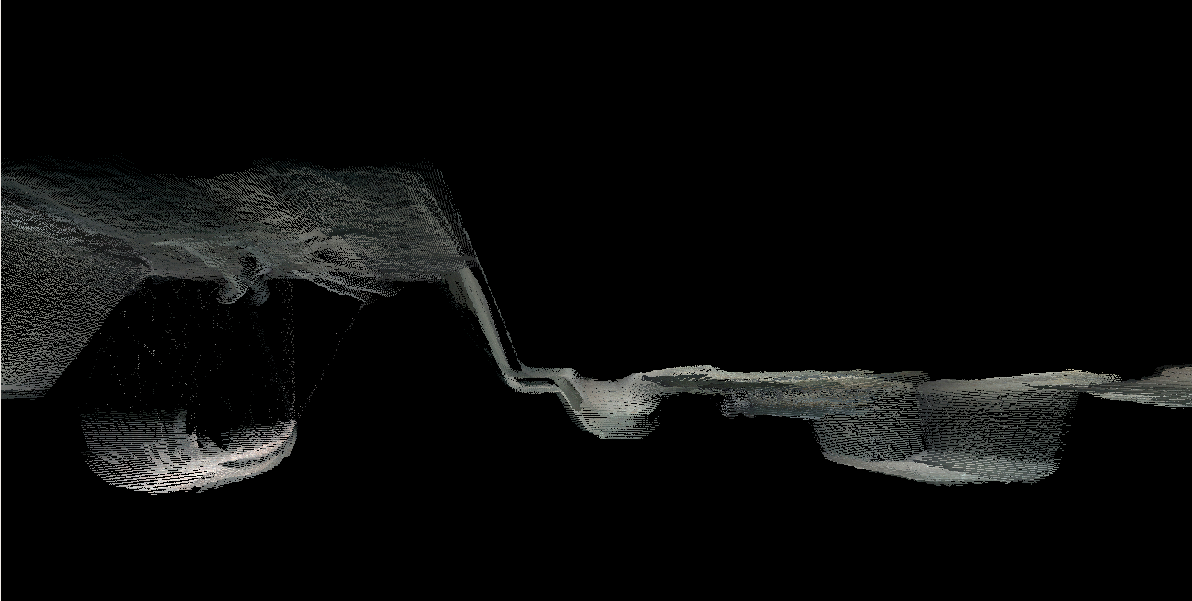

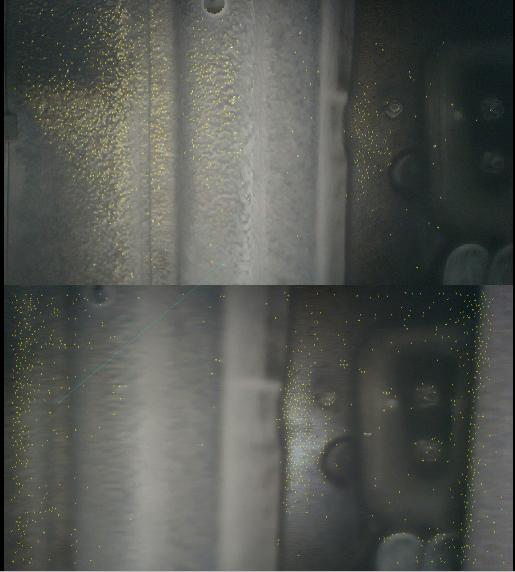

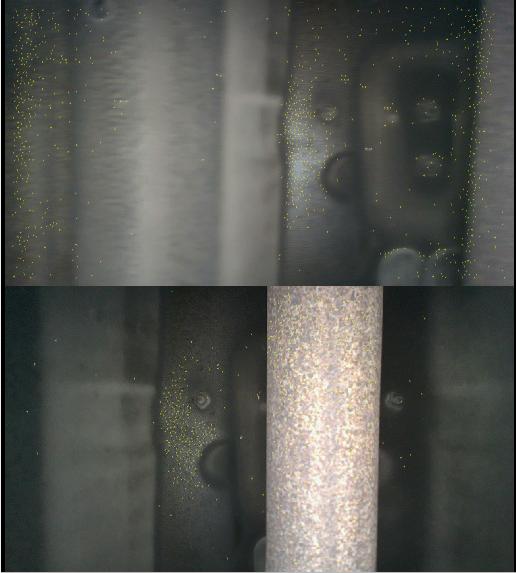

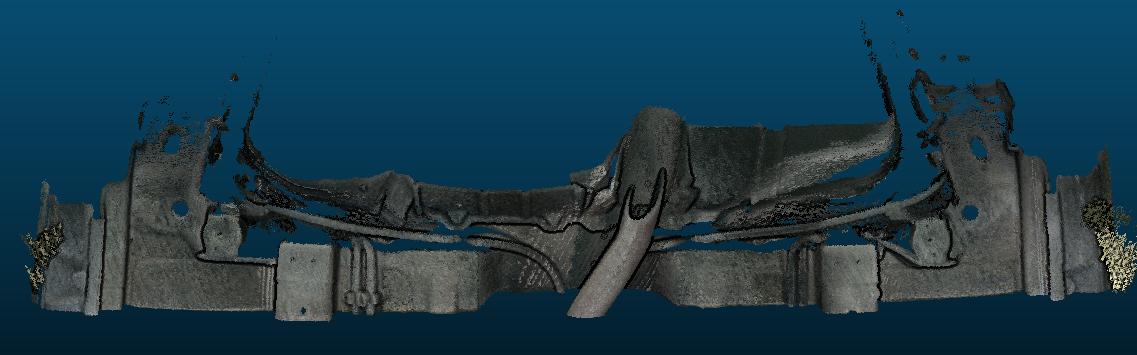

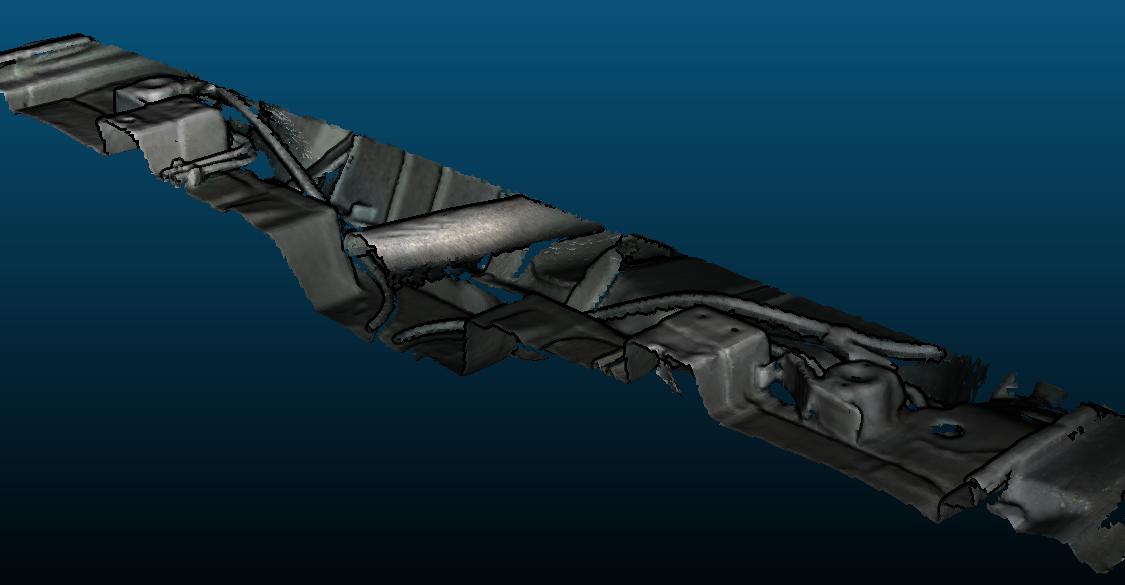

The geometry-only approach cannot work because the geometry is not enough complex (in all 3 axes) to work robustly. At least there are quite a lot of visual features to match between frames, however depending if visual features are located all at the same small spot of the image, rotation estimation can be not that accurate. Using vision seem to work relatively well, until there is motion blur in the camera:  or camera focus is changing:  Mixing vision and ICP with the test_icp.db, this is the best I could do:   To help more, I would need a rosbag of the L515 data. The point cloud has to be filtered for the noisy points on the edge, which make ICP more difficult to do. I think the best approach would be a hybrid approach between vision and ICP (Reg/Strategy=2, or rgbdicp_odometry), but I would need that rosbag to make an example (I don't have one mixing both).

roslaunch realsense2_camera rs_camera.launch\

align_depth:=true \

unite_imu_method:="linear_interpolation" \

enable_gyro:=true \

enable_accel:=true

$ rosbag record /tf_static /tf /camera/imu /camera/depth/image_rect_raw /camera/depth/camera_info /camera/aligned_depth_to_color/image_raw /camera/color/image_raw /camera/color/camera_info

Make sure to move smoothly to avoid motion blur.

cheers, Mathieu |

|

Hi Mathieu,

Thank you so much for your help. Your result looks much better than the result we got, can you share with us the parameters you used? I will record scanning and upload a rosbag file the soon I can. Thanks a lot. Orya |

|

Administrator

|

Hi,

I did it manually with rtabmap-databaseViewer, so I would wait your bag to make it more automated. |

|

Hi,

I adding 3 records: https://www.dropbox.com/s/94k2y91ozssf1sl/record1.bag?dl=0 Faster scanning: https://www.dropbox.com/s/qvnrk413ac4yvr7/record5.bag?dl=0 The camera on an angle: https://www.dropbox.com/s/4vv48be5r6w6ulp/record4.bag?dl=0 thank you very much, Orya |

|

Administrator

|

Hi Orya,

thx for the bags. I was able to scan record1.bag and record4.bag with same parameters. For record5.bag, there is too much motion blur in RGB image. Here is a comparison: record1.bag:  record5.bag (this is where visual odometry got lost):  For record1.bag and record4.bag, here what I used:

$ roslaunch rtabmap_ros rtabmap.launch\

rtabmap_args:="\

--delete_db_on_start \

--Icp/VoxelSize 0 \

--Icp/PointToPlaneRadius 0 \

--Icp/PointToPlaneK 20 \

--Icp/CorrespondenceRatio 0.3 \

--Icp/PMOutlierRatio 0.65 \

--Icp/Epsilon 0.005 \

--Icp/PointToPlaneMinComplexity 0.02 \

--Optimizer/GravitySigma 0.3 \

--RGBD/ProximityPathMaxNeighbors 1 \

--Reg/Strategy 1 \

--RGBD/NeighborLinkRefining true \

--RGBD/LinearUpdate 0.01 \

--Optimizer/GravitySigma 0 \

--Optimizer/OptimizeMaxError 4 \

--Rtabmap/DetectionRate 2 \

--Mem/STMSize 30 " \

scan_cloud_topic:=/cloud \

subscribe_scan_cloud:=true \

depth_topic:=/camera/aligned_depth_to_color/image_raw \

rgb_topic:=/camera/color/image_raw \

camera_info_topic:=/camera/color/camera_info \

approx_sync:=false \

wait_imu_to_init:=true \

imu_topic:=/rtabmap/imu \

use_sim_time:=true

$ rosrun imu_filter_madgwick imu_filter_node\

_use_mag:=false\

_publish_tf:=false\

_world_frame:="enu"\

/imu/data_raw:=/camera/imu\

/imu/data:=/rtabmap/imu

rosrun nodelet nodelet standalone rtabmap_ros/point_cloud_xyz \

_approx_sync:=false \

/depth/image:=/camera/depth/image_rect_raw \

/depth/camera_info:=/camera/depth/camera_info \

_voxel_size:=0.005 \

_decimation:=4 \

_noise_filter_radius:=0.02 \

_noise_filter_min_neighbors:=20

$ rosbag play --clock record1.bag

or

$ rosbag play --clock record4.bag

Notice that I disabled gravity optimization (Optimizer/GravitySigma=0) because for record1.bag, the camera is looking perfectly upwards, which is causing issue with attitude estimation. rtabmap should be built with libpointmatcher support. use_sim_time should be set to false when doing live mapping with the sensor. After mapping, for convenience to export the assembled cloud with noise filtered (note that noise options have been added today):

rtabmap-export --voxel 0.001 --noise_radius 0.005 --noise_k 10 --bin ~/.ros/rtabmap.db Some results:      If you need a mesh: $ rtabmap-export --voxel 0.001 --noise_radius 0.005 --noise_k 10 --bin --texture --poisson_depth 9 --color_radius 0.002 ~/.ros/rtabmap.db  cheers, Mathieu |

|

Hi Mathieu,

Thank you, it look good with those parameters. Sometimes, we still have some stitching issue. Is there an option to see, in the Rtabmap, the dynamically full scan without the blue dots? So we will see when we are getting a bad stitching in the scanning (and not just after). In addition,the picture you sent us here, is a Ply format? Can you tell us which software you use? We does not get a high resolution pictures like your... |

|

Administrator

|

Hi,

For rendering options, open Preferences->3D Rendering. Not sure what you mean by blue dots, the trajectory? If so in 3D Rendering panel we can hide the graph by unchecking "Show graphs". The point clouds were visualized in CloudCompare, but they were exported with the "rtabmap-export" commands in my previous post. cheers, Mathieu |

|

In reply to this post by matlabbe

I tried to use the same parameters to get icp_odometry for L515, but I get 5 secs timeout warnings, like some topic is not synced (becaause they all are published well, I checked). So, I tried approx_sync:=true, but still no effect. And also, how does rtabmap use IMU data (output of Madgwick filter) internally?

|

|

Administrator

|

For a more general L515 icp example, see the one on this page: http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping

The IMU is used to guess orientation between frames, so that we get better visual matches. It is also used as gravity constraints in local bundle adjustment for odometry, and also in graph optimization on rtabmap side. This is a loosely coupled VIO approach. How did you check, make sure to use "rostopic hz" to see the actual rate of all topics in the warning. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |