stereo example

12

12

|

C:\rtabmap\build\bin>C:\rtabmap\build\bin\rtabmap-rgbd_mapping.exe

Using single stereo camera (device=0) Camera initialized successfully Stereo model: Baseline: 0.059000 Left fx: 1169.041067, fy: 1169.041067, cx: 809.218430, cy: 549.442814 Right fx: 1169.041067, fy: 1169.041067, cx: 809.218430, cy: 549.442814 Image size: 1920x1080 Press ESC to quit, 'r' to start/stop recording. [ WARN] (2024-10-18 20:16:12) OdometryF2M.cpp:565::rtabmap::OdometryF2M::computeTransform() Registration failed: "Not enough inliers 10/20 (matches=118) between -1 and 2" [FATAL] (2024-10-18 20:16:12) OptimizerG2O.cpp:1906::rtabmap::OptimizerG2O::optimizeBA() Condition (optimizer.verifyInformationMatrices()) not met! the final calibration %YAML:1.0 --- camera_name: v3_right image_width: 1920 image_height: 1080 camera_matrix: rows: 3 cols: 3 data: [ 1.0432729593104793e+03, 0., 9.6817899140676104e+02, 0., 1.0437151166783851e+03, 5.4787074179925901e+02, 0., 0., 1. ] distortion_coefficients: rows: 1 cols: 5 data: [ 2.2983564924898458e-02, -3.0795060067362240e-02, 2.0109709445381911e-03, 8.3164915158473885e-04, 2.1762285839859938e-03 ] distortion_model: plumb_bob rectification_matrix: rows: 3 cols: 3 data: [ 9.9642747902063711e-01, -1.5890042875408825e-02, 8.2944472932169722e-02, 1.5845339921251176e-02, 9.9987373793833800e-01, 1.1972401579947057e-03, -8.2953024389456537e-02, 1.2132037578631641e-04, 9.9655345116356342e-01 ] projection_matrix: rows: 3 cols: 4 data: [ 1.1690410672309877e+03, 0., 8.0921842956542969e+02, -6.8973422966628263e+01, 0., 1.1690410672309877e+03, 5.4944281387329102e+02, 0., 0., 0., 1., 0. ] local_transform: rows: 3 cols: 4 data: [ 0., 0., 1., 0., -1., 0., 0., 0., 0., -1., 0., 0. ] %YAML:1.0 --- camera_name: v3_left image_width: 1920 image_height: 1080 camera_matrix: rows: 3 cols: 3 data: [ 1.0558953147204809e+03, 0., 9.6861224966146654e+02, 0., 1.0561742576279689e+03, 5.4737703186392207e+02, 0., 0., 1. ] distortion_coefficients: rows: 1 cols: 5 data: [ 3.1549975364296087e-02, -5.4710881303338565e-02, 1.4402077554779872e-03, 1.1984140678372901e-04, 2.5597635329116526e-02 ] distortion_model: plumb_bob rectification_matrix: rows: 3 cols: 3 data: [ 9.9650419731458895e-01, -1.5571413260300928e-02, 8.2078717238293686e-02, 1.5615644220603613e-02, 9.9987806308218730e-01, 1.0306594503182510e-04, -8.2070313694918809e-02, 1.1790063996523145e-03, 9.9662584431366585e-01 ] projection_matrix: rows: 3 cols: 4 data: [ 1.1690410672309877e+03, 0., 8.0921842956542969e+02, 0., 0., 1.1690410672309877e+03, 5.4944281387329102e+02, 0., 0., 0., 1., 0. ] local_transform: rows: 3 cols: 4 data: [ 0., 0., 1., 0., -1., 0., 0., 0., 0., -1., 0., 0. ] %YAML:1.0 --- camera_name: v3 rotation_matrix: rows: 3 cols: 3 data: [ 9.9999958108800902e-01, 2.2982158297996056e-04, -8.8600555664321626e-04, -2.3077866499832288e-04, 9.9999938987836812e-01, -1.0802703824882582e-03, 8.8575674662271832e-04, 1.0804744011295782e-03, 9.9999902400455087e-01 ] translation_matrix: rows: 3 cols: 1 data: [ -5.8789221262217591e-02, 9.3751252964912582e-04, -4.8937239029980427e-03 ] essential_matrix: rows: 3 cols: 3 data: [ -2.6333012605341845e-07, 4.3113965386387771e-03, 8.2112573241551715e-04, -4.2646380487953309e-03, 5.4959479093007765e-05, 5.1786695204754693e-02, -8.1383231074367668e-04, -5.1783084800446848e-02, 5.6671210309109435e-05 ] fundamental_matrix: rows: 3 cols: 3 data: [ 8.8283363112214513e-10, -1.4450456780826143e-05, 5.0022352146395412e-03, 1.4291456005740164e-05, -1.8412899888568561e-07, -1.9698760866374873e-01, -4.9842252585007131e-03, 1.9516274776158657e-01, 1. ] |

|

This post was updated on .

In reply to this post by matlabbe

it works now but i want to know the warning i get when i do calibration is it bad or what ?

and how can i control the StereoBM or StereoSGBM ? <----- very important i guess from Parameters.h but i am not sure if what i see is just StereoBM or StereoSGBM there must be octomap or something , i just want to improve the point cloud so about the header and cpp which i updated i confirm they work so you can add them to the next update  mention my name in github , and for some reason i cant open github the ISP ( internet company ) i think they have a problem , i am nesquik011 at github mention my name in github , and for some reason i cant open github the ISP ( internet company ) i think they have a problem , i am nesquik011 at github

and about the GUI when we do calibration add a button start and stop so once i am in it will not going to automatically start playing so i can have a chance to change the numbers without closing and opening window again |

|

Administrator

|

This error may be caused by a compilation issue (see also this post) with Eigen:

[FATAL] (2024-10-18 20:16:12) OptimizerG2O.cpp:1906::rtabmap::OptimizerG2O::optimizeBA() Condition (optimizer.verifyInformationMatrices()) not met! You may also set Odom/ImageDecimation=2 for odometry because the default parameters may not work well with 1080p resolution. You can switch between StereoBM and StereoSGBM with parameter Stereo/DenseStrategy=0 or 1 respectively. OctoMap is not required if you just want to see a point cloud. For the requested code update, is it mostly just the FOURCC('M', 'J', 'P', 'G') line to add? For the calibration GUI, having controls of the camera could be indeed useful. I created a ticket about that. cheers, Mathieu |

|

This post was updated on .

thats a lot :) i will test and see if i got other problems or fixes i will update you right away

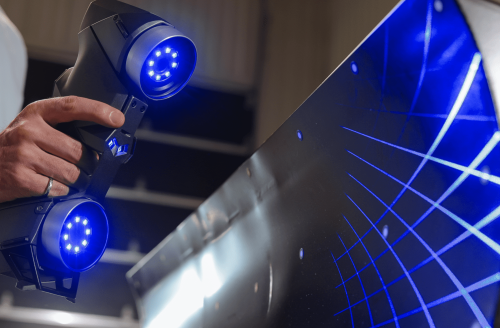

you said OctoMap is not required if you just want to see a point cloud , i thought octomap turn the point cloud to voxels then icp handle the merge , so i can increase the resolution of it how to open odometry window like the GUI with the red and green dots ? i want to ask something not related the what we were talking about , i wonder if there laser projection pattern algo to make better point cloud ? or binary code single shot ? in ( RTAB map ) is there a discord channel for rtab map ? if nt , may i get urs if you got one ? its ok if you dont want to share it i can stick with the forum no hard feelings  i am working on that , i wrote python code from long time does something like that but i did not test it yet on last thing , dont forget to update the ELP part in the next update

|

|

In reply to this post by matlabbe

about the update here is the full header i added as well the .cpp

i added few options like public const StereoCameraModel& getStereoModel() const; void setResolution(int width, int height) { _width = width; _height = height; } void setFPS(double fps) { _fps = fps; } void setFOURCC(int fourcc) { _fourcc = fourcc; } private double _fps; int _fourcc; here is the header https://pastebin.com/gKMAP4Vq about the CameraStereoVideo.cpp added the elp part , and const return stereomodel so i can print the fx fy cx cy etc this part i added early right after namespace here is the code https://pastebin.com/CPgpMvFU i forgot to answer on the update question , i was excited

copy the codes because they may expire after 2 weeks |

|

Administrator

|

Hi,

here is the related pull request: https://github.com/introlab/rtabmap/pull/1366 OctoMap is not used by ICP, it provides a 3D occupancy grid for planning and/or obstacle avoidance. When using ICP, there is another parameter called Icp/VoxelSize that can be applied to input point clouds before doing ICP. With stereo cameras, you are likely not using ICP (I don't recommend it). ICP would be used only with TOF cameras or LiDAR. Do you mean " how to do it in your cpp code"? You may look at how this example works. Structured light sensor like original Kinect for xbox 360 can indeed improve depth quality over pure stereo. Other option is a semi-random IR dotted pattern to improve depth estimation with standard stereo disparity algorithms (like realsense cameras). No, I cannot give real-time advices, otherwise I would have no life :) Regards, Mathieu |

|

This post was updated on .

hello , long time no talk

i have a theory i want to test , i will train a model so what would be the easiest to go with Rtab map , i want to use it to replace stereo matching 1-TensorFlow light 2-PyTorch 3-Darknet (YOLO) 4- other suggestions or should i go with Matlab ? its more robust than opencv in detecting the pattern i want to use Note : i will still go with opencv after making the detection if you want me to open another post just say i wont mind |

|

Administrator

|

To replace the stereo matching approach (I assume you refer to dense stereo matching), if you use python code and ROS, the easiest way would be to do it upstream (externally of rtabmap node) and republish your disparity image as a depth image that could be fed to rtabmap. Just make sure to republish with same stamp than the input stereo images used to estimate it so that rtabmap can correctly synchronize it with the left or right stereo image. Based on your previous posts, you were not using ROS, but directly the c++ API. If the neural network could be used from c++ code, that would be the easiest. cheers, Mathieu |

|

This post was updated on .

Hello Mathieu,

Long time no talk! Could you suggest an IMU sensor that’s easy to use without much hassle? By the way, I’m waiting for some equipment to arrive—getting ready to create the ultimate point cloud ever!  i will go mono camera ( industrial one ) anyway the imu do i need 6 or 9 degrees freedom ? and take a look at adafruit imu pick one for me if you dont mind , and if you have better ones give me the name , i am thinking about Adafruit BNO055 it has internal sensor fusion or do i need raw data ? i will go mono camera ( industrial one ) anyway the imu do i need 6 or 9 degrees freedom ? and take a look at adafruit imu pick one for me if you dont mind , and if you have better ones give me the name , i am thinking about Adafruit BNO055 it has internal sensor fusion or do i need raw data ?

|

|

Administrator

|

Hi,

Any IMU should work, unless you get a bad one that is super noisy. 6 deg of freedom is generally enough for indoor use. For outdoor use, having 9 deg may help to remove yaw drift (though beware that magnetometers can be sensible to many external sources, that is why indoor we generally don't use it). If the IMU has already computing the quaternion, then you can use it directly. If the IMU doesn't provide the quaternion (only raw angular velocity and linear acceleration), you can use ROS packages like complementary filter or Madgwick filter to compute it. RTAB-Map requires the quaternion to be already computed. cheers, Mathieu |

|

hello Mathieu

If I use monocular (mono) mode , I understand the scale won’t reflect real-world measurements. But if I add an IMU to mono mode, will it provide real-world measurements for distances and camera poses ? not talking about the point cloud because i will have my own way to get the point cloud , so correct me because i am worried i wont get real world measurements |

|

Administrator

|

Monocular-only SLAM has a scale drift issue, that's why we don't support it. If you add an IMU, it can be used to resolve the scale (see visual inertial odometry, VIO), for the pose but also the tracked features. Good examples are ARKit or ARCore on your phone, they both use monocular + IMU (VIO) to track the pose in real scale and also for the tracked features. For example, with RTAB-Map iOS or android, we can use RTAB-Map to create a map even if there is no depth camera or lidar. The accuracy of the point cloud is obsviously not as good than with a TOF camera or Lidar, but it may give a rough idea of the area (it depends how accurate you want the point cloud). At the end of this video (~8:43), we show an example just with monocular+IMU.

|

|

do you mean the mono + imu only supported on android or ios version , and i cant use it on pc build ? , and i am not worried about the point cloud because i will implant SLS (Structured Light System) to rtab map , the point cloud would be way more accurate than stereo or TOF , and i dont want to treat it as RGBD it would complicate things , the plan is using mono + IMU once i get valid pose the SLS will capture a point cloud in less than 30 ms , and so on

|

|

Administrator

|

The mono+IMU is supported on the back-end (rtabmap node), not in visual odometry nodes of rtabmap_ros. On Android or iOS, the front-end is computed by ARCOre or ARKit, then we feed to odometry pose + tracked features to rtabmap's back-end for loop closure detection and graph optimization. On PC, that means you will need to find a library (e.g., visual initial odometry VIO approach) doing the same than ARCore or ARKit, in order to provide the odometry pose + tracked 2D/3D features to rtabmap. |

|

Could you provide an example or point me to documentation on how to feed VIO output (poses + features) from ORB-SLAM3 to RTAB-Map's back-end? What's the expected data format and ROS topics? , i really dont know how to start or what to do , but i have orb slam 3 as a .lib , i only work on windows for now

|

|

Administrator

|

You have two choices: 1) Build RTAB-Map with ORB_SLAM3 as dependency, then you can re-use VSLAM (ROS1 or ROS2) examples from rtabmap_ros, but adding "Odometry/Strategy: 5" parameter to use ORB_SLAM3 internally for stereo_odometry or rgbd_odometry nodes. RTAB-Map's wrapper will handle appropriately TF and camera calibration and initialize ORB_SLAM3 accordingly. Caveats: 1.a) I never tried to build ORB_SLAM3 on Windows with RTAB-Map, it may not compile. 1.b) Only ORB_SLAM3 VO is supported with the current integration, not VIO. 2) Run ORB_SLAM3 node (in ROS or ROS2) independently of RTAB-Map. You would only need to forward odometry topic generated by ORB_SLAM3 (or its TF, by using odom_frame_id parameter) to rtabmap node. Caveats: 2.a) If base_link is not the same frame than the camera frame, then it will give a not standard TF tree. 2.b) I don't know if it is possible with a parameter (or a hard-coded change is needed), but loop closure detection should be disabled in ORB_SLAM3 to work with rtabmap. cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |