Re: Combine 3D Lidar and RGB-D Camera to create a single 3D point cloud (sensor fusion)

Posted by matlabbe on

URL: http://official-rtab-map-forum.206.s1.nabble.com/Combine-3D-Lidar-and-RGB-D-Camera-to-create-a-single-3D-point-cloud-sensor-fusion-tp10162p10471.html

Hi Alex,

When using Grid/Sensor=2 (with Grid/RangeMax=0, Grid/ClusterRadius=1 and Grid/DepthDecimation=1), as soon as the points seen by the camera exit the field of view, the lidar will override the points over time.

It is not currently possible to do what you want just by changing some parameters. A workaround would be to use Grid/Sensor=0 in rtabmap node, and enabling gen_depth if you are using a single RGB camera, then use map_assembler node to generate the map cloud from the camera. That way you will have a RGB cloud and a lidar cloud of the map. With an external node, you could subscribe to these 2 clouds, then merge them so that lidar points close to RGB points are filtered (using something like this cloud subtraction function). You will end up with a unique point cloud with RGB and lidar points not overlapping.

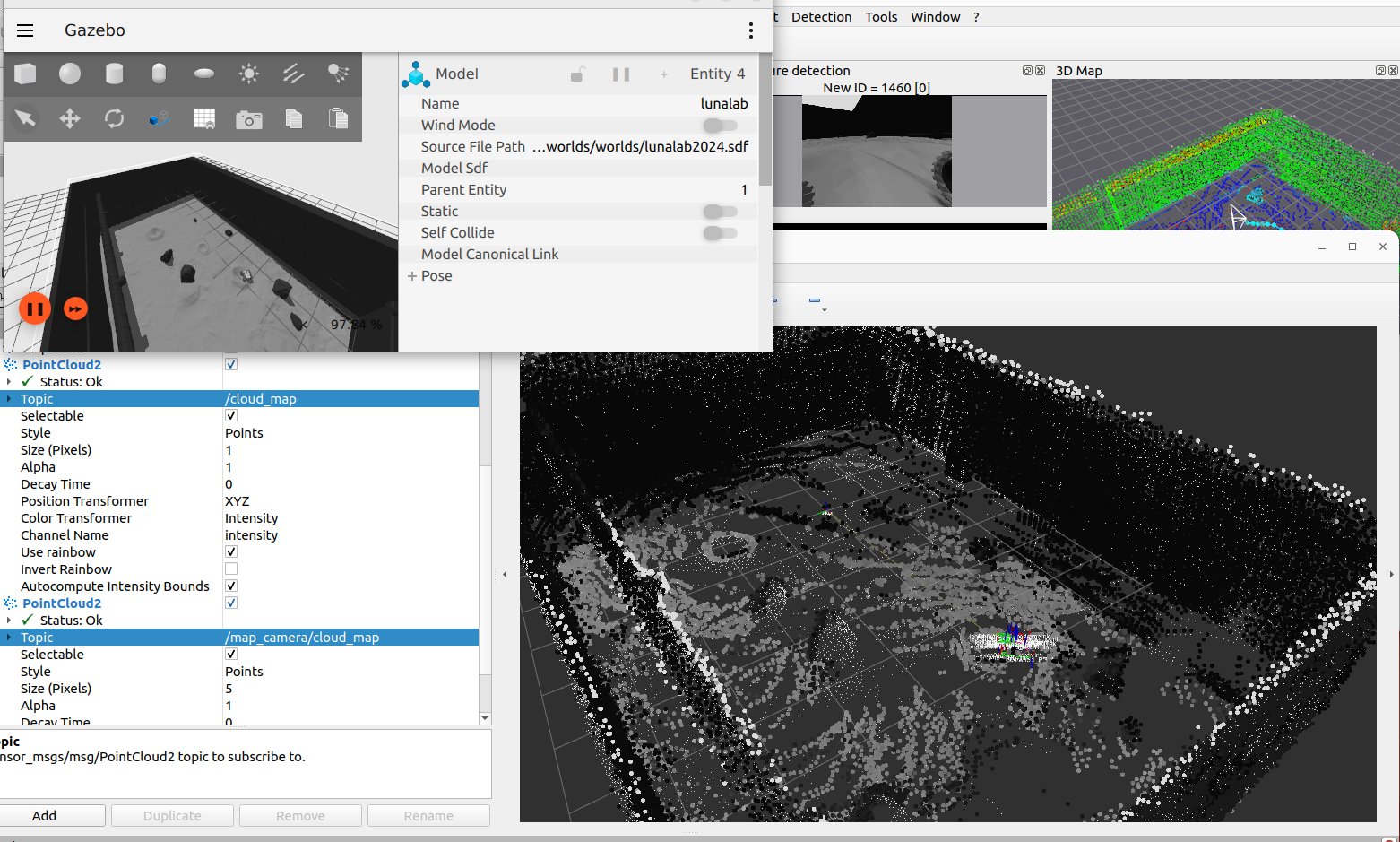

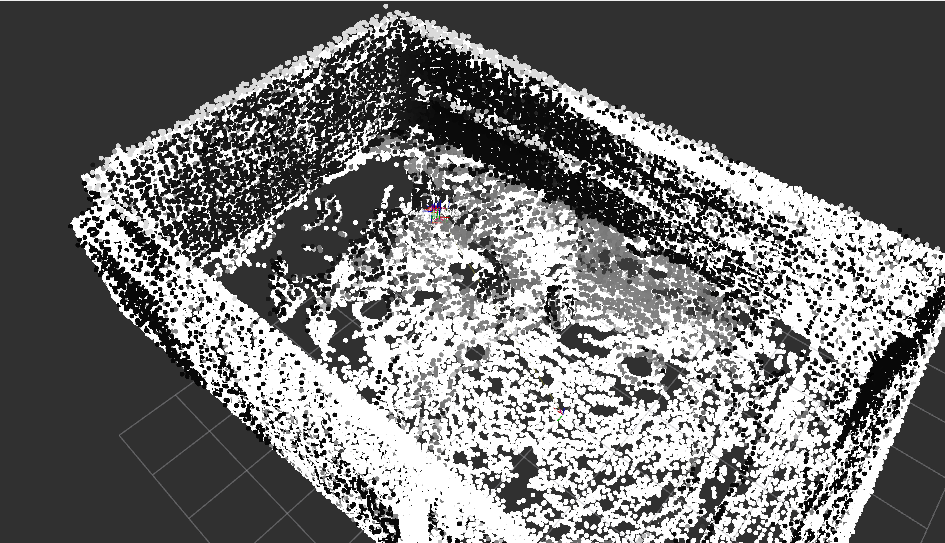

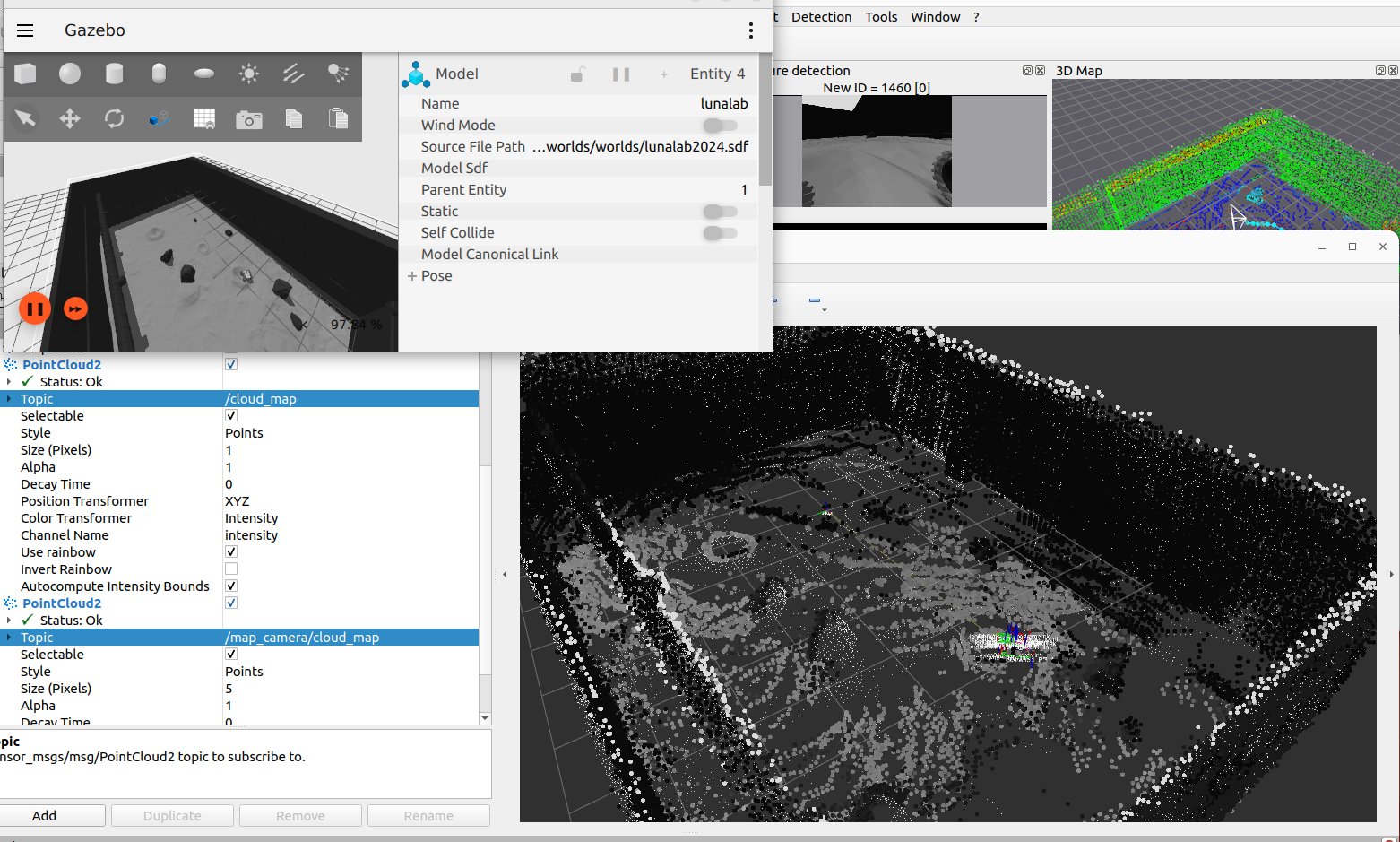

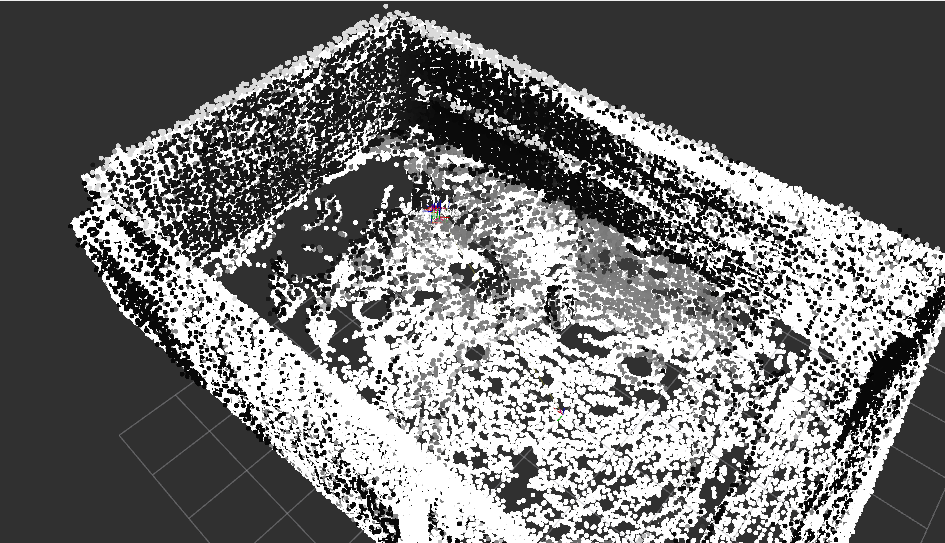

Or make the lidar points look small and RGB points look bigger in rviz, like this:

Here is the command I used to spawn a map_assembler after launching your launch file:

UPDATE: well I think there is another option not involving any other nodes. You can enable "cloud_subtract_filtering" parameter for rtabmap node. However, some RGB points may be not added if some lidar points were added first at some place.

Regards,

Mathieu

URL: http://official-rtab-map-forum.206.s1.nabble.com/Combine-3D-Lidar-and-RGB-D-Camera-to-create-a-single-3D-point-cloud-sensor-fusion-tp10162p10471.html

Hi Alex,

When using Grid/Sensor=2 (with Grid/RangeMax=0, Grid/ClusterRadius=1 and Grid/DepthDecimation=1), as soon as the points seen by the camera exit the field of view, the lidar will override the points over time.

It is not currently possible to do what you want just by changing some parameters. A workaround would be to use Grid/Sensor=0 in rtabmap node, and enabling gen_depth if you are using a single RGB camera, then use map_assembler node to generate the map cloud from the camera. That way you will have a RGB cloud and a lidar cloud of the map. With an external node, you could subscribe to these 2 clouds, then merge them so that lidar points close to RGB points are filtered (using something like this cloud subtraction function). You will end up with a unique point cloud with RGB and lidar points not overlapping.

Or make the lidar points look small and RGB points look bigger in rviz, like this:

Here is the command I used to spawn a map_assembler after launching your launch file:

ros2 run rtabmap_util map_assembler --ros-args \ -p Grid/Sensor:="'1'" \ -p Grid/RangeMax:="'0'" \ -p regenerate_local_grids:=true \ -r __ns:=/map_camera \ -p rtabmap:=/rtabmap \ -r mapData:=/mapData \ -p use_sim_time:=true \ -p Grid/ClusterRadius:="'1'" \ -p Grid/DepthDecimation:="'1'"Note that map_assembler has been ported to ros2 recently, and available only if rtabmap_util is rebuilt from source.

UPDATE: well I think there is another option not involving any other nodes. You can enable "cloud_subtract_filtering" parameter for rtabmap node. However, some RGB points may be not added if some lidar points were added first at some place.

Regards,

Mathieu

| Free forum by Nabble | Edit this page |