Re: Global Optimizing with Loop Closure and Navigation After Mapping.

Posted by matlabbe on

URL: http://official-rtab-map-forum.206.s1.nabble.com/Global-Optimizing-with-Loop-Closure-and-Navigation-After-Mapping-tp11061p11205.html

Hi,

From your video, I see a small vertical shift here:

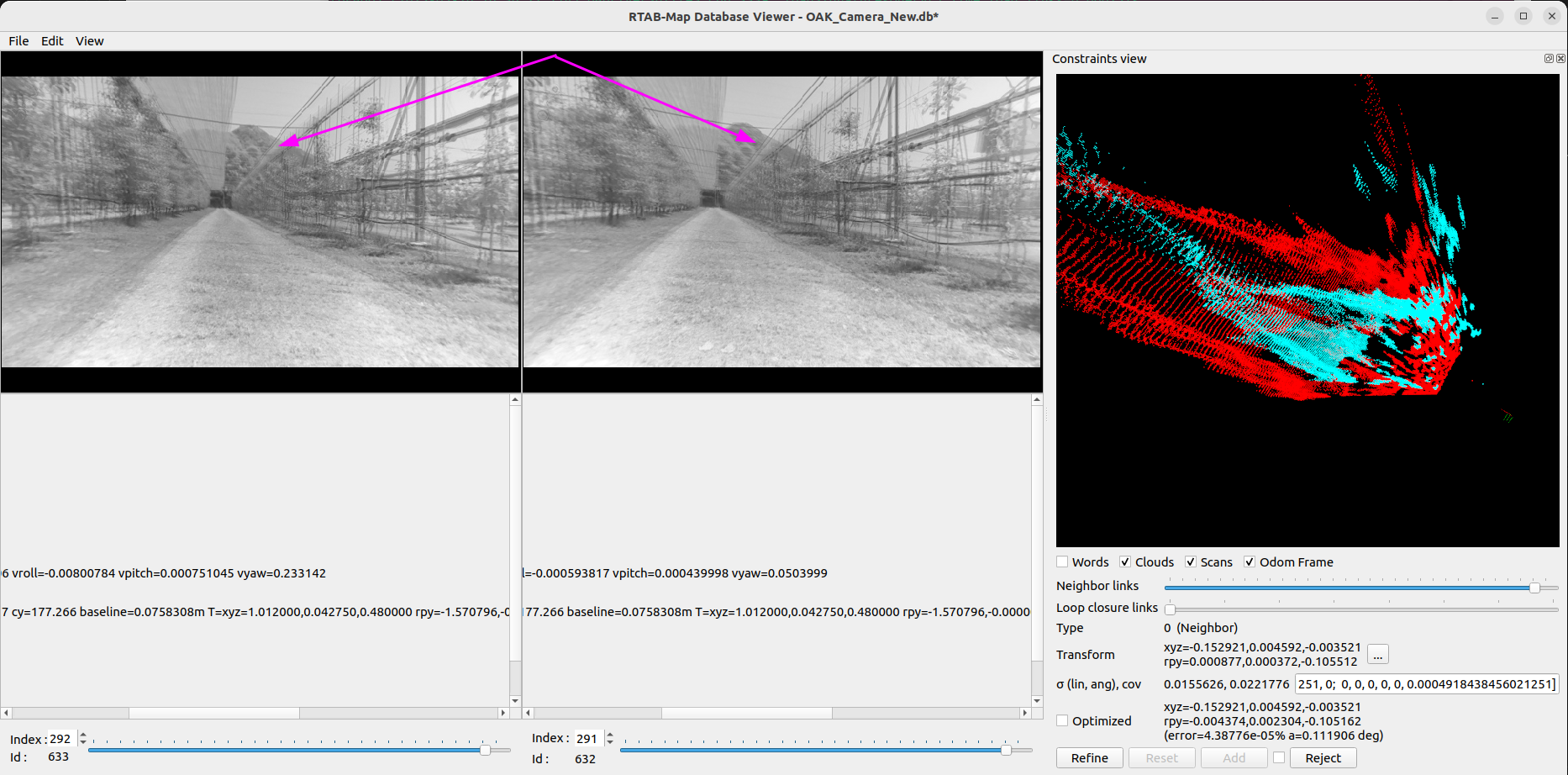

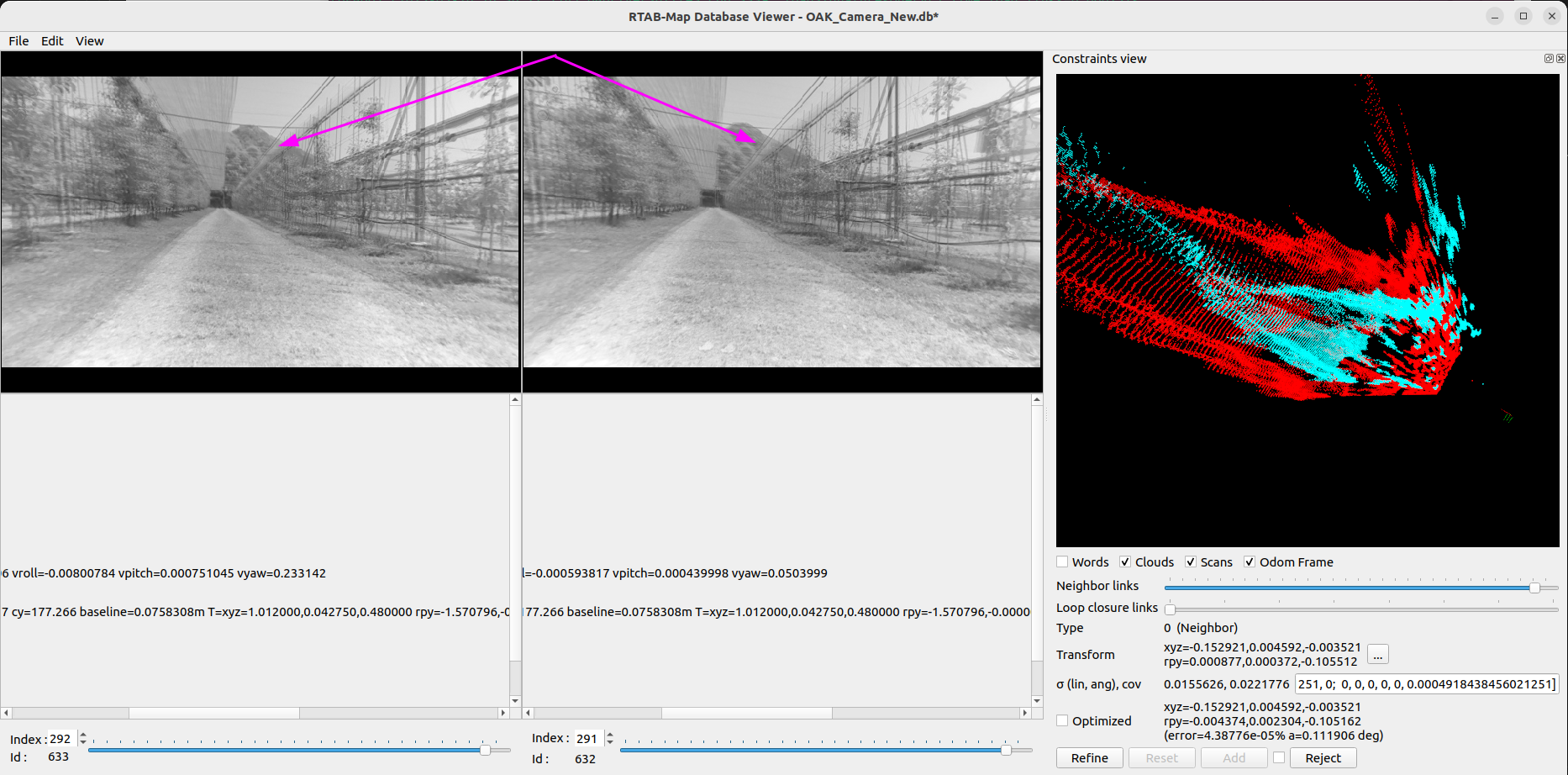

I looked into the OAK database, and I can still see some time sync issues. Even though the driver reports the same stamp for both images, the cameras don't seem to actually capture at exactly the same time. If you record the left and right images in a rosbag while doing a very fast rotation, it may be more obvious. Here is an example of two consecutive images where the point cloud scale appears different in the Constraints View:

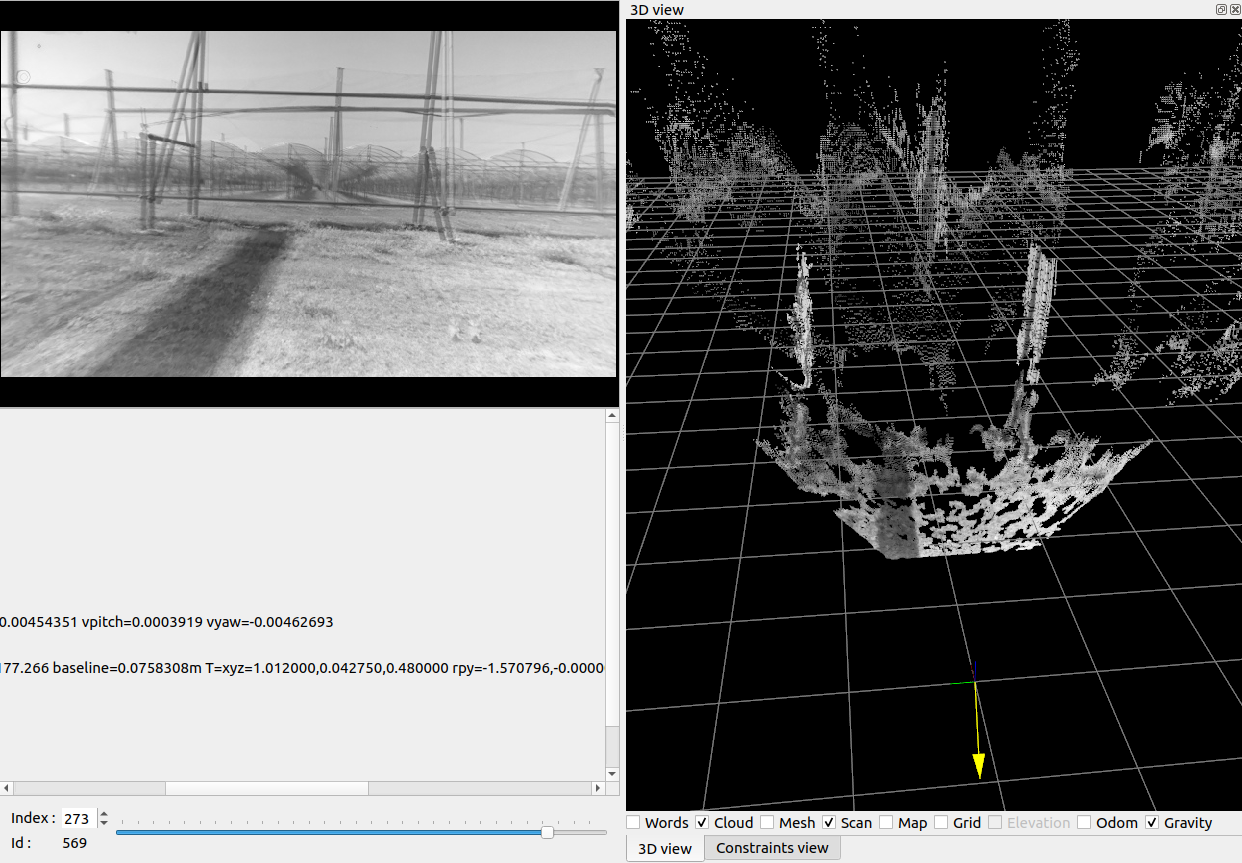

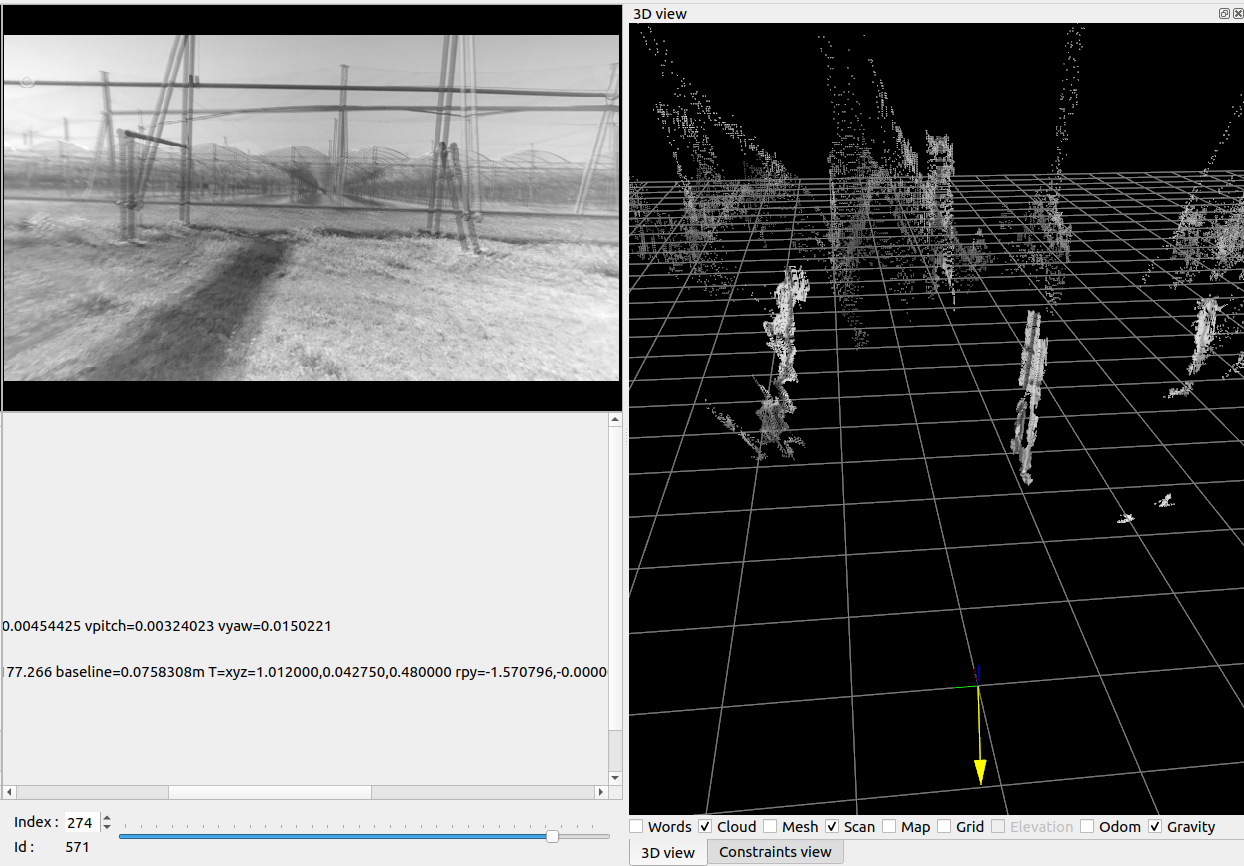

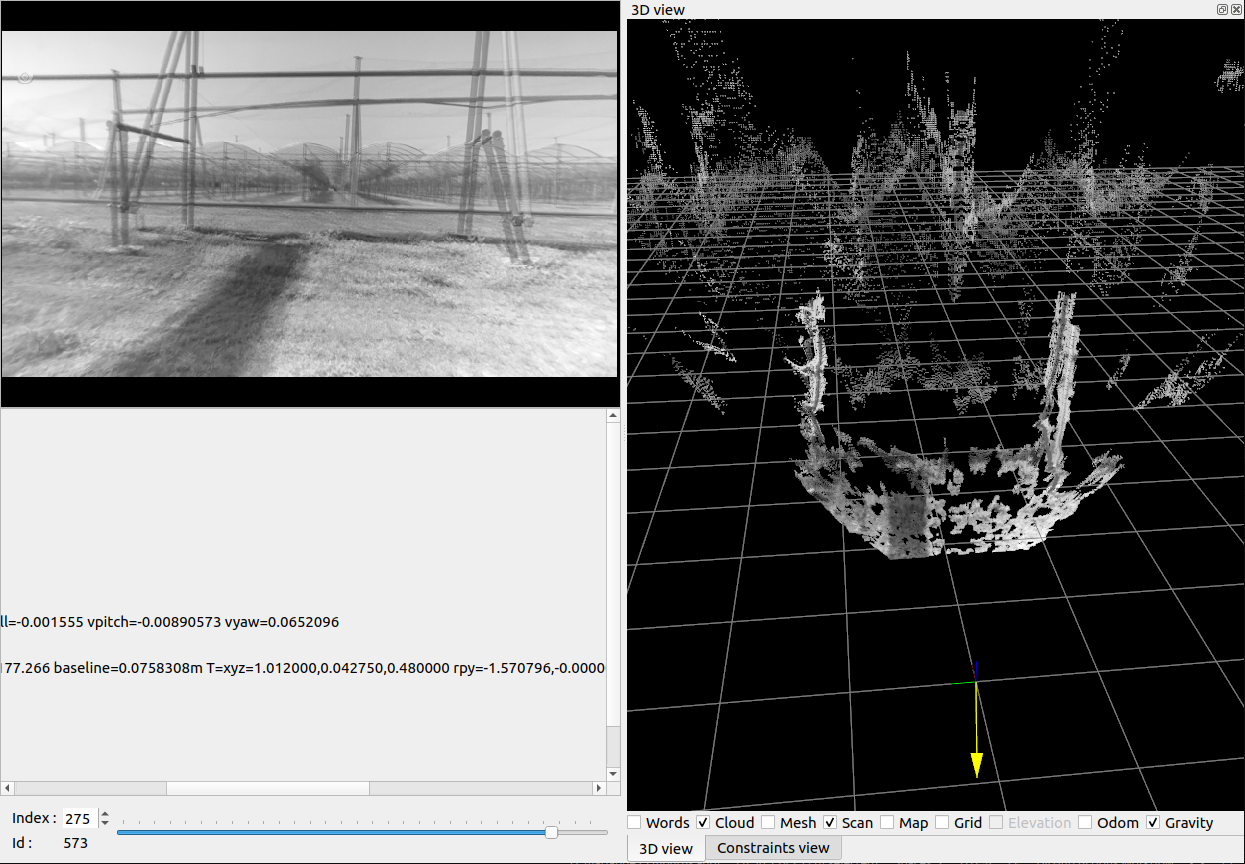

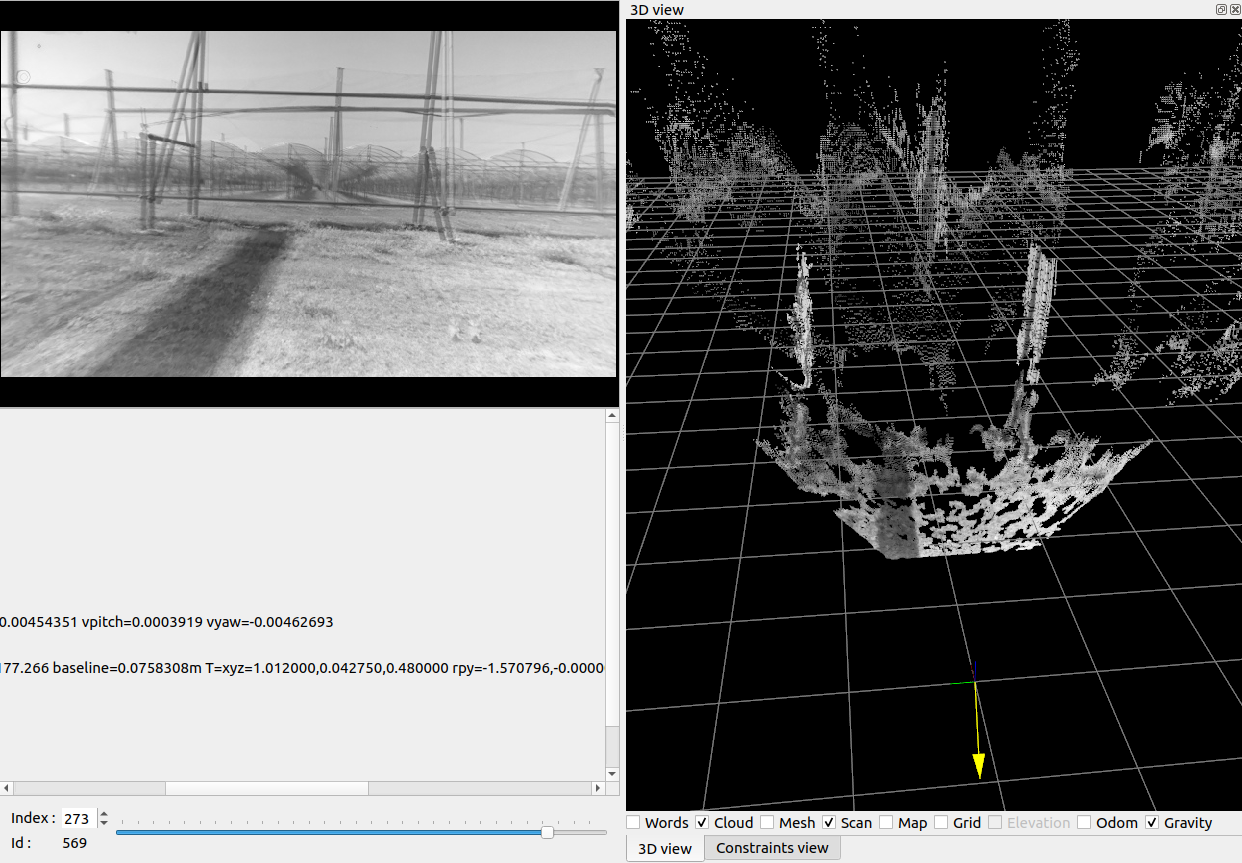

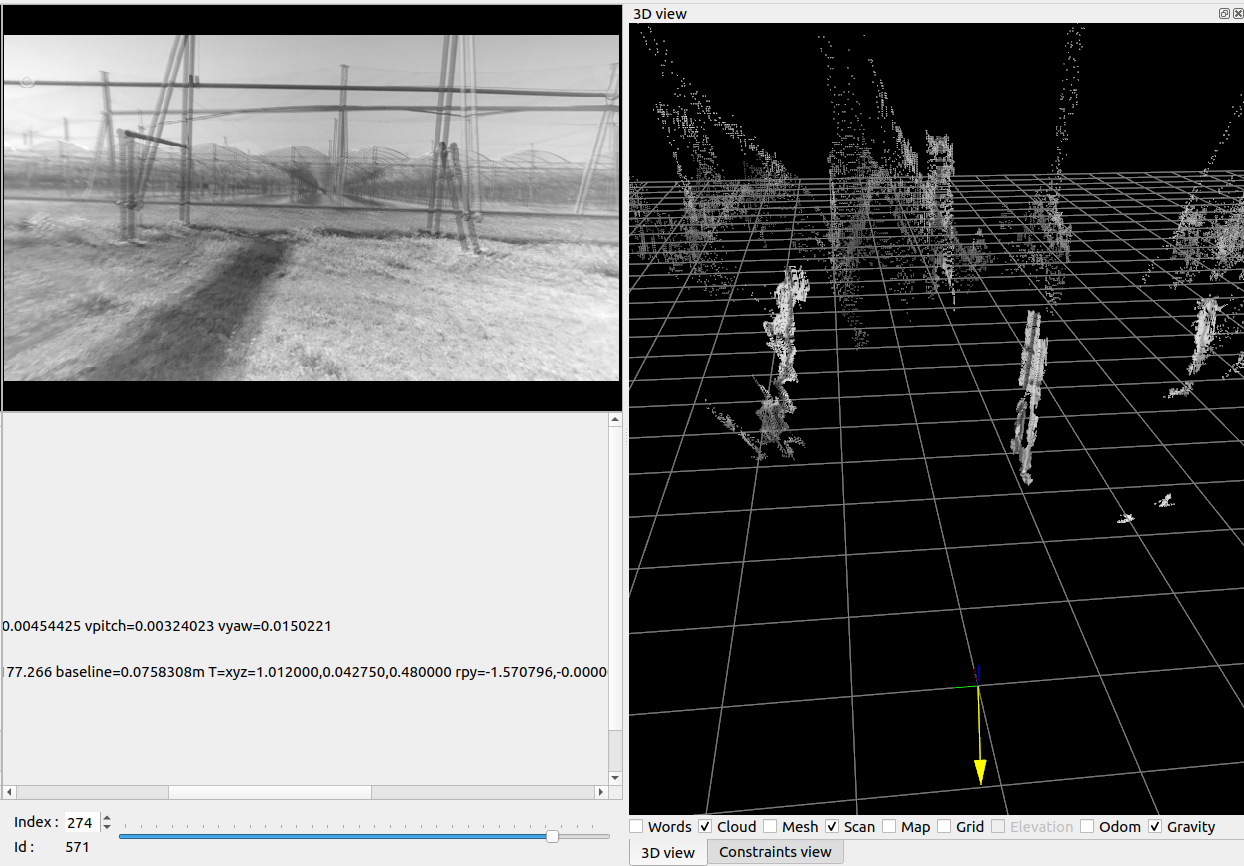

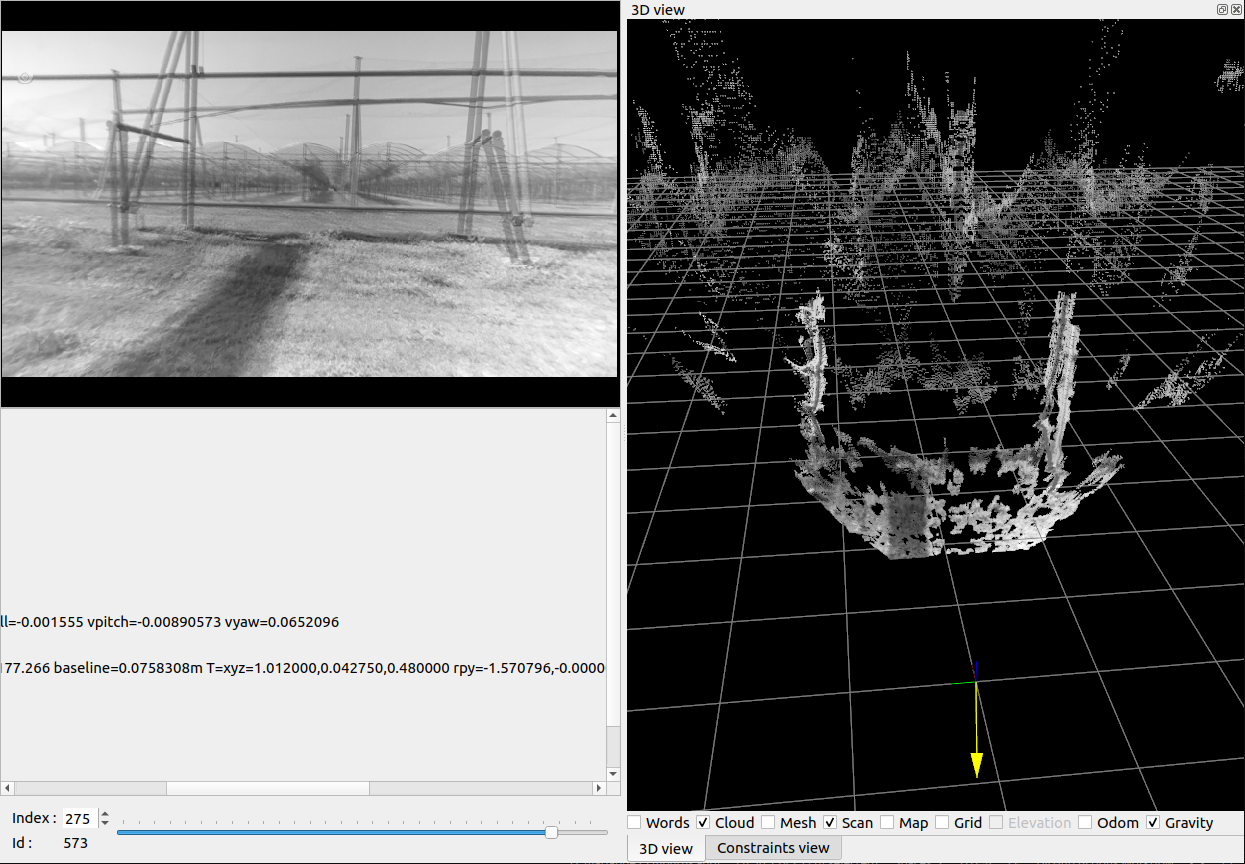
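A small timing offset is enough to change the apparent depth. The sketch below uses standard rectified stereo geometry (Z = f·B/d) and a small-angle approximation: if the second image is captured a few milliseconds late while the camera yaws, every point gets an extra horizontal shift of roughly f·ω·Δt pixels, which is added to (or subtracted from) the true disparity. The function names, the sign convention, and all numbers (focal length, baseline, rotation speed, delay) are hypothetical and only illustrate the effect; they are not measured from the OAK database.

```python
import math


def depth_from_disparity(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return f_px * baseline_m / disparity_px


def desynced_depth(f_px: float, baseline_m: float, true_depth_m: float,
                   yaw_rate_rad_s: float, delay_s: float) -> float:
    """Depth measured when the second image of the pair is captured delay_s late
    while the camera yaws at yaw_rate_rad_s.

    Small-angle approximation for points near the image center: a yaw of theta
    radians shifts them horizontally by about f * theta pixels. The sign
    convention (positive yaw rate = larger measured disparity) is an assumption.
    """
    true_disparity = f_px * baseline_m / true_depth_m
    extra_shift = f_px * yaw_rate_rad_s * delay_s  # pixels added by the desync
    return depth_from_disparity(f_px, baseline_m, true_disparity + extra_shift)


if __name__ == "__main__":
    # Hypothetical numbers: 400 px focal, 7.5 cm baseline, point 3 m away,
    # 90 deg/s rotation, 5 ms between the two exposures.
    for rate in (math.radians(90), -math.radians(90)):
        z = desynced_depth(400.0, 0.075, 3.0, rate, 0.005)
        print(f"yaw rate {math.degrees(rate):+.0f} deg/s -> measured depth {z:.2f} m")
```

With these numbers, a point at 3 m would be triangulated at about 2.28 m or 4.37 m depending on the rotation direction. Because the extra shift is constant in pixels while the true disparity shrinks with distance, the relative error grows for farther points.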

Here is another example of 3 consecutive frames, where no disparity could be computed on the ground in the second one. In the stereo image, the horizontal shift in the second frame is not consistent with the first and third frames (look at the poles: the disparity looks smaller on the left side but larger on the right side of the image):

For the RealSense database, I don't see any stereo sync issue. The trajectory looks as expected. The image resolution is quite low (~120p); a higher resolution (~480p) could help get more loop closures. Using SIFT can also help (if your OpenCV is built with it).

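For example, to reprocess the database with SIFT (`Kp/DetectorStrategy 1`), extracting new features instead of reusing the odometry ones (`Mem/UseOdomFeatures false`):

```bash
rtabmap-reprocess --Mem/UseOdomFeatures false --Kp/DetectorStrategy 1 realsense.db output.db
```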
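If you want to compare a few parameter sets (for example SIFT against the default detector), the same command can be scripted. This is a minimal sketch with hypothetical helper names (`reprocess_command`, `run_reprocess`); it only reuses the `--Group/Name value` option form and the two parameters from the command above, and it only prints the command unless `dry_run=False` is passed.

```python
import shlex
import subprocess


def reprocess_command(input_db, output_db, params):
    """Build an rtabmap-reprocess argument list; params maps 'Group/Name' -> value."""
    cmd = ["rtabmap-reprocess"]
    for name, value in params.items():
        cmd += [f"--{name}", str(value)]
    cmd += [input_db, output_db]
    return cmd


def run_reprocess(input_db, output_db, params, dry_run=True):
    """Print the command and, if dry_run is False, actually run it."""
    cmd = reprocess_command(input_db, output_db, params)
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises if rtabmap-reprocess fails
    return cmd


# Same options as the command above: SIFT, without reusing odometry features
SIFT_PARAMS = {"Mem/UseOdomFeatures": "false", "Kp/DetectorStrategy": 1}

if __name__ == "__main__":
    run_reprocess("realsense.db", "output.db", SIFT_PARAMS)
```

Keeping the parameters in a dictionary makes it easy to loop over several configurations and write each result to a different output database.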

It seems you didn't feed the camera's IMU data to rtabmap; it could help keep the map aligned with gravity.
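The reason the IMU helps is that, when the sensor is not accelerating, the accelerometer measures the reaction to gravity, which makes roll and pitch observable (yaw is not). The sketch below shows only that basic idea with the usual tilt formulas; it is not how rtabmap fuses IMU data internally. It assumes x-forward, y-left, z-up axes (so a level sensor reads about (0, 0, +9.81)); the IMU frame reported by your camera may use different axes and would need to be converted first. The sample readings are made up.

```python
import math


def roll_pitch_from_accel(ax, ay, az):
    """Roll and pitch (radians) from one accelerometer sample of a static sensor.

    Assumed axes: x forward, y left, z up, so a level sensor reads (0, 0, +9.81).
    Yaw cannot be recovered from gravity alone.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def mean_accel(samples):
    """Average several (ax, ay, az) samples to reduce noise before estimating tilt."""
    n = len(samples)
    return tuple(sum(s[i] for s in samples) / n for i in range(3))


if __name__ == "__main__":
    # Hypothetical readings from a camera tilted slightly forward (nose down)
    samples = [(-0.52, 0.01, 9.79), (-0.50, -0.02, 9.80), (-0.51, 0.00, 9.80)]
    roll, pitch = roll_pitch_from_accel(*mean_accel(samples))
    print(f"roll {math.degrees(roll):.1f} deg, pitch {math.degrees(pitch):.1f} deg")
```

Without this information, visual odometry alone has no absolute reference for "down", so small tilt errors can accumulate and make the map slowly lean.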

For VIO integration, you would need to experiment with the approaches available. If one of them provides an odometry topic, integrating it with rtabmap is mostly plug-and-play: just feed that topic to the rtabmap node.

For SuperPoint, I recently updated my computer to the latest PyTorch and it worked. The only thing is to make sure to re-create the superpoint.pt file with your current PyTorch version, using this tracing script:

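```python
import torch

# demo_superpoint.py comes from the SuperPointPretrainedNetwork repository
from demo_superpoint import SuperPointNet

# Load the pretrained weights; map_location="cpu" also makes this work on a CPU-only machine
model = SuperPointNet()
model.load_state_dict(torch.load("superpoint_v1.pth", map_location="cpu"))
model.eval()

# Dummy grayscale input in N x C x H x W layout, only used to trace the graph
example = torch.rand(1, 1, 640, 480)
traced_script_module = torch.jit.trace(model, example)

# Save the TorchScript model generated with the current PyTorch version
traced_script_module.save("superpoint_v1.pt")
```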
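After re-creating the file, a quick sanity check is to load it back with `torch.jit.load` and compare the output shapes with what SuperPointNet produces: a 65-channel keypoint map and a 256-channel descriptor map, both at 1/8 of the input resolution. This is a minimal sketch; the helper names are hypothetical, and the expected shapes assume the network defined in demo_superpoint.py. PyTorch is imported inside the check function so the shape helper can be used without it.

```python
def expected_superpoint_shapes(batch, height, width):
    """Output shapes of SuperPointNet for an N x 1 x H x W input.

    semi: N x 65 x H/8 x W/8 (64 cell positions + 1 "no keypoint" bin)
    desc: N x 256 x H/8 x W/8
    """
    if height % 8 or width % 8:
        raise ValueError("height and width should be multiples of 8")
    return (batch, 65, height // 8, width // 8), (batch, 256, height // 8, width // 8)


def check_traced_model(path="superpoint_v1.pt", height=640, width=480):
    """Load the traced model, run a random image through it and check output shapes."""
    import torch  # imported here so expected_superpoint_shapes works without PyTorch

    model = torch.jit.load(path, map_location="cpu")
    model.eval()
    with torch.no_grad():
        semi, desc = model(torch.rand(1, 1, height, width))
    exp_semi, exp_desc = expected_superpoint_shapes(1, height, width)
    assert tuple(semi.shape) == exp_semi, semi.shape
    assert tuple(desc.shape) == exp_desc, desc.shape
    return tuple(semi.shape), tuple(desc.shape)
```

Calling `check_traced_model()` in the folder containing the new superpoint_v1.pt should return `((1, 65, 80, 60), (1, 256, 80, 60))` for the default 640 x 480 dummy input used by the tracing script.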

cheers,

Mathieu
