Re: Bag of Binary Words VS RTABMap

Posted by matlabbe on

URL: http://official-rtab-map-forum.206.s1.nabble.com/Bag-of-Binary-Words-VS-RTABMap-tp2335p2354.html

Hi,

The biggest difference between the timings above and those in the original RTAB-Map paper is the parameter "Kp/IncrementalFlann" (which didn't exist when the paper was written). For example, if I set "-Kp/IncrementalFlann false" as in the Benchmark page (to reproduce the experiment exactly), we get the ~1.4 sec processing time you mentioned, because with "Kp/IncrementalFlann=false" the vocabulary is reconstructed after each image. Note that in this case the acquisition time was set to 2 seconds and the time limit to 1.4 sec. The limit could be set to 700 ms, for example, so that the maximum processing time would be bounded around 700 ms, independently of the number of images processed.

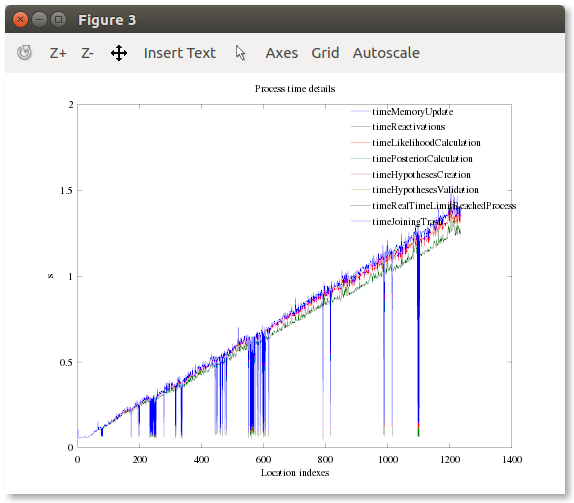

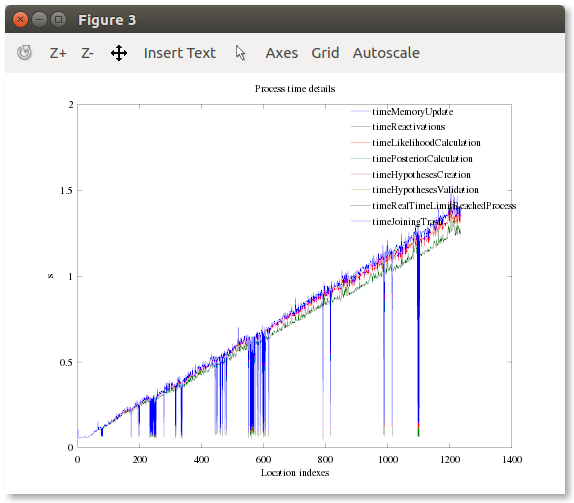

Besides the inherently costly vocabulary structure update of RTAB-Map, if we look only at the time of the likelihood/posterior computation (the bag-of-words part of the DBoW figure you've shown), we get ~40 ms for likelihood and ~70 ms for posterior (total ~110 ms, 85% Recall at 100% Precision). There are some new options to reduce this time, though. If I set "-Kp/TfIdfLikelihoodUsed true" (TF-IDF search approach) and "-Bayes/FullPredictionUpdate false", they can be reduced to ~0.8 ms and ~8 ms respectively (total ~9 ms) after 1237 images, while keeping high Precision/Recall (86% Recall at 100% Precision in this case). If we extrapolate linearly to 25000 images and ignore memory management, which could bound those times, we would have ~16 ms likelihood and ~176 ms posterior computation time, which is indeed higher than the ~10 ms of DBoW. Here is a breakdown (note that I re-used "Kp/IncrementalFlann=true" here to save some time repeating the same experiment):
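As a side note on the linear extrapolation mentioned above, here is a minimal Python sketch of the arithmetic. The function and variable names are made up for illustration, and the inputs are the rounded timings quoted in this post, not the raw measurements. It assumes the time grows proportionally with the number of images and that memory management never kicks in.

```python
def extrapolate_linear(time_ms, n_measured, n_target):
    """Scale a timing measured after n_measured images to n_target images,
    assuming cost grows proportionally with the number of images."""
    return time_ms * n_target / n_measured


N_MEASURED = 1237   # images processed when the timings were taken
N_TARGET = 25000    # dataset size used for the comparison with DBoW

# Rounded timings quoted in the post (assumed inputs, not raw measurements)
likelihood_ms = extrapolate_linear(0.8, N_MEASURED, N_TARGET)
posterior_ms = extrapolate_linear(8.0, N_MEASURED, N_TARGET)

print(f"likelihood: ~{likelihood_ms:.1f} ms")
print(f"posterior:  ~{posterior_ms:.1f} ms")
```

With these rounded inputs the likelihood comes out at about 16 ms, matching the figure above. The posterior comes out nearer 162 ms than 176 ms, presumably because the ~8 ms quoted above is itself rounded from the measured value.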

cheers,

Mathieu

