Re: How to process RGBD-SLAM datasets with RTAB-Map?

Posted by matlabbe on Jan 10, 2017; 11:16pm

URL: http://official-rtab-map-forum.206.s1.nabble.com/How-to-process-RGBD-SLAM-datasets-with-RTAB-Map-tp939p2546.html

Hi,

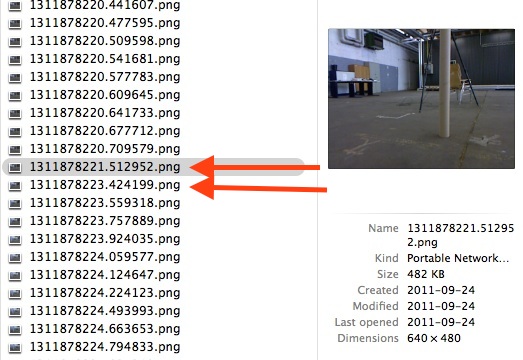

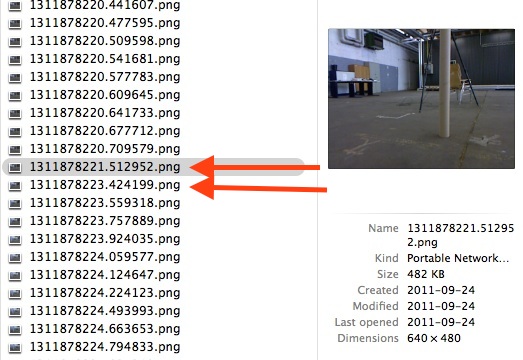

Just tried the dataset. With default parameters, the odometry gets lost exactly where there is a huge delay between two consecutive images in the dataset (~2 s, versus ~30 ms normally), so odometry cannot follow the motion.
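A gap like this can be spotted before running RTAB-Map. The following is a minimal Python sketch that assumes the dataset ships a TUM-style `rgb.txt` list (one `timestamp filename` pair per line, `#` for comments). The function name `find_frame_gaps` and the 0.5 s threshold are hypothetical choices for illustration. They are not part of RTAB-Map.

```python
from typing import Iterable, List, Tuple


def find_frame_gaps(lines: Iterable[str], max_gap: float = 0.5) -> List[Tuple[float, float, float]]:
    """Return (previous_stamp, stamp, gap) for every pair of consecutive frames
    whose timestamps differ by more than max_gap seconds.

    Assumption: each non-comment line starts with a timestamp in seconds
    (TUM-style rgb.txt); this helper is hypothetical, not an RTAB-Map tool.
    """
    stamps = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment headers
        stamps.append(float(line.split()[0]))

    gaps = []
    for prev, cur in zip(stamps, stamps[1:]):
        if cur - prev > max_gap:
            gaps.append((prev, cur, cur - prev))
    return gaps


if __name__ == "__main__":
    # Made-up timestamps: ~33 ms spacing, then a 2 s hole like the one in the dataset.
    rgb_txt = [
        "# color images",
        "100.000 rgb/100.000.png",
        "100.033 rgb/100.033.png",
        "102.033 rgb/102.033.png",
        "102.066 rgb/102.066.png",
    ]
    for prev, cur, gap in find_frame_gaps(rgb_txt):
        print(f"gap of {gap:.3f} s between {prev:.3f} and {cur:.3f}")
```

Frames flagged this way are where frame-to-frame odometry is most likely to lose track, because the camera may have moved too far between the two images for features to be matched.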

Another observation is that there are few discriminative visual features, and they are often more than 4 meters away. I therefore switched from 3D-to-3D to 3D-to-2D motion estimation (Vis/EstimationType=1) so that far features can be used. I also lowered the GFTT quality level from 0.01 to 0.001 (GFTT/QualityLevel=0.001) to extract more features. Here is the rgbdslam.yaml I used too (don't forget to set the depth scale factor to 5):


%YAML:1.0
camera_name: rgbdslam
image_width: 0
image_height: 0
camera_matrix:
   rows: 3
   cols: 3
   data: [ 525., 0., 3.1950000000000000e+02, 0., 525., 2.3950000000000000e+02, 0., 0., 1. ]
distortion_coefficients:
   rows: 1
   cols: 5
   data: [ 0., 0., 0., 0., 0. ]
rectification_matrix:
   rows: 3
   cols: 3
   data: [ 1., 0., 0., 0., 1., 0., 0., 0., 1. ]
projection_matrix:
   rows: 3
   cols: 4
   data: [ 525., 0., 3.1950000000000000e+02, 0., 0., 525., 2.3950000000000000e+02, 0., 0., 0., 1., 0. ]
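To see how the depth scale factor and the camera_matrix above fit together, here is a minimal Python sketch. It rests on two assumptions that are not stated in the post. The first is that the dataset stores 16-bit depth with 5000 units per meter (the TUM RGB-D convention). The second is that the depth is otherwise interpreted as millimetres (1000 units per meter). Under those assumptions, the factor 5 is simply 5000 / 1000. The helper names `raw_depth_to_meters` and `back_project` are hypothetical.

```python
from typing import Tuple

# Intrinsics copied from camera_matrix in rgbdslam.yaml (fx, fy, cx, cy).
FX, FY = 525.0, 525.0
CX, CY = 319.5, 239.5

# Assumption: dataset depth uses 5000 units per meter, while 16-bit depth is
# otherwise read as millimetres (1000 units per meter), hence a factor of 5.
DATASET_UNITS_PER_METER = 5000.0
MM_PER_METER = 1000.0
DEPTH_SCALE_FACTOR = DATASET_UNITS_PER_METER / MM_PER_METER  # 5.0


def raw_depth_to_meters(raw: int) -> float:
    """Divide by the scale factor to get millimetres, then convert to meters."""
    millimeters = raw / DEPTH_SCALE_FACTOR
    return millimeters / MM_PER_METER


def back_project(u: float, v: float, raw: int) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) with a raw depth value to a 3D point (m)."""
    z = raw_depth_to_meters(raw)
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return x, y, z


if __name__ == "__main__":
    # Without the factor 5, a raw value of 5000 would be read as 5 m instead of 1 m.
    print(raw_depth_to_meters(5000))          # 1.0
    print(back_project(424.5, 239.5, 5000))   # (0.2, 0.0, 1.0)
```

Forgetting the factor would scale every 3D point by 5. This distorts 3D-to-3D and 3D-to-2D motion estimates alike.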

Results: the odometry sometimes gets lost, but it recovers on the next frame. Because the camera is quite shaky and the visual features are far away, the odometry can be poor in some places. Overall, here is the full run (the gray line is the ground truth):

Source parameters:

cheers,

Mathieu
