Re: RTAB-Map and Unity integration

Posted by viniciusbs on

URL: http://official-rtab-map-forum.206.s1.nabble.com/RTAB-Map-and-Unity-integration-tp4925p5096.html

Hi Mathieu,

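It was because when subscribe_scan is set to true, rtabmap defaults Grid/FromDepth to false, so I couldn't actually observe the difference. But I think this is still not quite the effect I was looking for. Is there a way to filter the clouds before they are processed by rtabmap? I mean something similar to what the "regenerate clouds" option in the GUI does when exporting the 3D map, but live, so that noisy cloud points don't get registered in the wrong place.

In any case, I'm still unable to get a good map of my environment, so I built this "minimal case" example to help me debug the problems I'm having. I was hoping you could also help me with it.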

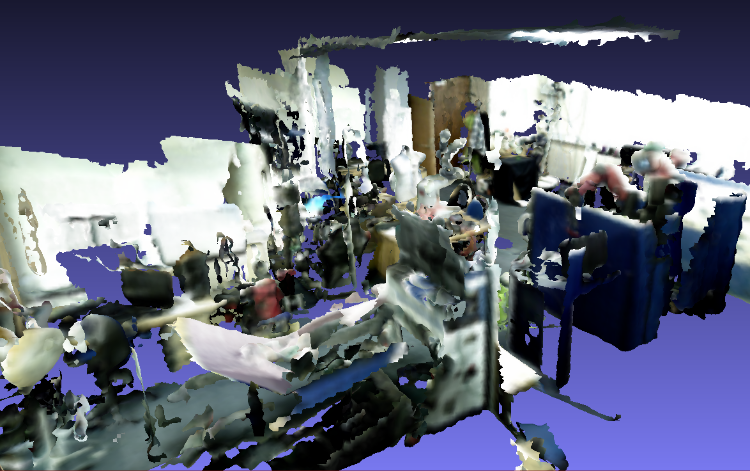

First, I followed all the camera calibration procedures recommended on the rtabmap page: both the regular RGB and depth calibration and the CLAMS approach. After this, I ran rtabmap standalone (built from source) with the handheld camera inside my lab; screenshots of the results are below. The map has some distortions, but the general shape of the objects is preserved.

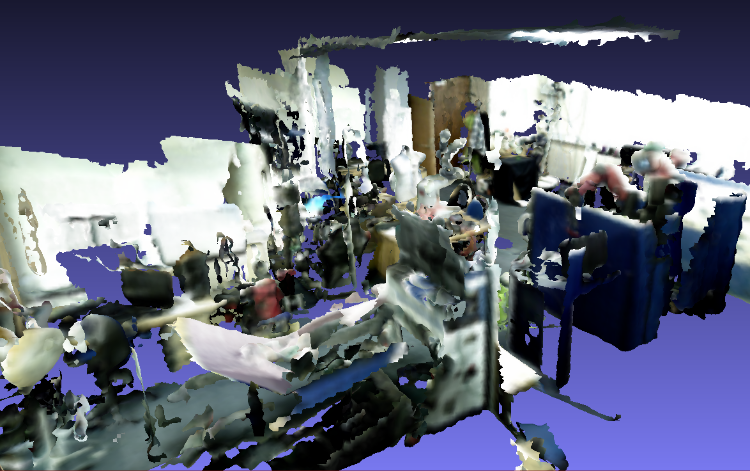

After that, I tried the same thing in the corridor outside the lab, which is the area I actually want to map.

This time, however, the objects come out distorted. My only conclusion is that this environment is harder to map because the images contain fewer features (and maybe because of the small repetitive patterns on the floor?).

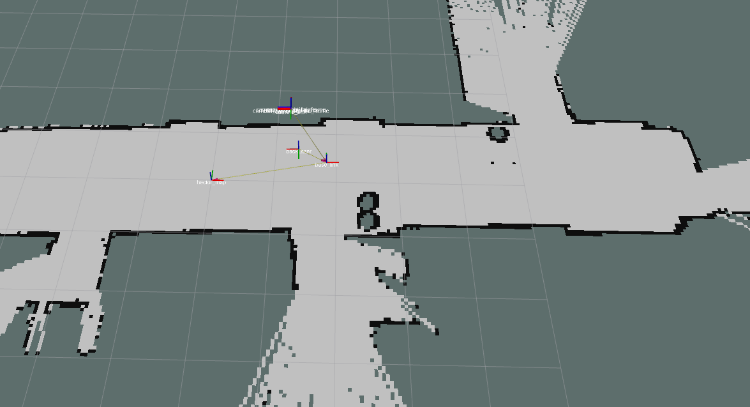

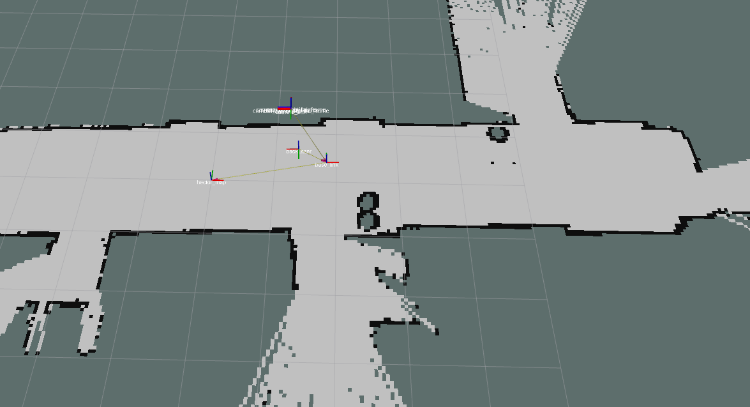

So, my next step was to add the laser scanners to see whether odometry could be improved. This time, to reduce TF alignment problems, I used only the best laser scanner on our robot, which is mounted on its back. Using just this scanner with hector_mapping gives me pretty good 2D mapping and localization accuracy, as can be seen in the figures below.

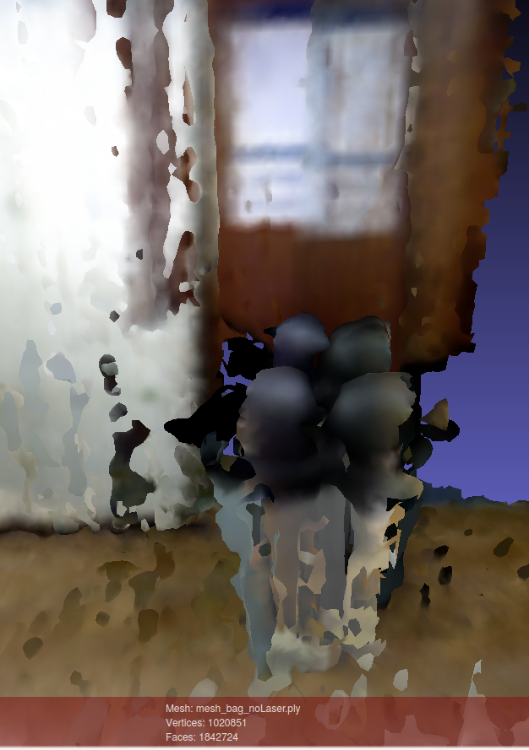

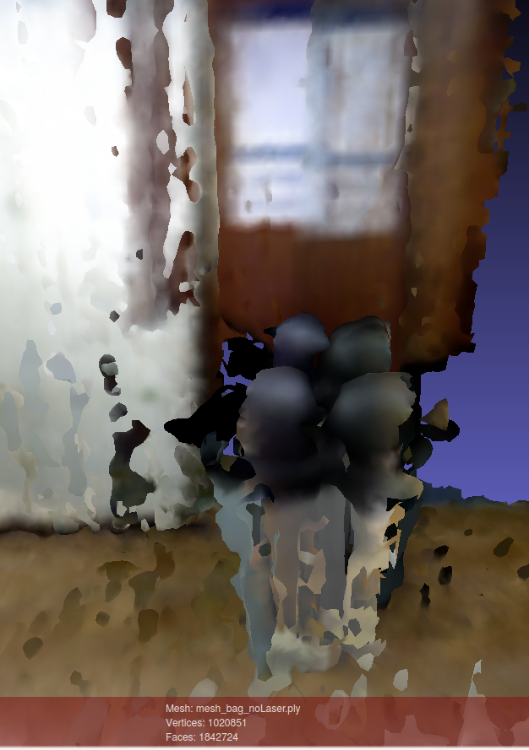

So I thought that this would surely help improve the 3D mapping quality. I mounted the Asus camera facing the same direction as the laser and got the best TF alignment I could, focusing especially on closer ranges, as you had recommended. However, to my surprise, laser+hector does not help much, and might even decrease performance a little, as can be seen below:

Both meshes above were built from the same bag, which I copied (compressed) to this link. For the laser-based odometry, I used a custom launch file (also added to the link), adapted from demo_hector_mapping.launch in the tutorials, and simply ran it with the bag:=true argument. For the camera-only setup, I filtered the tf messages out of the bag and ran:

roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start" rgb_topic:=/camera/data_throttled_image depth_topic:=/camera/data_throttled_image_depth camera_info_topic:=/camera/data_throttled_camera_info use_sim_time:=true rviz:=true
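
A minimal sketch of the tf filtering step mentioned above, assuming the ROS 1 rosbag Python API. The helper names (keep_message, filter_bag) and the file names are hypothetical, and dropping only /tf is an assumption: a bag that also records /tf_static may need that topic excluded as well.

```python
def keep_message(topic, excluded_topics=("/tf",)):
    """Return True if a message on this topic should be copied to the output bag."""
    return topic not in excluded_topics


def filter_bag(in_path, out_path, excluded_topics=("/tf",)):
    """Copy every message except those on excluded_topics; return the number kept."""
    # ROS 1 Python package; imported here so keep_message can be used without ROS.
    import rosbag

    kept = 0
    with rosbag.Bag(in_path) as in_bag, rosbag.Bag(out_path, "w") as out_bag:
        for topic, msg, stamp in in_bag.read_messages():
            if keep_message(topic, excluded_topics):
                # Reuse the recorded timestamp so playback timing is unchanged.
                out_bag.write(topic, msg, stamp)
                kept += 1
    return kept

# Example call with placeholder file names (requires a ROS 1 environment):
# filter_bag("input.bag", "camera_only.bag")
```

The same result can usually be had from the command line with rosbag filter and a topic expression; the Python form is shown only because it makes the excluded topic list explicit.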

I also copied to the link the rtabmap databases generated after the run and the exported mesh files.

Finally, I noticed that the two setups above seem to fail for different reasons. With the camera only, the problem seems to be poor odometry along the x-axis of the path: object surfaces appear relatively smooth, but their fronts and backs are not correctly aligned, even after a loop closure is detected. With the laser setup, on the other hand, there is a weird behaviour that can be observed around 33 seconds into the bag. Before that point the trash can is mapped fine, but afterwards new points start to be added with a depth offset. I would have thought this was a camera distortion problem, but it doesn't occur with the camera-only setup.
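
One way to check the x-axis suspicion would be to compare the camera-only trajectory against the laser-based one, axis by axis. The sketch below is only an illustration under stated assumptions: the pose samples are made up, both trajectories are assumed to be expressed in the same frame and sampled at the same timestamps, and per_axis_rmse is a hypothetical helper, not part of rtabmap or hector_mapping.

```python
import math


def per_axis_rmse(poses_a, poses_b):
    """RMS difference per axis between two equally sampled (t, x, y) trajectories."""
    if len(poses_a) != len(poses_b) or not poses_a:
        raise ValueError("trajectories must be non-empty and equally long")
    sum_sq_x = 0.0
    sum_sq_y = 0.0
    for (ta, xa, ya), (tb, xb, yb) in zip(poses_a, poses_b):
        if abs(ta - tb) > 1e-6:
            raise ValueError("trajectories must share timestamps")
        sum_sq_x += (xa - xb) ** 2
        sum_sq_y += (ya - yb) ** 2
    n = len(poses_a)
    return math.sqrt(sum_sq_x / n), math.sqrt(sum_sq_y / n)


# Made-up samples: (time in s, x in m, y in m). Not data from the bag.
camera = [(0.0, 0.0, 0.0), (1.0, 0.8, 0.0), (2.0, 1.6, 0.1)]
laser = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.1)]
rmse_x, rmse_y = per_axis_rmse(camera, laser)
print("RMSE x: %.3f m, RMSE y: %.3f m" % (rmse_x, rmse_y))
```

A much larger error along x than along y would be consistent with the misalignment described above, although it would not by itself say which odometry source is at fault.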

So, now I don't really know where to go with my "minimal case debug" attempt, or how to improve the quality of the 3D maps. Any insights?

Sorry for the long post, but I wanted to describe all the steps I've taken, to see if anything can help.

Cheers,

Vinicius
