Re: Troubleshooting mapping with kinect v2, rplidar and jetson tx2

Posted by matlabbe on

URL: http://official-rtab-map-forum.206.s1.nabble.com/Troubleshooting-mapping-with-kinect-v2-rplidar-and-jetson-tx2-tp5517p5534.html

Hi,

I took a look at the database.

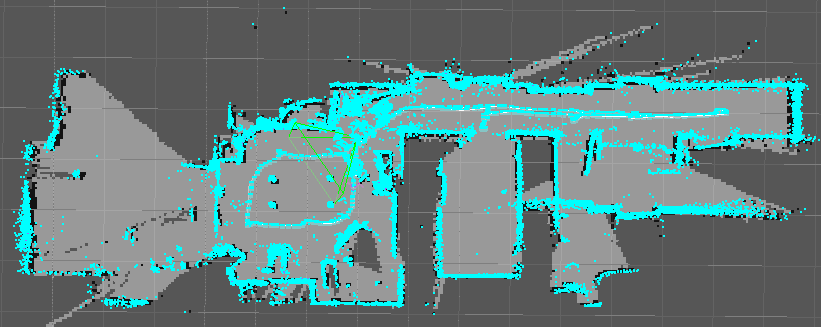

Scan matching seems to work well for most of the mapping, but when the robot is rotating, wheel odometry seems to drift more and scan matching has more difficulty correcting it. Increasing Icp/MaxCorrespondenceDistance from 0.05 to 0.1 helped in some cases.
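To give a rough feel for why a larger Icp/MaxCorrespondenceDistance can help during rotation, here is a minimal sketch (not RTAB-Map code). It assumes a pure rotation error in the odometry guess and that the parameter is in meters; the function name and the ranges used are hypothetical. A scan point at range r moves by the chord length 2·r·sin(θ/2) when the guess is off by θ, so the threshold limits how much rotation error still leaves the true match within reach. Real ICP picks nearest neighbors rather than true pairs, so treat this as an order-of-magnitude estimate only.

```python
import math


def max_tolerated_rotation_error_deg(max_corr_dist_m, point_range_m):
    """Rotation error (degrees) at which a scan point at the given range
    is displaced by exactly max_corr_dist_m (chord length 2*r*sin(theta/2))."""
    ratio = max_corr_dist_m / (2.0 * point_range_m)
    if ratio >= 1.0:
        # The point is so close that no rotation can move it past the threshold.
        return 180.0
    return math.degrees(2.0 * math.asin(ratio))


if __name__ == "__main__":
    # 0.05 and 0.1 are the two values mentioned above; ranges are illustrative.
    for max_dist in (0.05, 0.1):
        for point_range in (1.0, 3.0, 6.0):
            err = max_tolerated_rotation_error_deg(max_dist, point_range)
            print(f"max dist {max_dist} m, point at {point_range} m: "
                  f"about {err:.2f} deg of rotation error tolerated")
```

Under these assumptions, doubling the threshold roughly doubles the rotation error that distant points can absorb, which is consistent with the improvement seen only in some cases.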

You could also decrease the rotation speed to make scan matching easier (unless you can get more accurate odometry on rotation).

When the robot is rotating, the RGB and depth images are poorly synchronized. This makes the 3D map look bad, and loop closures won't be as accurate in those situations. However, in your case you are using a lidar, which corrects most of the not-so-accurate loop closures computed by the camera.
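As a back-of-the-envelope illustration of the synchronization issue, the sketch below estimates how far color and depth drift apart in the image when the two frames are captured at slightly different times during a rotation. It assumes a pinhole camera, a pure rotation about the camera's vertical axis, and a pixel near the image center. The angular speed, time offset, and focal length are hypothetical values, not measurements from this database or a Kinect v2 calibration.

```python
import math


def pixel_shift(angular_speed_rad_s, time_offset_s, focal_length_px):
    """Approximate horizontal misalignment (pixels) between RGB and depth
    for a pixel near the image center, given a capture time offset
    while the camera rotates at a constant angular speed."""
    rotation_between_frames = angular_speed_rad_s * time_offset_s
    return focal_length_px * math.tan(rotation_between_frames)


if __name__ == "__main__":
    # Hypothetical numbers: 30 ms offset, 525 px focal length.
    for speed in (0.25, 0.5, 1.0):
        shift = pixel_shift(speed, 0.03, 525.0)
        print(f"{speed} rad/s -> about {shift:.1f} px of RGB/depth misalignment")
```

With these made-up numbers the misalignment grows almost linearly with rotation speed, which is another reason slower rotation gives cleaner 3D maps.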

I recommend using "qhd" quality for the Kinect to get the depth image registered to the color camera, not color registered to the depth image as in "sd" mode (that causes a lot of black pixels in the color images, which affects feature extraction). Using "qhd" will also help if you use visual odometry (look at the kinectv2 example launch file for some tuning parameters for qhd images).

For the screenshot in your first post, the RVIZ global frame should be "map", not "odom"; otherwise the rtabmap_ros/MapCloud display won't work correctly (for example, clouds get added on top of each other in the same place).

cheers,

Mathieu

URL: http://official-rtab-map-forum.206.s1.nabble.com/Troubleshooting-mapping-with-kinect-v2-rplidar-and-jetson-tx2-tp5517p5534.html

Hi,

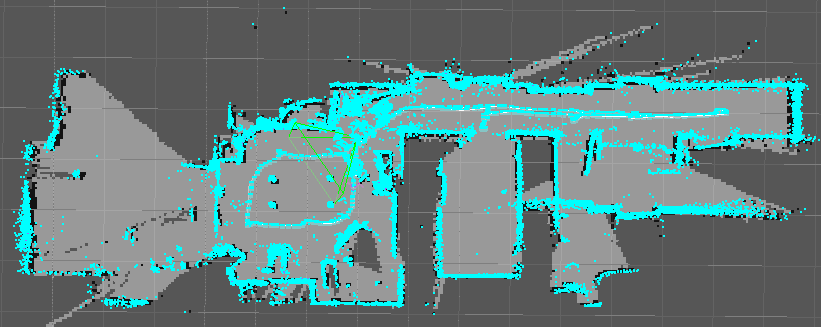

I took a look at the database.

Scan matching seems working good for most of the mapping, but when the robot is rotating, wheel odom seems to drift more and scan matching has more difficulty to correct it. Increasing Icp/MaxCorrespondenceDistance from 0.05 to 0.1 helped in some case.

Maybe decrease the rotation speed to make scan matching easier (unless you can get more accurate odometry on rotation).

When the robot is rotating, the RGB and depth images are poorly synchronized, this will make the 3D map looking bad and loop closures won't be as accurate in those situations. However, in your case you are using a lidar, which corrects most of the not-so-accurate loop closures computed by the camera.

I recommend to use "qhd" quality for the kinect to get depth image registered to color camera, not color registered to depth image like in "sd" mode (this causes a lot of black pixels in color images, which affects feature extraction). Using "qhd" will also help if you use visual odometry (look at this kinectv2 example launch file for some tuning parameters for qhd images).

For the screenshot of your first post, the RVIZ global frame should be "map", not "odom", otherwise the rtabmap_ros/MapCloud display won't work correctly (like adding clouds over the same place).

cheers,

Mathieu

| Free forum by Nabble | Edit this page |