Re: RTAB SLAM using Wheel Encoder+imu+2d rotating Lidar

Posted by tsdk on

URL: http://official-rtab-map-forum.206.s1.nabble.com/RTAB-SLAM-using-Wheel-Encoder-imu-2d-rotating-Lidar-tp5741p5826.html

Thanks for your reply. If I also add a Kinect to my system, do you think RTAB-Map would work well enough? My environment is mostly featureless; only the junctions can really be considered features. Since RTAB-Map matches corner/keypoint features extracted from the Kinect images against its database, I am a bit skeptical that it would perform well in such an environment.

I also tried another approach: I added a 3D LiDAR (Velodyne-16), which provides a point cloud directly. My current launch file is:

    <?xml version="1.0" encoding="UTF-8"?>
    <launch>
      <group ns="rtabmap">
        <node pkg="rtabmap_ros" type="rtabmap" name="rtabmap">
          <remap from="scan_cloud" to="/velodyne_points"/>
          <remap from="odom" to="/odom"/>
          <remap from="grid_map" to="/map"/>
        </node>
      </group>
    </launch>

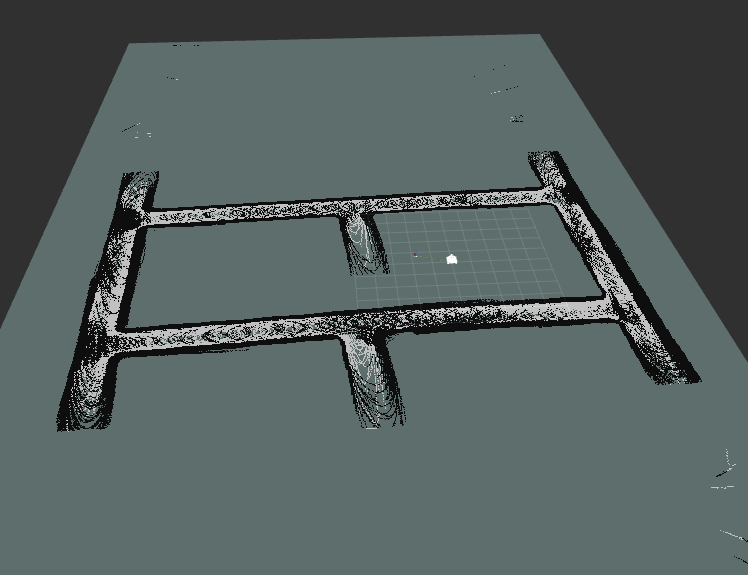

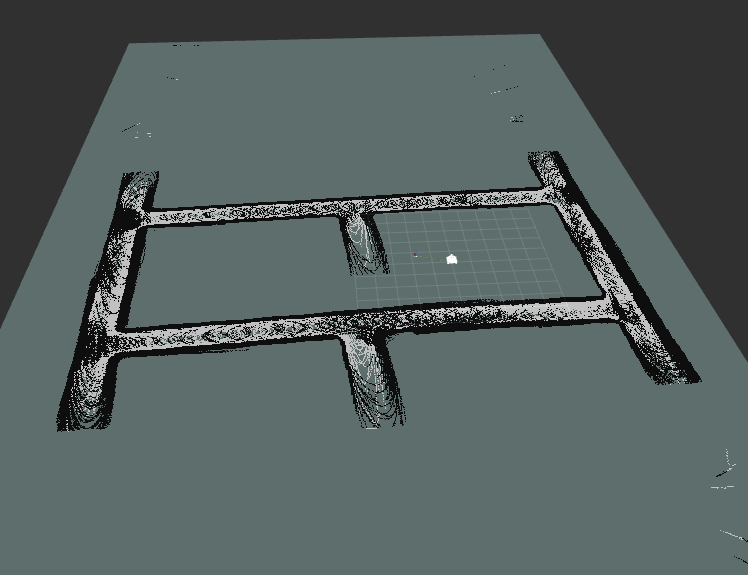

When I play back the bag file, the point cloud is not retained when I visualize it in RViz: it disappears as the robot moves on, and I end up with a 2D map instead of a 3D one.

Surprisingly, it is still able to close the loop.
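One possible reading of the RViz symptom: if the display is subscribed to the live `/velodyne_points` topic, a PointCloud2 display with the default decay time only shows the most recent scan, so a persistent 3D map has to be assembled from the scans stored at each graph node, each transformed by that node's (possibly loop-closure-optimized) pose. That would also be consistent with loop closure working even though the cloud does not persist. The sketch below illustrates that assembly step in plain Python; the function names, the 4x4 pose format and the 0.1 m voxel size are assumptions for illustration, not RTAB-Map's internal implementation.

```python
"""Sketch: build a persistent global point cloud from scans stored at graph nodes.

Assumptions (hypothetical names): each node stores a scan in the robot frame and a
4x4 homogeneous pose in the map frame; after a loop closure the poses change and
the map is simply re-assembled from the updated poses.
"""
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]


def transform_points(pose: Matrix4, points: List[Point]) -> List[Point]:
    """Apply a rigid 4x4 homogeneous transform to a list of 3D points."""
    out = []
    for x, y, z in points:
        out.append((
            pose[0][0] * x + pose[0][1] * y + pose[0][2] * z + pose[0][3],
            pose[1][0] * x + pose[1][1] * y + pose[1][2] * z + pose[1][3],
            pose[2][0] * x + pose[2][1] * y + pose[2][2] * z + pose[2][3],
        ))
    return out


def assemble_map(poses: Dict[int, Matrix4], scans: Dict[int, List[Point]],
                 voxel: float = 0.1) -> List[Point]:
    """Concatenate every node's scan in the map frame, keeping one point per voxel."""
    seen = {}
    for node_id, pose in poses.items():
        for p in transform_points(pose, scans.get(node_id, [])):
            key = tuple(int(c // voxel) for c in p)  # integer voxel index of the point
            seen.setdefault(key, p)  # keep the first point that lands in each voxel
    return list(seen.values())
```

Re-running `assemble_map` with the updated poses after each optimization is what keeps the accumulated cloud consistent with the corrected trajectory; the voxel filter only bounds memory and is optional.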

In one of your posts, you suggested using a monocular RGB camera and then converting the point cloud from the Velodyne into a depth image. However, I couldn't figure out how to convert the point cloud data into a depth image.

Could you help me with this?
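The conversion asked about above is essentially a projection: transform each LiDAR point into the camera's optical frame using the LiDAR-to-camera extrinsics, project it with the pinhole intrinsics, and keep the nearest depth per pixel (a z-buffer). A minimal pure-Python sketch, assuming the extrinsics (`R`, `t`) and intrinsics (`fx`, `fy`, `cx`, `cy`) come from a prior calibration; the function and parameter names are hypothetical:

```python
"""Sketch: project a LiDAR point cloud into a depth image for a pinhole camera.

Assumptions: R (3x3) and t (3) map LiDAR coordinates into the camera optical frame
(z forward, x right, y down); fx, fy, cx, cy are the camera intrinsics in pixels.
"""
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cloud_to_depth_image(points: List[Point],
                         R: Sequence[Sequence[float]], t: Sequence[float],
                         fx: float, fy: float, cx: float, cy: float,
                         width: int, height: int) -> List[List[float]]:
    """Return a height x width depth image in metres; 0.0 marks pixels with no return."""
    depth = [[0.0] * width for _ in range(height)]
    for px, py, pz in points:
        # 1) Express the point in the camera optical frame: p_cam = R * p_lidar + t
        x = R[0][0] * px + R[0][1] * py + R[0][2] * pz + t[0]
        y = R[1][0] * px + R[1][1] * py + R[1][2] * pz + t[1]
        z = R[2][0] * px + R[2][1] * py + R[2][2] * pz + t[2]
        if z <= 0.0:
            continue  # point is behind the camera
        # 2) Pinhole projection to integer pixel coordinates
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if not (0 <= u < width and 0 <= v < height):
            continue  # point falls outside the image
        # 3) Z-buffer: keep the closest point when several land on the same pixel
        if depth[v][u] == 0.0 or z < depth[v][u]:
            depth[v][u] = z
    return depth
```

With a 16-beam sensor the resulting image will be sparse, with mostly empty rows between beams. In a ROS pipeline it would still have to be published as a depth image (for example 32FC1 in metres) with a matching camera_info and time-synchronized with the RGB image; those parts are not shown here.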
