Mapping with multiple stereocameras, localization with multiple monochrome monocular cameras

Posted by Artem Lobantsev on

URL: http://official-rtab-map-forum.206.s1.nabble.com/Mapping-with-multiple-stereocameras-localization-with-multiple-monochrome-monocular-cameras-tp6647.html

Hello, Mathieu!

I'm faced with the following task:

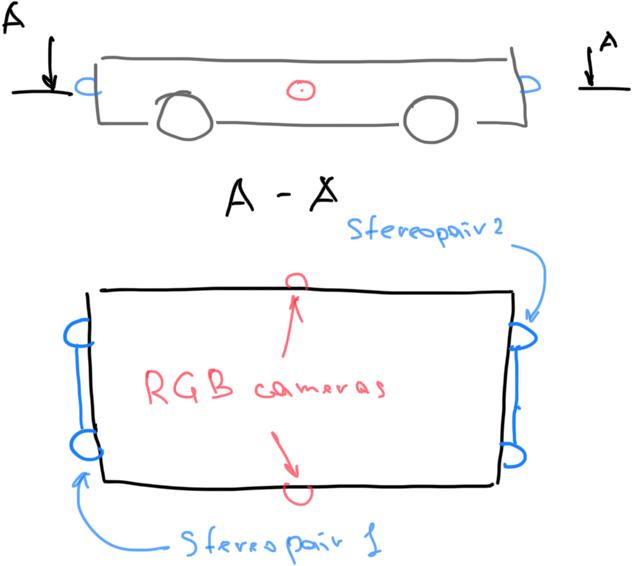

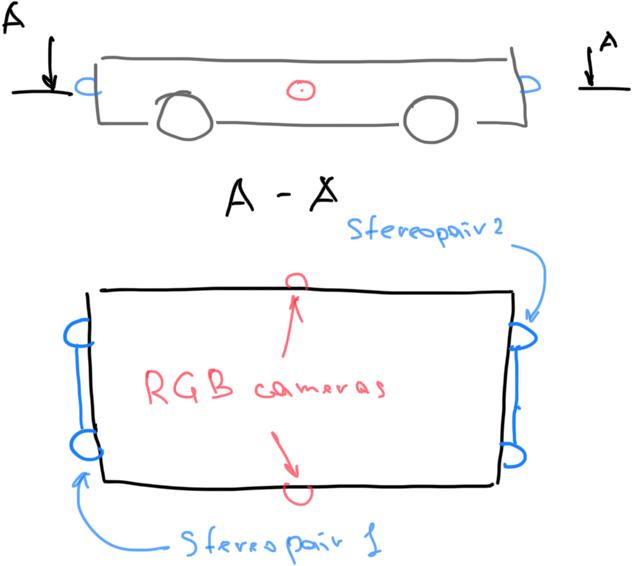

- Create a map using a robot setup with two stereo cameras (facing opposite directions, see Picture 1 below)

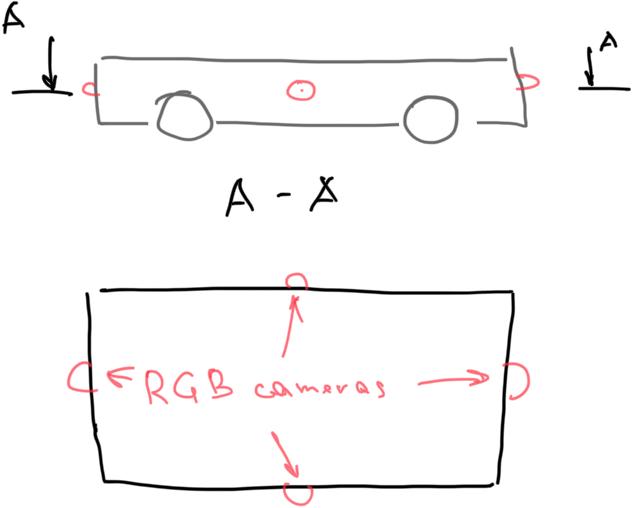

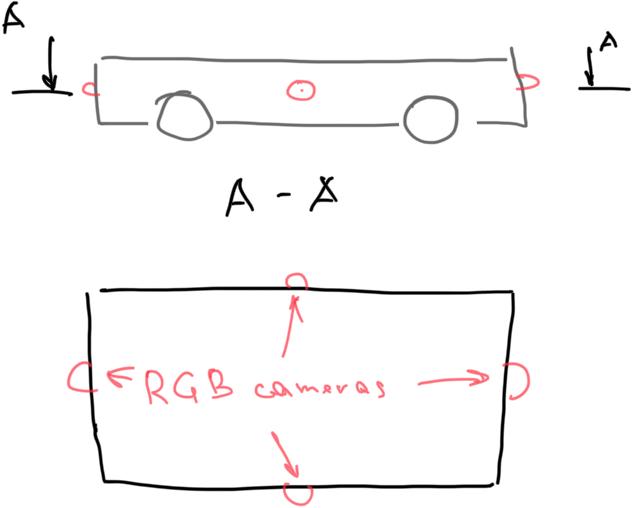

- Provide localization on that map with a different robot setup (4 RGB cameras arranged omnidirectionally, see Picture 2 below)

- No IMU, lidar, or other sensors are available (wheel odometry could be used, though)

Since the mapping area is rather large (around 1100 m^2) and may grow, I chose to use rtabmap_ros.

To handle the stereo cameras, I plan to use stereo_image_proc and disparity_to_depth to provide depth channels for the rgbd_camera0 and rgbd_camera1 topics (as in the demo_two_kinects.launch example).
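For reference, the disparity-to-depth step relies on the standard rectified-stereo relation Z = f * B / d (focal length in pixels, baseline in metres, disparity in pixels). The following is only a minimal sketch of that relation, not the rtabmap_ros implementation; the function names and the numeric values are hypothetical and chosen for illustration.

```python
import math


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres for one pixel of a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0.0:
        # Zero or negative disparity means no valid stereo match.
        return math.nan
    return focal_px * baseline_m / disparity_px


def depth_image(disparity_rows, focal_px, baseline_m):
    """Apply the conversion to a disparity image given as a list of rows."""
    return [
        [disparity_to_depth(d, focal_px, baseline_m) for d in row]
        for row in disparity_rows
    ]


if __name__ == "__main__":
    # Hypothetical calibration: 500 px focal length, 10 cm baseline.
    disparities = [[50.0, 25.0], [0.0, 10.0]]
    for row in depth_image(disparities, focal_px=500.0, baseline_m=0.1):
        print(row)
```

Depth resolution degrades quadratically with distance in this model, which is worth keeping in mind when the stereo baseline is short relative to the size of the mapped area.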

After researching this forum and your papers, I still have several questions. I'd really appreciate your answers.

1. General question: how is multi-camera mapping technically implemented (as in the demo_two_kinects.launch example)? Is it used only in the odometry node, or in mapping too?

1.1. Does your approach have the same roots as MCPTAM or MultiCol-SLAM? Namely, does a multi-camera setup improve map precision, beyond solving the "blind" camera problem (when one camera doesn't see any features, e.g. when facing a white wall)?

1.2. As I understand from what you wrote here, the images from both cameras are merged into one "meta-image" and treated as a single RGBDImage. Does that mean the resulting point clouds (and hence grid_map, octomap and so on) are also merged, and can be used by both cameras during the RGB-D mapping and localization stages? For instance, if during mapping the robot sees the same place with the other camera, does it recognize the place?

1.3. Are two monochrome stereo cameras enough for mapping?

1.4. You mentioned here that features from both cameras are used in the odometry stage, but are they also used in loop closure and graph optimization?

2. How is the monocular localization stage implemented? Can it be used in a multi-camera setup?

2.1. Does the RGB localization approach need external odometry? Are four mono cameras pointing in different directions enough?

2.2. How does monocular localization technically work after RGB-D mapping? Does it retrieve visual features from the pre-loaded map and compare them with the currently visible features? How does it deal with the absence of depth?

Thanks for your time and answers!

Best regards,

Artem

Picture 1. Mapping robot setup

Picture 2. Localization robot setup

