Export camera poses for textured mesh in multi-camera mode

URL: http://official-rtab-map-forum.206.s1.nabble.com/Export-camera-poses-for-textured-mesh-in-multi-camera-mode-tp9709.html

Hi Mathieu,

I want to generate a textured mesh from my pointcloud. I followed your guide on how to do it and it works fine for maps, created with one RGBD camera. However, for scans with 2 RGBD cameras, I cannot "export cameras in bundler format". I get the following error message when trying:

"No poses exported because of missing images. Try refreshing the cache (with clouds)."

I tried refreshing the cache, but the error persists. Is there a way to make this work?

I also tried exporting the camera poses in raw format, which gives me the following error:

"Could not find any "Camera" frame, exporting in Robot frame instead."

So I guess the information about the camera position is missing in the first place, but I do not understand why.

My parameters for launching the slam node are:

use_sim_time: True rgbd_cameras: 2 Rtabmap/DetectionRate: '5' # [Detection rate (Hz). RTAB-Map will filter input images to satisfy this rate.] odom_frame_id: odom subscribe_depth: True subscribe_rgbd: True subscribe_rgb: True subscribe_odom_info: True frame_id: front_camera_link map_frame_id: map publish_tf: True database_path: /root/Documents/rtabmap/rtabmap.db approx_sync: True Mem/IncrementalMemory: "true" Mem/InitWMWithAllNodes: "true" wait_imu_to_init: True Rtabmap/StartNewMapOnLoopClosure: "true" # [Start a new map only if there is a global loop closure with a previous map.] true: map won't be overwritten if odometry is lost Reg/Strategy: '0' # [0=Vis 1=Icp 2=VisIcp] Vis/EstimationType: '1' # [Motion estimation approach: 0:3D->3D, 1:3D->2D (PnP), 2:2D->2D (Epipolar Geometry)] Vis/InlierDistance: '0.1' # [[Vis/EstimationType = 0] Maximum distance for feature correspondences. Used by 3D->3D estimation approach.] Vis/RefineIterations: '5' # [[Vis/EstimationType = 0] Number of iterations used to refine the transformation found by RANSAC. 0 means that the transformation is not refined.] Vis/FeatureType: "2" # [0=SURF 1=SIFT 2=ORB 3=FAST/FREAK 4=FAST/BRIEF 5=GFTT/FREAK 6=GFTT/BRIEF 7=BRISK 8=GFTT/ORB 9=KAZE 10=ORB-OCTREE 11=SuperPoint 12=SURF/FREAK 13=GFTT/DAISY 14=SURF/DAISY 15=PyDetector] Vis/CorType: "0" # Correspondences: 0=Features Matching, 1=Optical Flow Vis/MaxDepth: '0' # [Max depth of the features (0 means no limit).] Vis/MaxFeatures: '2000' # [0 no limits.] OdomF2M/MaxSize: '2500' # [[Visual] Local map size: If > 0 (example 5000), the odometry will maintain a local map of X maximum words.] Rtabmap/CreateIntermediateNodes: 'true' # [Create intermediate nodes between loop closure detection. Only used when Rtabmap/DetectionRate>0.]

I launch the node by:

slam_node = launch_ros.actions.Node(

package='rtabmap_slam', executable='rtabmap', output="screen",

parameters=[

param_slam_from_file

],

remappings=[

("rgbd_image0", '/realsense_front/rgbd_image'),

("rgbd_image1", '/realsense_back/rgbd_image'),

("imu", '/imu/data'),

("odom", 'odom')],

arguments=["--delete_db_on_start"],

prefix='',

namespace='rtabmap'

)

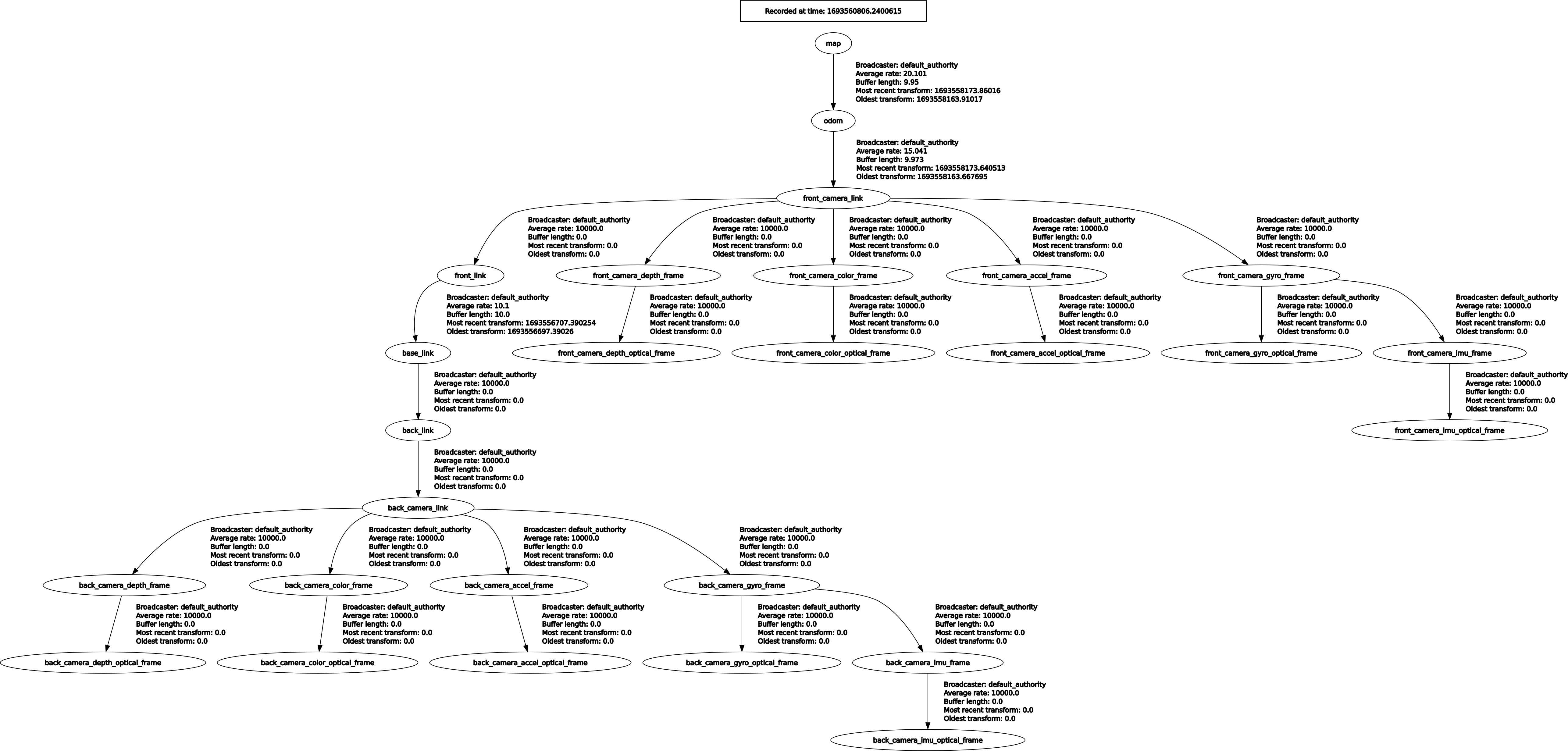

This is my tf-tree:

I built rtabmap and rtabmap_ros from source on Ubuntu 20.04 with ROS2 Humble.

Best regards,

Johannes

| Free forum by Nabble | Edit this page |