Re: Hesai QT128 with Zed2i and external fused Odometry

Posted by matlabbe on Oct 29, 2023; 2:29am

URL: http://official-rtab-map-forum.206.s1.nabble.com/Hesai-QT128-with-Zed2i-and-external-fused-Odometry-tp9512p9729.html

Hi,

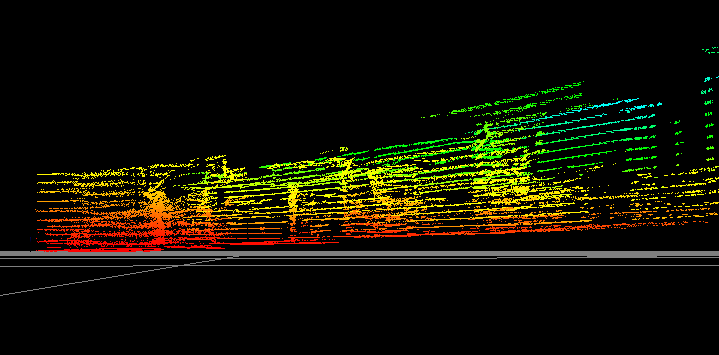

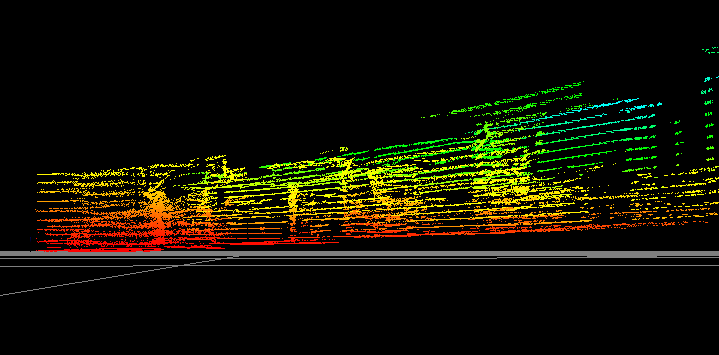

I am surprised that the lidar occupancy grids look relatively good, because the TF between base_link and the lidar frame seems wrong. The lidar looks tilted:

For the stereo, you need to use the stereo_sync node if you want to input both image and scan data to rtabmap. When the rtabmap node is set in stereo mode, it ignores the scan_cloud input. Because your grid parameter is Grid/Sensor=0 (grid built from lidar), no occupancy grids are generated, as there is no lidar input. To generate them from stereo, you would need to set Grid/Sensor=1.

For grid generation, as the robot is working in 2D, use a fast passthrough filter instead:

    'Grid/NormalsSegmentation': 'false',
    'Grid/MaxGroundHeight': '0.05',
    'Grid/MaxObstacleHeight': '1',
    'Grid/MinGroundHeight': '0',
    'Grid/NoiseFilteringRadius': '0',
    'Grid/VoxelSize': '0.05',
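
To give an idea of what a height-based passthrough filter does with these thresholds, here is a minimal plain-Python sketch. It is only an illustration, not RTAB-Map's actual implementation: the function name `passthrough_segment` and the point format are made up for this example, the lower ground bound is optional (`None` means no lower bound), and RTAB-Map's own handling of boundary or special values may differ.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical point format: (x, y, z) in metres, expressed in base_link.
Point = Tuple[float, float, float]


def passthrough_segment(
    points: Iterable[Point],
    max_ground_height: float = 0.05,
    max_obstacle_height: float = 1.0,
    min_ground_height: Optional[float] = None,
) -> Tuple[List[Point], List[Point]]:
    """Split points into (ground, obstacles) using only their z value."""
    ground: List[Point] = []
    obstacles: List[Point] = []
    for p in points:
        z = p[2]
        if z <= max_ground_height:
            # Ground band; optionally drop points below a lower bound.
            if min_ground_height is None or z >= min_ground_height:
                ground.append(p)
        elif z <= max_obstacle_height:
            # Above the ground band but still low enough to matter.
            obstacles.append(p)
        # Anything above max_obstacle_height is ignored.
    return ground, obstacles


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.02), (1.0, 0.5, 0.3), (2.0, 0.0, 1.5)]
    ground, obstacles = passthrough_segment(cloud)
    print("ground:", ground)
    print("obstacles:", obstacles)
```

Since each point only needs a couple of comparisons on z, this kind of filter is much cheaper than estimating surface normals, which is why it suits a robot moving on a flat floor.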

A Rtabmap/DetectionRate of 7 Hz is pretty high; I would start with the default of 1 Hz.

For a 3D lidar (assuming a 360° FOV):

    'RGBD/ProximityPathMaxNeighbors': '1',
    'RGBD/ProximityPathFilteringRadius': '0',
    'RGBD/ProximityAngle': '0'
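
Putting the suggestions in this post together, the parameters could be collected in one place like this. This is just a sketch: the helper name `build_rtabmap_parameters` and its `use_lidar_for_grid` flag are made up for the example, and the values are the ones mentioned in this post (passed as strings, as in the lists above).

```python
from typing import Dict


def build_rtabmap_parameters(use_lidar_for_grid: bool = True) -> Dict[str, str]:
    """Collect the RTAB-Map parameters suggested in this post as strings."""
    grid = {
        # 0: grid from lidar, 1: grid from stereo/depth (as described above).
        'Grid/Sensor': '0' if use_lidar_for_grid else '1',
        # Passthrough filtering instead of normals segmentation.
        'Grid/NormalsSegmentation': 'false',
        'Grid/MaxGroundHeight': '0.05',
        'Grid/MaxObstacleHeight': '1',
        'Grid/MinGroundHeight': '0',
        'Grid/NoiseFilteringRadius': '0',
        'Grid/VoxelSize': '0.05',
    }
    proximity = {
        # Suggested for a 3D lidar, assuming a 360 degree FOV.
        'RGBD/ProximityPathMaxNeighbors': '1',
        'RGBD/ProximityPathFilteringRadius': '0',
        'RGBD/ProximityAngle': '0',
    }
    params = {'Rtabmap/DetectionRate': '1'}  # start with the default rate
    params.update(grid)
    params.update(proximity)
    return params


if __name__ == "__main__":
    # In a ROS 2 launch file, a dict like this would typically be added to
    # the `parameters=[...]` list of the rtabmap node (assumption: your
    # launch file declares the node with launch_ros).
    for name, value in sorted(build_rtabmap_parameters().items()):
        print(f"{name}: {value}")
```

Calling `build_rtabmap_parameters(use_lidar_for_grid=False)` only switches `Grid/Sensor` to `'1'` for the stereo case; everything else stays the same.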

For your last question, if the robot has to navigate under the cart, maybe reduce `Grid/MaxObstacleHeight` so that it only sees the legs/wheels as obstacles. Otherwise, if it should see the whole cart as an obstacle most of the time and only occasionally has to go under it, you may keep the obstacle map like this and bypass nav2 with a control node dedicated to docking under the cart (like you said, maybe a line follower, or, without using the map, try to detect the wheels and navigate in the middle).

cheers,

Mathieu
