Comparison between realsense D435 vs T265 vs T265+D435 dual setup

123

123

|

Administrator

|

This post was updated on .

Hi all,

Here is a post about some experiments I did last october when I integrated a dual mode for T265 and D435 in rtabmap standalone (tested with this 3d printed mount).  I wanted to put the results somewhere to be found more easily. We can compare CPU usage between each setup, and also 3D map quality. D435i (F2M odometry, Stereo-IR mode for larger field-of-view and less motion blur): https://www.youtube.com/watch?v=8KHbiPttRlI T265 (F2M odometry + fisheye 3D reconstruction, images are rectified at 30 Hz for odometry): https://www.youtube.com/watch?v=Tm8nttMR7SQ T265 (VIO odometry + fisheye 3D reconstruction, images are rectified only at 1 hz for 3d map): https://www.youtube.com/watch?v=FwmNHHoDvvI T265+D435 (VIO odometry from T265 and Ir/depth from D435): https://www.youtube.com/watch?v=iHQOpPuVcys The big advantage of the latest approach is the computation of odometry and disparity image are done on the cameras! This kind of setup could be easily integrated on a RPI4 for example. cheers, Mathieu |

Re: Comparison between realsense D435 vs T265 vs T265+D435 dual setup

|

Hi Mathieu!

I just wanted to ask you if you could share the launch files. I am not able to use rtabmap odometry with T265, I get an error since the image is not rectified so rtabmap can't compute the odometry, and I can't find a way to rectify fisheye images. Thanks in advance!! |

|

Administrator

|

This post was updated on .

Hi,

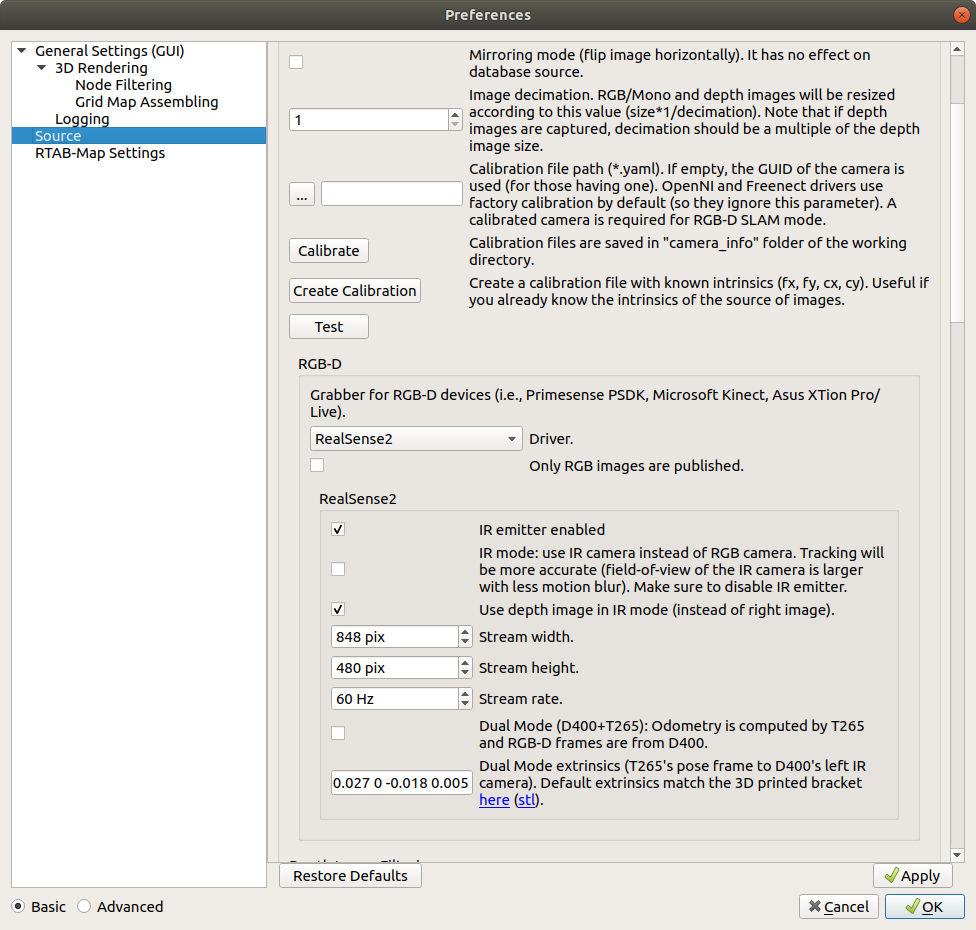

The example above was done in rtabmap standalone (without ROS) built with realsense2 support. Just click on Calibrate button in the source panel (like on the screenshot) to calibrate the T265. To use T265 with rtabmap's odometry under ROS, you would still have to calibrate using rtabmap standalone, then publish the calibration in new camera_info that can be used by rtabmap's odometry to rectify the images (note that you will need this new fix so that rtabmap's odometry can rectify the stereo images for convenience). Based on that example using T265 odometry with rtabmap on ROS, to use rtabmap's odometry with T265 images and imu: # Start realsense2: $ roslaunch realsense2_camera rs_t265.launch unite_imu_method:="linear_interpolation" # Start the camera_info publishers with the stereo fisheye calibration files: $ python camera_info_pub.py \ _url:=/home/mathieu/Documents/RTAB-Map/camera_info/905312112011_left.yaml \ image:=/camera/fisheye1/image_raw \ camera_info:=/camera/fisheye1/camera_info_calib $ python camera_info_pub.py \ _url:=/home/mathieu/Documents/RTAB-Map/camera_info/905312112011_right.yaml \ image:=/camera/fisheye2/image_raw \ camera_info:=/camera/fisheye2/camera_info_calib # Start imu filtering $ rosrun imu_filter_madgwick imu_filter_node _use_mag:=false _publish_tf:=false _world_frame:="enu" /imu/data_raw:=/camera/imu /imu/data:=/rtabmap/imu # Start RTAB-Map in stereo mode, use T265 odometry and do rectification: $ roslaunch rtabmap_ros rtabmap.launch \ args:="-d --Rtabmap/ImagesAlreadyRectified false --Optimizer/GravitySigma 0.3" \ stereo:=true \ left_image_topic:=/camera/fisheye1/image_raw \ right_image_topic:=/camera/fisheye2/image_raw \ left_camera_info_topic:=/camera/fisheye1/camera_info_calib \ right_camera_info_topic:=/camera/fisheye2/camera_info_calib \ wait_imu_to_init:=true \ imu_topic:=/rtabmap/imu cheers, Mathieu |

|

In reply to this post by matlabbe

Hello Mathieu,

I want to ask you a question about the latest approach [ T265+D435 (VIO odometry from T265 and Ir/depth from D435) ] . Are you just use RGBD for slam? Or both RGBD and fisheye 3D? Thanks! |

|

Administrator

|

On SLAM side, only RGB-D data from D435 are used. No images are fed to SLAM from T265, only odometry.

cheers, Mathieu |

|

Administrator

|

For dual D400+T265 ROS example, the official tutorial has been updated on ros wiki: http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping

|

|

Hi Mathieu,

I have setup the mapping with T265+D435 (VIO odometry from T265 and Ir/depth from D435) following the steps in : http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping My robot base is an IRobot Create Roomba. Now I want to implement the navigation using move_base. I would ask you some questions about it. Sorry if my questions aren’t well posed , I am beginner in this field , struggling to get my hands dirty with hardware and Rtabmap. 1) What should be the correct odom_topic to feed in move_base ? /t265/odom/sample or /rtabmap/localization_pose ? And map_topic /rtabmap/grid_map or /rtabmap/proj_map ? 2) Is it meaningful to use the /scan from depthimage_to_laserscan during map and navigation ( in addition to RGBD and VIO provided by D435 and T265 ) to make the system more robust ? 3) How Rtabmap modify the values in the costmap using the pointcloud from depth camera ? 4) How should result the tf tree of the robot ? Mine is map→t265 odom frame-> t265 pose frame → t265 link (robot base link and d400_camera_link are child of the t265_link) Thanks in advance for your help ! |

|

Administrator

|

Hi,

1. /t265/odom/sample for odom, /rtabmap/grid_map for the global costmap (/rtabmap/proj_map is deprecated, it is actually the same than grid_map to keep backward compatibility). 2. If you want a 2D map, it will use less computation power feeding the scans for mapping. However, it depends if you want to detect 3D obstacles or not. 3. For general usage of rtabmap with move_base, see http://wiki.ros.org/rtabmap_ros/Tutorials/MappingAndNavigationOnTurtlebot. There is also a config here where we used obstacles_detection nodelet point cloud outputs for the local costmap. 4. it looks ok. See also this tutorial where we used external VIO (attached to phone's frame) for turtlebot. cheers, Mathieu |

|

Hi Mathieu,

Thank you very much for your explanations and references , they have clarified my doubts. These days I have only the cameras with me so I cannot try the navigation part ; launching Rtabmap I find these two topics /rtabmap/cloud_ground /rtabmap/cloud_obstacles Are they corresponding to the ones you referenced in your answer ? /obstacles_cloud /ground_cloud Thanks Gian |

|

Administrator

|

No, /rtabmap/cloud_ground and /rtabmap/cloud_obstacles are segmented point cloud of the global map (they are outputs of rtabmap node). Don't use those clouds for the costmaps.

The other ground and obstacle clouds are outputs of obstacle_detection nodelet, generated with the current cloud of the camera. |

|

Thank you Mathieu . I have another doubt : I know that T265 calculates internally its SLAM using VIO and producing /t265/odom/sample topic relative to odom frame.

So the current pose should be determinated relatively to the origin of the odom frame. When the robot is kidnapped there is an "interruption" in the /t265/odom/sample odometry ; in this case, does rtabamp rely only on d435 images and on the map to relocalize the robot ? is the T265 odometry useless from that moment ? Thanks a lot ! GIan |

|

Administrator

|

If /t265 gets lost, there is no mechanism to recover correctly. With rtabmap's odometry, we send a null odometry value to notify that we cannot compute odometry anymore. We also send a large covariance when it restarts so that the rtabmap node knows that odometry has been reset and a new map is created. With T265, I didn't test that case (assuming that it is never lost!). However, if the robot is shutdown and restarted, rtabmap will localize in the previous map using D435, and still use T265 for odometry. cheers, Mathieu |

|

Hi Mathieu, I just found a thread of few days ago in the Realsense forum on github here , a developer has created a fork of the Realsense wrapper capable of relocalization.It exports the internal map of t265 and then it can reload it.In case it would work as expected is there a way Rtabmap could take in account of the time that t265 need to relocalize itself using this trick ?

Thanks Gian |

|

This post was updated on .

In reply to this post by matlabbe

Hello Mathieu,

I'm trying to adapt the nav3d launch file for the configuration d435+t265, but move_base doesn't receive the goal sent by rviz. After the mapping , I am launching rtabmap in localization mode from command line as in you Handheld Mapping tutorial : roslaunch rtabmap_ros rtabmap.launch args:=" --Mem/UseOdomGravity true --Optimizer/GravitySigma 0.3 " odom_topic:=/t265/odom/sample frame_id:=t265_link rgbd_sync:=true depth_topic:=/d400/aligned_depth_to_color/image_raw rgb_topic:=/d400/color/image_raw camera_info_topic:=/d400/color/camera_info approx_rgbd_sync:=false visual_odometry:=false localization:=true After I start the azimuth nav3 launch file modified by me for the d435 , only for navigation .I see the obastacles/ground cloud but robot doesn't move ( cmd_vel doesn't receive nothing)

<launch>

<arg name="cmd_vel_topic" default="/cmd_vel" />

<arg name="move_forward_only" default="false"/>

<arg name="odom_topic" default="/t265/odom/sample"/>

<!-- ROS navigation stack move_base -->

<group ns="planner">

<remap from="obstacles_cloud" to="/obstacles_cloud"/>

<remap from="ground_cloud" to="/ground_cloud"/>

<remap from="map" to="/rtabmap/grid_map"/>

<remap from="cmd_vel" to="/cmd_vel"/>

<remap from="/move_base_simple/goal" to="/rtabmap/goal_out"/>

<remap from="odom" to="/t265/odom/sample"/>

<node pkg="move_base" type="move_base" respawn="true" name="move_base" output="screen">

<param name="base_global_planner" value="navfn/NavfnROS"/>

<rosparam file="$(find rtabmap_ros)/launch/azimut3/config/costmap_common_params_2d.yaml" command="load" ns="global_costmap"/>

<rosparam file="$(find rtabmap_ros)/launch/azimut3/config/costmap_common_params_2d.yaml" command="load" ns="local_costmap" />

<rosparam file="$(find rtabmap_ros)/launch/azimut3/config/local_costmap_params.yaml" command="load" ns="local_costmap" />

<rosparam file="$(find rtabmap_ros)/launch/azimut3/config/global_costmap_params.yaml" command="load" ns="global_costmap"/>

<rosparam file="$(find rtabmap_ros)/launch/azimut3/config/base_local_planner_params.yaml" command="load" />

</node>

</group>

<group ns="camera">

<node pkg="nodelet" type="nodelet" name="nodelet_manager" args="manager" />

<node pkg="nodelet" type="nodelet" name="points_xyz_planner" args="load rtabmap_ros/point_cloud_xyz nodelet_manager">

<remap from="depth/image" to="/d400/aligned_depth_to_color/image_raw"/>

<remap from="depth/camera_info" to="/d400/color/camera_info"/>

<remap from="cloud" to="cloudXYZ" />

<param name="decimation" type="int" value="4"/>

<param name="max_depth" type="double" value="3.0"/>

<param name="voxel_size" type="double" value="0.02"/>

</node>

<node pkg="nodelet" type="nodelet" name="obstacles_detection" args="load rtabmap_ros/obstacles_detection nodelet_manager">

<remap from="cloud" to="cloudXYZ"/>

<remap from="obstacles" to="/obstacles_cloud"/>

<remap from="ground" to="/ground_cloud"/>

<param name="frame_id" type="string" value="base_link"/>

<param name="map_frame_id" type="string" value="map"/>

<param name="wait_for_transform" type="bool" value="true"/>

<param name="min_cluster_size" type="int" value="20"/>

<param name="max_obstacles_height" type="double" value="0.4"/>

</node>

</group>

</launch>

I left the costmap files almost as original ones, I modified only the odom frame ( t265_odom_frame) in the local costmap , and sensor_frame: d400_link and topic: /camera/cloudXYZ in the local_costmap_params_3d.yaml file local_costmap_params.yaml

costmap_params.yaml

global_frame: t265_odom_frame

robot_base_frame: base_footprint

update_frequency: 2

publish_frequency: 1

rolling_window: true

width: 3.0

height: 3.0

resolution: 0.025

origin_x: 0

origin_y: 0

plugins:

- {name: obstacle_layer, type: "costmap_2d::ObstacleLayer"}

- {name: inflation_layer, type: "costmap_2d::InflationLayer"}

local_costmap_params_2d.yaml

costmap_params_2d.yaml

global_frame: t265_odom_frame

robot_base_frame: base_footprint

update_frequency: 2.0

publish_frequency: 2.0

rolling_window: true

width: 4.0

height: 4.0

resolution: 0.025

origin_x: -2.0

origin_y: -2.0

plugins:

- {name: obstacle_layer, type: "costmap_2d::ObstacleLayer"}

- {name: inflation_layer, type: "costmap_2d::InflationLayer"}

obstacle_layer:

obstacle_range: 2.5

raytrace_range: 3.0

max_obstacle_height: 0.4

track_unknown_space: true

observation_sources: point_cloud_sensorA point_cloud_sensorB

point_cloud_sensorA: {

sensor_frame: base_footprint,

data_type: PointCloud2,

topic: obstacles_cloud,

expected_update_rate: 0.5,

marking: true,

clearing: true,

min_obstacle_height: 0.04

}

point_cloud_sensorB: {

sensor_frame: base_footprint,

data_type: PointCloud2,

topic: ground_cloud,

expected_update_rate: 0.5,

marking: false,

clearing: true,

min_obstacle_height: -1.0 # make usre the ground is not filtered

}

local_costmap_params_3d.yaml

costmap_params_3d.yaml

local_costmap:

global_frame: t265_odom_frame

robot_base_frame: base_footprint

update_frequency: 2.0

publish_frequency: 2.0

static_map: false

rolling_window: true

width: 4.0

height: 4.0

resolution: 0.025

origin_x: -2.0

origin_y: -2.0

#observation_sources: laser_scan_sensor point_cloud_sensor

observation_sources: point_cloud_sensor

# assuming receiving a cloud from rtabmap/obstacles_detection node

point_cloud_sensor: {

sensor_frame: d400_link

data_type: PointCloud2,

topic: /camera/cloudXYZ

expected_update_rate: 0.5,

marking: true,

clearing: true,

min_obstacle_height: -99999.0,

max_obstacle_height: 0.5}

Could you please advice how to correct my mistakes ? Thanks a lot Gian |

|

This post was updated on .

Hi Mathieu,

I managed to send the goal remapping : <remap from="move_base_simple/goal" to="/rtabmap/goal_out"/> but the robot stuck process[planner/move_base-1]: started with pid [14662] process[camera/nodelet_manager-2]: started with pid [14663] process[camera/points_xyz_planner-3]: started with pid [14664] process[camera/obstacles_detection-4]: started with pid [14669] [ INFO] [1601142016.025229104]: global_costmap: Using plugin "static_layer" [ INFO] [1601142016.055642825]: Requesting the map... [ INFO] [1601142016.764849831]: Resizing costmap to 133 X 182 at 0.050000 m/pix [ INFO] [1601142016.864754505]: Received a 133 X 182 map at 0.050000 m/pix [ INFO] [1601142016.876162808]: global_costmap: Using plugin "obstacle_layer" [ INFO] [1601142016.911972258]: Subscribed to Topics: point_cloud_sensorA point_cloud_sensorB [ INFO] [1601142017.061707808]: global_costmap: Using plugin "inflation_layer" [ INFO] [1601142017.268054604]: local_costmap: Using plugin "obstacle_layer" [ INFO] [1601142017.278878354]: Subscribed to Topics: point_cloud_sensorA point_cloud_sensorB [ INFO] [1601142017.455727035]: local_costmap: Using plugin "inflation_layer" [ INFO] [1601142017.662599476]: Created local_planner base_local_planner/TrajectoryPlannerROS [ INFO] [1601142017.732169394]: Sim period is set to 0.10 [ WARN] [1601142018.174572730]: The obstacles_cloud observation buffer has not been updated for 1.20 seconds, and it should be updated every 0.50 seconds. [ WARN] [1601142018.623082458]: The obstacles_cloud observation buffer has not been updated for 1.30 seconds, and it should be updated every 0.50 seconds. [ INFO] [1601142018.669252414]: Recovery behavior will clear layer 'obstacles' [ INFO] [1601142018.705379378]: Recovery behavior will clear layer 'obstacles' [ INFO] [1601142018.907379922]: odom received! [ERROR] [1601142018.983781094]: obstacles_detection: Parameter "min_cluster_size" has moved from rtabmap_ros to rtabmap library. Use parameter "Grid/MinClusterSize" instead. The value is still copied to new parameter name. [ERROR] [1601142018.989861745]: obstacles_detection: Parameter "max_obstacles_height" has moved from rtabmap_ros to rtabmap library. Use parameter "Grid/MaxObstacleHeight" instead. The value is still copied to new parameter name. [ WARN] [1601142019.123087664]: The obstacles_cloud observation buffer has not been updated for 1.80 seconds, and it should be updated every 0.50 seconds. [ WARN] [1601142019.174502512]: The obstacles_cloud observation buffer has not been updated for 2.20 seconds, and it should be updated every 0.50 seconds. [ WARN] [1601142056.503254267]: Clearing both costmaps to unstuck robot (3.00m). [ WARN] [1601142061.603023757]: Rotate recovery behavior started. [ERROR] [1601142064.153938723]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ WARN] [1601142069.254295305]: Clearing both costmaps to unstuck robot (1.84m). [ WARN] [1601142074.354312275]: Rotate recovery behavior started. [ERROR] [1601142074.354672342]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ERROR] [1601142079.355251789]: Aborting because a valid plan could not be found. Even after executing all recovery behaviors [ WARN] [1601142193.834880946]: Clearing both costmaps to unstuck robot (3.00m). [ WARN] [1601142198.934953312]: Rotate recovery behavior started. [ERROR] [1601142198.935298171]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ WARN] [1601142204.034891823]: Clearing both costmaps to unstuck robot (1.84m). [ WARN] [1601142209.134934133]: Rotate recovery behavior started. [ERROR] [1601142209.135588849]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ERROR] [1601142214.234863451]: Aborting because a valid plan could not be found. Even after executing all recovery behaviors [ WARN] [1601142275.442094095]: Clearing both costmaps to unstuck robot (3.00m). [ WARN] [1601142280.542109747]: Rotate recovery behavior started. [ERROR] [1601142280.542420438]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ WARN] [1601142285.642134215]: Clearing both costmaps to unstuck robot (1.84m). [ WARN] [1601142290.742234216]: Rotate recovery behavior started. [ERROR] [1601142290.742621160]: Rotate recovery can't rotate in place because there is a potential collision. Cost: -1.00 [ERROR] [1601142295.842110405]: Aborting because a valid plan could not be found. Even after executing all recovery behaviorsI tried to increase the min height of the obstacles in the costmaps but it didn't work,anyaway I still think that the robot see itself as an obstacke. Could you please advice to tune the costmaps ? Thanks, Gian |

|

Administrator

|

You can show the costmaps in RVIZ to debug if there is an obstacle on the robot. Is the map published by rtabmap looking good? If the robot detects itself with the camera, for local costmap you may check if there is a minimum range parameter, otherwise you may have to filter the clouds before sending them to move_base. For rtabmap, there is a Grid/RangeMin parameter and also Grid/FootprintHeight, Grid/FootprintLength and Grid/FootprintWidh parameters to setup a footprint to filter.

cheers, Mathieu |

|

This post was updated on .

Hello Mathieu,

Thank you very much for your advice , rviz is really friendly for debug and I managed to make it move playing with inflation radius and footprint size ! Regarding obstacles avoidance I used a small black table ( the d435 is placed 20 cm over the flat part of the table) and I found that : - The table is black and the camera doesn't see it - Covering it with a colored drape, if the robot start at least 50 cm far can see on time and avoid it , but if it is closer and start ( for example after a rotate_recovery ) it crash on the obstacle without hesitation ; I think that it is a problem in thelimited FOV of the camera and when it is close to a plane obsracle the segmentation take it like the ground. Is there rtabmap or costmap setting that can resolve these issues , maybe ? I tried to reduce decimation and modify many Grid params but there is no change : Obstacles nodelet detect only the small pointcloud of the frontal edge of the table (legs) and not its flat part I have tried also with the standalone d435i : - Your Handheld Mapping with the Madgwick filter - The approach with robot localization that you setup in https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/sensor_fusion.launch With both of them I had good mapping results but iat very low speed, ia faster movement make lose the odometry. The only modification I made is (for 2d differential robot) is param="odom0_config">[true, true, false, false, false, true, true, false, false, false, false, true, false, false, false] param="imu0_config">[false, false, false, false, false, true, false, false, false, false, false, true, true, false, false] And two_d_mode = true Could you give advices about tuning VIO parameters ? Thank you for your help and your patience ! Gian |

|

Hello Mathieu

Please I am looking forward your reply Thanks a lot ! gian |

|

Administrator

|

For the table disappearing from the local costmap as you are approaching, the voxel plugin (for PointCloud2) of the costmap should not clear obstacles not in field of view of the camera. The footprint set in costmap parameters may be too large, as with footprint_clearing_enabled parameter on, it could clear obstacles. You could also publish the voxel layer of the costmap with publish_voxel_map set to on (see http://wiki.ros.org/costmap_2d/hydro/obstacles#VoxelCostmapPlugin), it will be easier to see in rviz when the obstacle is cleared.

For pure VIO, you may look for some packages on internet. For D435i, if you already tried https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/test_d435i_vio.launch with rtabmap built with vins_fusion and don't have good results (make sure to cover the IR emitter to avoid adding false features to track), you may look for other standalone VIO approaches. They would give odometry topic that can be used in rtabmap afterwards. cheers, Mathieu |

|

This post was updated on .

Thank you very much Mathieu !

I used this Voxel plugin and indeed the obstacle is not cleared anymore ! {name: voxel_layer, type: "costmap_2d::VoxelLayer"} voxel_layer: origin_z: 0.0 z_resolution: 0.1 z_voxels: 10 but still there is the problem of detection : the robot detects the obstacle only if it is more than one meter far !!! I put also negative min_obstacle_height to take into account the distance of the camera from the base_link. This is the complete costmap :

footprint: [[ 0.15, 0.15], [-0.15, 0.15], [-0.15, -0.15], [ 0.15, -0.15]]

footprint_padding: 0.0

inflation_layer:

inflation_radius: 0.08 # 2xfootprint, it helps to keep the global planned path farther from obstacles

transform_tolerance: 2

obstacle_layer:

obstacle_range: 2.5

raytrace_range: 12

max_obstacle_height: 3

track_unknown_space: true

voxel_layer:

enabled: true

origin_z: 0.0

z_resolution: 0.1

z_voxels: 10

publish_voxel_map: true

footprint_clearing_enabled: false

observation_sources: point_cloud_sensorA point_cloud_sensorB

point_cloud_sensorA: {

sensor_frame: d400_link,

data_type: PointCloud2,

topic: /obstacles_cloud,

expected_update_rate: 0.5,

marking: true,

clearing: true,

min_obstacle_height: -0.7

}

point_cloud_sensorB: {

sensor_frame: d400_link,

data_type: PointCloud2,

topic: /ground_cloud,

expected_update_rate: 0.5,

marking: false,

clearing: true,

min_obstacle_height: -1.0 # make sure the ground is not filtered

}

these are the Grid parameters in rtabmap : <param name="Grid/MaxObstacleHeight" type="string" value="1" /> <param name="Grid/NoiseFilteringRadius" type="string" value="0.0"/> <param name="Grid/NoiseFilteringMinNeighbors" type="string" value="5.0"/> <param name="Grid/FromDepth" type="bool" value="true" /> <!--param name="Grid/DepthDecimation" type="string" value="4"/--> <param name="Grid/3D" type="bool" value="true" /> <param name="Grid/RayTracing" type="bool" value="true" /> <param name="Grid/NormalsSegmentation" type="string" value="true" /> <param name="Grid/MaxGroundHeight" type="string" value="0.05" /> <param name="Grid/MaxGroundAngle" type="string" value="10" /> <param name="Grid/RangeMax" type="string" value="1" /> <param name="Grid/FootprintHeight" type="string" value="0.1"/> <param name="Grid/FootprintLength" type="string" value="0.1"/> <param name="Grid/FootprintWidh" type="string" value="0.1"/> <param name="Grid/MapFrameProjection" type="bool" value="false"/> and these the obstacle layer :

<node pkg="nodelet" type="nodelet" name="nodelet_manager" args="manager" />

<node pkg="nodelet" type="nodelet" name="points_xyz_planner" args="load rtabmap_ros/point_cloud_xyz nodelet_manager">

<remap from="depth/image" to="/d400/aligned_depth_to_color/image_raw"/>

<remap from="depth/camera_info" to="/d400/color/camera_info"/>

<remap from="cloud" to="cloudXYZ" />

<param name="decimation" type="int" value="4"/>

<param name="max_depth" type="double" value="3.0"/>

<param name="voxel_size" type="double" value="0.05"/>

<param name="approx_sync" type="bool" value="false"/>

</node>

<node pkg="nodelet" type="nodelet" name="obstacles_detection" args="load rtabmap_ros/obstacles_detection nodelet_manager">

<remap from="cloud" to="cloudXYZ"/>

<remap from="obstacles" to="/obstacles_cloud"/>

<remap from="ground" to="/ground_cloud"/>

<param name="frame_id" type="string" value="base_link"/>

<param name="map_frame_id" type="string" value="map"/>

<param name="wait_for_transform" type="bool" value="true"/>

<param name="Grid/ClusterRadius" type="string" value="0.2"/>

<param name="Grid/GroundIsObstacle" type="string" value="false"/>

Do you have any advice ? Sorry for one more post off topic,this will be the last one off topic. PS regarding VIO , I didn't try VINS fusion , instead with d435i I tried https://github.com/introlab/rtabmap_ros/blob/master/launch/tests/sensor_fusion.launch , with robot localization inside, and it works for me at very low speed of the robot So I would ask you, in your experience , how would be possible to make the odometry more resilient to speed of the robot ( with some calibration of parameters or the camera/imu in robot localization or adding maybe Vins Fusion ...I try to guess !) Thanks a lot for all ! cheers gian |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |