How to process RGBD-SLAM datasets with RTAB-Map?


|

Yes, you're right,

I was using RTAB-Map with OpenCV 3, so I switched to OpenCV 2 and the results are now in color. Thanks :) |

|

Administrator

|

In reply to this post by matlabbe

Color also seems to work with OpenCV 3.1.0 on Windows 10:

|

|

In reply to this post by matlabbe

Thanks for this nice tutorial and discussions.

I tried freiburg2_pioneer_slam, but the odometry tracking gets lost and the 3D Map view stays red for a long time.

1. I synchronized the RGB and depth images.
2. For the calibration file, I updated it with the parameters from this page, but I don't know where to insert d0, d1, d2, d3, d4 in the calibration file.
3. Which RTAB-Map parameters can be changed to improve RGBD-SLAM?

Thanks for your support,
Mohammed |

|

Administrator

|

Hi,

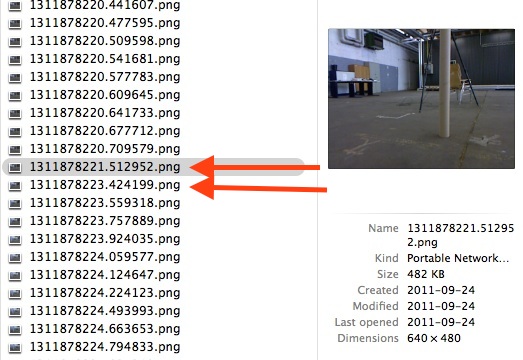

Just tried the dataset. With default parameters, the odometry gets lost exactly where there is a huge delay (~2 sec instead of the usual 30 msec) between two images in the dataset, so odometry cannot follow. Another observation is that there are not many discriminative visual features, and they are often >4 meters away. I therefore used 3D to 2D instead of 3D to 3D motion estimation (Vis/EstimationType=1) to make use of far features. I also set the GFTT quality level to 0.001 instead of 0.01 (GFTT/QualityLevel=0.001) to get more features. Here is the rgbdslam.yaml I used (don't forget to set the depth scale factor to 5):

    %YAML:1.0
    camera_name: rgbdslam
    image_width: 0
    image_height: 0
    camera_matrix:
       rows: 3
       cols: 3
       data: [ 525., 0., 3.1950000000000000e+02, 0., 525.,
           2.3950000000000000e+02, 0., 0., 1. ]
    distortion_coefficients:
       rows: 1
       cols: 5
       data: [ 0., 0., 0., 0., 0. ]
    rectification_matrix:
       rows: 3
       cols: 3
       data: [ 1., 0., 0., 0., 1., 0., 0., 0., 1. ]
    projection_matrix:
       rows: 3
       cols: 4
       data: [ 525., 0., 3.1950000000000000e+02, 0., 0., 525.,
           2.3950000000000000e+02, 0., 0., 0., 1., 0. ]
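To make the role of these numbers concrete, here is a minimal sketch of how a depth pixel is back-projected to a 3D point with the intrinsics above. It assumes the standard pinhole model and the TUM RGB-D convention that a raw 16-bit depth value of 5000 corresponds to 1 m (presumably the reason for the depth scale factor of 5 relative to a millimetre default). The function and constant names are illustrative only and are not part of RTAB-Map.

```python
# Intrinsics taken from the camera_matrix in rgbdslam.yaml above.
FX, FY = 525.0, 525.0
CX, CY = 319.5, 239.5

# Assumption: TUM RGB-D depth PNGs store 5000 units per meter.
DEPTH_UNITS_PER_METER = 5000.0


def back_project(u, v, raw_depth):
    """Convert pixel (u, v) with a raw depth value to a 3D point in meters.

    Uses the pinhole model without distortion, which matches the
    all-zero distortion_coefficients in the YAML above.
    """
    z = raw_depth / DEPTH_UNITS_PER_METER
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return (x, y, z)
```

A pixel at the principal point maps to a point straight ahead of the camera, so `back_project(319.5, 239.5, 5000)` lies 1 m along the optical axis.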

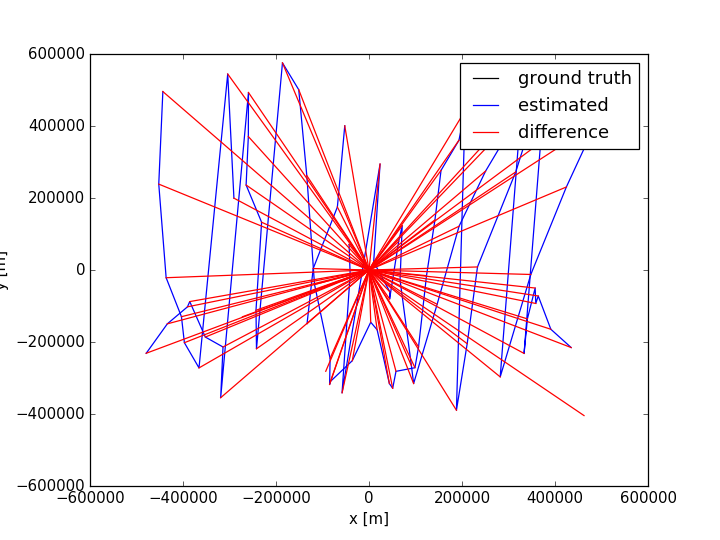

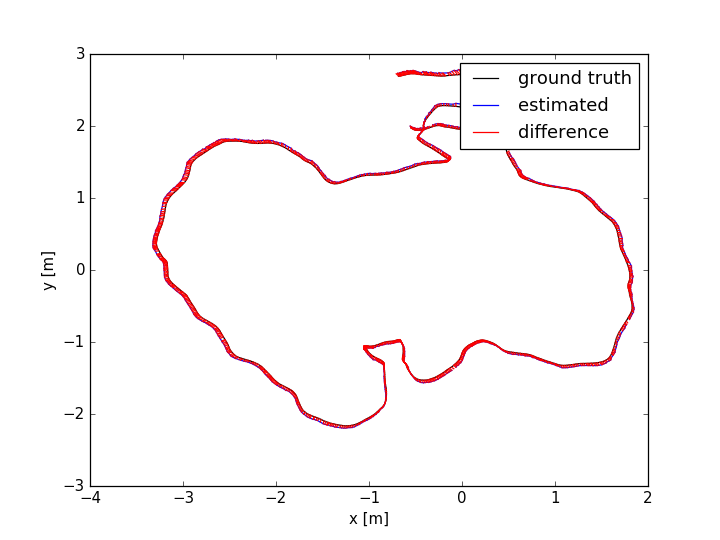

Results: the odometry sometimes gets lost but recovers on the next frame. As the camera is quite shaky and the visual features are far away, odometry can be bad in some places. The screenshots above show the full run (gray line is the ground truth) and the source parameters. cheers, Mathieu |
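The ~2 sec delay between two images mentioned in the reply above can be located before running odometry. This is a minimal sketch, assuming the dataset's rgb.txt lists one `timestamp filename` pair per line with `#` comment lines; the function names and the 0.5 s threshold are arbitrary choices for illustration.

```python
def read_timestamps(lines):
    """Extract the leading timestamp from each non-comment line."""
    stamps = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and header comments
        stamps.append(float(line.split()[0]))
    return stamps


def find_gaps(stamps, max_gap=0.5):
    """Return (start_time, gap_seconds) for consecutive stamps further apart than max_gap."""
    return [(a, b - a) for a, b in zip(stamps, stamps[1:]) if b - a > max_gap]


# Usage (path is an example):
# with open("rgb.txt") as f:
#     for start, gap in find_gaps(read_timestamps(f)):
#         print(f"gap of {gap:.2f} s after {start:.6f}")
```

Any gap reported here is a place where frame-to-frame odometry is likely to lose tracking regardless of parameter tuning.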

|

Thanks for your support, I will try it and update you.

I see you set the ground-truth file path too. Can you upload your config.ini, please? Can we pass the config.ini to RTAB-Map through the command line, like: ./rtabmap config.ini |

|

In reply to this post by matlabbe

Dear Mathieu

Can you clarify this for me: for loop closure detection you use SURF, and for RANSAC you use GFTT? Does that mean RTAB-Map extracts visual features twice? Also, can you show me where in the code RTAB-Map checks that the number of RANSAC inliers reaches the minimum needed to accept a loop closure? Thanks for your support |

|

Administrator

|

Hi,

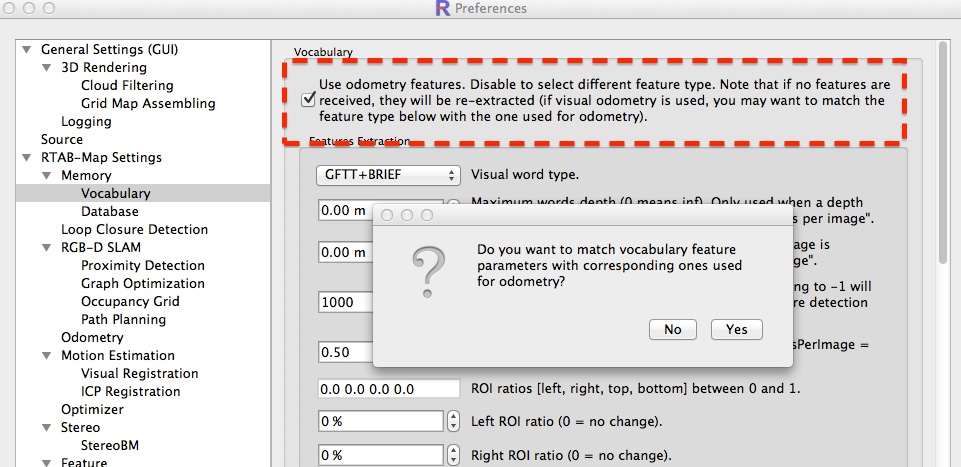

To be precise, it is SURF for loop closure detection and GFTT/BRIEF for visual odometry. It is possible to re-use the features already extracted by odometry by checking the "Use odometry features" box (see the screenshot above). The minimum RANSAC inliers check is here (for PnP RANSAC) and here (for PCL RANSAC transform estimation). cheers |

|

Thanks for your fast support

|

Re: Demo RTAB-Map on Turtlebot

|

This post was updated on .

In reply to this post by matlabbe

Hi

I tried to reproduce your results following the instructions, but whether I use your intrinsics (ROS default) or the ones from the page, I can't get any poses remotely near the ground truth. I also tried changing between different odometry strategies, with little change. (This is with TORO graph optimization, and I couldn't find the ignore covariance button. I also tried g2o and Vertigo, with similar results.) What could I look at to tune it?

    python evaluate_ate.py /home/antonioguerrero/ORB_SLAM2/datasets/rgbd_dataset_freiburg3_long_office_household/groundtruth.txt /home/antonioguerrero/Documents/RTAB-Map/poses_camera_f2f_0hz.txt --plot atertabmap0f2f.png --offset 0 --scale 1 --verbose

(This poses file is frame to frame at 0 Hz. I also tried 30 Hz and 20 Hz; the best result was frame to map at 0 Hz.)

    compared_pose_pairs 83 pairs
    absolute_translational_error.rmse 408041.042321 m
    absolute_translational_error.mean 379314.518259 m
    absolute_translational_error.median 375067.726482 m
    absolute_translational_error.std 150392.780599 m
    absolute_translational_error.min 53123.714529 m
    absolute_translational_error.max 770123.529968 m

(None of the results reach 90 pairs.)

Edit: I think it could be a problem with the image association, because I have been having trouble running the associate.py version. (I got it to run just once this morning and I don't know how, because even copying the exact command from the shell history doesn't work again.) This was resolved by leaving the directory and going back in. If it persists, download the dataset again and retry, verifying that you are in the right folder.

    ce0xx:~$ python associatedir.py /home/antonioguerrero/ORB_SLAM2/datasets/rgbd_dataset_freiburg3_long_office_household/rgb.txt /home/antonioguerrero/ORB_SLAM2/datasets/rgbd_dataset_freiburg3_long_office_household/depth.txt
    1341847980.722988 rgb/1341847980.722988.png 1341847980.723020 depth/1341847980.723020.png
    rgb/1341847980.722988.png
    1341847980.722988.png
    rgb_sync/1341847980.722988.png
    1341847980.722988.png
    Traceback (most recent call last):
      File "associatedir.py", line 135, in <module>
        shutil.move(" ".join(first_list[a]), "rgb_sync/" + " ".join(first_list[a]).split("/")[1])
      File "/usr/lib/python2.7/shutil.py", line 302, in move
        copy2(src, real_dst)
      File "/usr/lib/python2.7/shutil.py", line 130, in copy2
        copyfile(src, dst)
      File "/usr/lib/python2.7/shutil.py", line 82, in copyfile
        with open(src, 'rb') as fsrc:
    IOError: [Errno 2] No such file or directory: 'rgb/1341847980.722988.png' |

|
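For readers debugging the association step discussed in the post above, the matching itself can be reproduced without moving any files. The following is a simplified greedy matcher in the spirit of associate.py, not the original script; the function name and the 0.02 s tolerance are assumptions for illustration.

```python
def associate(rgb_stamps, depth_stamps, max_difference=0.02):
    """Pair RGB and depth timestamps that lie within max_difference seconds.

    All candidate pairs are sorted by time difference and accepted greedily,
    so each timestamp is used at most once and the closest pairs win.
    """
    candidates = sorted(
        (abs(r - d), r, d)
        for r in rgb_stamps
        for d in depth_stamps
        if abs(r - d) < max_difference
    )
    used_rgb, used_depth, matches = set(), set(), []
    for _, r, d in candidates:
        if r not in used_rgb and d not in used_depth:
            used_rgb.add(r)
            used_depth.add(d)
            matches.append((r, d))
    return sorted(matches)
```

With the first pair printed in the log above, `associate([1341847980.722988], [1341847980.723020])` returns that single pair, since the two stamps differ by well under 0.02 s. The brute-force pairing is quadratic, which is acceptable for a few thousand frames but not meant for large logs.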

Administrator

|

This post was updated on .

Are you referring to the instructions in this post?

|

Re: Demo RTAB-Map on Turtlebot

|

Yes, those are the steps I followed. I linked the other post because it is the one with the results, so they can be compared with mine at a glance.

|

|

Administrator

|

Hi,

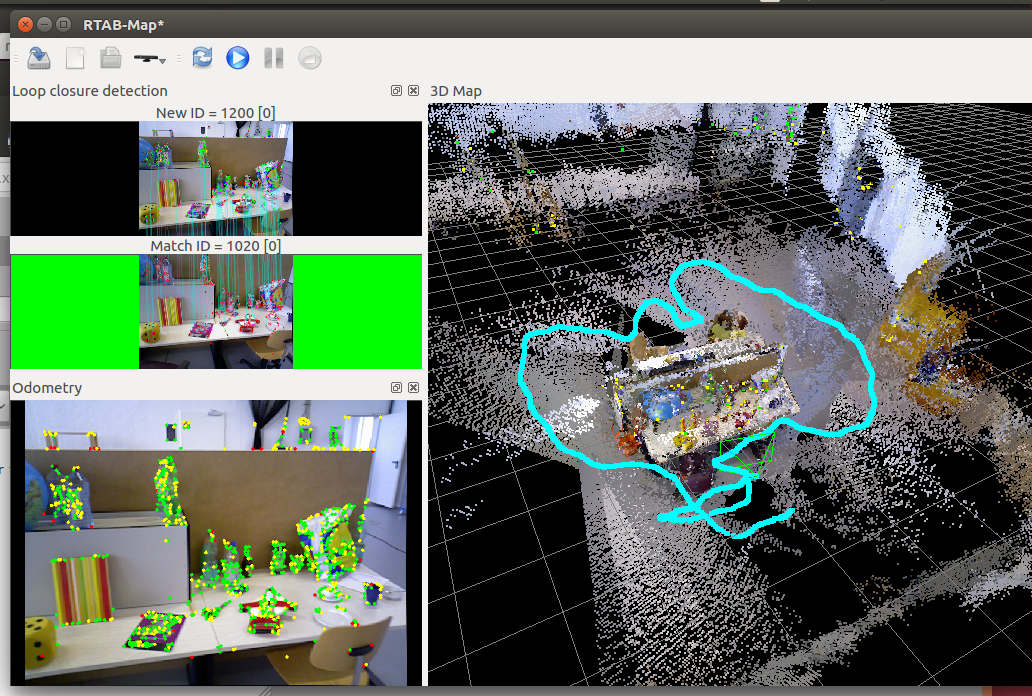

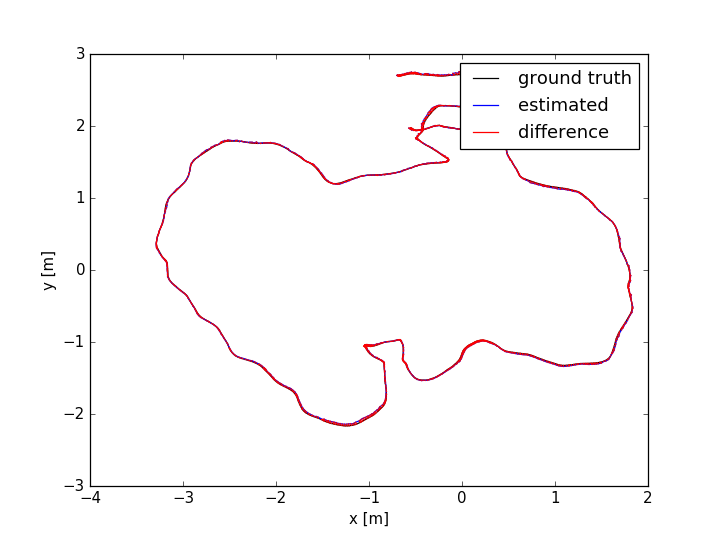

I followed the same steps as the post and they should still work with the latest versions. When the mapping is completed (here I activated intermediate node creation, as mentioned in the post), press Stop, then do Edit->"Download graph only" with the global map optimized option, and you should see something like the screenshot above.

To export poses in RGBD-SLAM format, do File->Export poses... and select RGB-D SLAM format. Save the file to poses.txt, for example. With the rgbd-slam evaluation tool:

    $ python evaluate_ate.py groundtruth.txt poses.txt --plot figure.png --offset 0 --scale 1 --verbose
    compared_pose_pairs 1199 pairs
    absolute_translational_error.rmse 0.035687 m
    absolute_translational_error.mean 0.034737 m
    absolute_translational_error.median 0.033668 m
    absolute_translational_error.std 0.008178 m
    absolute_translational_error.min 0.013810 m
    absolute_translational_error.max 0.084219 m

Note that based on the RGB calibration for fr3, you may use these calibration parameters instead (which fix the scale of the trajectory):

    %YAML:1.0
    ---
    camera_name: fr3
    image_width: 640
    image_height: 480
    camera_matrix:
       rows: 3
       cols: 3
       data: [ 5.3539999999999998e+02, 0., 3.2010000000000002e+02, 0.,
           5.3920000000000005e+02, 2.4759999999999999e+02, 0., 0., 1. ]

which gives:

    compared_pose_pairs 1252 pairs
    absolute_translational_error.rmse 0.022903 m
    absolute_translational_error.mean 0.018278 m
    absolute_translational_error.median 0.014916 m
    absolute_translational_error.std 0.013801 m
    absolute_translational_error.min 0.001312 m
    absolute_translational_error.max 0.079146 m
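As a reading aid for the statistics above, here is a minimal sketch of how such figures can be derived from matched position pairs. It assumes the two trajectories are already time-associated and expressed in the same frame; evaluate_ate.py additionally aligns the trajectories before measuring errors, which this sketch skips. The function name is illustrative.

```python
import math
import statistics


def ate_stats(estimated, ground_truth):
    """Summarize translational errors between matched 3D positions (in meters)."""
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    n = len(errors)
    mean = sum(errors) / n
    return {
        "pairs": n,
        "rmse": math.sqrt(sum(x * x for x in errors) / n),
        "mean": mean,
        "median": statistics.median(errors),
        # Population standard deviation (divide by n, not n - 1).
        "std": math.sqrt(sum((x - mean) ** 2 for x in errors) / n),
        "min": min(errors),
        "max": max(errors),
    }
```

With a population standard deviation, rmse² = mean² + std², which gives a quick consistency check on reported results: for the first run above, sqrt(0.034737² + 0.008178²) ≈ 0.035687, matching the reported rmse.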

|

|

In reply to this post by BerberechoRick

Hello,

I had the same issue (see the workaround below): when running the evaluate_ate.py script, I got values like the ones in your post:

    absolute_translational_error.rmse 408041.042321 m
    absolute_translational_error.mean 379314.518259 m
    absolute_translational_error.median 375067.726482 m
    absolute_translational_error.std 150392.780599 m
    absolute_translational_error.min 53123.714529 m
    absolute_translational_error.max 770123.529968 m

This is probably because I use another language on my PC, so the poses.txt file generated by rtabmap had "," as the decimal separator instead of ".". The groundtruth.txt file uses "." as the separator. As a workaround, I changed line 66 of associate.py (which is used by evaluate_ate.py), in the method "read_file_list", to:

    lines = data.replace(",", ".").replace(",", " ").replace("\t"," ").split("\n")

instead of the original version:

    lines = data.replace(",", " ").replace("\t"," ").split("\n")

Greets
Heike |
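An alternative to patching associate.py is to normalize the exported poses file once. This is a minimal sketch, assuming each pose line holds eight whitespace-separated numbers (`timestamp tx ty tz qx qy qz qw`) and that commas appear only as decimal marks; the function names are hypothetical.

```python
def parse_pose_line(line):
    """Parse one pose line, accepting ',' or '.' as the decimal mark."""
    fields = line.replace(",", ".").split()
    if len(fields) != 8:
        raise ValueError("expected 8 fields, got %d" % len(fields))
    return [float(f) for f in fields]


def normalize_poses(text):
    """Return the file content with decimal commas turned into dots.

    Comment lines starting with '#' are left untouched.
    """
    out = []
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            out.append(line)
        else:
            out.append(line.replace(",", "."))
    return "\n".join(out)
```

Writing the result of `normalize_poses` back to a new file keeps the original evaluation scripts unmodified.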

Re: Demo RTAB-Map on Turtlebot

|

This post was updated on .

Thank you Heike!

It works perfectly. If anyone needs to use the online method, you have to change the "," to "." in your poses.txt file. |

Re: Demo RTAB-Map on Turtlebot

|

In reply to this post by matlabbe

Hi Matt

It has been a long time since this post, but I have gotten back to working on this. With the associate.py fix I could get a more reasonable result:

    python evaluate_ate.py groundtruth.txt posesrtabmap_5-06_30hz.txt --verbose --plot atertabmap.png
    compared_pose_pairs 289 pairs
    absolute_translational_error.rmse 0.061848 m
    absolute_translational_error.mean 0.055130 m
    absolute_translational_error.median 0.047995 m
    absolute_translational_error.std 0.028032 m
    absolute_translational_error.min 0.007329 m
    absolute_translational_error.max 0.121167 m

As I'm working with an embedded PC, I realised that at 30 Hz it couldn't keep up with that input rate most of the time. After taking a median from the messages, the 27.3 frame rate reduced the errors even more:

    python evaluate_ate.py groundtruth.txt posesrtabmap_5-06.txt --verbose --plot atertabmapopt.png
    compared_pose_pairs 159 pairs
    absolute_translational_error.rmse 0.047323 m
    absolute_translational_error.mean 0.045411 m
    absolute_translational_error.median 0.043999 m
    absolute_translational_error.std 0.013314 m
    absolute_translational_error.min 0.012369 m
    absolute_translational_error.max 0.083712 m

I'm using the same YAML:

    camera_name: fr3
    image_width: 640
    image_height: 480
    camera_matrix:
       rows: 3
       cols: 3
       data: [ 5.3539999999999998e+02, 0., 3.2010000000000002e+02, 0., 5.3920000000000005e+02, 2.4759999999999999e+02, 0., 0., 1. ]

Given that, what would it mean that I get barely a tenth of the compared pairs in your results (despite creating intermediate nodes)? I didn't see much change in this respect when replicating the steps on a more powerful computer. Leaving the input rate at 0/inf, it reaches around 150 pairs, but with slightly worse results. |

|

Administrator

|

Not sure what parameters you used in the UI, but for convenience there is now a console application that can be used for benchmarking:

    $ rtabmap-rgbd_dataset \
          --Rtabmap/DetectionRate 2 \
          --RGBD/LinearUpdate 0 \
          --Mem/STMSize 30 \
          --Rtabmap/CreateIntermediateNodes true \
          rgbd_dataset_freiburg3_long_office_household
    Paths:
       Dataset name: rgbd_dataset_freiburg3_long_office_household
       Dataset path: rgbd_dataset_freiburg3_long_office_household
       RGB path: rgbd_dataset_freiburg3_long_office_household/rgb_sync
       Depth path: rgbd_dataset_freiburg3_long_office_household/depth_sync
       Output: rgbd_dataset_freiburg3_long_office_household
       Output name: rtabmap
       Skip frames: 0
       groundtruth.txt: rgbd_dataset_freiburg3_long_office_household/groundtruth.txt
    Parameters:
       Mem/STMSize=30
       RGBD/LinearUpdate=0
       Rtabmap/CreateIntermediateNodes=true
       Rtabmap/DetectionRate=2
       Rtabmap/PublishRAMUsage=true
       Rtabmap/WorkingDirectory=rgbd_dataset_freiburg3_long_office_household
    RTAB-Map version: 0.19.3
    [ WARN] (2019-06-06 11:20:34.879) CameraModel.cpp:286::load() Missing "distorsion_coefficients" field in "rgbd_dataset_freiburg3_long_office_household/rtabmap_calib.yaml"
    [ WARN] (2019-06-06 11:20:34.879) CameraModel.cpp:307::load() Missing "distortion_model" field in "rgbd_dataset_freiburg3_long_office_household/rtabmap_calib.yaml"
    [ WARN] (2019-06-06 11:20:34.879) CameraModel.cpp:323::load() Missing "rectification_matrix" field in "rgbd_dataset_freiburg3_long_office_household/rtabmap_calib.yaml"
    [ WARN] (2019-06-06 11:20:34.879) CameraModel.cpp:339::load() Missing "projection_matrix" field in "rgbd_dataset_freiburg3_long_office_household/rtabmap_calib.yaml"
    [ WARN] (2019-06-06 11:20:34.963) CameraImages.cpp:423::readPoses() 2485 valid poses of 2488 stamps
    Processing 2488 images...
    Iteration 1/2488: camera=39ms, odom(quality=0/981, kfs=1)=19ms, slam=19ms
    Iteration 2/2488: camera=9ms, odom(quality=660/919, kfs=1)=60ms, slam=0ms
    Iteration 3/2488: camera=9ms, odom(quality=682/981, kfs=1)=38ms, slam=0ms
    Iteration 4/2488: camera=9ms, odom(quality=645/978, kfs=1)=36ms, slam=0ms, rmse=0.000000m
    Iteration 5/2488: camera=9ms, odom(quality=670/973, kfs=1)=36ms, slam=0ms, rmse=0.000000m
    Iteration 6/2488: camera=9ms, odom(quality=671/977, kfs=1)=37ms, slam=0ms, rmse=0.000000m
    [...]
    Iteration 2485/2488: camera=9ms, odom(quality=413/975, kfs=282)=52ms, slam=1ms, rmse=0.020466m
    Iteration 2486/2488: camera=9ms, odom(quality=450/977, kfs=282)=53ms, slam=29ms, rmse=0.020484m
    Iteration 2487/2488: camera=10ms, odom(quality=434/975, kfs=282)=52ms, slam=1ms, rmse=0.020570m
    Iteration 2488/2488: camera=9ms, odom(quality=414/971, kfs=282)=51ms, slam=1ms, rmse=0.020587m
    Saving trajectory...
    Saving rgbd_dataset_freiburg3_long_office_household/rtabmap_poses.txt... done!
       translational_rmse= 0.022397 m
       rotational_rmse= 0.986424 deg
    Saving rtabmap database (with all statistics) to "rgbd_dataset_freiburg3_long_office_household/rtabmap.db"

Here is the saved rtabmap_poses.txt. There is also another tool to report results:

    $ rtabmap-report rgbd_dataset_freiburg3_long_office_household/rtabmap.db
    Database: rgbd_dataset_freiburg3_long_office_household/rtabmap.db
    (2488, s=1.000): error lin=0.022m (max=0.043m, odom=0.042m) ang=1.0deg, slam: avg=37ms (max=203ms) loops=21, odom: avg=49ms (max=70ms), camera: avg=9ms, map=376MB

cheers, Mathieu |
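When comparing several benchmark runs, the per-iteration lines printed by the console tool above can be collected into numbers. This is a minimal sketch that assumes the exact line layout shown in the output above (which may differ between RTAB-Map versions); the regular expression and function name are illustrative.

```python
import re

# Matches lines such as:
# Iteration 4/2488: camera=9ms, odom(quality=645/978, kfs=1)=36ms, slam=0ms, rmse=0.000000m
ITERATION_RE = re.compile(
    r"Iteration (\d+)/(\d+): camera=(\d+)ms, "
    r"odom\(quality=(\d+)/(\d+), kfs=(\d+)\)=(\d+)ms, "
    r"slam=(\d+)ms(?:, rmse=([\d.]+)m)?"
)


def parse_iteration(line):
    """Return a dict of the fields in one iteration line, or None if it does not match."""
    m = ITERATION_RE.search(line)
    if m is None:
        return None
    return {
        "iteration": int(m.group(1)),
        "total": int(m.group(2)),
        "camera_ms": int(m.group(3)),
        "inliers": int(m.group(4)),
        "features": int(m.group(5)),
        "keyframes": int(m.group(6)),
        "odom_ms": int(m.group(7)),
        "slam_ms": int(m.group(8)),
        # The rmse field is absent on the first iterations.
        "rmse_m": float(m.group(9)) if m.group(9) else None,
    }
```

Interpreting the two numbers after `quality=` as inliers and extracted features is an assumption made for the key names here.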

|

Hi,

I followed the instructions on this page; however, the start button is not active (Ubuntu, RTAB-Map version 0.17.6). Do you have any suggestions? When I use the test button in the source options, the camera viewer works well. In addition, the standalone application also works with the Kinect. Cheers, Ozgur |

|

Administrator

|

If you are using the standalone, the play button will be enabled after creating a new database.

|
