Losing odometry when doing fixed point scanning

|

This post was updated on .

Hello,

I have captured images by spinning an ASUS Xtion Pro on a tripod stopping every 45 degrees (I have fixed stops so it is accurate). First lap the camera points straight forward, then the next lap I'm pointing the camera up by 45 degrees and on the 3 lap I'm pointing the camera down by 45 degrees. Hence capturing 8*3=24 overlapping RGB images and the equal number of registered depth images. I want to run these as a dataset in RTABMap and yes I have read http://official-rtab-map-forum.206.s1.nabble.com/How-to-process-RGBD-SLAM-datasets-with-RTAB-Map-td939.html and the other guides and have successfully executed the freiburg examples. What I find is that RTABMap loses odometry very fast, it can't match features even between the two first frames. I have tried many combinations of Feature Matching algorithms and tried Frame->Frame and Frame->Map, Visualization and Geometry. (I have checked that the features in images match match outside of RTABMap using opencv) What am I fundamentally doing wrong here? I feel that I have enough overlap and features. If I make my own odometry (raw x,y,z,roll,pitch,yaw) file as I know my camera positions RTABMAP can run successfully. But I still feel that the point cloud and the meshes have a lot of artifacts, like many small stray (with lack of better word here) faces/vertices. I have some gut feeling that this could be related that no feature matching has not been done in this case. So my questions are: 1) How can I make RTABMap not lose odometry/tracking? 2) The artifacts are they from the lack of Feature Matching, if not why as I have photos that are correctly lit, exposed well and sharp? Thanks! |

|

Administrator

|

Hi,

1) What kind of features did you use in OpenCV? You may use the same for rtabmap (see Vis/FeatureType). However, for a setup like this, if you can know accurately the position/orientation of the camera at each capture, creating odometry as you did would be the best solution, as it would be invariant of the visual appearance of the environment (i.e., if there are visual features or not) or geometry if you use ICP-based registration. 2) Do you have a screenshot of these artefacts? If they are between frames, maybe the poses are not accurate enough (e.g., the pose should be the center of the RGB camera optical lens, not the center of the camera). Another problem could the scale of the depth image or the wrong focal distance in the camera calibration: even if the pose is accurate, if scale is off the reality, there will be undesirable results. A mis-registration between RGB and depth camera can also lead to some visual/geometry problems. If OpenNI2 driver is used, a recalibration can be done with calibration tool of RTAB-Map. cheers, Mathieu |

|

1) I have tried SIFT in OpenCV matching the overlaps with features and tried SIFT in RTABMap. I understand the invariant using my own odometry but one reason why

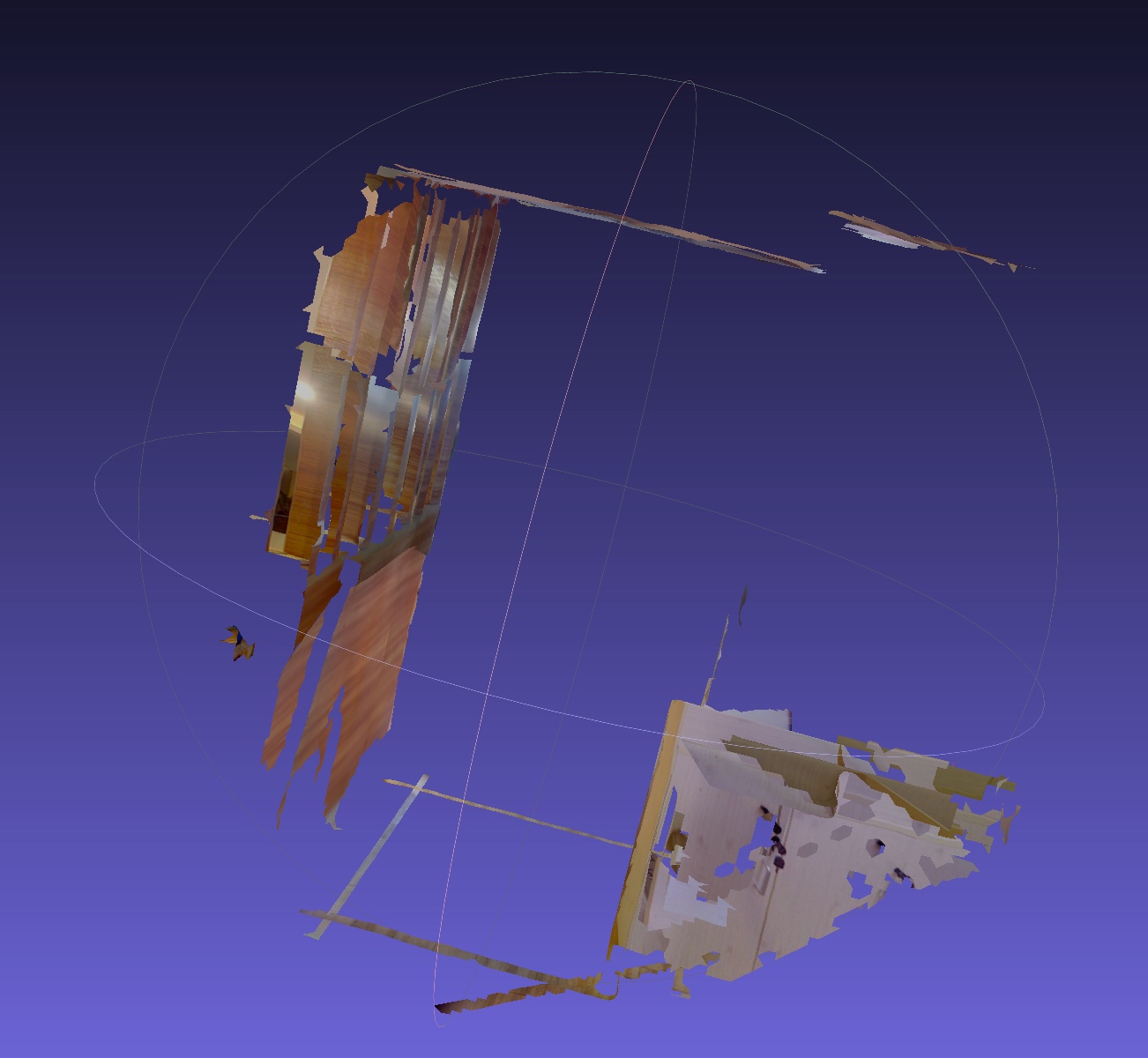

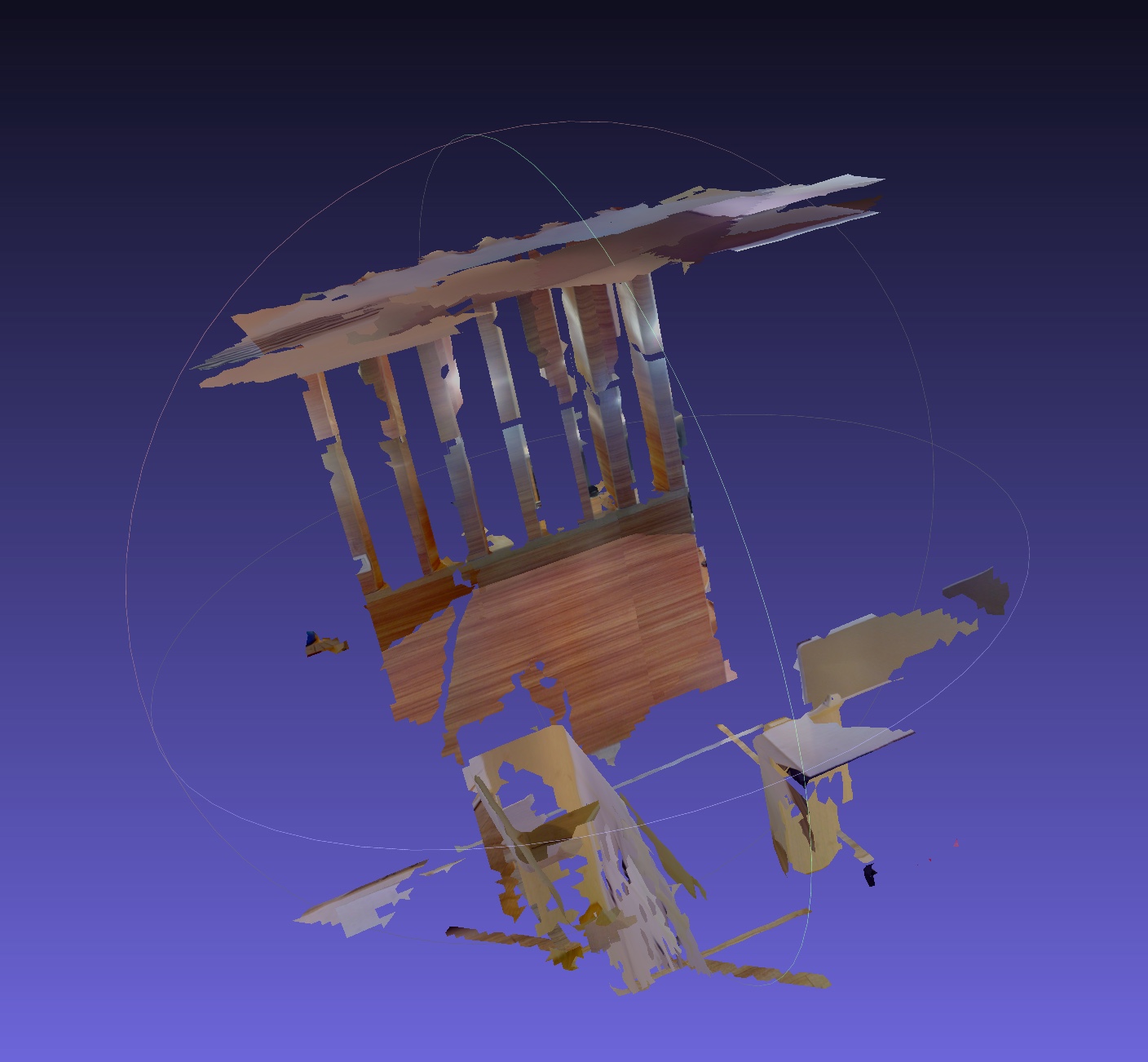

I might want to use features is that I want to scan in an industrial environment where it is not possible for human or robot to scan freely so I was hoping that I could use this type of rig to capture overlapping point clouds/meshes. I.e. that RTABMap would align the point clouds. Maybe this is not even possible directly in RTABMap? 2) You are correct about RGB center vs Camera center, my poses are center of the camera which of course is wrong So I will have to offset them, what are the units of x,y? (and z even though it is not important here), it doesn't seem to be mm or any other metric unit. Feel that this can have something to do with the splits in even surfaces as can be seen here and also the floor is just thick lines around the center (this is dense-possion.obj, the ply has a bit more floor but the same split in surface) and I also get quite a bit of swiss cheese holes, less here than in other shots. Used MeshLab for the screenshots.   |

|

Administrator

|

Knowing accuratly the pose of each capture, for a single scan (24 photos + 24 poses), it is possible to reconstruct the point cloud without RTAB-Map (without extracting features or doing fancy registration as clouds should be already aligned). If you you want to merge two scans (moving the tripod to another place), then RTAB-Map can help to register the two scans together (features matching and motion estimation). RTAB-Map cannot do this out-of-the-box, some coding is required to merge the 24 images together (single SensorData) before sending them to RTAB-Map. I suggest to debug a single scan before going into this.

The units should be meters, however if the focal distance of the camera is wrong, even if the poses are super accurate, the result will be bad. The camera should be then recalibrated. I don't know how you rotate the camera in pitch, but if the lens move along z axis, there should be some z offset. You can also use rtabmap-databaseViewer to debug the poses you are sending to rtabmap. Open Constraint View and browse the neighbor links, you will see the point clouds aligned two by two. Cheers, Mathieu |

|

Your suggestions have lead to better results so thanks a lot for your help.

Not afraid of coding so when you say to merge 24 images in a single SensorData do you refer to the one that is commented as // Multi-cameras RGB-D constructor? If so do I simply just make a 24 image wide collage (with no stitching or fancy overlapping) and set the transform of each in camera model vector? |

|

Administrator

|

Hi,

yes this constructor is for that. Examples for 4 cameras: 4x RGB merged into single cv::Mat: _____ _____ _____ _____ | | | | | | | | | | | | | | | |_____|_____|_____|_____| 4x Depth merged into single cv::Mat: _____ _____ _____ _____ | | | | | | | | | | | | | | | |_____|_____|_____|_____|With OpenCV, you can create a large image (rows, 4xcols), then use Mat.copy() to copy each image in the right ROI. Then for each cameraModel, set the appropriate localTransform. You can then use DatabaseViewer to see all projected cameras in the 3D View, with enabling frustums (right click on the view) to see the camera orientations. cheers, Mathieu |

|

Thank you this was fairly easy to get going when you confirmed my thinking but slightly later in the code flow when doing Rtabmap::process(...) for the multi camera SensorData I get slightly confused.

The only overloaded functions that accept SensorData also requires a pose, and naturally I assume it would be for the entire multicamera object and at least when there is only one it wouldn't matter. I can see in older code (from around 2014) that process existed with only SensorData but not today. Digging past the v0.15 rtabmap.h file I see that just process(SensorData(image, id), Transform()) is used But using only Transform() I get [ERROR] Rtabmap.cpp:945::process() RGB-D SLAM mode is enabled, memory is incremental but no odometry is provided. Image 0 is ignored! Initializing the transform to something sensible like 0,0,1,0, -1,0,0,0, 0,-1,0,0 gives cloud with <1000 points often < 400. (The data base viewer doesn't show anything valuable for this) What could be a sensible init of the pose for process(...) for this multicamera object? I'm loosely following the rgbddataset example. |

|

Administrator

|

Hi,

process(SensorData(image, id), Transform()) is for the loop closure detection only mode, original RTAB-Map. To do 3D mapping, a depth image is also required with a valid Transform, this is why you have the error. Set Transform::getIdentity() for the transform. Can you share the database of the single multi-camera SensorData processed? cheers, Mathieu |

|

Hi,

Sorry I don't have that database anymore it has been overwritten, essentially I think it was just an init of the database as nothing had been properly processed. Now I can process the multi camera but all the cameras are displaced and I'm setting the local transform for each camera the same way (x,y,z,roll,pitch,yaw) as I set my odometry before. I have not properly debugged this yet to see why yet. As for the depth factor it is still small mystery for me and I feel that I have read 'everything' about it. It is simply a factor (img /= depthFactor) to get the depth range right and in general appears to be around 5 for most examples. How do I know or calculate this factor if I'm using any of the depth formats from the openNI(2) API? |

|

Administrator

|

Hi,

Which depth factor do you refer? If it is the one set to CameraRGBDImages constructor, it should be 1 most of the time, unless the depth camera gives slightly off values in scale or that the depth images have already a fixed scale in it (like the TUM RGB-D dataset, which stated here at 5 in meters). - If your depth images have type CV_16UC1, it is assume that values are in mm (value 1500 = 1.5 meter). - If your depth images have type CV_32FC1, it is assume that values are in meters (value 1.5 = 1.5 meter). cheers, Mathieu |

|

Hi Mathieu,

Thank you for clarifying the depth factor. As for the Multi Camera I have implemented each camera successfully and the results are good when generating point clouds individually. But when trying to get the transformation between two multi camera captures the alignment is really off, xyz is not even near the offset and rpy makes the second multi camera capture roll, pitch and yaw heavily. e.g. (from pretty print xyz=-2.009485,1.608905,-0.912544 rpy=-0.358790,-0.188623,0.930016 For a simple test case I have taken two captures about 4.5 meters apart in an open space and the two points clouds overlap visibly and the images are also visibly together so I strongly think it should be possible to align properly. I have tried to individually feature match each individual image instread of the row of images but don't see much difference. What could be fundamentally wrong with the code below that first does feature matching and then motion estimate as you described previously in this thread? I get these warnings [ WARN] VWDictionary.cpp:525::clear() Visual dictionary would be already empty here (3401 words still in dictionary). [ WARN] VWDictionary.cpp:529::clear() Not indexed words should be empty here (3401 words still not indexed) [ WARN] VWDictionary.cpp:525::clear() Visual dictionary would be already empty here (2678 words still in dictionary). [ WARN] VWDictionary.cpp:529::clear() Not indexed words should be empty here (2678 words still not indexed) when running so I have my suspect there could be something wrong with the aggregateWordsAndP3f(...) function if not something else Thanks! #define DETECTOR_TYPE "SIFT" // Similar results with other types too // First Capture cv::Mat rowRgb0 = getRGBRow(my ix for first capture) // 24 or 8 images wide cv::Mat rowDepth0 = getDepthRow(my ix for first capture) data0 = SensorData(rowRgb0, rowDepth0, cameraModels); // models are ok as I can generate a good PC from each individual SensorData cv::Mat gray; cv::cvtColor(rowRgb0, gray, cv::COLOR_BGR2GRAY); ParametersMap detectParams = Parameters::getDefaultParameters(DETECTOR_TYPE); uInsert(detectParams, rtabmap::ParametersPair(rtabmap::Parameters::kKpMaxFeatures(), std::string("0"))); Feature2D *f2d0 = Feature2D::create(detectParams); std::vector<cv::KeyPoint> kp0 = f2d0->generateKeypoints(gray, rowDepth0); cv::Mat desc0 = f2d0->generateDescriptors(gray, kp0); std::vector<cv::Point3f> pts3d0 = f2d0->generateKeypoints3D(sdata, kp0); rtabmap.process(data0, Transform().getIdentity()); // Then second capture cv::Mat rowRgb1 = getRGBRow(my ix for second capture) // 24 or 8 images wide cv::Mat rowDepth1 = getDepthRow(my ix for second capture) data0 = SensorData(rowRgb1, rowDepth1, cameraModels); cv::cvtColor(rowRgb1, gray, cv::COLOR_BGR2GRAY); ParametersMap detectParams1 = Parameters::getDefaultParameters(DETECTOR_TYPE); uInsert(detectParams1, rtabmap::ParametersPair(rtabmap::Parameters::kKpMaxFeatures(), std::string("0"))); Feature2D *f2d1 = Feature2D::create(detectParams1); std::vector<cv::KeyPoint> kp1 = f2d->generateKeypoints(gray, rowDepth1); cv::Mat desc1 = f2d->generateDescriptors(gray, kp1); std::vector<cv::Point3f> pts3d1 = f2d->generateKeypoints3D(sdata, kp1); VWDictionary dictionary0; std::list<int> wordIds0 = dictionary0.addNewWords(desc0, 1); std::multimap<int, cv::Point3f> map0 = aggregateWordsAndP3f(wordIds0, pts3d0); VWDictionary dictionary1; std::list<int> wordIds1 = dictionary1.addNewWords(desc1, 1); std::multimap<int, cv::Point3f> map1 = aggregateWordsAndP3f(wordIds1, pts3d1); std::vector<int> matches; std::vector<int> inliers; Transform pose = util3d::estimateMotion3DTo3D( uMultimapToMapUnique(map0), uMultimapToMapUnique(map1), 4, 1.0, // Need to put this to 1.0 or 0.5 to get enough inliers 100, // 50, 100, 1000 gives simliar result 5, 0, &matches, &inliers); rtabmap.process(data1, pose); std::multimap<int, cv::Point3f> aggregateWordsAndP3f(const std::list<int> & wordIds, const std::vector<cv::Point3f> & keypoints) { std::multimap<int, cv::Point3f> words; std::vector<cv::Point3f>::const_iterator kpIter = keypoints.begin(); for(std::list<int>::const_iterator iter=wordIds.begin(); iter!=wordIds.end(); ++iter) { words.insert(std::pair<int, cv::Point3f >(*iter, *kpIter)); ++kpIter; } return words; } |

|

Administrator

|

Hi,

You don't need to extract features prior to send merged images to rtabmap. However to get transformation between two SensorData, use Odometry class:

cv::Mat rowRgb0 = getRGBRow(my ix for first capture) // 24 or 8 images wide

cv::Mat rowDepth0 = getDepthRow(my ix for first capture)

data0 = SensorData(rowRgb0, rowDepth0, cameraModels);

cv::Mat rowRgb1 = getRGBRow(my ix for first capture) // 24 or 8 images wide

cv::Mat rowDepth1 = getDepthRow(my ix for first capture)

data1 = SensorData(rowRgb1, rowDepth1, cameraModels);

ParametersMap detectParams;

detectParams.insert(ParametersPair(Parameters::kVisFeatureType(), "0")); // SURF feature for example

detectParams.insert(ParametersPair(Parameters::kVisMaxFeatures(), "0")); // no limit of features extracted

detectParams.insert(ParametersPair(Parameters::kVisEstimationType(), "0")); // should be 0 for multi cameras

OdometryF2M odom(detectParams);

Rtabmap rtabmap;

rtabmap.init(detectParams, "myMap.db");

OdometryInfo info;

Transform pose0 = odom.process(data0, &info);

printf("Pose=%s\n", pose0.prettyPrint().c_str()); // would be always identity for the first frame

rtabmap.process(data0, pose0, info.reg.covariance);

Transform pose1 = odom.process(data1, &info);

printf("Pose=%s\n", pose1.prettyPrint().c_str());

rtabmap.process(data1, pose1, info.reg.covariance);

|

|

Thank you, this works but I have some questions after doing a few rounds of experimentation

1) I still have to set inlier distance to a large value like 1.0 or drop the number of inliers to a low value like 4. I feel that this is wrong? 2) For the Frame to map, is it in any way possible to add/process sensor data to a location in the map that is in sight of previously scanned data but out of sight from the previous position? When I try this it often just get discarded. |

|

Administrator

|

Hi,

1) Do you have an example of database with two scans registered? 2) Odometry always assumes that consecutive scans can be registered together. If we want to register a new scan to map and that scan is not overlapping the previous one added, it could be possible to do by triggering a new map in rtabmap, then sending the new scan with Identity transform. For example, assuming that we cannot ensure that all scans overlap with the previous one, but at least they all overlap to one another scan in the map: cv::Mat rowRgb0 = getRGBRow(my ix for first capture) // 24 or 8 images wide cv::Mat rowDepth0 = getDepthRow(my ix for first capture) data0 = SensorData(rowRgb0, rowDepth0, cameraModels); cv::Mat rowRgb1 = getRGBRow(my ix for first capture) // 24 or 8 images wide cv::Mat rowDepth1 = getDepthRow(my ix for first capture) data1 = SensorData(rowRgb1, rowDepth1, cameraModels); cv::Mat rowRgb2 = getRGBRow(my ix for first capture) // 24 or 8 images wide cv::Mat rowDepth2 = getDepthRow(my ix for first capture) data1 = SensorData(rowRgb2, rowDepth2, cameraModels); ParametersMap detectParams; detectParams.insert(ParametersPair(Parameters::kVisFeatureType(), "0")); // SURF feature for example detectParams.insert(ParametersPair(Parameters::kVisMaxFeatures(), "0")); // no limit of features extracted detectParams.insert(ParametersPair(Parameters::kVisEstimationType(), "0")); // should be 0 for multi cameras detectParams.insert(ParametersPair(Parameters::kKpDetectorStrategy(), "0")); // use same features for loop closure detection than for motion estimation (SURF here) Rtabmap rtabmap; rtabmap.init(detectParams, "myMap.db"); rtabmap.process(data0, Transform::getIdentity()); rtabmap.triggerNewMap(); rtabmap.process(data1, Transform::getIdentity()); rtabmap.triggerNewMap(); rtabmap.process(data2, Transform::getIdentity()); ....Note that I never tried that, so maybe it is not 100% sure that each scan will be correctly merged with the previous maps. cheers, Mathieu |

|

Hi

1) I PMed a link to a database. I sometimes succeed using distance 0.1 and but not often and I feel that the distance is often estimated to short. The example database is extreme as it comes back with just a few cm from the pose calculation, it is captured in a fairly large room but I seen similar behavior in smaller (but not cramped) spaces too. 2) I will try this |

|

Administrator

|

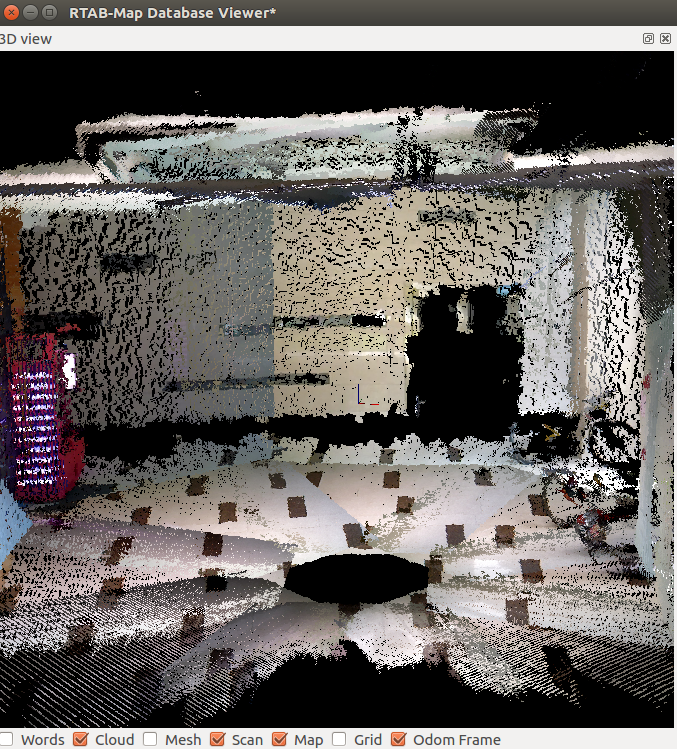

Hi,

I think you are close to have something working. I think the low number of inliers is mainly because of the not enough accurate local transforms. I am not sure how the pattern on the floor should look like, but it seems awkward from the single assembled scan:  The rectangles are not all perfectly aligned, this will make extracting visual features with a lot more error, then difficult to match if the geometry (distance between same visual features) between scans changes. The code I tried:

ParametersMap detectParams;

detectParams.insert(ParametersPair(Parameters::kVisFeatureType(), "0")); // SURF feature for example

detectParams.insert(ParametersPair(Parameters::kVisMaxFeatures(), "24000"));

detectParams.insert(ParametersPair(Parameters::kVisEstimationType(), "0")); // should be 0 for multi cameras

detectParams.insert(ParametersPair(Parameters::kVisInlierDistance(), "0.1"));

detectParams.insert(ParametersPair(Parameters::kKpDetectorStrategy(), "0")); // use same features for loop closure detection than for motion estimation (SURF here)

detectParams.insert(ParametersPair(Parameters::kSURFHessianThreshold(), "100"));

detectParams.insert(ParametersPair(Parameters::kRtabmapLoopThr(), "0"));

detectParams.insert(ParametersPair(Parameters::kRGBDOptimizeMaxError(), "0"));

DBReader reader("/home/mathieu/Downloads/multicamera2_0.1_4.db");

reader.init();

Rtabmap rtabmap;

rtabmap.init(detectParams, "test.db");

SensorData data = reader.takeImage();

rtabmap.process(data, Transform::getIdentity());

rtabmap.triggerNewMap();

data = reader.takeImage();

rtabmap.process(data, Transform::getIdentity());

rtabmap.close();

It fails to find enough inliers. If I increase "Vis/InlierDistance", it will accept some transforms but they are very wrong. Having better aligned scans will help to have more inliers with low "Vis/InlierDistance", thus having good motion estimation. cheers, Mathieu |

|

1) I found some slight errors in my odometry now it looks much better, thank you.

2) For the triggerNewMap example above, I can't get it to work. I always only get the last data added when I save a cloud and opening the database in rtabmap only shows the last. But the database viewer shows all the added nodes. I can also see that the processing time is far to short for have done any merging. Generating a graph in the database viewer gives me a 14 byte file with this content: digraph G { } <and an empty line> Some basic misunderstanding of the API from my side? |

|

Administrator

|

Hi,

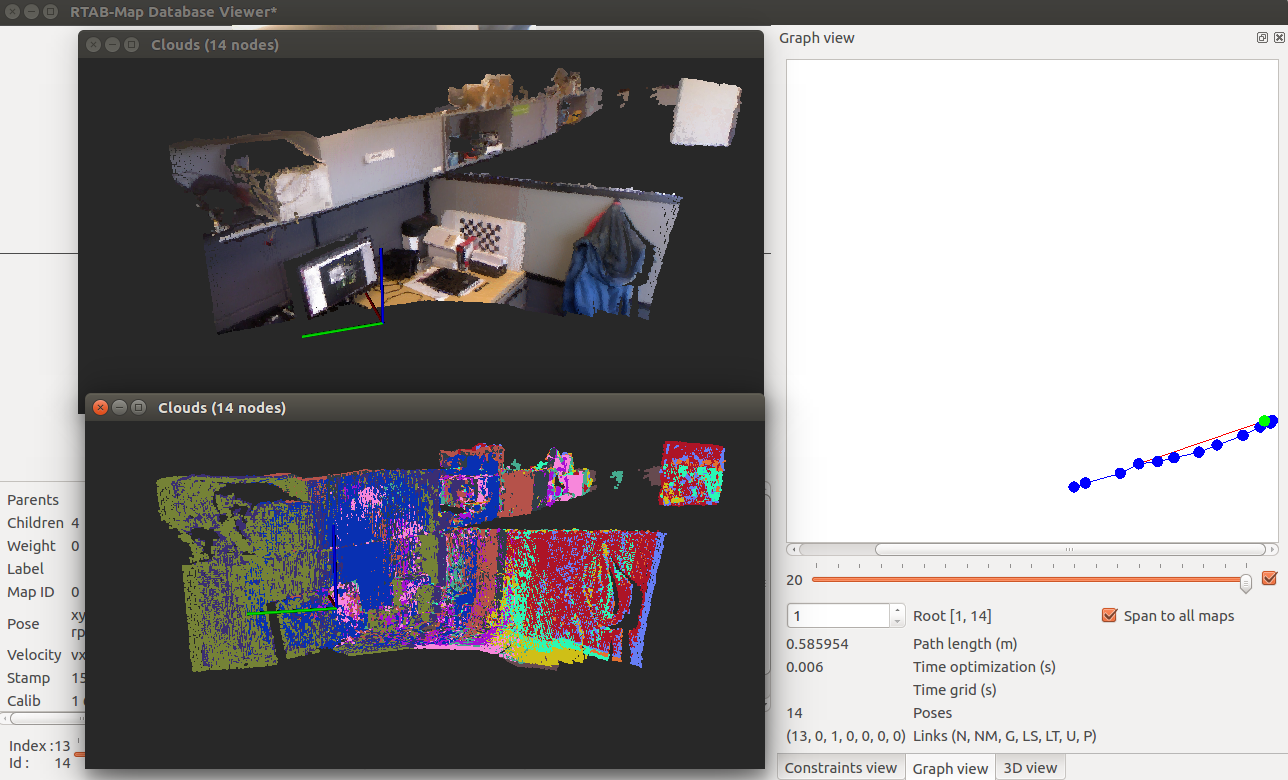

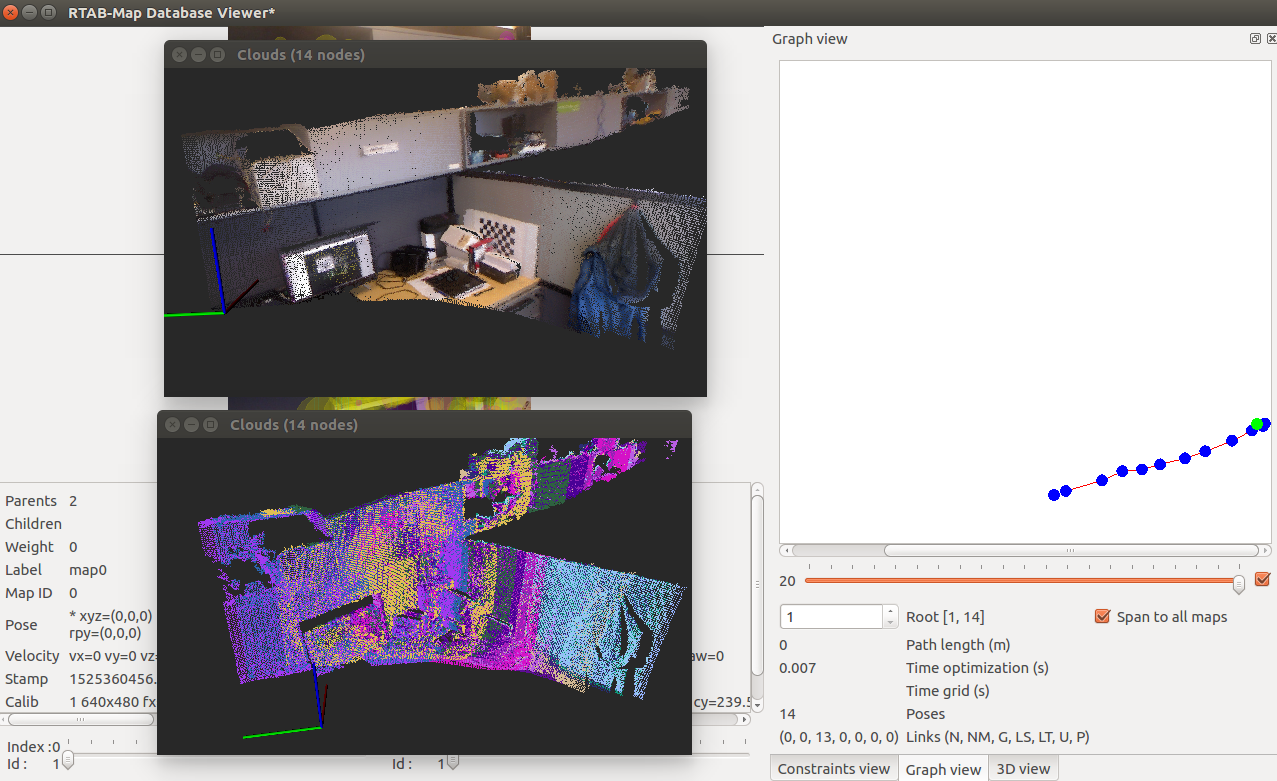

If you add the following at the beginning of the main, you may have more info about why they are not merged: ULogger::setType(ULogger::kTypeConsole); ULogger::setLevel(ULogger::kInfo); In my triggerNewMap example above, as I said, it doesn't work because it cannot find transformation between the scans for the reasons I stated. As it cannot find transformations, it just adds the scans without linking them in the database. You can try the Constraints View in database viewer to add links between the scans. The difference between the databases is that in the first one there is only one map, while in the second there are 14 maps (1 for each scan) merged. What triggerNewMap example does:

When scans are not linked, database viewer only shows the latest one added in the graph. The Constraints View can be used to try to compute explicitly a transform between two scans. Use File->Export poses... to export poses. Exporting the graph in Dot format requires at least one link, and it only shows relations between nodes, not actual metric poses. Note that Export poses... action export poses of the current graph in Graph view. If the database contains many session not connected, you can change the root to export corresponding map poses. Below is an example using the odometry approach and the multi-session approach (triggerNewMap). I recorded a small database (desktop1Hz.db, 3MB) with single camera just to show a working example. The input database has single camera scan, but replacing by your multi-camera scan should work too. int main(int argc, char * argv[]) { ULogger::setType(ULogger::kTypeConsole); ULogger::setLevel(ULogger::kWarning); // Set to kInfo or kDebug to have more info on terminal ParametersMap detectParams; detectParams.insert(ParametersPair(Parameters::kVisFeatureType(), "0")); // SURF feature for example detectParams.insert(ParametersPair(Parameters::kVisMaxFeatures(), "24000")); detectParams.insert(ParametersPair(Parameters::kVisEstimationType(), "0")); // should be 0 for multi cameras detectParams.insert(ParametersPair(Parameters::kVisInlierDistance(), "0.1")); detectParams.insert(ParametersPair(Parameters::kKpDetectorStrategy(), "0")); // use same features for loop closure detection than for motion estimation (SURF here) detectParams.insert(ParametersPair(Parameters::kSURFHessianThreshold(), "100")); detectParams.insert(ParametersPair(Parameters::kRGBDOptimizeMaxError(), "0")); detectParams.insert(ParametersPair(Parameters::kRGBDLinearUpdate(), "0")); // add scan even if we don't move detectParams.insert(ParametersPair(Parameters::kOdomGuessMotion(), "false")); // don't assume constant motion DBReader reader(UDirectory::homeDir()+"/Downloads/desktop1Hz.db", 0, true); reader.init(); Rtabmap rtabmap; // Odometry approach (consecutive scans should overlap!) printf("Odometry approach... \n"); UFile::erase("testOdom.db"); rtabmap.init(detectParams, "testOdom.db"); SensorData data = reader.takeImage(); OdometryF2M odom(detectParams); while(data.isValid()) { OdometryInfo info; Transform pose = odom.process(data, &info); if(!pose.isNull()) { rtabmap.process(data, pose, info.reg.covariance); } else { printf("Odometry is lost, cannot add scan to map!\n"); } data = reader.takeImage(); } rtabmap.close(); printf("Closed testOdom.db\n"); // Multi-session approach without odometry (scans should overlap at least to one other scan to merge all maps together) printf("Multi-session approach... \n"); UFile::erase("testMultiSession.db"); detectParams.insert(ParametersPair(Parameters::kRtabmapLoopThr(), "0")); // bypass loop closure hypotheses, compute always loop closure with highest likelihood location rtabmap.init(detectParams, "testMultiSession.db"); reader.init(); data = reader.takeImage(); while(data.isValid()) { rtabmap.process(data, Transform::getIdentity()); if(rtabmap.getWMSize() != 0 && rtabmap.getStatistics().loopClosureId() == 0) { printf("Could not merge scan to previous scans!\n"); } rtabmap.triggerNewMap(); data = reader.takeImage(); } rtabmap.close(); printf("Closed testMultiSession.db\n"); return 0; } Here are screenshots showing the resulting databases (with database viewer). On the Graph view, the blue links are odometry links, while the red links are loop closure links. The clouds are shown with Edit->View clouds... testOdom.db: testMultiSession.db:

testMultiSession.db:

cheers,

cheers,Mathieu |

|

This post was updated on .

Hi,

I got this to work using Loop Closure and Multi Camera, but I have noticed that when there are a lot of inliers (like 1000+) I get the wrong yaw, x and y feels correct though. In the example below, the pose in bold gets the wrong yaw but most likely got the correct x,y. 0.701933 is close to 0.68346 but 0.701933 is wrong with about 45 degrees. Interestingly my multicamera sensor data is separated by 45 degrees (*8=360) What can be done to prevent this? The poses following the wrong one 7835:[DEBUG] (2018-07-31 13:59:24.171) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=683/1406 tf=xyz=-0.582010,-0.491917,-0.007348 rpy=-0.003194,0.010166,-0.895116 7906:[DEBUG] (2018-07-31 13:59:26.605) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=682/1387 tf=xyz=-0.581125,-0.493516,-0.007377 rpy=-0.003855,0.009947,-0.895146 8126:[DEBUG] (2018-07-31 14:01:55.189) Rtabmap.cpp:1245::process() Added pose xyz=5.400993,1.651987,0.000000 rpy=0.000000,-0.000000,0.684386 (odom=xyz=0.000000,0.000000,0.000000 rpy=0.000000,-0.000000,0.000000) 8248:[DEBUG] (2018-07-31 14:01:57.683) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=1360/2034 tf=xyz=-0.373358,0.225694,-0.001120 rpy=-0.007259,0.001301,-0.017608 8321:[DEBUG] (2018-07-31 14:02:00.082) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=1322/1953 tf=xyz=-0.373237,0.225743,-0.000976 rpy=-0.007443,0.001289,-0.017557 8539:[DEBUG] (2018-07-31 14:04:30.169) Rtabmap.cpp:1245::process() Added pose xyz=5.831758,1.720608,0.000000 rpy=0.000000,-0.000000,0.701933 (odom=xyz=0.000000,0.000000,0.000000 rpy=0.000000,-0.000000,0.000000) 8665:[DEBUG] (2018-07-31 14:04:32.960) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=328/972 tf=xyz=-0.935263,0.004363,-0.003679 rpy=-0.003178,-0.003636,0.045954 8734:[DEBUG] (2018-07-31 14:04:35.310) util3d_registration.cpp:219::transformFromXYZCorrespondences() RANSAC inliers=306/952 tf=xyz=-0.935586,0.004978,-0.003911 rpy=-0.003801,-0.003522,0.046266 8955:[DEBUG] (2018-07-31 14:06:25.918) Rtabmap.cpp:1245::process() Added pose xyz=6.576385,2.287081,0.000000 rpy=0.000000,-0.000000,0.655680 (odom=xyz=0.000000,0.000000,0.000000 rpy=0.000000,-0.000000,0.000000) |

|

Administrator

|

With rtabmap-databaseviewer, can you show with Constraints view that constraint computed? If you have a small database to share, I could look more in depth.

|

| Free forum by Nabble | Edit this page |