Hi Mathieu, thank you very much for the amazing work! I am trying to integrate Hector_slam with RTABMAP. I modified your "demo_hector_mapping.launch" file, replacing the RGB and depth topics with my own. The devices I used were a Kinect v2 and an Rplidar A2.

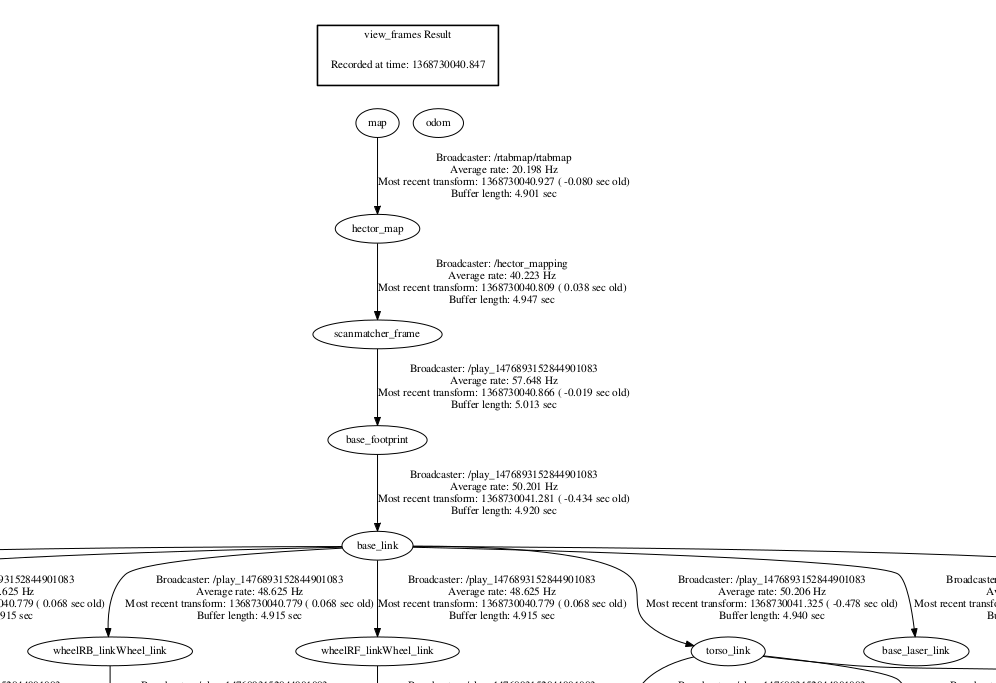

I noticed that for the example dataset you provided ("demo_mapping.bag"), the frames are like this:

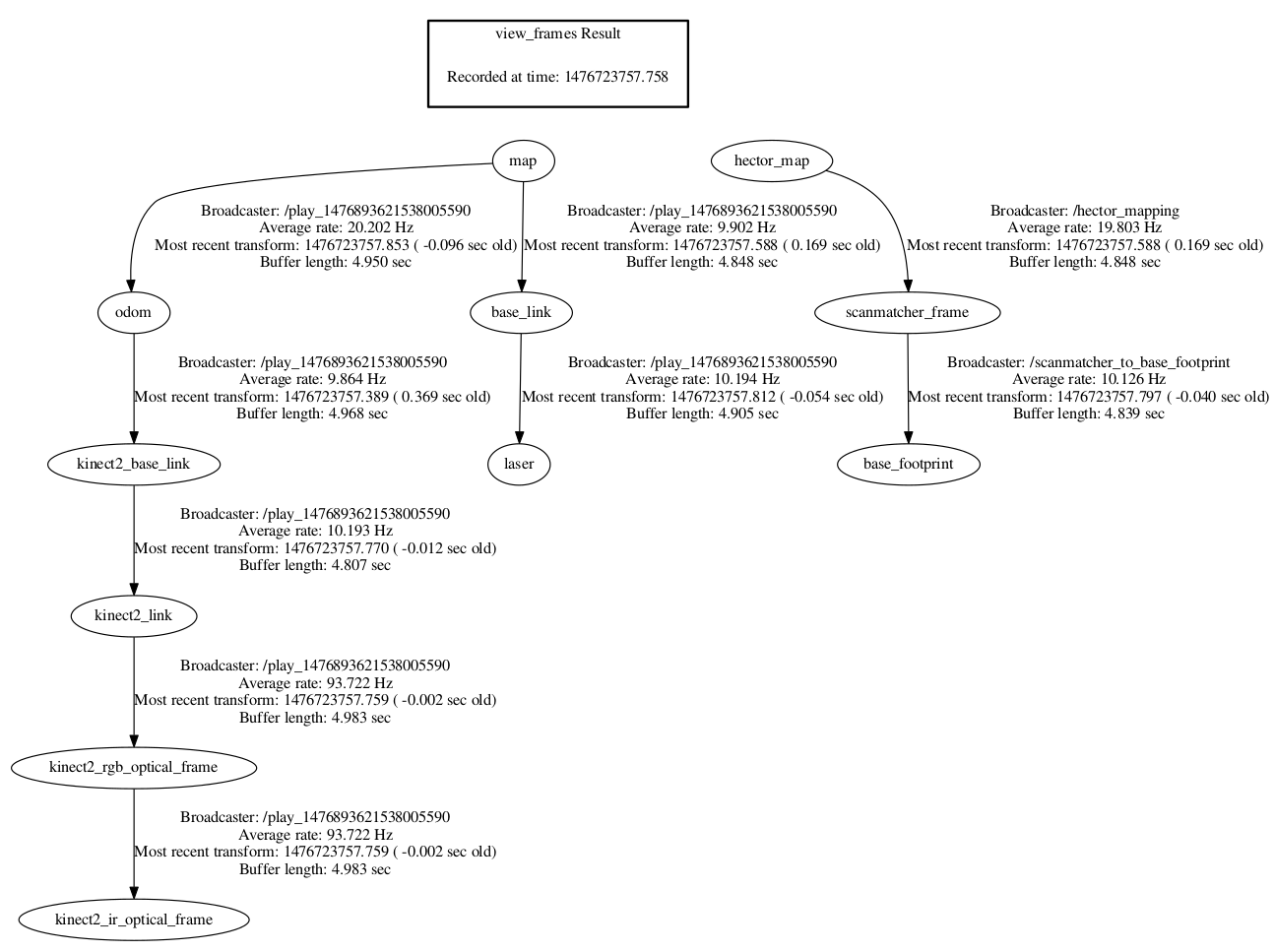

However, when I use my "demo_hector_mapping.launch" file (everything is the same except the specific topics) and run it on my own data, the frames are like this:

My question is: why are my frames so different from yours? Are there any other parameters I need to set outside of the "demo_hector_mapping.launch" file?

Or is there something I should pay attention to during data collection? If so, could you describe how you collected your RGB-D data and your lidar data? Thanks.

Here is my revised "demo_hector_mapping.launch" file:

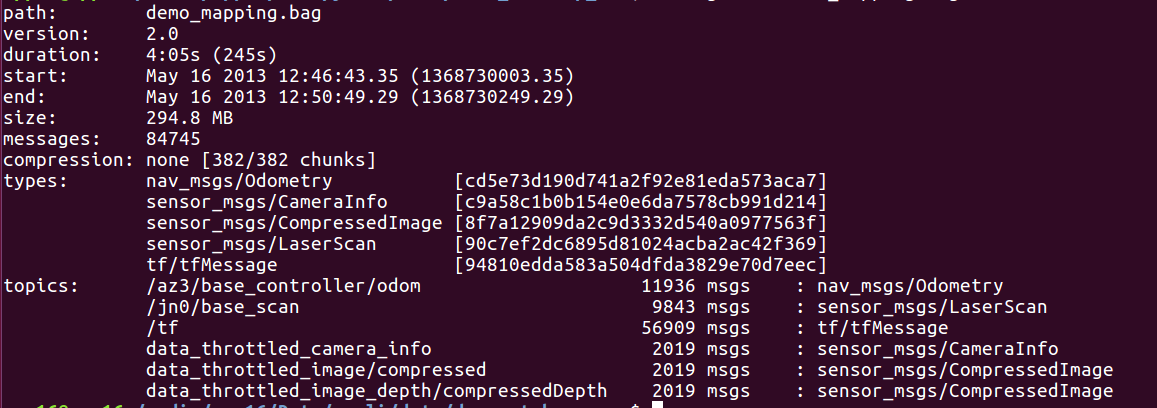

demo_hector_mapping_new_01.launch

Here is the information for your "demo_mapping.bag" file:

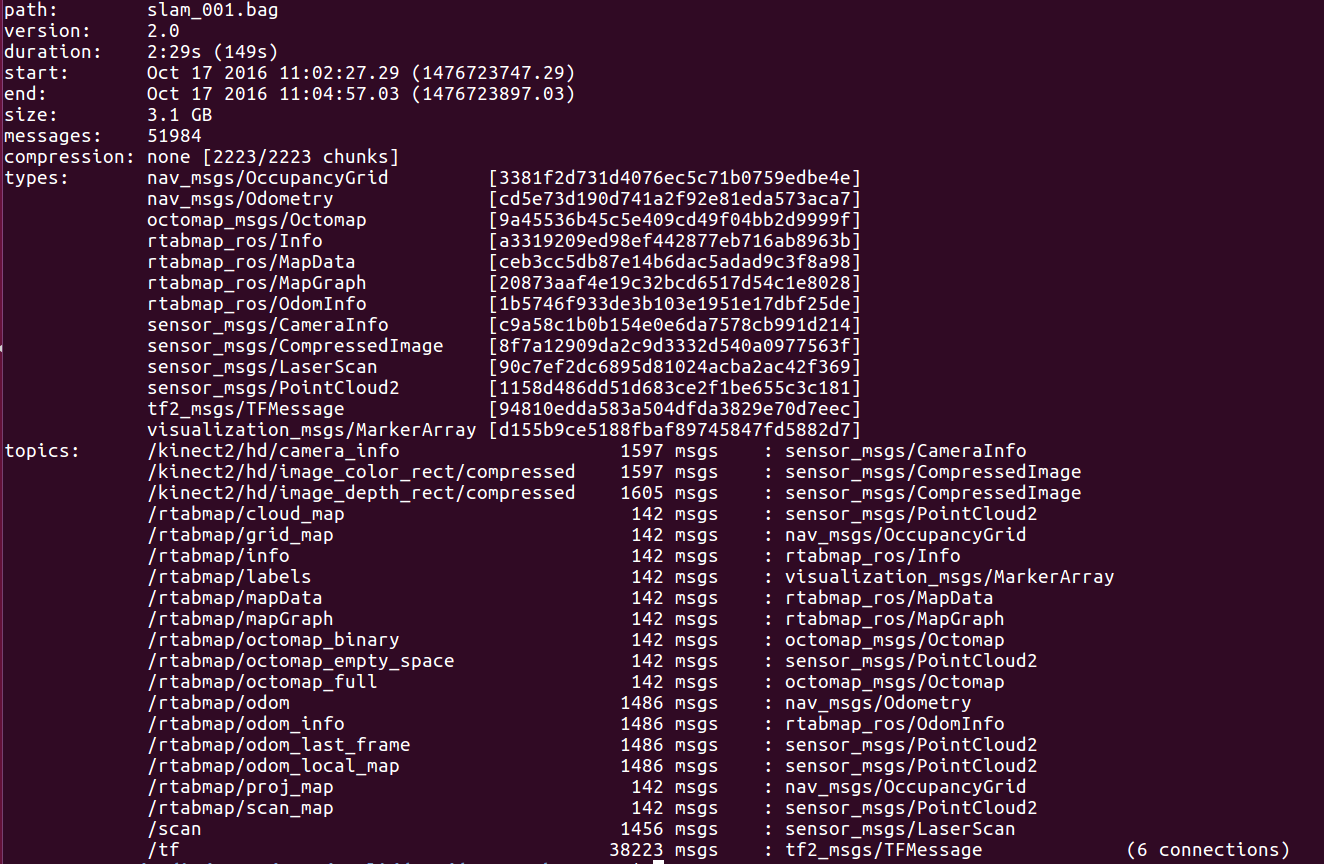

and here is that of my data:

Thanks,

You Li