Rtabmap mapping with two cameras

1234

1234

Re: Rtabmap mapping with two cameras

|

Hello,

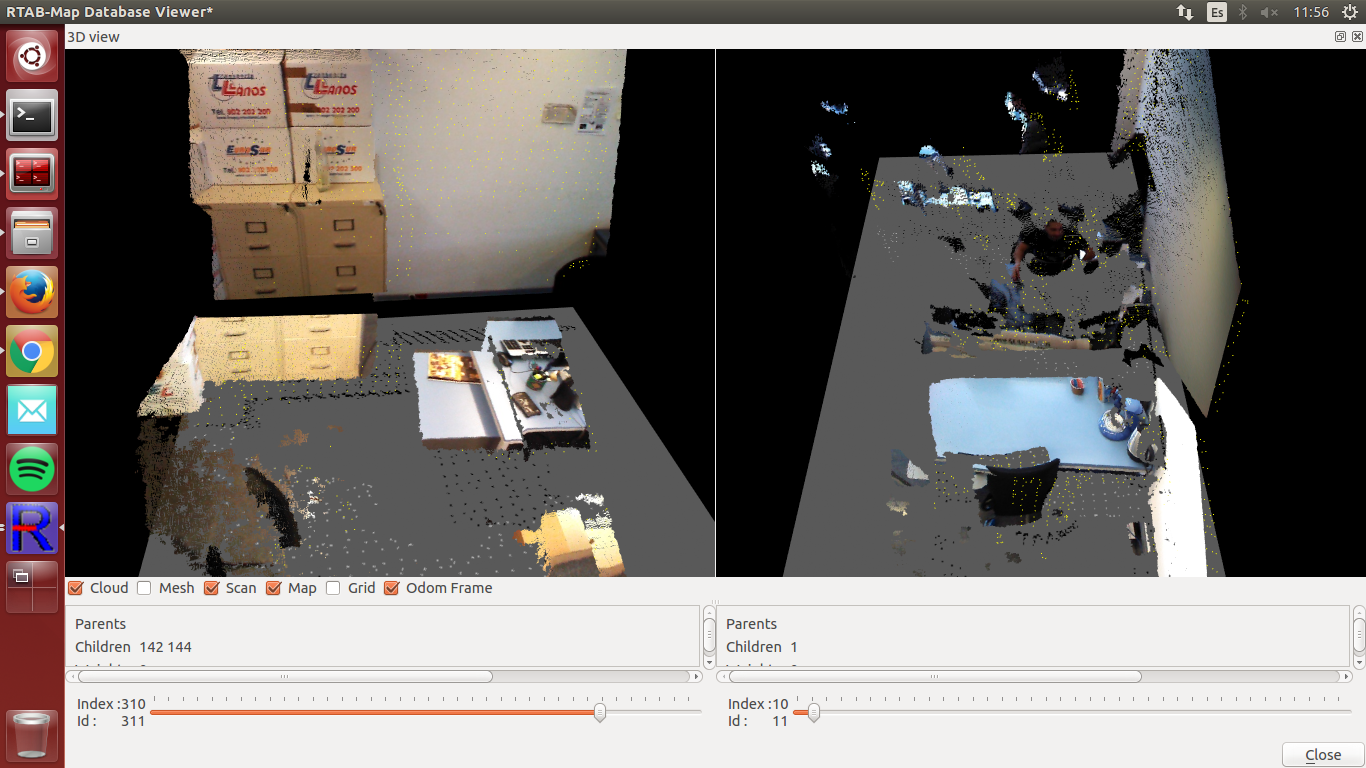

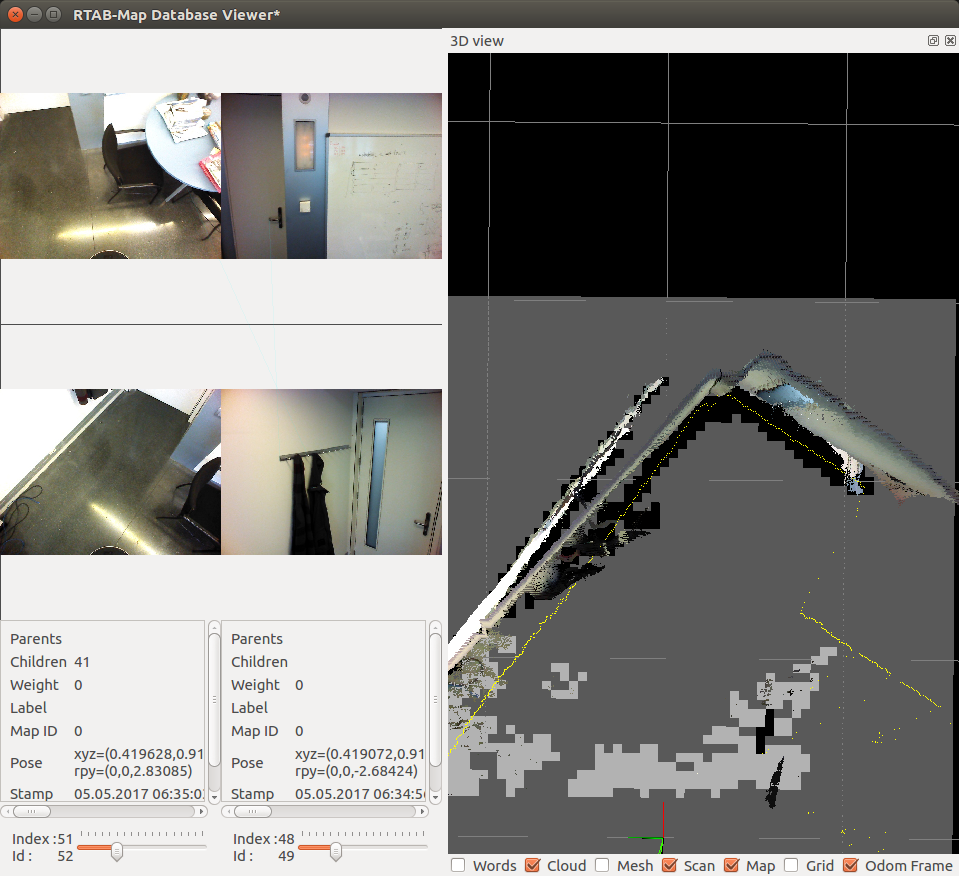

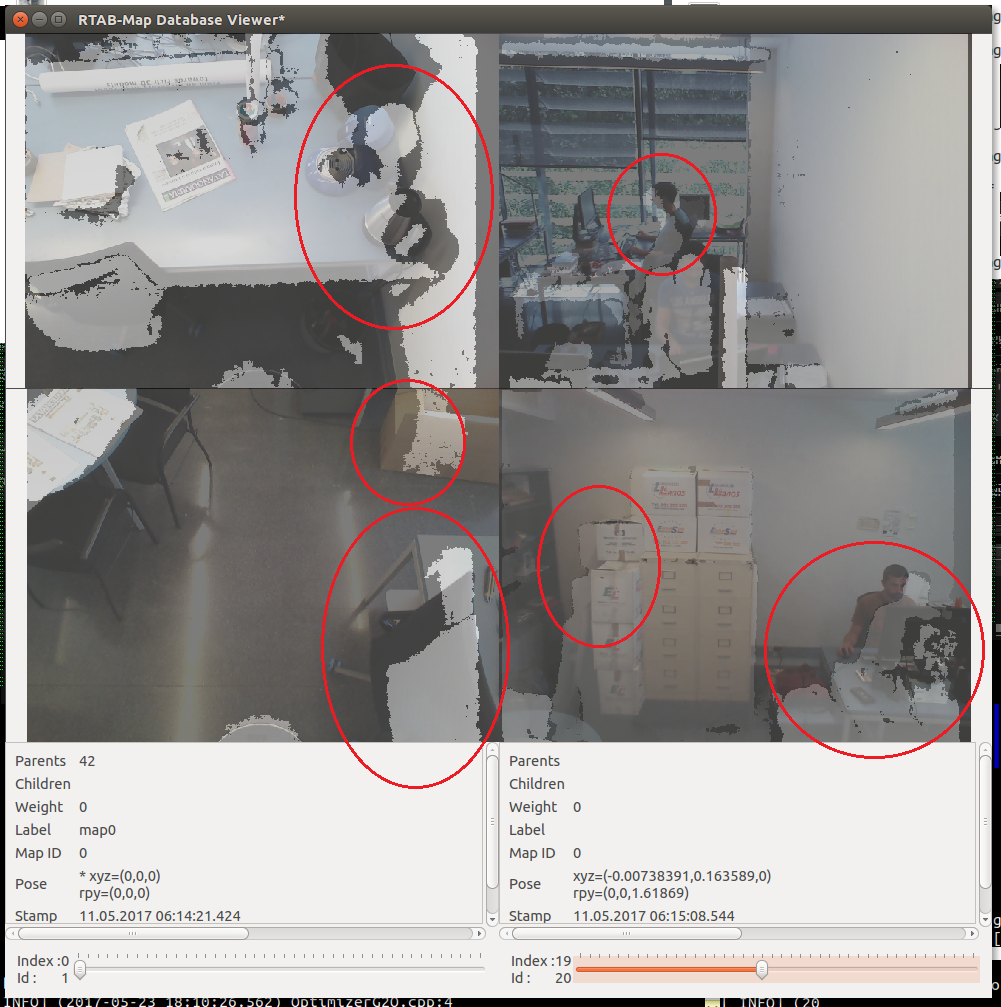

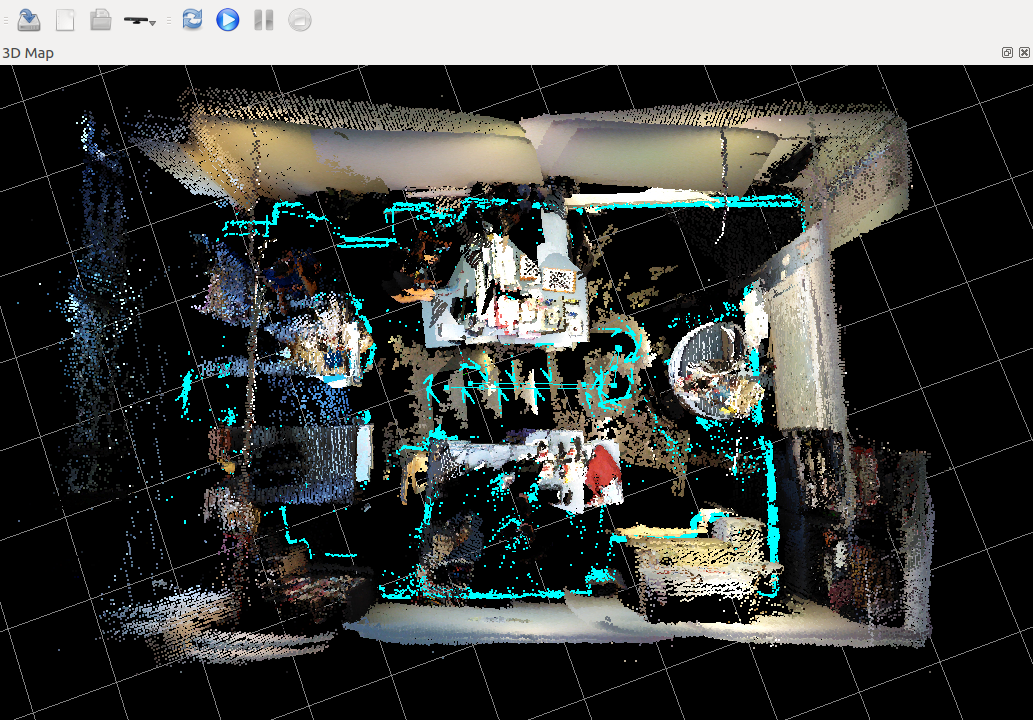

I have been doing some maps from real world - actually from the office I am working on. The results are quite bad, but I do not understand why. Here there is an image of the 3D view in database-editor selecting for example two nodes. Those are pretty good, actually I want to achieve that quality:  However, when it comes to see the actual map, the pcl, when I directly open the database from rtabmap, it is pretty bad, the first image tries to match with the right part of the 3d view, and the second with the left part. As you can see, it is like it places things more than once on the map, although the keypoints detected seem quite good from the database editor:   Any clues on why this is happening? Regards |

|

Administrator

|

Hi,

Can you share the database (or another smaller mapping session)? Looking at the images, it seems that TF between cameras may not be correct. If so, registration between clouds will not be accurate. Also, the yellow points in 3D view, which are the scan points, don't seem to be aligned with the 3D point clouds. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi, I share in the following links two databases along with the launch file. One of them uses the voxel grid and the other doesnt, but results are similar.

withFilter: http://drive.google.com/file/d/0B6gsiJNJoEJ6cTFfaFk2Rjk5UUk/view?usp=sharing withoutFilter: http://drive.google.com/file/d/0B6gsiJNJoEJ6TVZoOWl6UHcxWEU/view?usp=sharing Cheers |

|

Administrator

|

Hi,

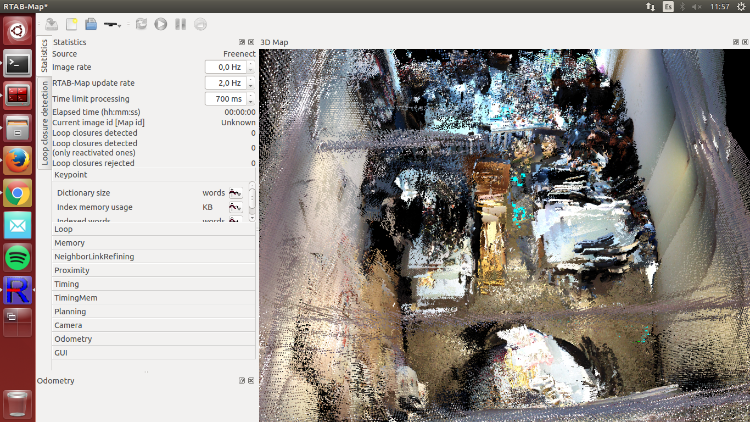

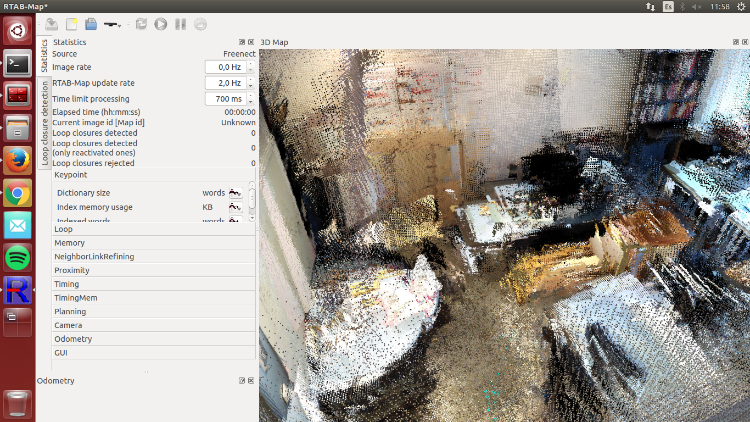

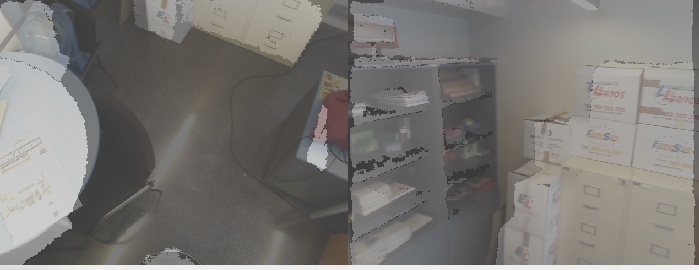

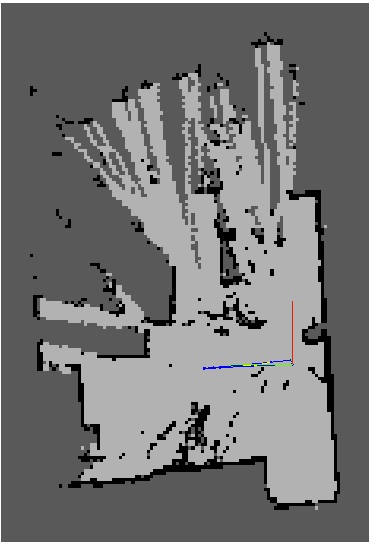

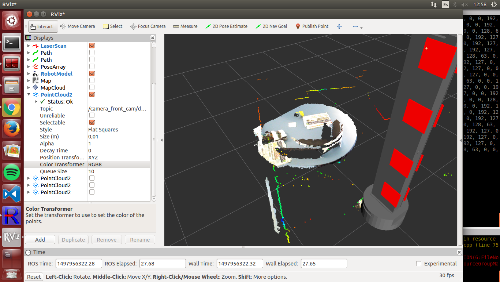

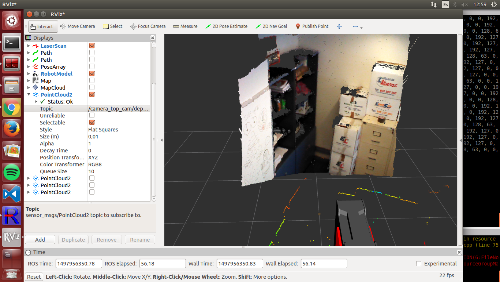

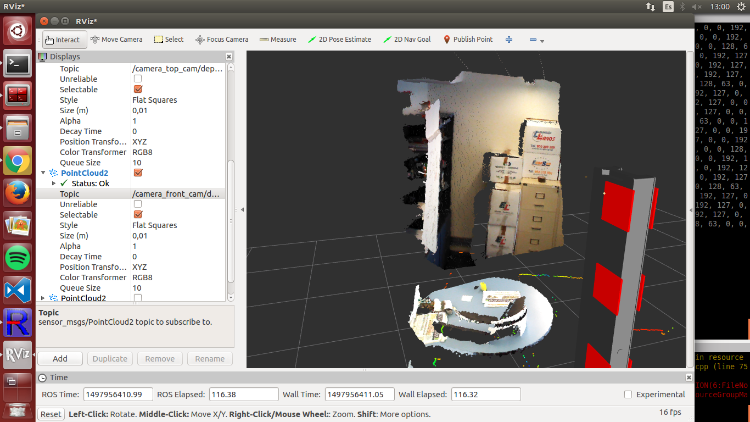

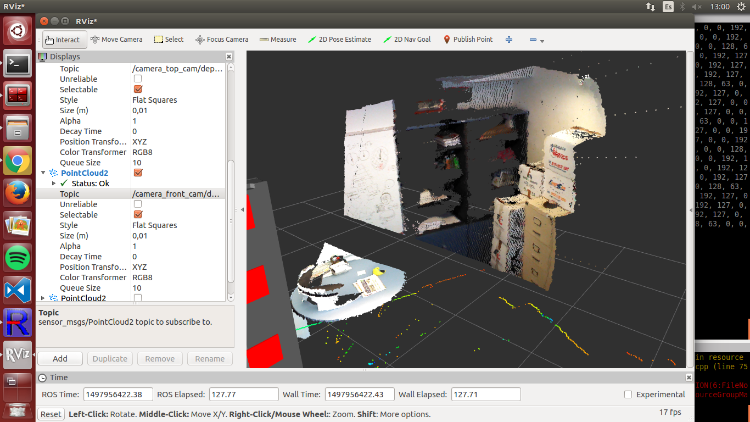

There is indeed a problem with TF between the cameras (3d view of middle images shown):  You may try to debug TF with RVIZ while showing the point clouds of both camera at the same time. Verify also the TF of the laser scan accordingly to cameras, as the laser scan doesn't match with the point clouds too (well maybe just with the top camera). See top view here of the middle images. The laser scan in yellow, the point cloud of the top camera at the corner and the point cloud of the bottom camera on left (white points). The clouds don't follow the wall detected by the lidar:  cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

I thought I aligned them properly, i guess I did not.

Thanks, I will fix it and reply back Cheers |

Re: Rtabmap mapping with two cameras

|

Hello,

I think i fixed the cameras positions and orientations and now they are matched with the laser scan. I share here another database along with the launch file here However the maps still look a bit "bad"; I mean, there are things that still appear twice or more times; for example the chair in index 1 looks nice at the beggining and also at the end, but somehow it appears twice. Also, for the whiteboard, a part of it appears ok but the other part is suddenly bad mapped. Maybe my parameters are bad set? Cheers |

|

Administrator

|

Hi,

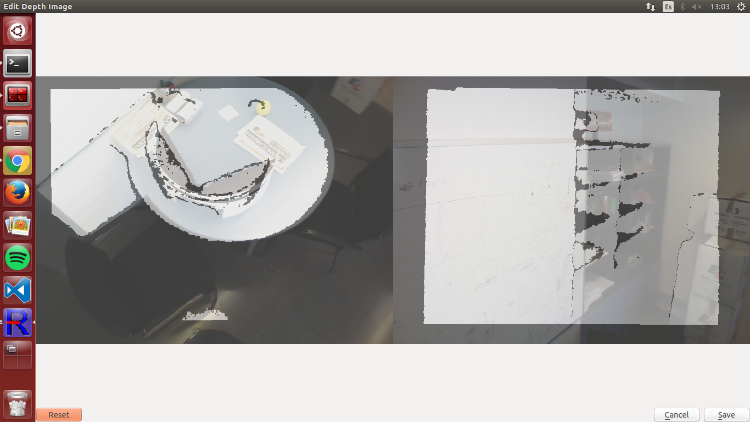

The RGB and depth images are poorly registered and/or synchronized:  If when not moving depth and rgb images don't match perfectly, redo calibration/registration of the cameras. If depth and rgb images don't match only when moving, try with parameter "approx_sync:=false" for rgbd_sync nodelets. This will only work if the camera driver publishes depth/rgb/camera_info with exactly the same stamp. EDIT: Note that laser scans are correctly matched together, the only thing is to fix rgb/depth registration. You can try "Grid/FromDepth=false" to get 2D grid map from laser scans.  cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi,

I have tried what you said and results looks pretty the same. I am using this launch file rtabmapTwoCameras.launch now, with the approx_sync to false. Maybe the problem is that I do not use the correct remap for the images so the time stamps are not syncronised? Or maybe the cameras drivers are working bad? Cheers |

|

Administrator

|

Hi,

which cameras are you using? For kinect v2, there is a calibration/registration step that should be carefully done: calibrating-the-kinect-one as in the previous database I've seen really bad registration between RGB and depth even when cameras were not moving. For freenect/openni2 driver (Kinect v1), normally the default calibration should be a lot better than that, did you manually do another calibration? cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi,

actually the cameras are not kinect, they are "Orbbec Astra" and "Orbbec Astra S". I was thinking that maybe rtabmap is not ready for this camera, is it? Moreover, we just found out that the odometry of the robot is not working properly, does this have to do with the bad registration? or maybe this is due to the fact that kinect has better registration sensors than this cameras? Another thing is that, when moving the robot fordward, the map works perfectly, it is when turning it and then turning it back when the objects start to appear more times. Cheers |

|

Administrator

|

Hi,

RTAB-Map can be integrated with any RGB-D camera as long as the depth image is correctly registered with the color image. The bad registration seems coming from the camera driver. For example, using rqt_image, save depth and color images and put them in photoshop or gimp with transparent overlay, the depth of objects in depth image should overlay perfectly the objects in the color image. Problem explained here: https://3dclub.orbbec3d.com/t/mapping-depth-to-color-registration-offset/94 Another tutorial about extrinsic calibration: http://wiki.ros.org/openni_launch/Tutorials/ExtrinsicCalibration For bad odometry, it is not too bad here because you are using ICP refining with the laser scans. What I saw in the previous database is that odometry was correctly corrected by the laser scan in the map. However, if you want to do navigation with move_base, you may update the odometry algorithm to be more accurate, as move_base uses odometry to update local costmap and issuing commands. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi again,

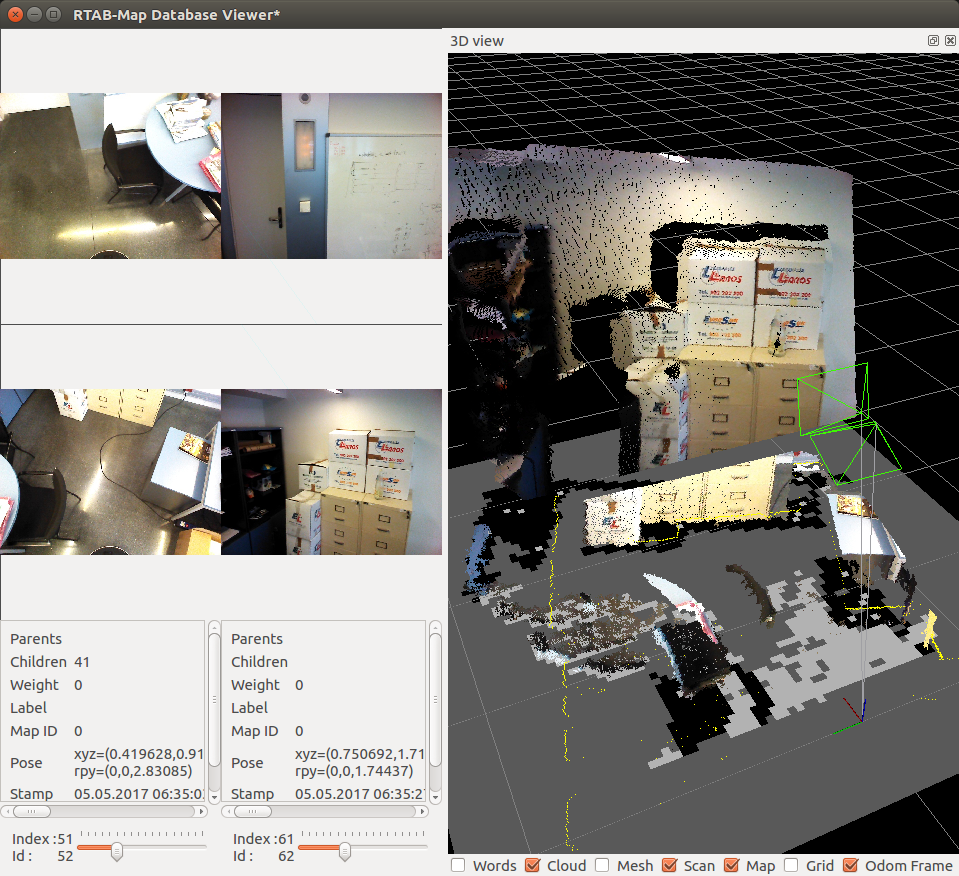

I am trying to figure out the problem with the cameras, maybe it is not just coming from the calibration and registration of the images but also from the drivers. Regarding this, how did you check that the cameras had this problem? Once the images are good registrated, do you think it is a good idea to increase the param "RGDB/AngularUpdate" to for example 0.8 in order to avoid bad loop closure detections? About the odometry it is true that the laser corrects it, as if I dont map with it activated the robot maps the walls too many times. Lastly, how is it possible that i have this good "maps" or images in the database, but in the actualy map they are so different and mixed with other stuff?  Cheers |

|

Administrator

|

Hi,

When we open the database in rtabmap-databaseViewer, right click on the image view then click on Show Depth. You will see RGB and depth supperposed. When correctly registered, there should be a perfect correspondence between RGB and depth pixels, which is not in this example:  "RGDB/AngularUpdate" (like "RGDB/LinearUpdate") is only used to ignore new data if the robot didn't rotate enough (over this angle). Can you show examples of bad loop closure detections? For the online preview of the map, I don't recommend increasing the density of the point cloud for performance issue (while it is possible, see Preferences->3D Rendering options). You can export in any resolution/density in rtabmapviz or by reopening a database in rtabmap. Do File -> Export 3D Clouds. You will have many options to generate a very dense map. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hello, thanks for the reply.

I am trying to do the extrinsic calibration but I couldnt make it work as the tutorial you sent me is for prior versions of ros. I could not find any other tutorial for doing this, do you know other appart form that one? |

|

Administrator

|

Hi,

Maybe the best bet is to ask to Orbbec developers what they recommend on ROS. I've seen that some people using OpenNI2 driver and others orbbec native driver have different results. Some need to shift ~10 pixels left the depth image before doing rgb/depth registration. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hello, Its been some time since I have been here.

I have been researching about this camera calibration: the tools in ROS do not work, not even any fork of "camera_pose". Also, orbbec does not provide any linux tool and the windows output for the calibration looks different from the windows one, as I explained and asked here. At this empty point I tried to check what was the problem of the cameras, as I do not understand everything correctly. So we launched the depth_registration of the cameras to see how actually the image looks, like here:     As you can see, the images look pretty well. However, when it comes to the rtabmap, with rtabmap.launch launch file, software the problems appear:  The depth looks not syncronized with the rgb, but this is caused only when rotating, as the rest of the images of the database here look well. At this point I am quite lost on track with my thesis, is there something that I am doing wrong with the software? Cheers, Adrian |

|

Administrator

|

Hi,

Indeed without moving, the registration looks good. In your test with the camera alone and the registered point cloud in RVIZ, did you try moving the camera while looking at the registered cloud to see if the color "follows" the depth? If the camera driver of these cameras are publishing already synchronized rgb and registered depth topics (with exact timestamps), you may set approx_sync parameter to false for rgbd_sync nodelets. If the camera driver cannot publish synchronized rgb and depth images, the frame rate of the topics should be high (e.g., 30 Hz) to minimize synchronization issues while moving. Otherwise, you may modify the camera driver code to synchronize rgb and depth on its side (and send topics with exact timestamps to use approx_sync above to false). cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi,

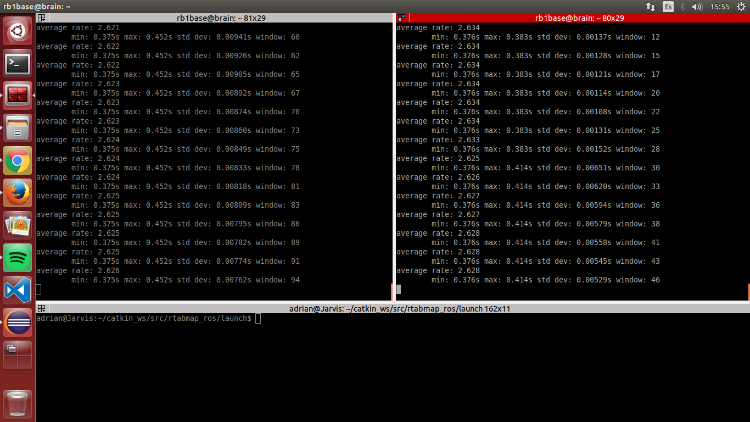

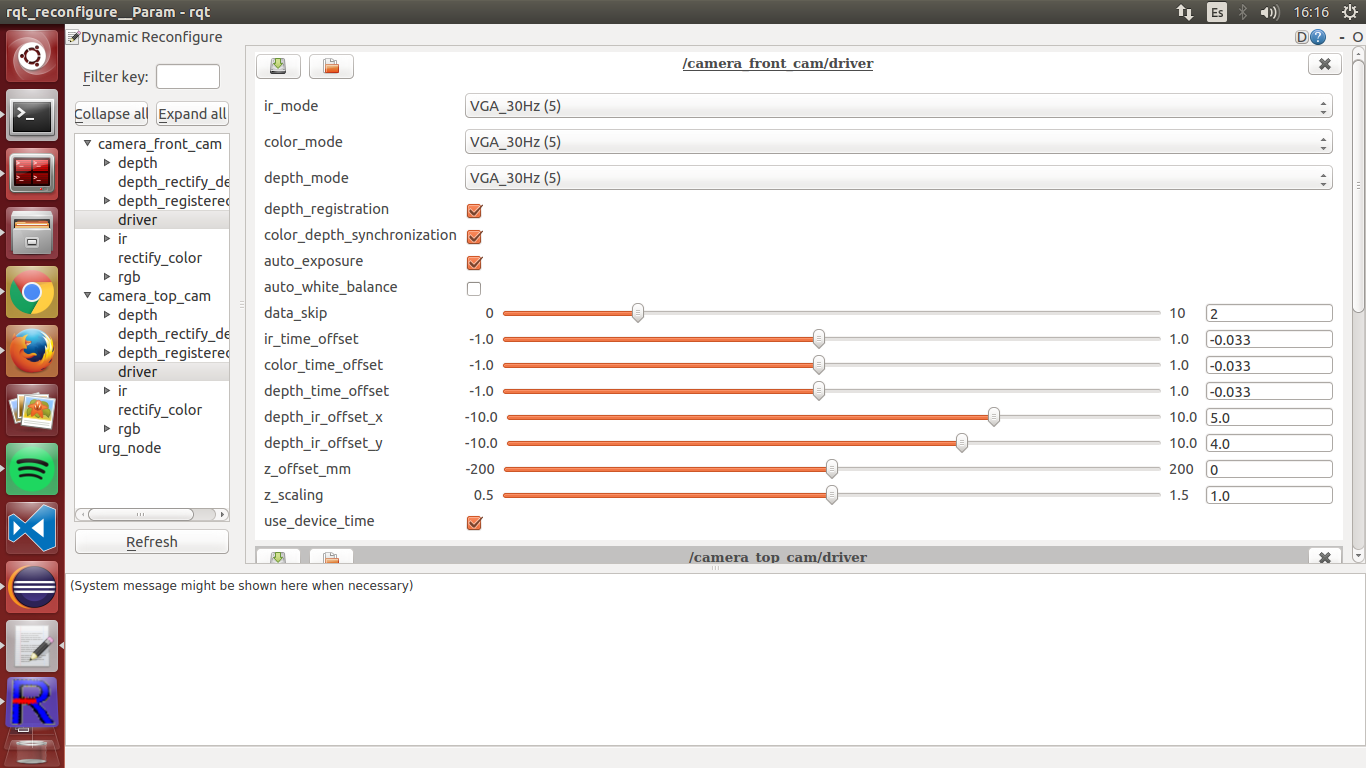

I am not sure if they are perfectly synchronised, I thought they were because the rostopic hz of depth/image_raw (left) and rgb/image_raw (right) are almost the same (miliseconds), but not exact as you say.  Then, that is why i need to set approx_sync always to true? (if I set it to false the software doesnt work). Also I checked the drivers and color_depth_synchronization is active, and the framerate is supposedly almost 30hz for each camera. I modified a few parameters (like offset) in the drivers, and some others in my launch file attached and the results seem irregulars, there are parts that more or less are well mapped but others are simply mapped two or three times. I upload a couple of small tests. What do you think? Regards rtabmap.db <https://drive.google.com/file/d/0BzDVfAKVWMsPMk5CUl9pMHhCa1E/view?usp=drive_web> rtabmap.db <https://drive.google.com/file/d/0BzDVfAKVWMsPRHVfNGtuWWVCYnc/view?usp=drive_web> rtabmapTwoCameras.launch |

Re: Rtabmap mapping with two cameras

|

This post was updated on .

I leave a picture of rqt_reconfigure with drivers:

data_skip at 0 makes the computer of the robot go really slow Other tries with slighty better results: https://drive.google.com/file/d/0BzDVfAKVWMsPcC1HSl9PVG0xUUU/view?usp=sharing https://drive.google.com/file/d/0BzDVfAKVWMsPdnRQN2hIc0VIOGM/view?usp=sharing Regards |

|

Administrator

|

Hi,

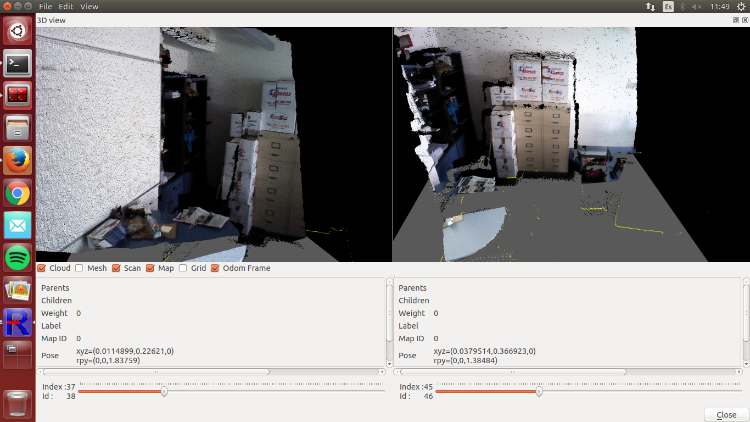

yes the last databases look better. Not sure if you could get better synchronization with a camera driver sending rgb and depth images not synchronized. It seems working like freenect or openni drivers, you may not get better sync results. From there, the map should be relatively good though. Here the rtabmap3.db:  Note that some laser scans could match better (I don't remember, is Reg/Strategy=1, Reg/Force3DoF=true and RGBD/NeighborLinkRefining=true for rtabmap node?). If there is a difference between clouds generated by the camera, the laser scan and the map, tune static transforms in the TF tree. You may also set "odom_sensor_sync" to true (of rtabmap node) to synchronize laser/cameras position accordingly to odometry timestamp. For info, when I said "exact synchronization" is that timestamps in the header of the topics are exactly the same between rgb/depth and camera_info (then approx_sync=false can be used): /camera_front_cam/rgb/image_raw/header/stamp == /camera_front_cam/depth/image_raw/header/stamp == /camera_front_cam/rgb/camera_info/header/stamp cheers, Mathieu |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |