VINS Fusion + RTABMap with ROS2 Humble

12

12

|

Hi everyone!

Is there any example how to use VINS Fusion as external Odometry with RTABMap with ROS2 humble ? Or has somebody achieved to run it successfully and could provide the launch file? Best Dominik |

|

Administrator

|

Hi, Are you referring to these packages? https://github.com/zinuok/VINS-Fusion-ROS2 https://github.com/JanekDev/VINS-Fusion-ROS2-humble-arm I don't think I have seen trying rtabmap with one of these. cheers, Mathieu |

|

Thanks for the reply!

exactly, i managed to run these Repos on the EuRoc MAV dataset but would like to run it in combination with RTABmap and my Intel Realsense D435i. Any advice how to get this done? Best Dominik |

|

Administrator

|

This post was updated on .

Hi Dominik,

Can you show which did commands you use to test on EuRoC? Can you also show what are the available topics (ros2 topic list) and the tf tree (ros2 run tf2_tools view_frames) when it is running? cheers, Mathieu EDIT: I could somewhat make it work inside rtabmap by compiling vins and adding it as dependency to rtabmap. I used that repo, fixed all compilation errors that are referred in the issues of the repo (disabled cuda/GPU stuff, fixed ros::Duration::from_seconds(0)). Then to export as a library, I added SHARED to vins_lib target and added this at the end of the same CMakeLists.txt:

ament_export_dependencies(rclcpp rcpputils std_msgs visualization_msgs geometry_msgs nav_msgs tf2 tf2_ros cv_bridge camera_models image_transport)

ament_export_include_directories(include)

ament_export_targets(${PROJECT_NAME}) # To include downstream with targets

ament_export_libraries(vins_lib) # To include downstream without targets

install(TARGETS

vins_lib

EXPORT ${PROJECT_NAME}

ARCHIVE DESTINATION lib

LIBRARY DESTINATION lib

RUNTIME DESTINATION bin

)

install(DIRECTORY src/

DESTINATION include

FILES_MATCHING PATTERN "*.h" PATTERN "*.hpp"

)

ament_package()

Also had to add "set_target_properties(camera_models PROPERTIES POSITION_INDEPENDENT_CODE ON)" in the CMakeLists.txt of camera_models package (for some -fPIC error when linking inside rtabmap). Build vins (colcon), source the setup.bash of the ros workspace, then rebuild rtabmap with: cmake -DWITH_VINS=ON We can select VINS-Fusion under odometry parameters (with vins config/realsense_d435i/realsense_stereo_imu_config.yaml and image resolution in respective files set to 848x480 instead of 640x480). While it seems to do something, the estimation with my realsense D435i was very poor. I only tested the Stereo-only mode, because with IMU the pose is drifting a lot more. In my memory, I thought VINS-fusion was working better than this, maybe there are some differences with the ros2 implementation. I'll check later if I can retest on a ros1 docker. EDIT2: tried under a docker/noetic, no success with live camera. |

|

Hi Mathieu,

First of all, thanks for your support. I used the following repo, although I think the specific choice of repository is not critical in this case. I have disabled CUDA and resolved also to ros::Duration::from_seconds(0). Node is launched using the command: ros2 run vins vins_node ~/ros2_ws/src/VINS-Fusion-ROS2-humble/config/euroc/euroc_stereo_imu_config.yaml The context is the deployment of RTAB-Map in an industrial logistics environment, where an D435i is mounted on a smart conveyed good. Primary objective is to reconstruct the trajectory of the object during operation (generation of a point cloud is nice to have). However, challenges arises due to the presence of external sensors positioned along the transport path. The sensors / rods are located in close proximity to the camera and frequently obstruct its field of view, leading to reduction in detectable keypoints. Consequently, the VO occasionally fails. To address this, I considered using VINS-Fusion, as it relies more on IMU data and may thus offer improved robustness during brief interruptions. I would be interested to know whether you see the potential for an RTAB-Map-based setup that could handle such short-term failures of VO or a temporary lack of visual features that works with ros2 humble Best Dominik |

|

Administrator

|

Hi Dominik,

RTAB-Map's VO alone won't handle well temporary camera blocked while moving. I don't have much experience with VINS-Fusion in particular, but with ARKit (iOS), you can keep a relatively accurate pose estimation when the camera is temporary blocked, though not for too long (<1 sec). Other options seen on mobile robots is to use wheel odometry (or some other local motion estimator) as guess frame to RTAB-Map's VO, so that when VO cannot be computed, the TF is still published relative to wheel odometry during that time, then VO can be restarted to where the camera should have been based on the wheel odometry. You can also look at OpenVINS project, which may be better maintained for ROS2. cheers, Mathieu |

|

Hi Mathieu,

thanks for the answer. I have one question regarding this. In Theorie i have two d435i cameras on my prototype. One facing in front, one facing to the side (90 deg shifted, not sharing Same field of view ). Is it possible to fuse both Cameras for VO ? Because in case where front camera looses VO caused by to less Keypoints, the other camera would see enough Keypoints. Or do both VOs constantly need to track enough Keypoints, otherwise it will not work with rtabmap? Best, Dominik |

|

Administrator

|

RTAB-Map's VO can use both cameras at the same time (so if one camera is blocked and some feature scan still be tracked in the other one, VO won't get lost). There is an example here. Another recommended requirement for multi-camera is to build rtabmap with OpenGV support.

Another caveat is this assumes that the cameras are hardware synchronized together. If not, it may work, though not optimal. cheers, Mathieu |

|

Hi Mathieu,

thanks, i tried this example as well, but only the topic for one camera is given into the VO node. What do i need to change, to use both camera streams for the VO? Or do i understand that wrong ? Best Dominik |

|

Administrator

|

Oh well, just realized that example didn't use two cameras in rgbd_odometry. To use both cameras in VO, you would need to do:

# RGB-D odometry

rgbd_odometry_node = launch_ros.actions.Node(

package='rtabmap_odom', executable='rgbd_odometry', output="screen",

parameters=[{

"frame_id": 'base_link',

"odom_frame_id": 'odom',

"rgbd_cameras": 2,

"publish_tf": True,

"approx_sync": True,

"subscribe_rgbd": True,

}],

remappings=[

("rgbd_image0", '/realsense_camera1/rgbd_image'),

("rgbd_image1", '/realsense_camera2/rgbd_image'),

("odom", 'odom')],

arguments=["--delete_db_on_start", ''],

prefix='',

namespace='rtabmap'

)

# SLAM

slam_node = launch_ros.actions.Node(

package='rtabmap_slam', executable='rtabmap', output="screen",

parameters=[{

"subscribe_sensor_data": True,

"subscribe_odom_info": True,

"frame_id": 'base_link',

"map_frame_id": 'map',

"publish_tf": True,

"database_path": '~/.ros/rtabmap.db',

"approx_sync": False,

"Mem/IncrementalMemory": "true",

"Mem/InitWMWithAllNodes": "true"

}],

remappings=[

("sensor_data", 'odom_sensor_data/raw'),

("odom", 'odom')],

arguments=["--delete_db_on_start"],

prefix='',

namespace='rtabmap'

)

I also modified rtabmap node to get sensor_data output from rgbd_odometry (containing already the images synchronized and visual features, and to make also sure that odometry topic is perfectly synced with the same data used by vo). You can also instantiate rtabmap_viz node with pretty much the same parameters/remappings than rtabmap node. cheers, Mathieu |

|

Thank you Mathieu, I really appreciate your effort!

Following your advice on this post i would like to use the stereo odometry with my two d435i‘s, but that shouldn‘t be a problem and works the same way, right? Last question on this: regarding your last paragraph what you have done to VO/RTABmap node with the sensor topic, could you share those nodes by any chance to get a grasp of what exactly you have done as I’m new to all of this and it could save me a lot of time? (i‘m using ros2 humble) Best, Dominik |

|

Administrator

|

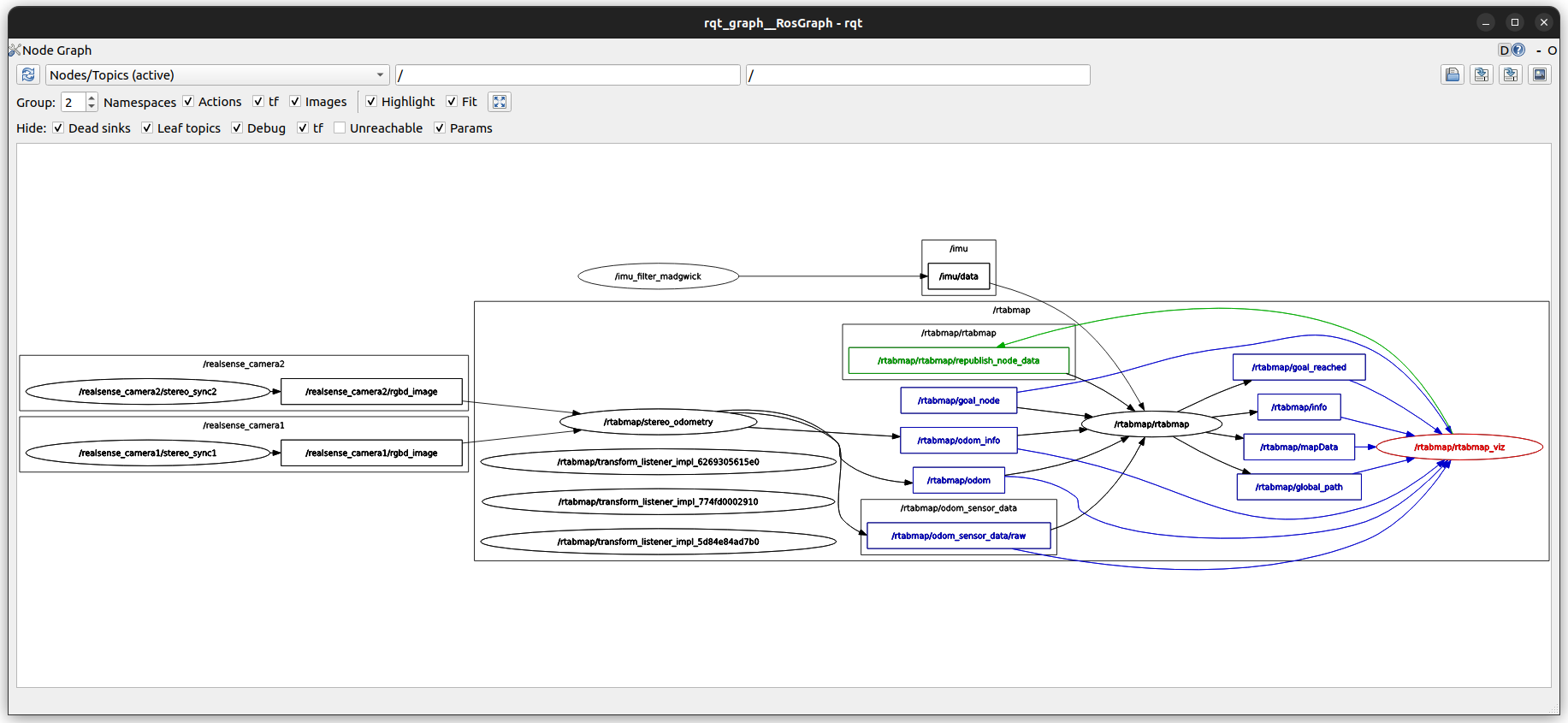

Hi Dominik,

To show nodes and topics like in my screenshot, you can use rqt_graph: $ ros2 run rqt_graph rqt_graphThe same plugin can be opened in rqt directly too (along with other useful tools): $ rqtPlugins->Introspection->Node Graph Other plugins I use often: # Show TF tree (generate a pdf) $ ros2 run tf2_tools view_frames # Show all ros logs and/or filter them $ ros2 run rqt_console rqt_console # Show some data over time (e.g., odometry values) $ ros2 run rqt_plot rqt_plot \ /odom/pose/pose/position/x \ /odom/pose/pose/position/y \ /odom/pose/pose/orientation/z # Diagnostic (when nodes support it, rtabmap publishes some of them about input and output expected frequencies): $ ros2 run rqt_runtime_monitor rqt_runtime_monitor # Re-configuring nodes (particularly useful when tuning nav2 stack for example, note that for rtabmap you would use rtabmap_viz instead) $ ros2 run rqt_reconfigure rqt_reconfigure # Just looking an image topic without rviz $ ros2 run rqt_image_view rqt_image_view If you want to use stereo mode instead, you can use stereo_sync nodes with stereo_odometry. stereo_odometry has also "subscribe_rgbd" and "rgbd_cameras" parameters to connect the output of stereo_sync. You can do `ros2 node info` on a stereo_sync node to see the topics you would need to remap. Note that D435i's IR+Depth mode would be similar to stereo mode, but the depth will be already computed on the camera. cheers, Mathieu |

|

Hi Mathieu,

Thanks again for your help — I’ve got both RGB-D and stereo mode working with two D435i's now! I just have one question regarding your comment: "Note that D435i's IR+Depth mode would be similar to stereo mode, but the depth will be already computed on the camera." Do you mean that, for example, one could use rgbd_odometry with the left IR image and the Depth stream computed by the D435i? Based on your experience — what generally works better with the D435i for odometry? I’ve read that the depth stream works best in the 0.2–3 m range but becomes less reliable beyond that. I’d prefer to use the IR stream for odometry. Would you recommend feeding the camera-computed Depth + left IR into rgbd_odometry, or letting stereo_odometry compute depth directly from the stereo IR images? Huge thanks again! Best, Dominik |

|

Administrator

|

Hi Dominik,

I usually use Left IR+Depth mode with D435i camera (to get "free" depth image). The baseline is not very large on that camera, the depth error will increase faster. Note that stereo mode would have similar error. To use IR+Depth mode instead of stereo, make sure you don't limit the depth range in the realsense driver, you can limit it later in rtabmap in post-processing for more accurate rendering purpose. cheers, Mathieu |

|

Hi Mathieu,

I hope you're doing well! I've noticed some strange behavior related to Z-axis drift in the rawr VO and wanted to check if you have any insights into what might be causing it. In the example below, I drove the exact same path three times but ended up with three different Z-axis drifts each time:  I also have a question about the multi-camera setup: It seems that only the current point cloud from the cameras is displayed during operation. Previously seen points disappear and are neither shown in the map nor stored. Additionally, I couldn’t find a corrected trajectory output when using the multi-camera setup. Do you have any advice or suggestions on this? Thanks a lot in advance! Dominik |

|

In reply to this post by matlabbe

Hi,

I have changed my RTABMap configuration as suggested here. Previously, I've been using an RGBDXSync node and my RTABMap::rgbd_odometry and rtabmap_slam::CoreWrapper subscribed to it with rgbd_cameras=0. However, the rtabmap started working in mono8. Is that expected? Also, I want to use SuperPoint and SuperGlue. I was able to set them in the RTABMap core wrapper, but I remember that I was unable to use SuperGlue in the odom node due to the node crashing. |

|

Administrator

|

In reply to this post by dobbolino

Hi Dominik,

Is it using IMU? or purely VO? What is the frame_id used by VO? Possible causes: * TF between frame_id and the camera frame is not accurate * TF between frame_id and the imu frame is not accurate * Remaining distortions in the camera images You may launch VO in the frame of the camera instead of your base frame, with purely VO to see if there is a difference (that test would ignore all issues related to static TF frames). Sounds like the rtabmap node is not working. Is the database empty after the test? There should not be any differences between single or mult-camera, expect you get two times more clouds to show. cheers, Mathieu |

|

Administrator

|

In reply to this post by f0rward

Did you also set subscribe_rgbd=true?

Can you start another post with superglue crashing log in odom node? I remember trying SuperPoint/SuperGlue with ros using this Dockerfile on ROS Noetic, but it seems the example shown is using SuperPoint/SuperGlue only for rtabmap node, not odometry, don't remember if I tried it (lack of GPURAM?). To try it, instead of:

docker run -it --rm --user $UID \

-e NVIDIA_VISIBLE_DEVICES=all \

-e NVIDIA_DRIVER_CAPABILITIES=all \

--runtime=nvidia \

-e ROS_HOME=/tmp/.ros \

--network host \

-v ~/.ros:/tmp/.ros rtabmap_ros:superpoint \

roslaunch rtabmap_launch rtabmap.launch \

rtabmap_viz:=false \

database_path:=/tmp/.ros/rtabmap.db \

rtabmap_args:="\

--delete_db_on_start \

--SuperPoint/ModelPath /workspace/superpoint_v1.pt \

--PyMatcher/Path /workspace/SuperGluePretrainedNetwork/rtabmap_superglue.py \

--Kp/DetectorStrategy 11 \

--Vis/CorNNType 6 \

--Reg/RepeatOnce false \

--Vis/CorGuessWinSize 0" \

odom_args:="\

--Vis/CorNNType 1 \

--Reg/RepeatOnce true \

--Vis/CorGuessWinSize 40" \

left_image_topic:=/camera/infra1/image_rect_raw \

right_image_topic:=/camera/infra2/image_rect_raw \

left_camera_info_topic:=/camera/infra1/camera_info \

right_camera_info_topic:=/camera/infra2/camera_info \

stereo:=true \

wait_imu_to_init:=true \

imu_topic:=/rtabmap/imu

Do this: (would enable SuperPoint/SuperGlue for odom):

docker run -it --rm --user $UID \

-e NVIDIA_VISIBLE_DEVICES=all \

-e NVIDIA_DRIVER_CAPABILITIES=all \

--runtime=nvidia \

-e ROS_HOME=/tmp/.ros \

--network host \

-v ~/.ros:/tmp/.ros rtabmap_ros:superpoint \

roslaunch rtabmap_launch rtabmap.launch \

rtabmap_viz:=false \

database_path:=/tmp/.ros/rtabmap.db \

args:="\

--delete_db_on_start \

--SuperPoint/ModelPath /workspace/superpoint_v1.pt \

--PyMatcher/Path /workspace/SuperGluePretrainedNetwork/rtabmap_superglue.py \

--Kp/DetectorStrategy 11 \

--Vis/CorNNType 6 \

--Reg/RepeatOnce false \

--Vis/CorGuessWinSize 0" \

left_image_topic:=/camera/infra1/image_rect_raw \

right_image_topic:=/camera/infra2/image_rect_raw \

left_camera_info_topic:=/camera/infra1/camera_info \

right_camera_info_topic:=/camera/infra2/camera_info \

stereo:=true \

wait_imu_to_init:=true \

imu_topic:=/rtabmap/imu

("args" is used by both rtabmap and rgbd_odometry nodes)

for ROS2, would have to make a new Dockerfile similar to that one, but the launch command would be fairly similar to above, like: ros2 launch rtabmap_launch rtabmap.launch.pyinstead of: roslaunch rtabmap_launch rtabmap.launch |

|

This post was updated on .

In reply to this post by matlabbe

Hi Mathieu,

1. Z-drift issue I’m using the Madgwick filter’s orientation output from the D435i’s IMU in the VO node. My current frame_id is base_link (the static transform to camera_link comes from robot_state_publisher based on a CAD model and should be accurate). Switching frame_id to camera_link didn’t change the Z-drift. What I noticed is that the drift seems to depend on my workstation’s hardware performance (currently running in high performance modus). However, replaying the exact same bag files after letting the machine cool down after every trajectory gives a different Z-drift pattern (not better, but different):  The cameras were calibrated with Intel’s on-chip self-calibration tool (intrinsics + extrinsics as described in this white-paper), and I’m using the static TFs from camera_link to the camera sensors directly from the RealSense SDK. However, I believe this doesn’t recalibrate camera_link ↔ IMU or handle any time-shift compensation. Therefore i also tried a cam/IMU calibration with Kalibr and got this result , but I’m not sure which of those TFs I actually need to publish for RTAB-Map to work correctly instead of relying on Realsense SDK TFs. Note: camera_link is located at the optical center of the left IR sensor. 2. Pointcloud issue Yes, I’m deleting the database at startup. With a single camera everything works as expected, but with the dual-camera setup I only see the current point cloud in RTAB-Map — previously seen points aren’t displayed. Launch file is below — maybe you can spot something that could cause this?

urdf_path = os.path.join(get_package_share_path('agenda_bringup'), 'urdf', 'camera_baseframe_assembly.urdf.xacro')

rviz_config = os.path.join(get_package_share_path('agenda_bringup'), 'rviz', 'camera_baseframe.rviz')

robot_description = ParameterValue(Command(['xacro ', urdf_path]), value_type=str)

parameters_sync = [{

"approx_sync": True,

"approx_sync_max_interval":1.0,

}]

parameters_vo = [{

#'use_sim_time': True,

"frame_id": 'base_link',

"odom_frame_id": 'odom',

'subscribe_stereo': True,

"rgbd_cameras": 2,

"publish_tf": True,

"approx_sync": True,

"approx_sync_max_interval":1.0,

"subscribe_rgbd": True,

"Vis/EstimationType": '1'

}]

parameters_slam = [{

#'use_sim_time': True,

"rgbd_cameras":2,

"subscribe_rgbd": True,

"subscribe_sensor_data": True,

"subscribe_odom_info": True,

"frame_id": 'base_link',

"map_frame_id": 'map',

"publish_tf": True,

"database_path": '~/.ros/rtabmap.db',

"approx_sync": False,

"Mem/IncrementalMemory": "true",

"Mem/InitWMWithAllNodes": "true",

"Vis/EstimationType": '1'

}]

def slam_node_launch_setup(context, *args, **kwargs):

delete_db_on_start = LaunchConfiguration('delete_db_on_start').perform(context)

slam_arguments = ['-d'] if delete_db_on_start in ['true', '1'] else []

slam_node = Node(

package='rtabmap_slam',

executable='rtabmap',

output='screen',

parameters=parameters_slam,

remappings=[

('imu', '/imu/data'),

("sensor_data", 'odom_sensor_data/raw'),

("odom", 'odom')],

arguments=slam_arguments

)

return [slam_node]

def generate_launch_description():

database_path_arg = DeclareLaunchArgument(

'database_path',

default_value=os.path.join(os.environ['HOME'], '.ros', 'rtabmap.db'),

description='Pfad zur RTAB-Map-Datenbank'

)

delete_db_on_start_arg = DeclareLaunchArgument(

'delete_db_on_start',

default_value='true',

description='Datenbank beim Start löschen (true/false)'

)

stereo_sync1_node = Node(

package='rtabmap_sync', executable='stereo_sync', name='stereo_sync1', output="screen",

parameters= parameters_sync,

remappings=[

("left/image_rect", '/camera1/infra1/image_rect_raw'),

("right/image_rect", '/camera1/infra2/image_rect_raw'),

("left/camera_info",'/camera1/infra1/camera_info'),

("right/camera_info",'/camera1/infra2/camera_info'),

("rgbd_image", 'rgbd_image')],

namespace='realsense_camera1'

)

stereo_sync2_node = Node(

package='rtabmap_sync', executable='stereo_sync', name='stereo_sync2', output="screen",

parameters= parameters_sync,

remappings=[

("left/image_rect", '/camera2/infra1/image_rect_raw'),

("right/image_rect", '/camera2/infra2/image_rect_raw'),

("left/camera_info",'/camera2/infra1/camera_info'),

("right/camera_info",'/camera2/infra2/camera_info'),

("rgbd_image", 'rgbd_image')],

namespace='realsense_camera2'

)

# RGB-D odometry

stereo_odometry_node = Node(

package='rtabmap_odom', executable='stereo_odometry', output="screen",

parameters=parameters_vo,

remappings=[

('imu', '/imu/data'),

("rgbd_image0", '/realsense_camera1/rgbd_image'),

("rgbd_image1", '/realsense_camera2/rgbd_image'),

("odom", 'odom')],

arguments=["--delete_db_on_start", ''],

prefix='',

namespace='rtabmap'

)

viz_node = Node(

package='rtabmap_viz', executable='rtabmap_viz', output='screen',

parameters=parameters_slam,

remappings=[

# ("rgbd_image0", '/realsense_camera1/rgbd_image'),

# ("rgbd_image1", '/realsense_camera2/rgbd_image'),

("sensor_data", 'odom_sensor_data/raw'),

("odom", 'odom')],

arguments=["--delete_db_on_start"],

prefix='',

namespace='rtabmap'

)

# publishes base_link <-> camera1_link and base_link <-> camera2_link

robot_state_publisher_node = Node(

package="robot_state_publisher",

executable="robot_state_publisher",

parameters=[{'robot_description': robot_description}]

)

imu_filter_node = Node(

package='imu_filter_madgwick', executable='imu_filter_madgwick_node', output='screen',

parameters=[{'use_mag': False, 'world_frame': 'enu', 'publish_tf': False}],

remappings=[('imu/data_raw', f'/camera1/imu')]

)

return LaunchDescription(

[

database_path_arg,

delete_db_on_start_arg,

# bag_path_arg,

# bag_rate_arg,

# sim_time_param,

# bag_player,

stereo_sync1_node,

stereo_sync2_node,

stereo_odometry_node,

OpaqueFunction(function=slam_node_launch_setup),

viz_node,

robot_state_publisher_node,

imu_filter_node

]

)

(launch file code)

|

|

Administrator

|

Did you compare the cam0-imu0 transform from Kalibr to the one from static tf? to use if there is a huge difference or not.

If you set Optimizer/GravitySigma to 0 for rtabmap node, does it change the "drift"? If you are looking at the map->base_link frame trajectory, IMU optimization done on rtabmap side could give this effect if Optimizer/GravitySigma is underestimated. For the launch, it is because the rtabmap_viz node is not connected to rtabmap output. rtabmap_viz is launched under "rtabmap" namespace, so it is expecting /rtabmap/mapData topic. rtabmap node is launched in global namespace, so it advertises to /mapData. Launch rtabmap node inside the same namespace than rtabmap_viz to avoid remapping all topics between these two. Example: slam_node = Node( package='rtabmap_slam', executable='rtabmap', output='screen', parameters=parameters_slam, remappings=[ ('imu', '/imu/data'), ("sensor_data", 'odom_sensor_data/raw'), ("odom", 'odom')], arguments=slam_arguments, namespace='rtabmap' )

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |