implementing rtabmap_drone_example in real drone

12

12

|

Hello sir, I am trying to implement this on a real drone with px4 and rpi4. what sensor does it use in simulation for holding constant altitude? I saw px4flow topic but it was not publishing anything in simulation. and you said the visual odometry must be > 10hz how do I can check that in rpi4.Also I checked rostopic hz rtabmap/odom and found to be around 2.5. Will this be issue?

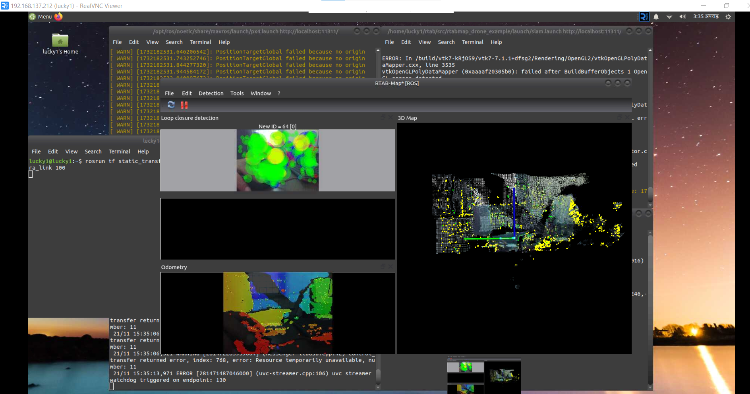

Here are some of the images when we launched in real drone  see the odometry image why is it like that not like the one in simulation? Also I'm passing all the topic for visualization in another computer and only runnning computation in rpi4. Do you suggest to change to jetson nano ? |

|

Administrator

|

In the simulator, the drone would take off from altitude 0 based on visual odometry. In the offboard node, we target an altitude of 1.5 meter. The controller would know the current altitude from the visual odometry input.

2.5 Hz odometry is too slow. You can decrease the resolution of the images if you can, or set Odom/ImageDecimation to 2 or 4. Check also that sometimes the slow rate on RPI4 is that the camera driver itself cannot handle fast frame rate, causing synchronization issues downstream. |

|

This post was updated on .

We tried to change the Odom/ImageDecimation to 4 but that didn't processed the slam.launch.but Odom/ImageDecimation 2 was fine and we saw little /rtabmap/odom hertz increase. We even decrease the fps and resolution but cannot get /rtabmap/odom over 5 hz. If the odom hertz will be 4 will it create issue ?

If there are no obstacle on an open field/ ground will I be able to navigate if the hz of /rtabmap/odom is less than 5 hz ? Will it be better to switch to jetson nano? Can you give any ideas on how we will be able to tune some parameter to run this efficiently on rpi4-b with 4gb ram(4gb swap already) ? I was thinking of doing processing in my laptop by subscribing to rpi4 topic on my drone. I will use the compressed topic for this. Can this be done ? What you think how effective this will be ? |

|

Administrator

|

Can you see log of how much time is required to generate /rtabmap/odom? If it is 50 ms and you still have 4 Hz, maybe there is an issue with the input topics.

I cannot tell if switching to nano will be better. For some camera drivers, they may be faster on nano. Using the swap doesn't sound right, that can cause latency problems. If you can ensure a reliable WIFI connection, you may try doing it on remote computer. |

|

This post was updated on .

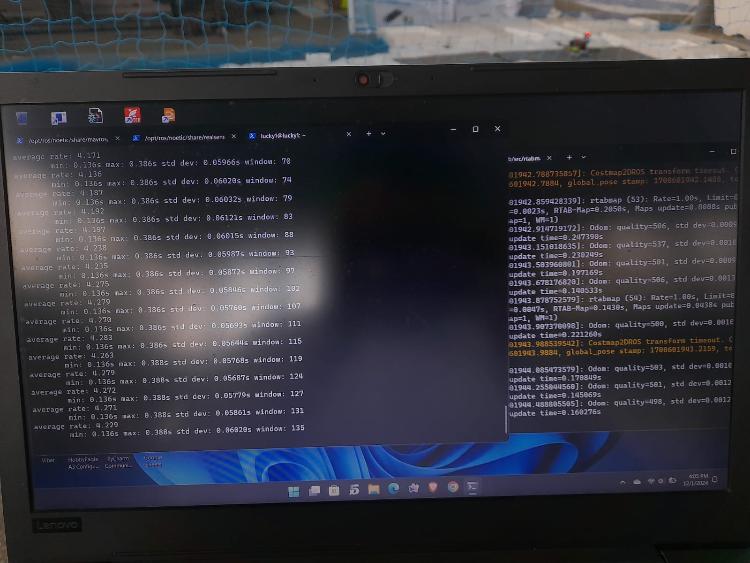

Hello Mathieu,

I tried rtabmap_drone_example on real drone but faced some issues. The drone kind of hovers at given altitude (0.5m) after launching offboard but after 1-2 second starts to drift left and right. We are using rpi4b for processing with 1.5GHz and 8 gb ram. RPI4B is processing rs_camera.launch for d435 camera and slam.launch. We have set the camera resolution as 640*480 and fps=15. Running rostopic hz on camera topic we are getting > 10 hz for color/image_raw but only around 5hz for aligned_depth_to_color and the /rtabmap/odom hz is like 3 - 4 hz. When we run slam.launch we get odometry > 250 and the time update as around 0.15s. The drone takesoff and tries to hold constant altitude but slowly starts to drift. What could be the issue ? I tried to change Odom/ImageDecimation to 2 and 4 but still similar behaviour of drone. Can I really run this on rpi4b with the given processing power? If I can then what parameters can be tuned so that odometry hz increases? Will rpi5 with 2.4GHz be able to handle the processing? I was thinking of doing SLAM.launch in my laptop and running only the px4.launch and rs_camera.launch on rpi4b. If I want to do this will rpi4b wifi and my laptop hotspot will have enough speed/bandwidth transfer ? here is my odometry and /rtabmap/odom hz photo

|

|

This post was updated on .

Just a little update above, really waiting for your update. Been stuck on this issue for last 2 weeks. Do I need the infra1 and infra2 camera ? I don't think we need those as there is no such parameter in launch file .

|

|

Administrator

|

Visual odometry varies around 200-250 ms, which is 4-5 Hz you are seeing, so it is a kind of a CPU limitation. A RPI5 would be probably better. You could try streaming to a remote computer just to compare, in case you can stream images over wifi without problem.

For the drone drifting, you can compare with visual odometry if it is also drifting. If not, maybe it is because the VO delay is too high and EKF filter of PX4 is ignoring it. One way to go around that is to re-stamp the VO poses before sending to PX4 (that is what we do here). If you are using a D435, I'll strongly recommend to use infra1 + depth image (with Ir emitter disabled) instead of RGB/Depth. cheers, Mathieu |

|

This post was updated on .

To use infra1+depth images, will the remapping of rgb_topics to infra1 will work ?

About the re-stamp of the VO poses before sending to PX4, I am doing rosrun the same offboard.src file of rtabmap_drone_example. If the re-stamp is already done in the code what do you mean, I couldn't understand ? IN my current setup, I am passing /camera/color/image_raw and /camera/color/camera_info and /camera/aligned_depth_to_color/image_raw topics. Should I change this to image_rect_raw topics lke this or is it fine ? when doing rostopic hz on all color topics I get like 15hz but on the aligned_depth_color topics I get like 5-6 hz. But on the /camera/depth/color topics I get 20hz. Which topic you would suggest to use on rpi4b and d435 camera setup. We have completly turned off GPS and all other parameter are set to use vision.(PX4 Firmware is the latest not 1.12.3) Thanks for the reply Mathieu. |

|

Administrator

|

Hi,

Yes That may not a re-stamping issue then. In any cases, you should send rectified images to rtabmap, though some camera drivers may publish /camera/color/image_raw as rectified. Doublecheck how much /camera/color/image_raw and /camera/color/image_rect_raw are different. I don't know what represents /camera/depth/color, but you should use the depth image aligned with the rgb camera. If you use infra1 instead, you can use original depth that is already aligned with that camera. |

|

Hello Mathieu,

Thanks for suggesting infra1+depth. drone is now performing much better than our previous (color+depth). Can I get some reasons why infra1 is used in real world instead of color which was used in simulation But still there is like 5-6 cm swing (roll) in drone while taking off on offboard. I have used 15fps and 640*480 resolution for camera and rpi4b. For increasing processing speed as you suggested the following parameter are used --Vis/MaxFeatures 500 --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2 --OdomF2M/MaxSize 1000 --Odom/ImageDecimation 2 --Odom/Strategy 1 --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true Currently IR emitter is not disabled. My question is what other parameters can be tuned to improve performance in drone ? I want to drone as stable as possible while taking off to required altiude without much drifts. Or Maybe if I can send you the .db file so you can check whats going wrong when the drone takeoffs. Also while giving nav goal the drone looses altitude, what may be issue? |

|

Administrator

|

The simulator is a fake camera, it is not really using the same spec of the realsense. The infra1 has a global shutter and has larger field of view, two important things to get best visual odometry. If you are now using infra1, I strongly recommend to disable the IR emitter or put a sticker on it to block it, otherwise visual odometry will have a hard time as there would be points in the environments moving with the drone. To debug drone takeoff issue, I would need to see the raw data used by visual odometry, so a rosbag of the 15Hz images would be better. I have seen the drone losing altitude when creating that example (issue that we can actually see at 3:52 in that video), but it was fixed by updating px4 to a newer version. I've found the youtube comment:

|

|

Thanks Mathieu for the reply.

Can you check if the parameters I mentioned above will increase odometry rate ? and are there other parameters to increase it ? Also does increasing camera fps to 30 instead of 15 and keeping resolution to 640*480 increase the odometry ? I will send the rosbag to you. |

|

Administrator

|

If you are using "--Odom/Strategy 1", you may also combine with "--Vis/CorType 1". That would be the fastest vo approach from rtabmap, otherwise you may try VIO approaches like msckf_vio or open_vins. |

|

Hello Mathieu,

I used infrared camera and got the drone to the hover at desire altitude of 0.5m but now when giving nav goal through rviz the drone goes forward but looses this altitude while reaching the goal. the drone touches the ground trying to reach the goal. I am using the same global planner and local planner as you did, but what maybe the issue any ideas you have on this issue ? and are there any resources to solve this kind of problem ? px4 1.15 and latest mavros version |

|

Administrator

|

You may try to debug if the lost of altitude is caused be visual odometry or the on-board EKF fusion. You can upload your data to https://review.px4.io/ to see some graph and can also be shared.

|

|

This post was updated on .

Hello Mathieu,

We are thinking of reducing the camera fps to 6 and resolution to 640*480. Do you thing the visual odometry frequency will be sufficient for drone to perform SLAM and autonomous nav ? I also saw your rp3.ini in this link https://github.com/introlab/rtabmap/wiki/Installation#raspberrypi. How can I load this file in the rtabmap parameters since the github repo has slam.launch only ? what rostopic hz do I need to check for ensuring the odometry is > 10 hz (is it rostopic hz /rtabmap/odom?) by changing different parameters? Or is it impossible to do SLAM and autonomous NAV in drone with RPI4? Also to add, sometime I get 6 hz in rostopic hz /rtabmap/odom while sometimes I get 1-2 hz in rostopic hz /rtabmap/odom. Do you have any idea why is that so ? do you have any ideas how can we try drone mapping and navigation using rpi4 or I mean to say any processing hacks for improvements. Doing remote mapping is also not good for Pi4? Hardware: RP4, px4 , d435 |

|

Administrator

|

6 fps feels too slow, I am not sure the px4 EKF will be able to appropriately fuse vision data, in particular if latency/delay is high. Consider using a VIO approach like msckf_vio designed for drones.

For ROS and RPI3, take a look at this link. You can check rtabmap’s odom rate with rostopic hz and ristopic delay on /rtabmap/odom topic. I would not say impossible, I saw rtabmap working on very small drones with Qualcomm chip (drone flight it was called if I remember), but VIO was computed by some closed-source approach (I think the rate was around 20 Hz). Rtabmap was used only as the slam backend ( loop closure and map management). If the frame rate is dropping to 1 or 2 hz but processing time is still under 200 ms, there could be input camera framerate issue. You may check the rate of all camera topics at the same time to know where the rate drop is coming from. For remote mapping, it could be possible to to slam back end on remote, but I would recommend to do the front end ( vo or VIO) onboard to limit the delays. |

|

This post was updated on .

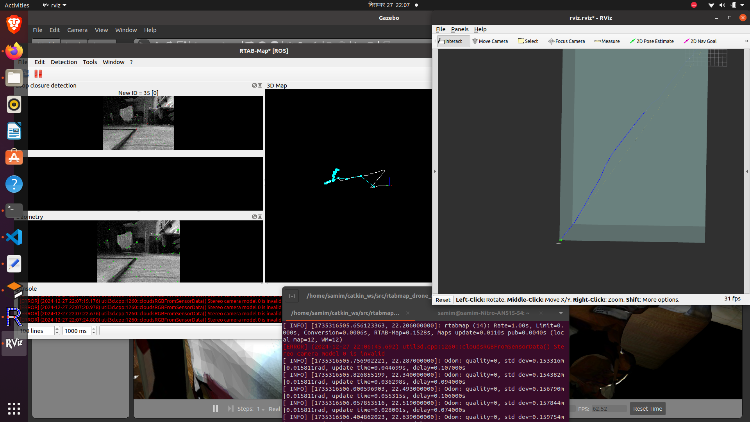

So I can set Odom/Strategy 8 to get the msckf_vio odometry approach right ?

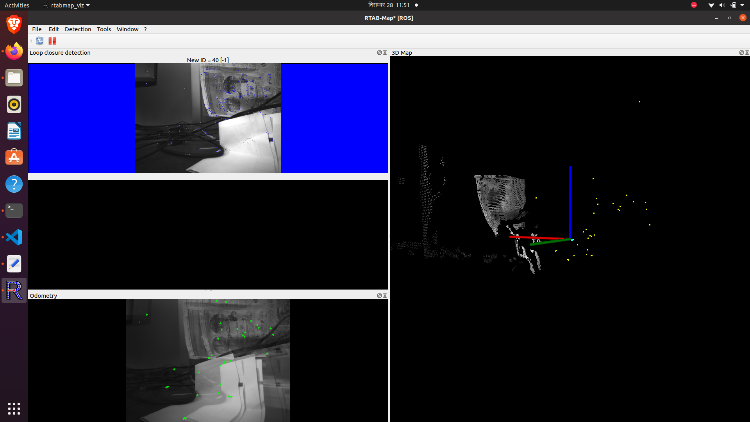

My question is whether msckf_vio will support my infra1+depth? I ran msckf_vio using the two ir camera in simulation and got the following  and in terminal I get this Odom: quality=0, std dev=0.057065m|0.015811rad, update time=0.027484s, delay=0.031000s [ INFO] [1735316669.258054018, 20.008000000]: Odom: quality=0, std dev=0.058607m|0.015811rad, update time=0.062536s, delay=0.099000s [ INFO] [1735316669.500543202, 20.138000000]: Odom: quality=0, std dev=0.060890m|0.015811rad, update time=0.028479s, delay=0.096000s Do I need to use infra1+infra2 for msck_vio? What about the costmap and move_base planning?Or is there another way of remapping slam.launch? If there is can you please provide the launch file. I am guessing using msckf_vio will bring the /rtabmap/odom hz > 10 and rtabmap will give the dense 3d point cloud. Is it right ? currently I'm using this launch file <?xml version="1.0"?>

<launch>

<arg name="localization" default="false"/>

<arg name="rtabmap_viz" default="true"/>

<arg name="ground_truth" default="false"/>

<arg name="sim" default="true"/>

<arg name="use_global_map" default="false"/>

<arg if="$(arg localization)" name="pre_args" default=""/>

<arg unless="$(arg localization)" name="pre_args" default="-d"/>

<node pkg="nodelet" type="nodelet" name="imu_to_tf" args="standalone rtabmap_util/imu_to_tf">

<remap from="imu/data" to="/mavros/imu/data"/>

<param name="fixed_frame_id" value="base_link_stabilized"/>

<param name="base_frame_id" value="base_link"/>

</node>

<!-- To connect rtabmap planning stuff with move_base below -->

<param name="/rtabmap/rtabmap/use_action_for_goal" value="true"/>

<remap from="/rtabmap/move_base" to="/move_base"/>

<!-- VSLAM -->

<param name="/rtabmap/rtabmap/latch" value="false"/> <!-- For some reason, if we latch grid_map, the global costmap inflation layer will create holes on robot path. To avoid holes, republish grid_map on each update (latching disabled). -->

<include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="stereo" value="true"/>

<arg name="args" value="$(arg pre_args) --Odom/Strategy 8 --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="left_image_topic" value="/D435_camera/camera/ir2/image_raw" />

<arg name="right_image_topic" value="/D435_camera/camera/ir/image_raw" />

<arg name="left_camera_info_topic" value="/D435_camera/camera/ir2/camera_info" />

<arg name="right_camera_info_topic" value="/D435_camera/camera/ir/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>

<!-- Costmap

<node pkg="nodelet" type="nodelet" name="camera_points_xyz" args="standalone rtabmap_util/point_cloud_xyz">

<remap from="depth/image" to="/camera/aligned_depth_to_color/image_raw"/>

<remap from="depth/camera_info" to="/camera/aligned_depth_to_color/camera_info"/>

<remap from="cloud" to="camera_cloud" />

<param name="decimation" type="double" value="4"/>

<param name="voxel_size" type="double" value="0.0"/>

<param name="approx_sync" type="bool" value="true"/>

</node> -->

<!-- navigation -->

<node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen">

<remap from="map" to="/rtabmap/grid_map"/>

<remap from="odom" to="/rtabmap/odom"/>

<param name="OmplPlanner/odometry_topic" value="/rtabmap/odom" />

<param name="base_local_planner" value="dwa_local_planner/DWAPlannerROS" />

<rosparam file="$(find rtabmap_drone_example)/param/costmap_common_params.yaml" command="load" ns="global_costmap" />

<rosparam file="$(find rtabmap_drone_example)/param/costmap_common_params.yaml" command="load" ns="local_costmap" />

<rosparam file="$(find rtabmap_drone_example)/param/global_costmap_params.yaml" command="load" />

<rosparam file="$(find rtabmap_drone_example)/param/local_costmap_params.yaml" command="load" />

<rosparam file="$(find rtabmap_drone_example)/param/base_local_planner_params.yaml" command="load" />

</node>

<node name="empty_voxels_markers" pkg="rtabmap_costmap_plugins" type="voxel_markers" args="voxel_grid:=/move_base/local_costmap/voxel_layer/voxel_grid visualization_marker:=/voxels_empty">

<param name="cell_type" value="0"/>

</node>

<node name="marked_voxels_markers" pkg="rtabmap_costmap_plugins" type="voxel_markers" args="voxel_grid:=/move_base/local_costmap/voxel_layer/voxel_grid visualization_marker:=/voxels_marked" />

</launch>

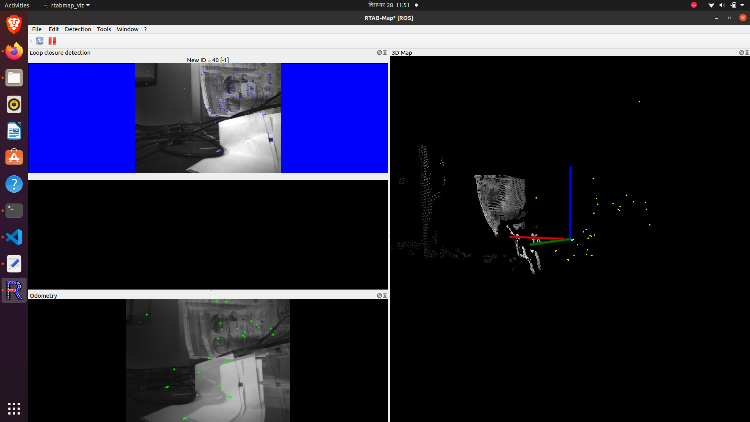

In real drone, I get the following in RTAB gui with Odom/Strategy set to 8. I have done static transform of camera_link to base_link (0 0 0 0 0 0).

the image and features are opposite to each other. and in terminal I get Odom: quality=0, std dev=0.014701m|0.015811rad, update time=0.003791s, delay=0.024658s Odom: quality=0, std dev=0.014775m|0.015811rad, update time=0.031933s, delay=0.045303s Make sure IMU is published faster than data rate! (last image stamp=1735366937.850737 and last imu stamp received=1735366937.843248). Buffering the image until an imu with same or greater stamp is received.What can be the issue. Please guide me through this. |

|

Administrator

|

You can also try msckf_vio package directly if it makes it easier to debug or make sure issues you could see are not related to rtabmap integration. You should be able to use infra left and right. Make sure to disable IR emitter if you do so. If you get "Make sure IMU is published faster than data rate! (last image stamp=1735366937.850737 and last imu stamp received=1735366937.843248). Buffering the image until an imu with same or greater stamp is received.", the approach (or any VIO approaches) may not work properly. What i the frame rate of the IMU? I would expect that IMU delay is always smaller than the the camera delay, so if IMU data received after image, there is something wrong about the driver. |

|

This post was updated on .

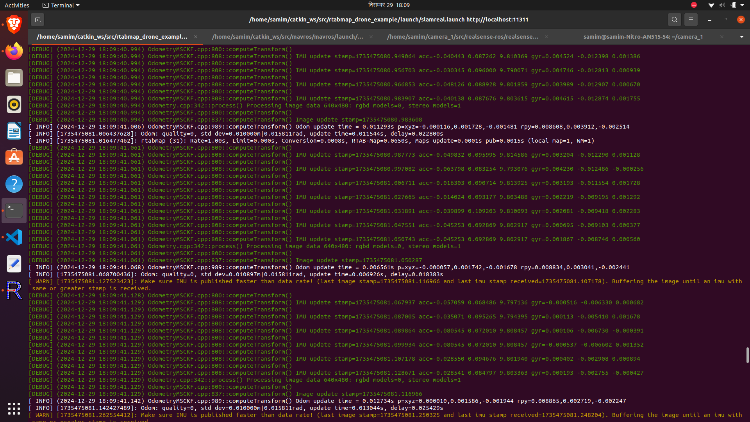

roslaunch rtabmap_launch rtabmap.launch \ rtabmap_args:="--delete_db_on_start" \ left_image_topic:=/camera/infra1/image_rect_raw \ right_image_topic:=/camera/infra2/image_rect_raw \ left_camera_info_topic:=/camera/infra1/camera_info \ right_camera_info_topic:=/camera/infra2/camera_info \ stereo:=true I used this to test if msckf_vio is working with Odom/Strategy set to 8. But as you see in the picture above the odom quality is 0 but in rtabmap gui the features are coming but are in opposite direction the ir images  when setting these same parameters in the slam.launch file for rtabmap_drone_example I get errors. [ INFO] [1735471624.723013700]: Odom: quality=0, std dev=0.013451m|0.015811rad, update time=0.007419s, delay=0.051344s [ INFO] [1735471624.762384399]: Odom: quality=0, std dev=0.013852m|0.015811rad, update time=0.015196s, delay=0.057370s [ INFO] [1735471624.786541107]: Odom: quality=0, std dev=0.014729m|0.015811rad, update time=0.006580s, delay=0.048158s What may be the issue? Also take a look at this on real drone implemenation of MSCKF_VIO  [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.208419 acc=-0.043486 0.096491 9.811581 gyr=-0.007254 0.003139 -0.001783 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.218429 acc=-0.027667 0.084424 9.809449 gyr=-0.007284 0.001291 -0.001756 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.223419 acc=-0.027667 0.084424 9.809449 gyr=-0.007457 0.000939 -0.001546 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.238429 acc=-0.051082 0.075744 9.792781 gyr=-0.007713 0.000141 -0.001119 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.243429 acc=-0.051082 0.075744 9.792781 gyr=-0.006932 -0.000172 -0.000932 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:808::computeTransform() IMU update stamp=1735475088.268429 acc=-0.025140 0.075903 9.807644 gyr=-0.006304 -0.002918 -0.001023 [DEBUG] (2024-12-29 18:09:48.271) Odometry.cpp:342::process() Processing image data 640x480: rgbd models=0, stereo models=1 [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:800::computeTransform() [DEBUG] (2024-12-29 18:09:48.271) OdometryMSCKF.cpp:837::computeTransform() Image update stamp=1735475088.251797 [ INFO] (2024-12-29 18:09:48.276) OdometryMSCKF.cpp:989::computeTransform() Odom update time = 0.005081s p=xyz=0.000053,0.001984,-0.001811 rpy=0.008455,0.006167,-0.002073 [ INFO] [1735475088.277201802]: Odom: quality=0, std dev=0.013525m|0.015811rad, update time=0.005467s, delay=0.025365s Here is a small part of launch file which I think will be necessary <include file="$(find rtabmap_launch)/launch/rtabmap.launch">

<arg name="localization" value="$(arg localization)"/>

<arg name="stereo" value="true"/>

<arg name="args" value="$(arg pre_args) --Odom/Strategy 8 --Optimizer/GravitySigma 0.1 --Vis/FeatureType 10 --Kp/DetectorStrategy 10 --Grid/MapFrameProjection true --NormalsSegmentation false --Grid/MaxGroundHeight 1 --Grid/MaxObstacleHeight 1.6 --RGBD/StartAtOrigin true" />

<arg name="rtabmap_viz" value="$(arg rtabmap_viz)" />

<arg name="frame_id" value="base_link" />

<arg name="odom_guess_frame_id" value="base_link_stabilized" />

<arg name="left_image_topic" value="/camera/infra1/image_rect_raw" />

<arg name="right_image_topic" value="/camera/infra2/image_rect_raw" />

<arg name="left_camera_info_topic" value="/camera/infra1/camera_info" />

<arg name="right_camera_info_topic" value="/camera/infra2/camera_info" />

<arg name="imu_topic" value="/mavros/imu/data"/>

<arg name="wait_imu_to_init" value="true"/>

<arg name="approx_sync" value="true"/>

<arg if="$(arg ground_truth)" name="ground_truth_frame_id" value="world"/>

<arg if="$(arg ground_truth)" name="ground_truth_base_frame_id" value="base_link_gt"/>

</include>"I would expect that IMU delay is always smaller than the the camera delay, so if IMU data received after image, there is something wrong about the driver. " I installed the latest librealsense driver if the driver you are talking about is the camera driver. |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |