Misalignment from Tango multi-session

Hi.

I've been testing RTAB-Map for a few weeks with a Kinect and a Lenovo Phab2 in an office environment. I get better results with the Tango phone, thanks to its odometry. I'm having some trouble aligning multiple sessions. I tried with a set of 3 databases. Two of them align well because they start in front of the same QR code (I fixed 8 QR codes on the office walls to create good anchor points). The third one starts in front of a QR code previously scanned in the other sessions, and finishes in front of the starting point of the other two. I tried replaying with TORO optimization in RTAB-Map standalone, but the sessions are misaligned. Is there a way to align scans properly without starting them in front of the same area? I don't fully understand the RTAB-Map settings.

Database set: https://we.tl/t-jHE5CHe6F8

Config: [Camera] imgRate = 0 mirroring = false calibrationName = type = 3 device = localTransform = 0 0 1 -1 0 0 0 -1 0 imageDecimation = 1 rgbd\driver = 4 rgbd\rgbdColorOnly = false rgbd\distortion_model = rgbd\bilateral = false rgbd\bilateral_sigma_s = 10 rgbd\bilateral_sigma_r = 0.10000000000000001 stereo\driver = 1 stereo\depthGenerated = false stereo\exposureCompensation = false rgb\driver = 0 rgb\rectify = false Openni\oniPath = Openni2\autoWhiteBalance = true Openni2\autoExposure = true Openni2\exposure = 0 Openni2\gain = 100 Openni2\mirroring = false Openni2\stampsIdsUsed = false Openni2\oniPath = Openni2\hshift = 0 Openni2\vshift = 0 Freenect2\format = 1 Freenect2\minDepth = 0.29999999999999999 Freenect2\maxDepth = 12 Freenect2\bilateralFiltering = true Freenect2\edgeAwareFiltering = true Freenect2\noiseFiltering = true Freenect2\pipeline = K4W2\format = 1 RealSense\presetRGB = 0 RealSense\presetDepth = 2 RealSense\odom = false RGBDImages\path_rgb = RGBDImages\path_depth = RGBDImages\scale = 1 RGBDImages\start_index = 0 StereoImages\path_left = StereoImages\path_right = StereoImages\rectify = false StereoImages\start_index = 0 StereoVideo\path = StereoVideo\path2 = StereoZed\resolution = 2 StereoZed\quality = 1 
StereoZed\self_calibration = false StereoZed\sensing_mode = 0 StereoZed\confidence_thr = 100 StereoZed\odom = false StereoZed\svo_path = Images\path = Images\startPos = 0 Images\bayerMode = 0 Images\filenames_as_stamps = false Images\sync_stamps = true Images\stamps = Images\path_scans = Images\scan_transform = 0 0 0 0 0 0 Images\scan_max_pts = 0 Images\scan_downsample_step = 1 Images\scan_voxel_size = 0.025000000000000001 Images\odom_path = Images\odom_format = 0 Images\gt_path = Images\gt_format = 0 Images\max_pose_time_diff = 0.02 Images\imu_path = Images\imu_local_transform = 0 0 1 0 -1 0 1 0 0 Images\imu_rate = 0 Video\path = ScanFromDepth\enabled = false ScanFromDepth\decimation = 8 ScanFromDepth\maxDepth = 4 ScanFromDepth\voxelSize = 0.025000000000000001 ScanFromDepth\normalsK = 20 ScanFromDepth\normalsRadius = 0 ScanFromDepth\normalsUp = false DepthFromScan\depthFromScan = false DepthFromScan\depthFromScanFillHoles = true DepthFromScan\depthFromScanVertical = true DepthFromScan\depthFromScanHorizontal = false DepthFromScan\depthFromScanFillBorders = false Database\path = "J:/Axone/RTAB-map/AxoneSuresnes/AxoneOffice_targetTrack01.db;J:/Axone/RTAB-map/AxoneSuresnes/AxoneOffice_targetTrack02.db" Database\ignoreOdometry = false Database\ignoreGoalDelay = true Database\ignoreGoals = true Database\startPos = 0 Database\cameraIndex = -1 Database\useDatabaseStamps = false [Gui] General\imagesKept = true General\cloudsKept = true General\loggerLevel = 2 General\loggerEventLevel = 3 General\loggerPauseLevel = 3 General\loggerType = 1 General\loggerPrintTime = true General\loggerPrintThreadId = false General\verticalLayoutUsed = true General\imageRejectedShown = true General\imageHighestHypShown = false General\beep = false General\figure_time = true General\figure_cache = true General\notifyNewGlobalPath = false General\odomQualityThr = 50 General\odomOnlyInliersShown = false General\posteriorGraphView = true General\odomDisabled = false General\odomRegistration = 3 
General\gtAlign = true General\showClouds0 = true General\decimation0 = 4 General\maxDepth0 = 0 General\minDepth0 = 0 General\roiRatios0 = 0.0 0.0 0.0 0.0 General\showScans0 = true General\showFeatures0 = false General\showFrustums0 = false General\downsamplingScan0 = 1 General\maxRange0 = 0 General\minRange0 = 0 General\voxelSizeScan0 = 0 General\colorScheme0 = 0 General\opacity0 = 1 General\ptSize0 = 2 General\colorSchemeScan0 = 0 General\opacityScan0 = 1 General\ptSizeScan0 = 2 General\ptSizeFeatures0 = 3 General\showClouds1 = true General\decimation1 = 4 General\maxDepth1 = 0 General\minDepth1 = 0 General\roiRatios1 = 0.0 0.0 0.0 0.0 General\showScans1 = true General\showFeatures1 = true General\showFrustums1 = false General\downsamplingScan1 = 1 General\maxRange1 = 0 General\minRange1 = 0 General\voxelSizeScan1 = 0 General\colorScheme1 = 0 General\opacity1 = 0.75 General\ptSize1 = 2 General\colorSchemeScan1 = 0 General\opacityScan1 = 0.5 General\ptSizeScan1 = 2 General\ptSizeFeatures1 = 3 General\cloudVoxel = 0 General\cloudNoiseRadius = 0 General\cloudNoiseMinNeighbors = 5 General\cloudCeilingHeight = 0 General\cloudFloorHeight = 0 General\normalKSearch = 10 General\normalRadiusSearch = 0 General\scanCeilingHeight = 0 General\scanFloorHeight = 0 General\scanNormalKSearch = 0 General\scanNormalRadiusSearch = 0 General\showGraphs = true General\showLabels = false General\noFiltering = true General\cloudFiltering = false General\cloudFilteringRadius = 0.10000000000000001 General\cloudFilteringAngle = 30 General\subtractFiltering = false General\subtractFilteringMinPts = 5 General\subtractFilteringRadius = 0.02 General\subtractFilteringAngle = 0 General\gridMapShown = false General\gridMapOpacity = 0.75 General\octomap = false General\octomap_depth = 16 General\octomap_2dgrid = true General\octomap_3dmap = true General\octomap_rendering_type = 0 General\octomap_point_size = 5 General\meshing = false General\meshing_angle = 15 General\meshing_quad = true 
General\meshing_texture = false General\meshing_triangle_size = 2 [CalibrationDialog] board_width = 8 board_height = 6 board_square_size = 0.033000000000000002 max_scale = 1 geometry = @ByteArray(\x1\xd9\xd0\xcb\0\x2\0\0\0\0\0\0\0\0\0\0\0\0\x5!\0\0\x3N\0\0\0\0\0\0\0\0\0\0\x5!\0\0\x3N\0\0\0\0\0\0\0\0\a\x80) [Core] Version = 0.16.3 BRIEF\Bytes = 32 BRISK\Octaves = 3 BRISK\PatternScale = 1 BRISK\Thresh = 30 Bayes\FullPredictionUpdate = false Bayes\PredictionLC = 0.1 0.36 0.30 0.16 0.062 0.0151 0.00255 0.000324 2.5e-05 1.3e-06 4.8e-08 1.2e-09 1.9e-11 2.2e-13 1.7e-15 8.5e-18 2.9e-20 6.9e-23 Bayes\VirtualPlacePriorThr = 0.9 DbSqlite3\CacheSize = 10000 DbSqlite3\InMemory = false DbSqlite3\JournalMode = 3 DbSqlite3\Synchronous = 0 DbSqlite3\TempStore = 2 FAST\Gpu = false FAST\GpuKeypointsRatio = 0.05 FAST\GridCols = 4 FAST\GridRows = 4 FAST\MaxThreshold = 200 FAST\MinThreshold = 7 FAST\NonmaxSuppression = true FAST\Threshold = 20 FREAK\NOctaves = 4 FREAK\OrientationNormalized = true FREAK\PatternScale = 22 FREAK\ScaleNormalized = true GFTT\BlockSize = 3 GFTT\K = 0.04 GFTT\MinDistance = 3 GFTT\QualityLevel = 0.001 GFTT\UseHarrisDetector = false GTSAM\Optimizer = 1 Grid\3D = true Grid\CellSize = 0.05 Grid\ClusterRadius = 0.1 Grid\DepthDecimation = 4 Grid\DepthRoiRatios = 0.0 0.0 0.0 0.0 Grid\FlatObstacleDetected = true Grid\FootprintHeight = 0.0 Grid\FootprintLength = 0.0 Grid\FootprintWidth = 0.0 Grid\FromDepth = true Grid\GroundIsObstacle = false Grid\MapFrameProjection = false Grid\MaxGroundAngle = 45 Grid\MaxGroundHeight = 0.0 Grid\MaxObstacleHeight = 0.0 Grid\MinClusterSize = 10 Grid\MinGroundHeight = 0.0 Grid\NoiseFilteringMinNeighbors = 5 Grid\NoiseFilteringRadius = 0.0 Grid\NormalK = 20 Grid\NormalsSegmentation = true Grid\PreVoxelFiltering = true Grid\RangeMax = 5 Grid\RangeMin = 0.0 Grid\RayTracing = false Grid\Scan2dMaxFilledRange = 4.0 Grid\Scan2dUnknownSpaceFilled = false Grid\ScanDecimation = 1 GridGlobal\Eroded = false GridGlobal\FootprintRadius = 0.0 
GridGlobal\FullUpdate = true GridGlobal\MaxNodes = 0 GridGlobal\MinSize = 0.0 GridGlobal\OctoMapOccupancyThr = 0.5 GridGlobal\UpdateError = 0.01 Icp\CorrespondenceRatio = 0.1 Icp\DownsamplingStep = 1 Icp\Epsilon = 0 Icp\Iterations = 30 Icp\MaxCorrespondenceDistance = 0.1 Icp\MaxRotation = 0.78 Icp\MaxTranslation = 0.2 Icp\PM = false Icp\PMConfig = Icp\PMMatcherEpsilon = 0.0 Icp\PMMatcherKnn = 1 Icp\PMOutlierRatio = 0.95 Icp\PointToPlane = false Icp\PointToPlaneK = 5 Icp\PointToPlaneMinComplexity = 0.02 Icp\PointToPlaneRadius = 1.0 Icp\VoxelSize = 0.0 KAZE\Diffusivity = 1 KAZE\Extended = false KAZE\NOctaveLayers = 4 KAZE\NOctaves = 4 KAZE\Threshold = 0.001 KAZE\Upright = false Kp\BadSignRatio = 0.5 Kp\DetectorStrategy = 0 Kp\DictionaryPath = Kp\FlannRebalancingFactor = 2.0 Kp\GridCols = 1 Kp\GridRows = 1 Kp\IncrementalDictionary = true Kp\IncrementalFlann = true Kp\MaxDepth = 0 Kp\MaxFeatures = 1000 Kp\MinDepth = 0 Kp\NNStrategy = 1 Kp\NewWordsComparedTogether = true Kp\NndrRatio = 0.8 Kp\Parallelized = true Kp\RoiRatios = 0.0 0.0 0.0 0.0 Kp\SubPixEps = 0.02 Kp\SubPixIterations = 0 Kp\SubPixWinSize = 3 Kp\TfIdfLikelihoodUsed = true Mem\BadSignaturesIgnored = false Mem\BinDataKept = true Mem\CompressionParallelized = true Mem\DepthAsMask = true Mem\GenerateIds = true Mem\ImageKept = false Mem\ImagePostDecimation = 1 Mem\ImagePreDecimation = 1 Mem\IncrementalMemory = true Mem\InitWMWithAllNodes = false Mem\IntermediateNodeDataKept = false Mem\LaserScanDownsampleStepSize = 1 Mem\LaserScanNormalK = 0 Mem\LaserScanNormalRadius = 0 Mem\LaserScanVoxelSize = 0.0 Mem\MapLabelsAdded = true Mem\NotLinkedNodesKept = true Mem\RawDescriptorsKept = true Mem\RecentWmRatio = 0.2 Mem\ReduceGraph = false Mem\RehearsalIdUpdatedToNewOne = false Mem\RehearsalSimilarity = 0.6 Mem\RehearsalWeightIgnoredWhileMoving = false Mem\STMSize = 10 Mem\SaveDepth16Format = false Mem\TransferSortingByWeightId = false Mem\UseOdomFeatures = true ORB\EdgeThreshold = 31 ORB\FirstLevel = 0 ORB\Gpu = false 
ORB\NLevels = 8 ORB\PatchSize = 31 ORB\ScaleFactor = 1.2 ORB\ScoreType = 0 ORB\WTA_K = 2 Odom\AlignWithGround = false Odom\FillInfoData = true Odom\FilteringStrategy = 0 Odom\GuessMotion = true Odom\Holonomic = true Odom\ImageBufferSize = 1 Odom\ImageDecimation = 1 Odom\KalmanMeasurementNoise = 0.01 Odom\KalmanProcessNoise = 0.001 Odom\KeyFrameThr = 0.3 Odom\ParticleLambdaR = 100 Odom\ParticleLambdaT = 100 Odom\ParticleNoiseR = 0.002 Odom\ParticleNoiseT = 0.002 Odom\ParticleSize = 400 Odom\ResetCountdown = 0 Odom\ScanKeyFrameThr = 0.9 Odom\Strategy = 0 Odom\VisKeyFrameThr = 150 OdomF2M\BundleAdjustment = 1 OdomF2M\BundleAdjustmentMaxFrames = 10 OdomF2M\MaxNewFeatures = 0 OdomF2M\MaxSize = 2000 OdomF2M\ScanMaxSize = 2000 OdomF2M\ScanSubtractAngle = 45 OdomF2M\ScanSubtractRadius = 0.05 OdomFovis\BucketHeight = 80 OdomFovis\BucketWidth = 80 OdomFovis\CliqueInlierThreshold = 0.1 OdomFovis\FastThreshold = 20 OdomFovis\FastThresholdAdaptiveGain = 0.005 OdomFovis\FeatureSearchWindow = 25 OdomFovis\FeatureWindowSize = 9 OdomFovis\InlierMaxReprojectionError = 1.5 OdomFovis\MaxKeypointsPerBucket = 25 OdomFovis\MaxMeanReprojectionError = 10.0 OdomFovis\MaxPyramidLevel = 3 OdomFovis\MinFeaturesForEstimate = 20 OdomFovis\MinPyramidLevel = 0 OdomFovis\StereoMaxDisparity = 128 OdomFovis\StereoMaxDistEpipolarLine = 1.5 OdomFovis\StereoMaxRefinementDisplacement = 1.0 OdomFovis\StereoRequireMutualMatch = true OdomFovis\TargetPixelsPerFeature = 250 OdomFovis\UpdateTargetFeaturesWithRefined = false OdomFovis\UseAdaptiveThreshold = true OdomFovis\UseBucketing = true OdomFovis\UseHomographyInitialization = true OdomFovis\UseImageNormalization = false OdomFovis\UseSubpixelRefinement = true OdomMono\InitMinFlow = 100 OdomMono\InitMinTranslation = 0.1 OdomMono\MaxVariance = 0.01 OdomMono\MinTranslation = 0.02 OdomOKVIS\ConfigPath = OdomORBSLAM2\Bf = 0.076 OdomORBSLAM2\Fps = 0.0 OdomORBSLAM2\MapSize = 3000 OdomORBSLAM2\MaxFeatures = 1000 OdomORBSLAM2\ThDepth = 40.0 OdomORBSLAM2\VocPath = 
OdomViso2\BucketHeight = 50 OdomViso2\BucketMaxFeatures = 2 OdomViso2\BucketWidth = 50 OdomViso2\InlierThreshold = 2.0 OdomViso2\MatchBinsize = 50 OdomViso2\MatchDispTolerance = 2 OdomViso2\MatchHalfResolution = true OdomViso2\MatchMultiStage = true OdomViso2\MatchNmsN = 3 OdomViso2\MatchNmsTau = 50 OdomViso2\MatchOutlierDispTolerance = 5 OdomViso2\MatchOutlierFlowTolerance = 5 OdomViso2\MatchRadius = 200 OdomViso2\MatchRefinement = 1 OdomViso2\RansacIters = 200 OdomViso2\Reweighting = true Optimizer\Epsilon = 0.00001 Optimizer\Iterations = 20 Optimizer\PriorsIgnored = true Optimizer\Robust = false Optimizer\Strategy = 0 Optimizer\VarianceIgnored = false RGBD\AngularSpeedUpdate = 0.0 RGBD\AngularUpdate = 0 RGBD\CreateOccupancyGrid = false RGBD\Enabled = true RGBD\GoalReachedRadius = 0.5 RGBD\GoalsSavedInUserData = false RGBD\LinearSpeedUpdate = 0.0 RGBD\LinearUpdate = 0 RGBD\LocalImmunizationRatio = 0.25 RGBD\LocalRadius = 10 RGBD\LoopClosureReextractFeatures = false RGBD\MaxLocalRetrieved = 2 RGBD\NeighborLinkRefining = false RGBD\NewMapOdomChangeDistance = 0 RGBD\OptimizeFromGraphEnd = false RGBD\OptimizeMaxError = 1 RGBD\PlanAngularVelocity = 0 RGBD\PlanLinearVelocity = 0 RGBD\PlanStuckIterations = 0 RGBD\ProximityAngle = 45 RGBD\ProximityBySpace = true RGBD\ProximityByTime = false RGBD\ProximityMaxGraphDepth = 50 RGBD\ProximityMaxPaths = 3 RGBD\ProximityPathFilteringRadius = 1 RGBD\ProximityPathMaxNeighbors = 0 RGBD\ProximityPathRawPosesUsed = true RGBD\ScanMatchingIdsSavedInLinks = true Reg\Force3DoF = false Reg\RepeatOnce = true Reg\Strategy = 0 Rtabmap\ComputeRMSE = true Rtabmap\CreateIntermediateNodes = false Rtabmap\DetectionRate = 0 Rtabmap\ImageBufferSize = 0 Rtabmap\ImagesAlreadyRectified = true Rtabmap\LoopRatio = 0 Rtabmap\LoopThr = 0.11 Rtabmap\MaxRetrieved = 2 Rtabmap\MemoryThr = 0 Rtabmap\PublishLastSignature = true Rtabmap\PublishLikelihood = true Rtabmap\PublishPdf = true Rtabmap\PublishRAMUsage = false Rtabmap\PublishStats = true 
Rtabmap\SaveWMState = false Rtabmap\StartNewMapOnLoopClosure = false Rtabmap\StatisticLogged = false Rtabmap\StatisticLoggedHeaders = true Rtabmap\StatisticLogsBufferedInRAM = true Rtabmap\TimeThr = 0 Rtabmap\WorkingDirectory = C:/Users/stephane-Axone/Documents/RTAB-Map SIFT\ContrastThreshold = 0.04 SIFT\EdgeThreshold = 10 SIFT\NFeatures = 0 SIFT\NOctaveLayers = 3 SIFT\Sigma = 1.6 SURF\Extended = false SURF\GpuKeypointsRatio = 0.01 SURF\GpuVersion = false SURF\HessianThreshold = 500 SURF\OctaveLayers = 2 SURF\Octaves = 4 SURF\Upright = false Stereo\Eps = 0.01 Stereo\Iterations = 30 Stereo\MaxDisparity = 128.0 Stereo\MaxLevel = 5 Stereo\MinDisparity = 0.5 Stereo\OpticalFlow = true Stereo\SSD = true Stereo\WinHeight = 3 Stereo\WinWidth = 15 StereoBM\BlockSize = 15 StereoBM\MinDisparity = 0 StereoBM\NumDisparities = 128 StereoBM\PreFilterCap = 31 StereoBM\PreFilterSize = 9 StereoBM\SpeckleRange = 4 StereoBM\SpeckleWindowSize = 100 StereoBM\TextureThreshold = 10 StereoBM\UniquenessRatio = 15 VhEp\Enabled = false VhEp\MatchCountMin = 8 VhEp\RansacParam1 = 3 VhEp\RansacParam2 = 0.99 Vis\BundleAdjustment = 1 Vis\CorFlowEps = 0.01 Vis\CorFlowIterations = 30 Vis\CorFlowMaxLevel = 3 Vis\CorFlowWinSize = 16 Vis\CorGuessMatchToProjection = false Vis\CorGuessWinSize = 20 Vis\CorNNDR = 0.6 Vis\CorNNType = 1 Vis\DepthAsMask = true Vis\EpipolarGeometryVar = 0.02 Vis\EstimationType = 1 Vis\FeatureType = 6 Vis\ForwardEstOnly = true Vis\GridCols = 1 Vis\GridRows = 1 Vis\InlierDistance = 0.1 Vis\Iterations = 300 Vis\MaxDepth = 0 Vis\MaxFeatures = 0 Vis\MinDepth = 0 Vis\MinInliers = 20 Vis\PnPFlags = 0 Vis\PnPRefineIterations = 0 Vis\PnPReprojError = 2 Vis\RefineIterations = 5 Vis\RoiRatios = 0.0 0.0 0.0 0.0 Vis\SubPixEps = 0.02 Vis\SubPixIterations = 0 Vis\SubPixWinSize = 3 g2o\Baseline = 0.075 g2o\Optimizer = 0 g2o\PixelVariance = 1.0 g2o\RobustKernelDelta = 8 g2o\Solver = 0

Thanks

Administrator

Hi,

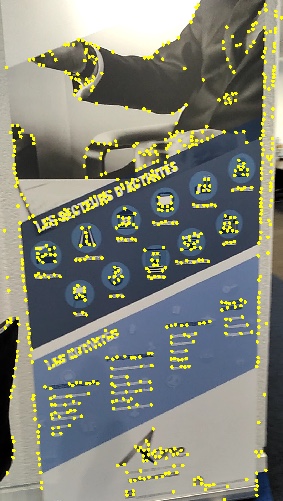

Thank you for sharing the data; it let me test RTAB-Map further and fix some issues. First, I would recommend updating RTAB-Map on your phone: the databases you recorded are from version 0.13.2, while the current version of RTAB-Map on Tango is 0.16.3. This version difference causes this issue, which makes a lot of loop closures get rejected. To run them with RTAB-Map desktop >= 0.16.3, we should then update your databases as explained in the issue (updating the covariance of odometry links from 0.000001 to 0.0001):

    sqlite3 AxoneQRCODE001.db "update Link Set information_matrix=x'000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340' where type=0"
    sqlite3 AxoneQRCODE002.db "update Link Set information_matrix=x'000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340' where type=0"
    sqlite3 AxoneQRCODE003.db "update Link Set information_matrix=x'000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000088C340' where type=0"

On step 5 of the Multi-session mapping tutorial, go to Preferences->Graph Optimization->"Reject loop closures if optimization error ratio..." and set the value to 1 (instead of 0.01). Afterwards, I changed other parameters to be closer to the current defaults of the latest RTAB-Map Tango version:

    Kp/DetectorStrategy=6
    Kp/MaxFeatures=200
    Rtabmap/TimeThr=0
    Vis/MaxFeatures=400
    RGBD/OptimizeMaxError=1
    RGBD/LoopCovLimited=true

"RGBD/LoopCovLimited" is a new parameter I added in this commit. It reduces the cases where GTSAM is unable to optimize the graph, and thus gives better optimizations. "RGBD/OptimizeMaxError=1" is the parameter we set to 1 previously with the GUI. Using "Kp/DetectorStrategy=6" (GFTT/BRIEF) helps to find more loop closures. Lowering "Kp/MaxFeatures" and "Vis/MaxFeatures" greatly reduces the time needed to update the visual word vocabulary. "Rtabmap/TimeThr" is set to 0 to keep all frames in WM, so that wrong graph deformations can be detected better and wrong loop closures rejected (see the section below about QR codes generating a lot of bad loop closures).
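Rather than pasting the long hex literal by hand, it can be generated. The sketch below is an assumption-based helper (the function name `info_matrix_hex` and the script itself are hypothetical, not part of RTAB-Map): it rebuilds a 6x6 row-major matrix of little-endian IEEE-754 doubles, where `000000000088C340` encodes 10000.0 (that is, 1/0.0001) on the diagonal and every other entry is 0.0, then runs the same `sqlite3` update as the commands above on each database passed as an argument. It rewrites the databases in place, so back them up first.

```sh
#!/bin/sh
# Hypothetical helper: rebuild the information-matrix blob for odometry links.
# The matrix is 6x6, row-major, each entry a little-endian IEEE-754 double:
#   000000000088C340 -> 10000.0 (= 1 / 0.0001, the new odometry covariance)
#   0000000000000000 -> 0.0
info_matrix_hex() {
  diag="000000000088C340"
  zero="0000000000000000"
  hex=""
  for row in 0 1 2 3 4 5; do
    for col in 0 1 2 3 4 5; do
      if [ "$row" -eq "$col" ]; then hex="$hex$diag"; else hex="$hex$zero"; fi
    done
  done
  printf '%s' "$hex"
}

# Apply the update to every database given on the command line
# (type=0 selects the odometry links, as in the commands above).
# Back up the databases first: they are modified in place.
if [ "$#" -gt 0 ]; then
  blob=$(info_matrix_hex)
  for db in "$@"; do
    sqlite3 "$db" "update Link Set information_matrix=x'$blob' where type=0" || exit 1
  done
fi
```

Usage, for example: `sh fix_odom_cov.sh AxoneQRCODE001.db AxoneQRCODE002.db AxoneQRCODE003.db`.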
With all those parameters, I can process the 3 databases, then use Tools->Post-processing to detect more loop closures, and get what seems to be a good optimized map:

Comments on QR code usage

When I first tried to process the databases with the parameters included in them, here is what I got:

The problem was that RTAB-Map wrongly detected a loop closure between very similar QR codes, and since it was between two sessions, the loop closure could not be rejected by detecting a huge graph deformation:

I'd suggest finding much more distinctive "anchors". Here are good anchors I've seen in the databases:

I would rather start/end sessions in front of those unique anchors.

Have a good day,
Mathieu

Hi, and thank you for the reply.

I have made some other scans with AprilTags. They are a lot better than QR codes for anchor positions. I still have issues, but not the same ones. This time, the AprilTags are found correctly for loop closure during the first 3 or 4 scans of a batch replay. Then, I got this message at the start of the 5th scan:

"Thrown when a linear system is ill-posed. The most common cause for this error is having underconstrained variables. Mathematically, the system is underdetermined. See the GTSAM Doxygen documentation at http://borg.cc.gatech.edu/ on gtsam::IndeterminantLinearSystemException for more information."

After that, the new scan didn't find any loop closure with the previous scans. I can send you the database with my settings files if you are interested. Anyway, I updated the Tango phone and the standalone version to the latest versions I could find. I will try some more scans and come back soon with more news.

Last question: is there a way to stream live Tango phone data to a Windows standalone version of RTAB-Map, or do I have to install RTAB-Map on Ubuntu and run it from there with Tango ROS Streamer?

Thanks for your great work on this software.

Administrator

Hi,

I got a lot of "Thrown when a linear system is ill-posed." errors with your previous databases on my first trial too, particularly for the third session. Adding the "RGBD/LoopCovLimited" parameter helped to reduce this problem, though it did not eliminate it. If you are using the Windows binaries and want to try this parameter, download the latest snapshot here. Check the parameter under Preferences->RGB-D SLAM->"Limit covariance of non-neighbor links...".

There is no built-in option to stream Tango data to RTAB-Map standalone on Windows; we should indeed use ROS with Tango ROS Streamer for that.

Cheers,
Mathieu

Hi.

I updated the standalone version to V17.7 this morning and replayed my tests from Friday. I scanned a full train station in 4 scans, and I get far better results with V17.5. I noticed that a lot of features are disabled in this new version: for example, the SURF visual word type is unusable, as is GTSAM graph optimization. I replayed the Tango databases with this config in 17.5: ReplayTango01.ini

Databases:
https://we.tl/t-tu1RwCgEsm
https://we.tl/t-GjduYJo35H
https://we.tl/t-fMmfNyYV34
https://we.tl/t-tp5zduYGsJ

I know the scanning conditions were not optimal at all (the first 3 scans in the morning, the other one in the afternoon, projected shadows, direct sunlight exposure), but the result with V17.5 was nearly perfect; it was impressive.

Administrator

It is a bug that GTSAM is not in AppVeyor's artifact: there is a problem with the AppVeyor config, as GTSAM should be included. I don't have access to a Windows computer right now; I'll try to fix this as soon as possible. I was using GTSAM in my tests above.

For SIFT/SURF, I also changed the OpenCV version used in the automated build: it now uses OpenCV2 instead of OpenCV3. I'll let you know when an artifact with GTSAM is available.

Cheers,
Mathieu

Hi.

I found a way to align my scans with the previous version (17.5). I create a new database with these settings: ReplayTango03.ini, replay one database at a time, then save it to a new database. Once each database has been resaved, I batch them into a new database with the same settings. That works much better at finding loop closures across different databases. But I can't align all my scans: RTAB-Map crashes before the end if I put all scans in one batch. The databases are the same as in my previous message, except that I resaved them with the config file in this message.
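The resave-then-merge workflow described above can also be scripted. This is only a sketch under assumptions: it relies on the `rtabmap-reprocess` command-line tool with the `-c config.ini input.db output.db` form used later in this thread (that tool was updated after 17.5, so an older build may not accept these options), the `resaved_` prefix and the `resave_then_merge`/`run` functions are hypothetical, and setting `DRY_RUN=1` only prints the commands instead of running them.

```sh
#!/bin/sh
# Sketch: pass 1 reprocesses each session on its own with the same ini file,
# pass 2 reprocesses all resaved sessions together into one merged database.
CONFIG="${CONFIG:-ReplayTango03.ini}"

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# resave_then_merge OUTPUT.db INPUT1.db [INPUT2.db ...]
resave_then_merge() {
  merged="$1"; shift
  list=""
  for db in "$@"; do
    resaved="resaved_$(basename "$db")"
    run rtabmap-reprocess -c "$CONFIG" "$db" "$resaved" || return 1
    list="${list:+$list;}$resaved"   # input databases are joined with ';'
  done
  run rtabmap-reprocess -c "$CONFIG" "$list" "$merged"
}

# Example: sh resave_merge.sh merged.db MeulanGare001.db MeulanGare002.db
if [ "$#" -gt 1 ]; then
  resave_then_merge "$@"
fi
```

Keeping each pass as a separate process also makes it easier to see at which session a crash happens.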

Administrator

Hi,

I fixed the problem with the artifacts: GTSAM is included, as well as SURF/SIFT. I tried your ini file with this version and I can process all databases. However, they don't all merge together automatically, and when I try to reopen the database in rtabmap-databaseViewer, GTSAM fails to optimize the map. I haven't yet tried the new option I described in that post to avoid this; I'll test it tomorrow. Note that on my computer, I cannot show the map online (Preferences->3D Rendering, uncheck the Map column) as I don't have enough RAM (only 6 GB on my Windows computer). I also have to disable the following setting in Preferences->General Settings (GUI): "Insert new data received in the GUI cache. Used to show the loop closure image and the 3D Map." The crash at the end may be related to running out of memory (how much RAM do you have?). In the ini file, also set "RGBD\LoopClosureReextractFeatures" to false to speed up loop closure estimation (that parameter is deprecated, as in newer versions descriptors are re-matched on loop closures).

Cheers,
Mathieu
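If you prefer to change that value in the ini file from a script rather than by hand, here is a minimal sketch (the `set_ini_value` function is hypothetical, not part of RTAB-Map). It assumes one `key = value` entry per line and ignores `[Section]` headers, so it changes the first matching key whatever its section. The key is passed through the environment because `awk -v` would interpret the backslash in `RGBD\LoopClosureReextractFeatures` as an escape sequence.

```sh
#!/bin/sh
# Hypothetical helper: set_ini_value FILE KEY VALUE
# Replaces the first line "KEY = old" (spaces around '=' optional) with
# "KEY = VALUE". Returns 1 and leaves FILE unchanged if KEY is not found.
set_ini_value() {
  file=$1
  tmp="$file.tmp.$$"
  # KEY/VALUE are read from ENVIRON so that "\L" is not treated as an escape.
  INI_KEY=$2 INI_VALUE=$3 LC_ALL=C awk '
    BEGIN { key = ENVIRON["INI_KEY"]; value = ENVIRON["INI_VALUE"] }
    !done {
      line = $0
      sub(/^[ \t]+/, "", line)
      if (index(line, key) == 1 && substr(line, length(key) + 1) ~ /^[ \t]*=/) {
        print key " = " value
        done = 1
        next
      }
    }
    { print }
    END { exit (done ? 0 : 1) }
  ' "$file" > "$tmp" && mv "$tmp" "$file" || { rm -f "$tmp"; return 1; }
}
```

Usage, for example: `set_ini_value ReplayTango03.ini 'RGBD\LoopClosureReextractFeatures' false` (single quotes keep the backslash).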

Hi

I have 32 GB of RAM and a GTX 1080. It's probably not that (it crashed at 24 GB when I retried). It generally crashes when I use other graphics software like Photoshop or 3ds Max.

I have another question. Imagine 5 scans, as in my train station. Scans 1, 2, 3 and 4 align well in a new database. Scans 2, 4 and 5 align well in another new database. Why, when I replay these 2 new databases in a batch, can't RTAB-Map find loop closures for the exact same pictures? Is there a way to use the new poses (camera locations calculated during replay) of the new databases to help align all the scans?

Thanks for all your help, it's very useful.

Administrator

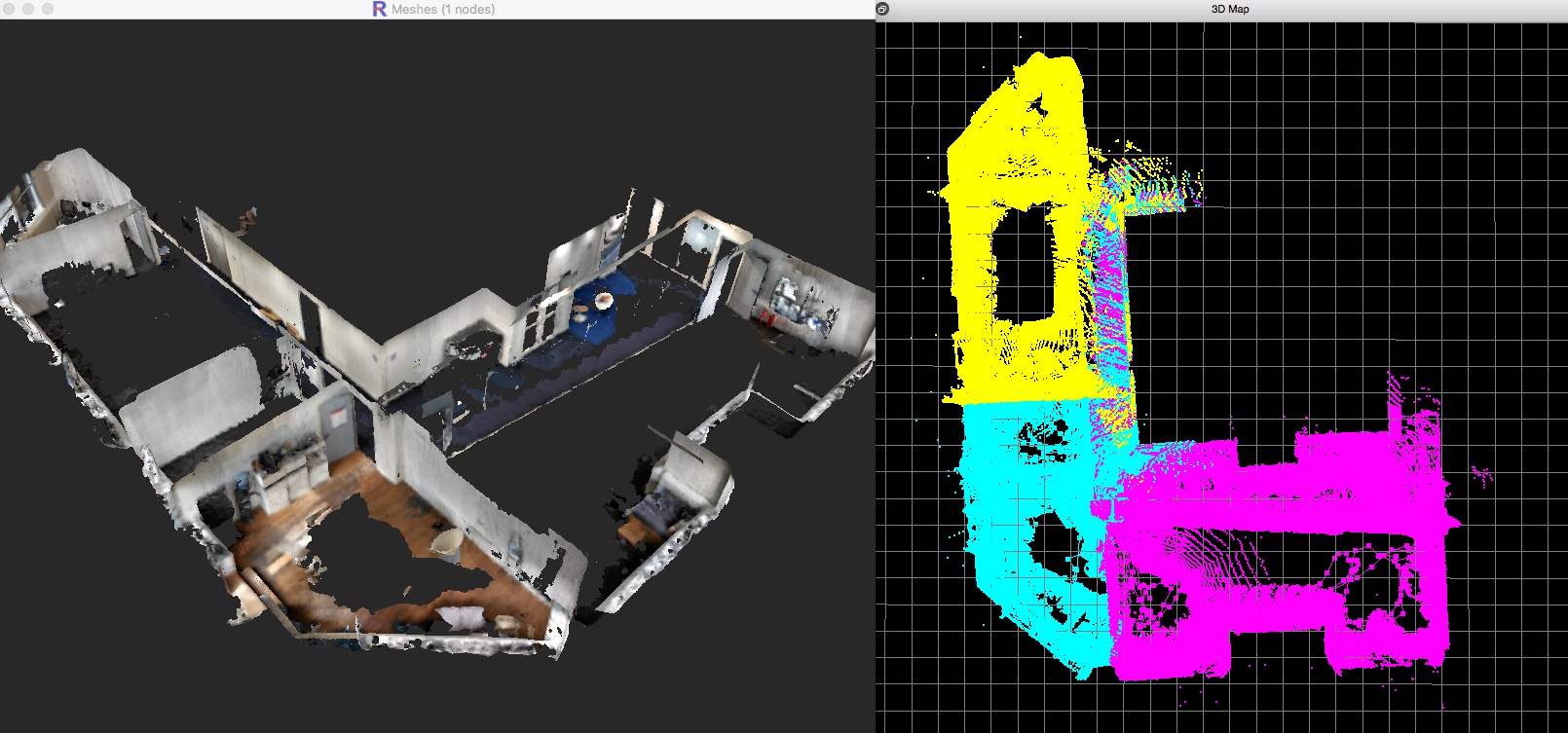

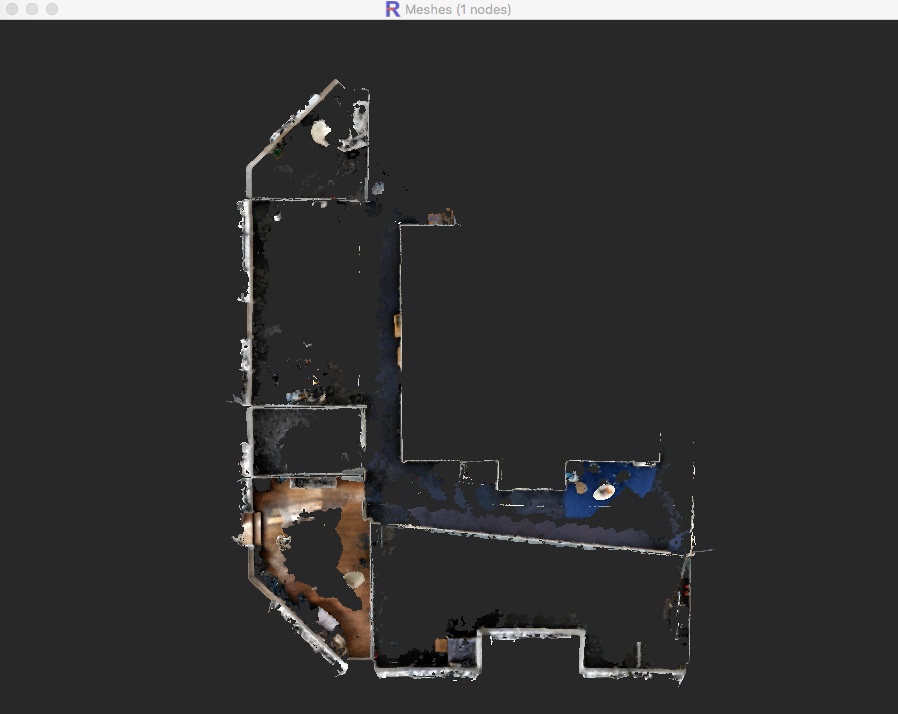

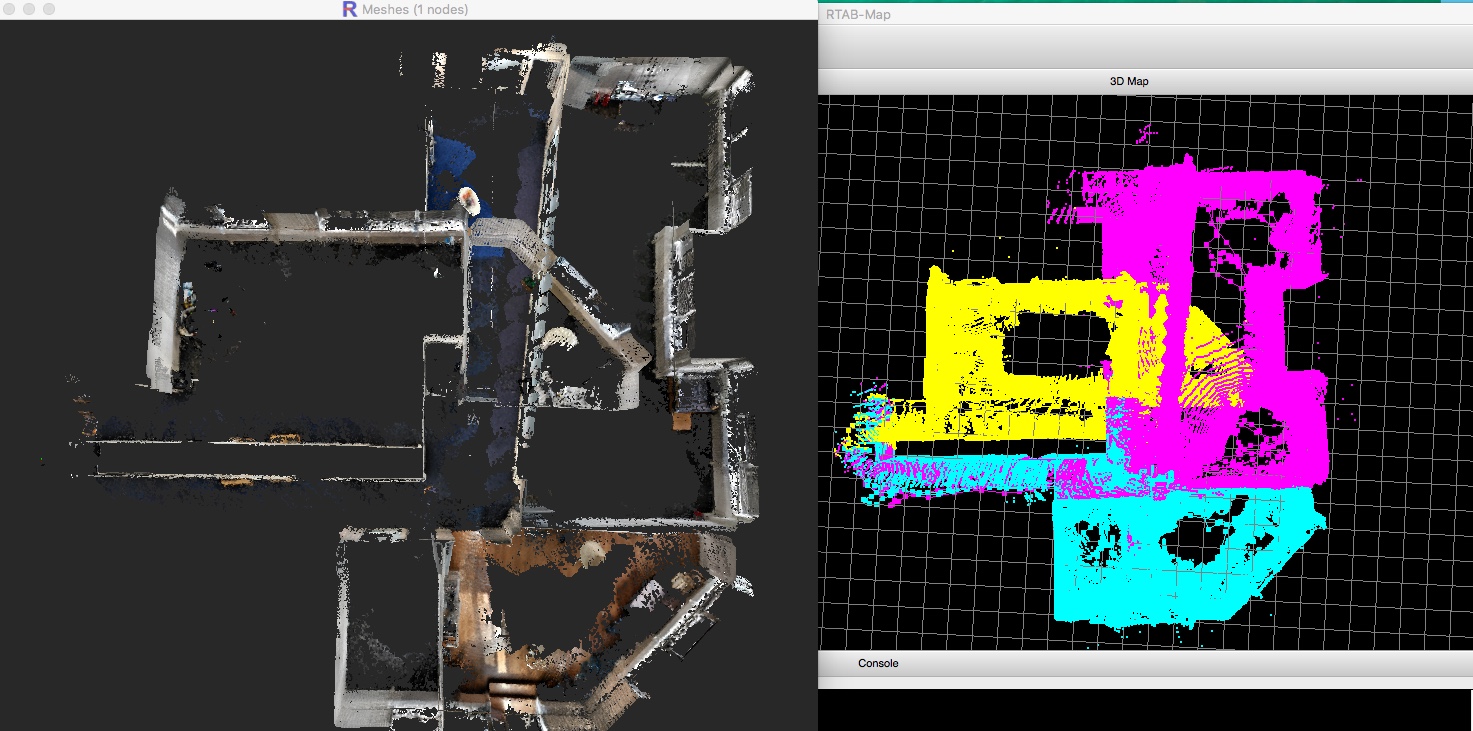

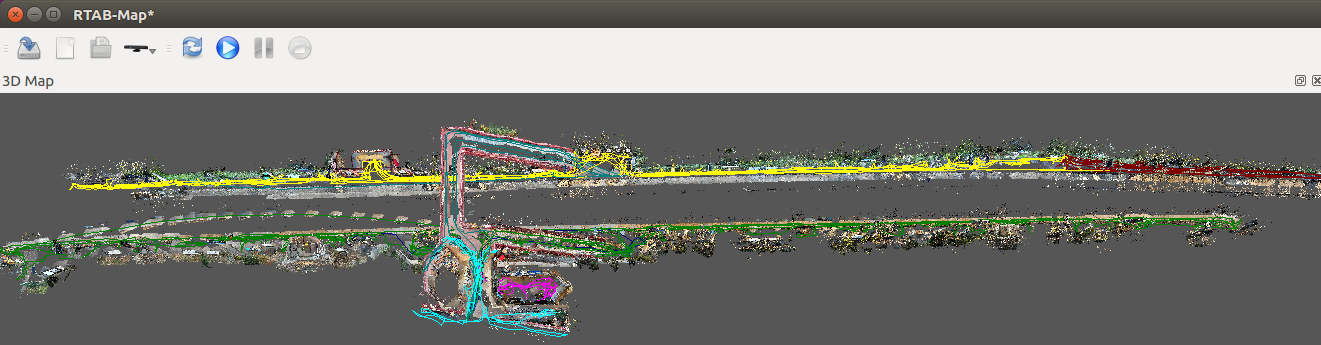

Hi,

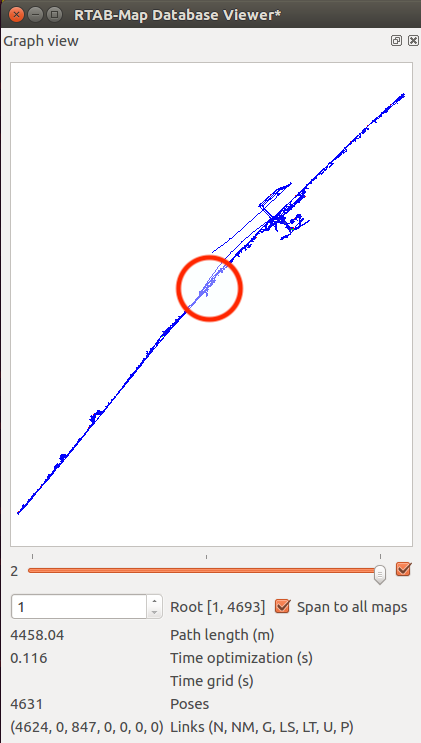

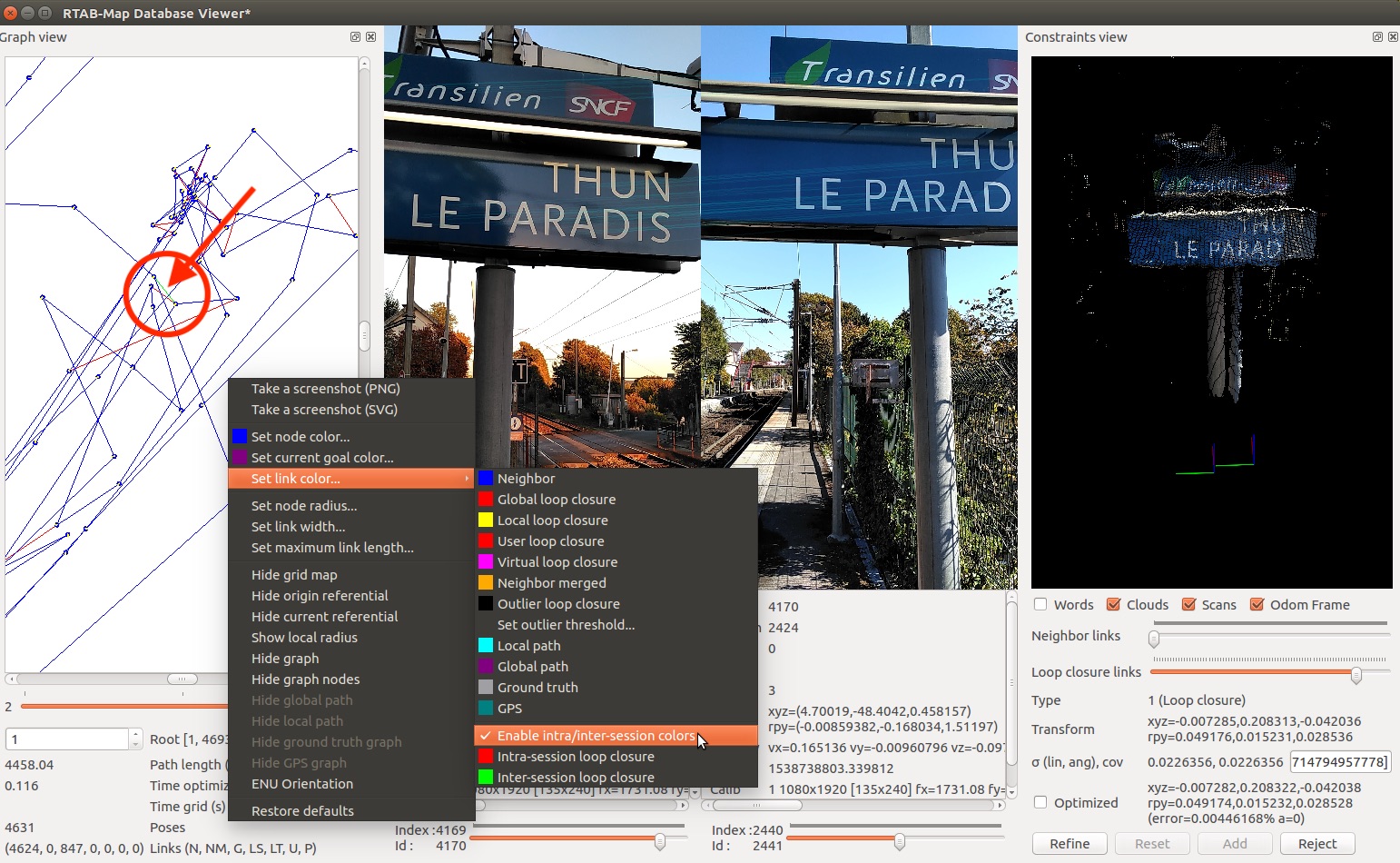

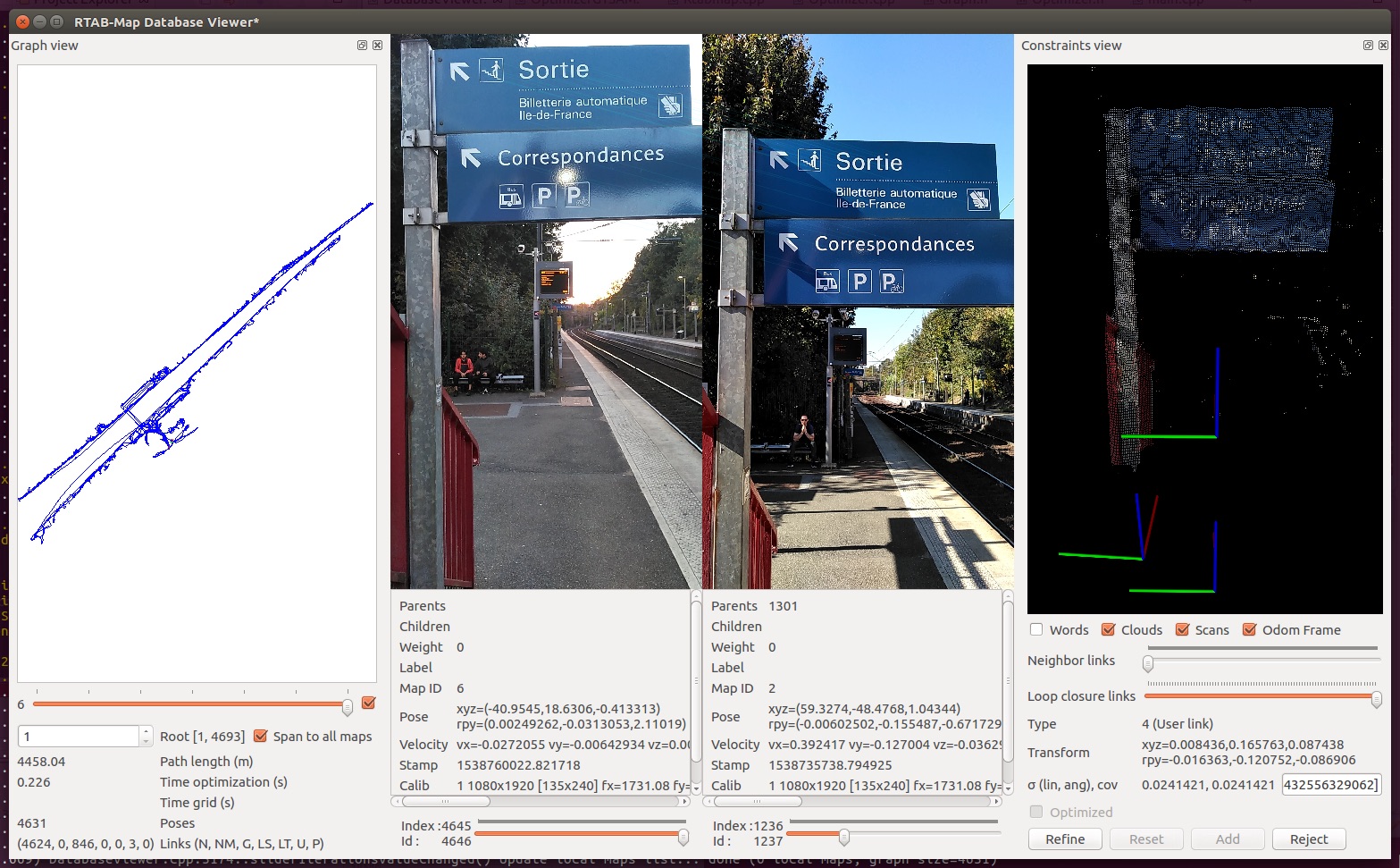

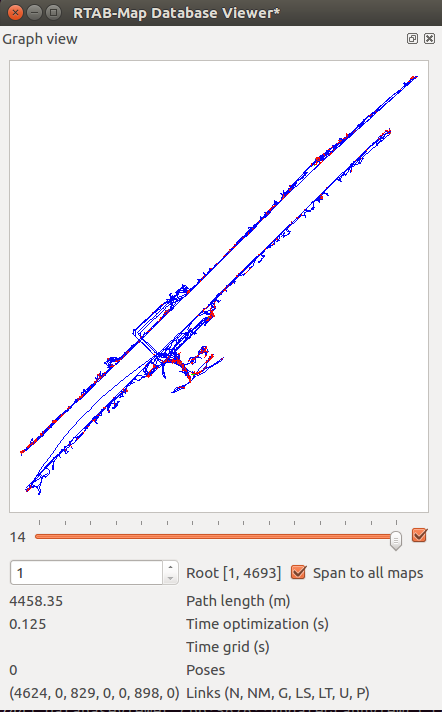

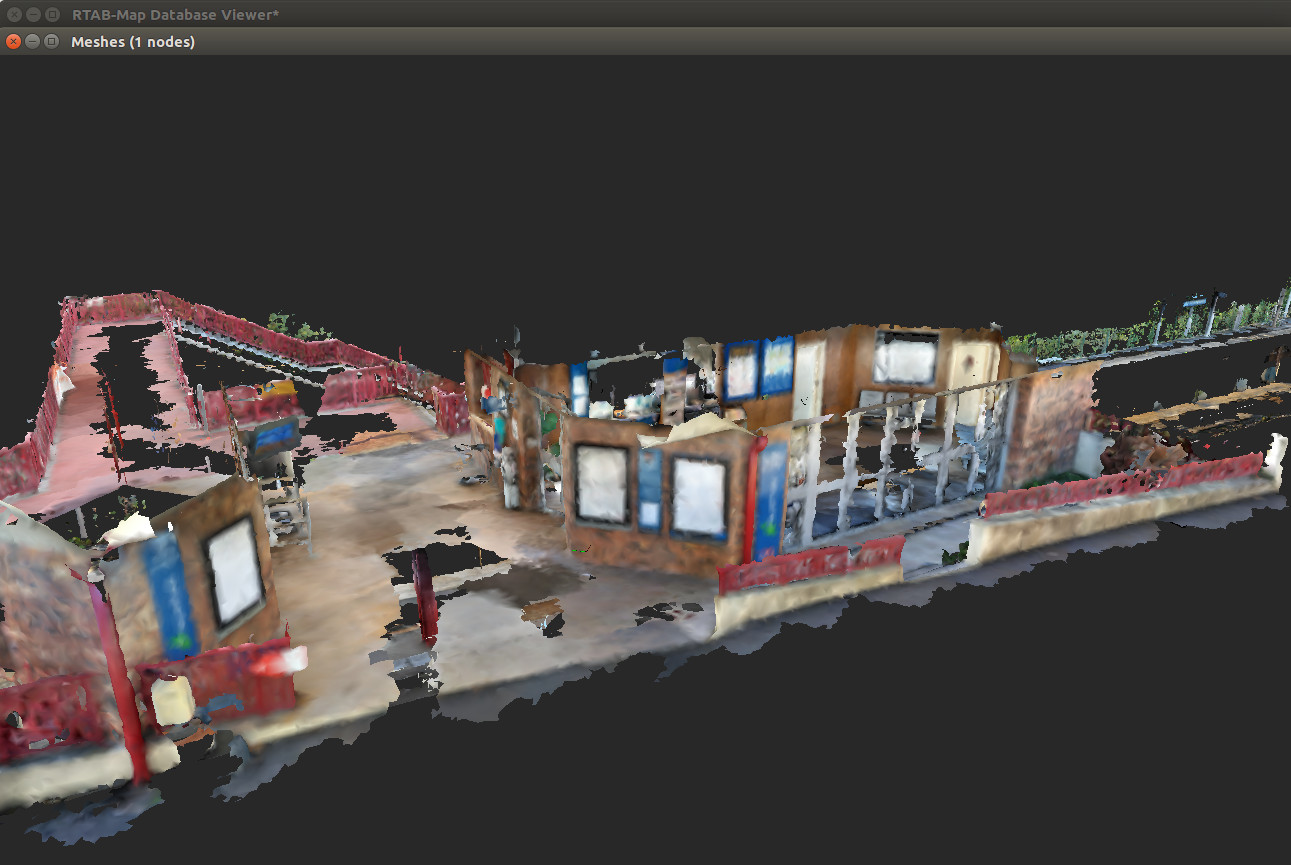

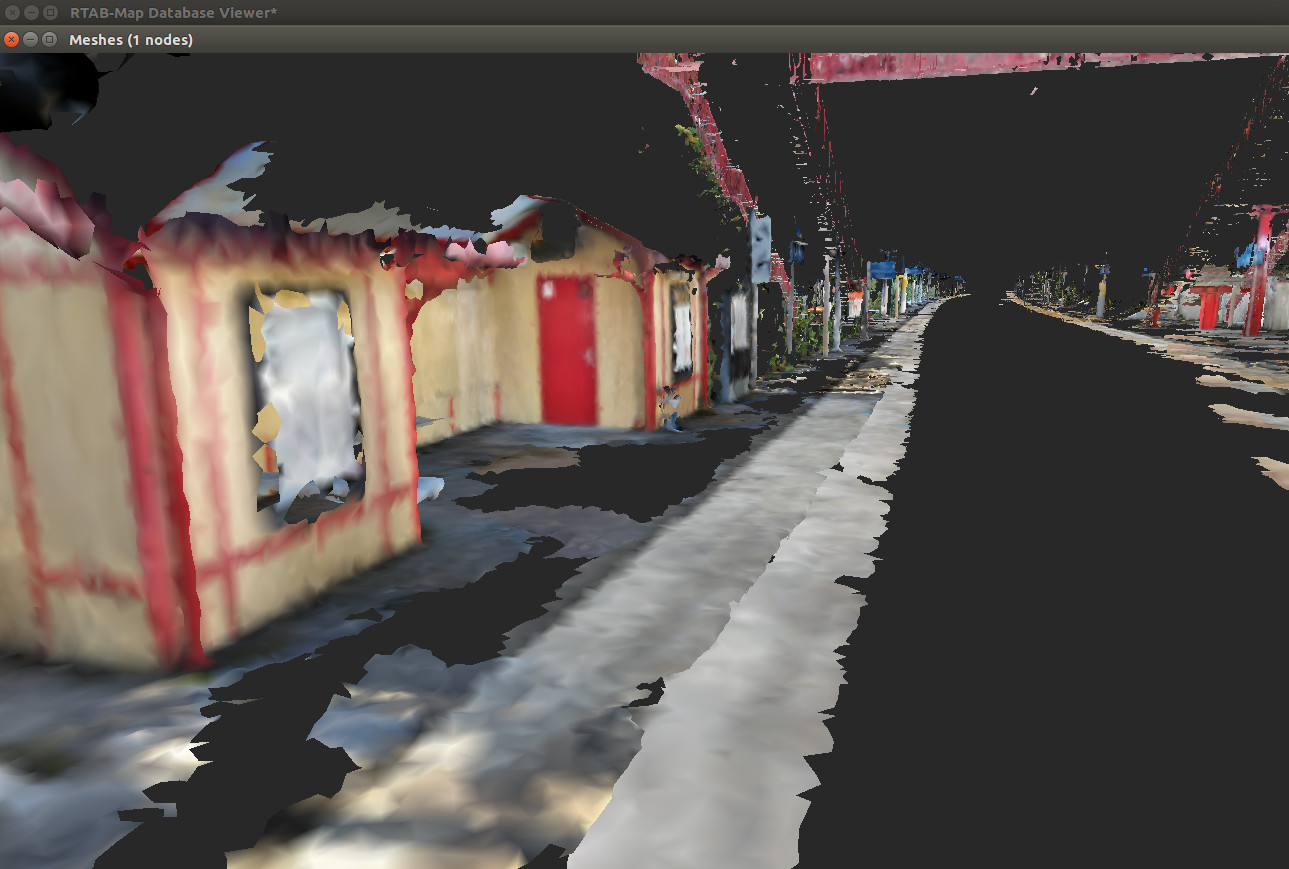

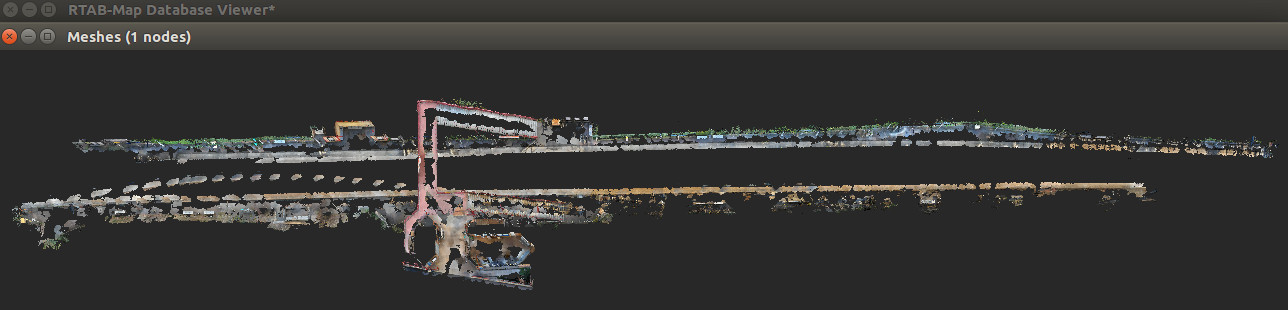

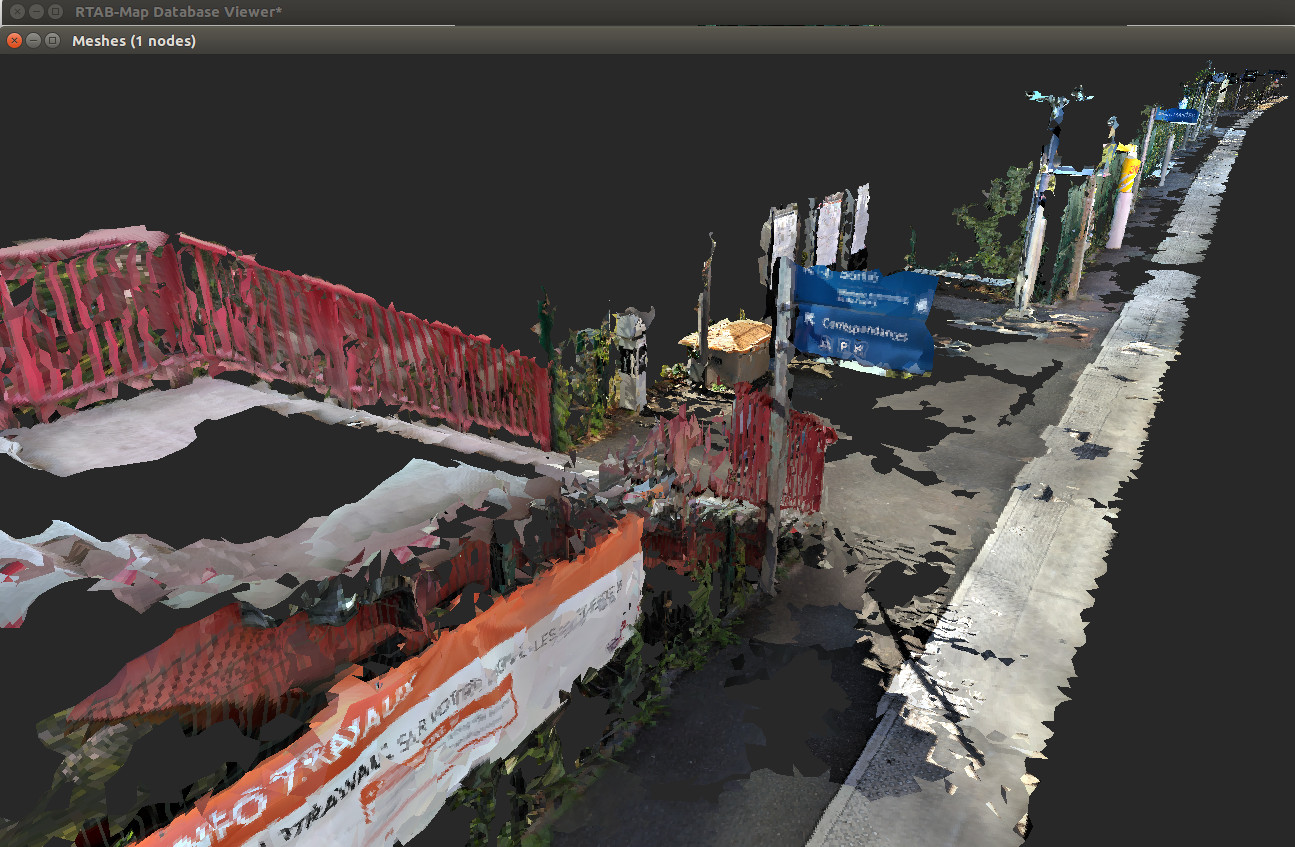

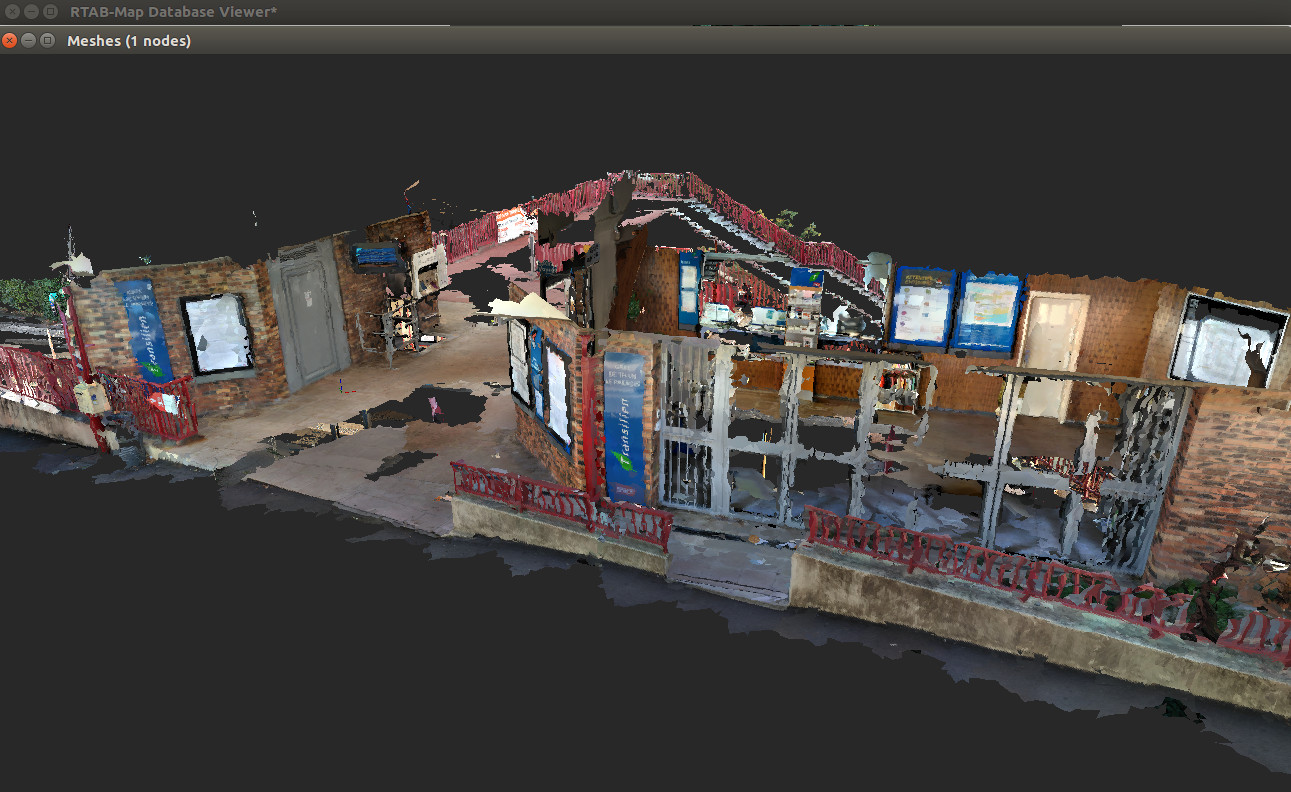

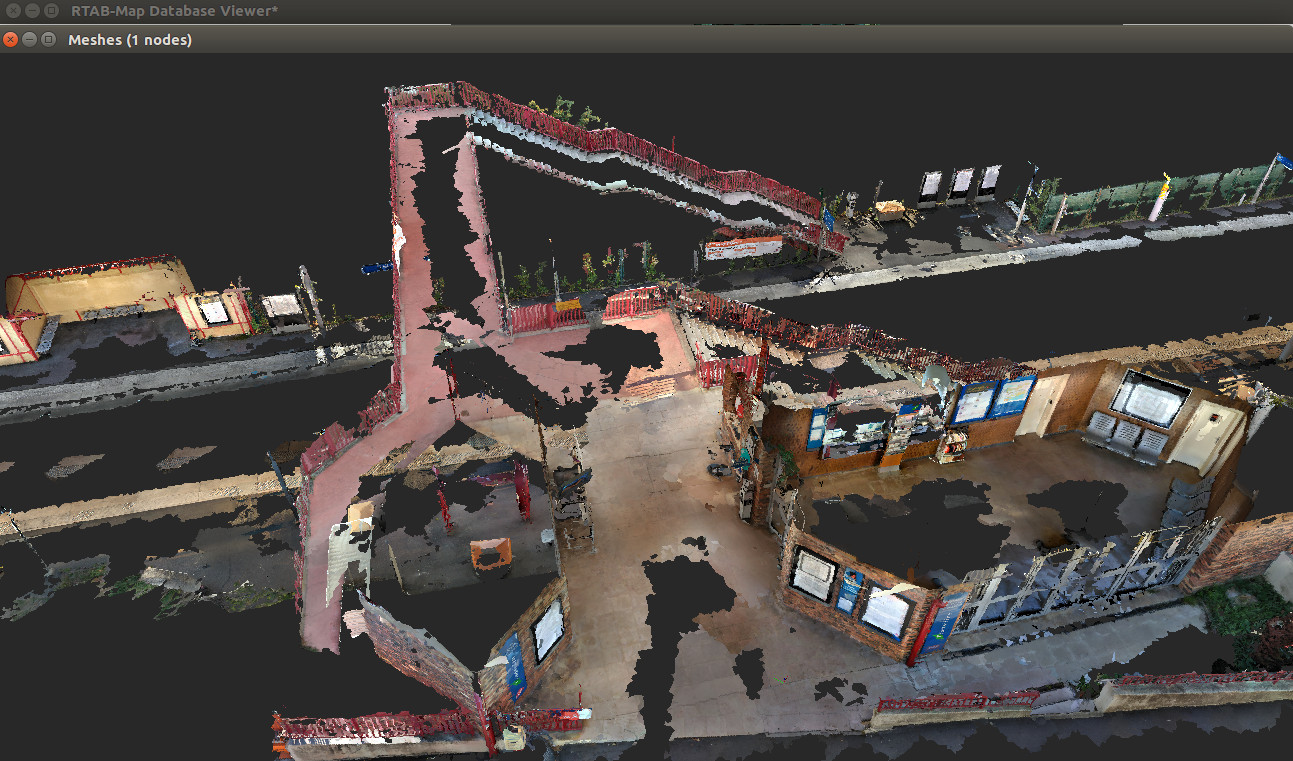

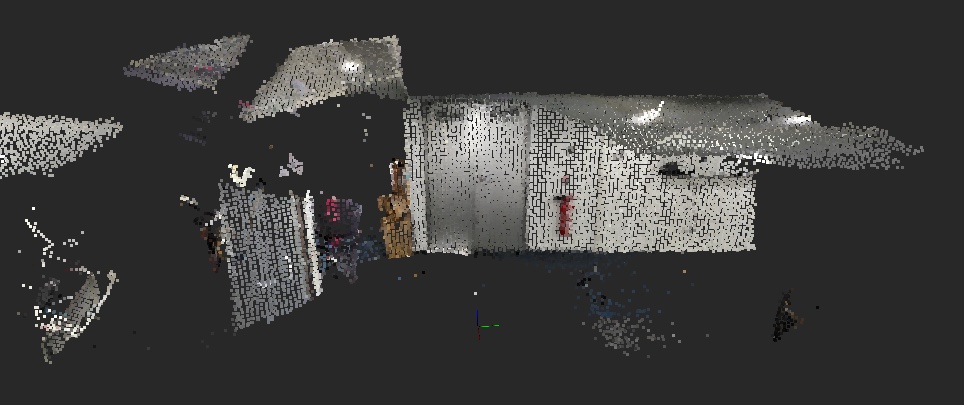

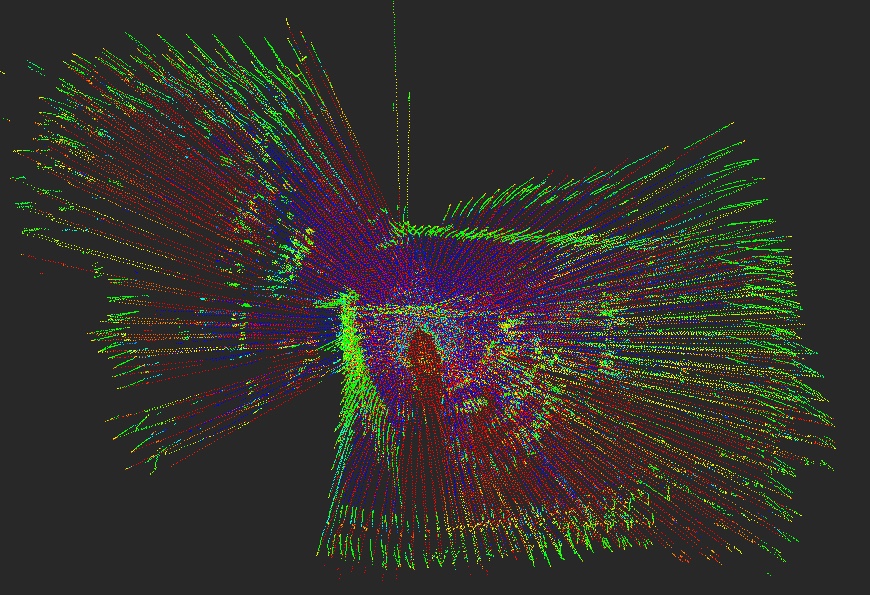

I tested your databases over the past two days and I am now able to merge all maps with GTSAM. Here is what I did with the latest version (I updated the code to make it work).

1) I updated the "rtabmap-reprocess" tool for convenience, instead of using the GUI to reprocess all databases:

    $ rtabmap-reprocess --uwarn \
        -c ReplayTango03.ini \
        --RGBD/LoopClosureReextractFeatures false \
        --RGBD/OptimizeMaxError 3 \
        --RGBD/LoopCovLimited true \
        "MeulanGare001.db;MeulanGare002.db;MeulanGare003.db;MeulanGareQuai002.db;MeulanGarfTransition.db" \
        merged.db

2) Open the resulting map (merged.db) in rtabmap-databaseViewer; in Graph View you would have:

3) The red circle shows a wrong loop closure that happened between two sessions. To see better which link is the wrong one, enable inter-session colors and zoom in on the red circle above; you would see a green link right there (the corresponding images are also shown):

4) Remove that link with the Constraints View: navigate the loop closures until you get 4170 <-> 2441, then click Reject.

5) Add the following link to merge the maps manually: 4646 <-> 1237

6) At this point, all maps should be linked together. To make the map straighter between the two sides of the railroad, click on Edit->Detect More loop closures. Afterwards, the two sidewalks should be almost parallel.

Example exporting the cloud at 5 cm voxel size:

With paths of the different sessions colored:

EDIT: Some other exports:

(Colored Mesh)

Note that the curved path at the bottom left seems to be a big drift from Google Tango's VIO (not sure what happened), which cannot be corrected by RTAB-Map.

(Textured Mesh)

EDIT2: I think it is one of the biggest 3D scans I've seen with Google Tango: 4.5 km! Thx!

Cheers,
Mathieu
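RTAB-Map does the voxel filtering itself when exporting; the awk sketch below only illustrates what a 5 cm voxel grid does to a cloud and is not RTAB-Map's implementation. It assumes a hypothetical plain-text input with one `x y z` point per line (in meters), groups the points by the 5 cm cube they fall into, and outputs one centroid per occupied cube, which is why the exported cloud gets lighter without losing its overall shape.

```sh
#!/bin/sh
# Voxel-grid downsampling sketch: reads "x y z" lines on stdin and prints
# the centroid of the points falling in each occupied cube of side VOXEL
# (first argument, default 0.05 m), in order of first appearance.
voxel_filter() {
  LC_ALL=C awk -v v="${1:-0.05}" '
    # floor() for possibly negative numbers (awk int() truncates toward 0)
    function fl(x) { return (x >= 0 || x == int(x)) ? int(x) : int(x) - 1 }
    NF >= 3 {
      key = fl($1 / v) " " fl($2 / v) " " fl($3 / v)
      if (!(key in count)) order[cells++] = key
      count[key]++
      sx[key] += $1; sy[key] += $2; sz[key] += $3
    }
    END {
      for (i = 0; i < cells; i++) {
        k = order[i]
        printf "%.3f %.3f %.3f\n", sx[k] / count[k], sy[k] / count[k], sz[k] / count[k]
      }
    }'
}
```

Usage, for example: `voxel_filter 0.05 < cloud.xyz > cloud_5cm.xyz` (file names hypothetical).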

Hi Mathieu. I made some other tests by starting each scan where I finished the previous one, and a good result is much easier to obtain with the right settings. Thanks for all your help.

We are looking for another solution, like the Kaarta Stencil or GeoSLAM ZEB-REVO, which can measure distances up to 100 m and align scans by loop closure like RTAB-Map. But the price is huge ($55,000 for the ZEB-Horizon). Do you know a cheaper all-in-one device that can produce the same results and works with RTAB-Map, or do we have to develop our own device, like some others on this forum? Is it hard to develop and make it work, given how expensive a pre-built device is? If I attach a lidar to my Tango, is there a way to match the lidar scans to the Tango post-processed odometry and IR scans?

Thank you

Administrator

Hi,

When scanning large areas, lidar-based scanners are more convenient than Tango because of their larger field of view and range. You can add the Paracosm PX-80 to your list. RTAB-Map can also be used with lidars, so in theory it is possible to reproduce those devices. For example, you could combine a Velodyne and a camera with RTAB-Map to get a colored point cloud. The Velodyne could be used alone (or with an IMU) to already get an accurate pose estimation (e.g., odometry), and the camera could be used for loop closure in very large areas. If you can synchronize the clock of Tango with an external lidar, you could feed the poses from Tango and the scans to rtabmap (e.g., possibly using Tango ROS Streamer and publishing the transform between the VIO reference frame and the lidar via TF). RTAB-Map can already create surfaces of the environment using the lidar data contained in the database and apply texture from the camera on them. However, there is probably some non-trivial tuning of rtabmap to do to get good results that I cannot think of right now.

Cheers,
Mathieu
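One way to picture "feeding the poses from Tango and the scans" once the clocks are synchronized is to pair each lidar scan with the Tango pose closest in time, and drop scans with no pose close enough. The sketch below is a hypothetical offline illustration (the `match_stamps` function and its file format are assumptions, and this is not what rtabmap or TF does internally): it reads two text files with one timestamp in seconds per line, and uses a default tolerance of 0.02 s, the value of `Images\max_pose_time_diff` in the config posted at the start of this thread.

```sh
#!/bin/sh
# Hypothetical helper: match_stamps POSES_FILE SCANS_FILE [MAX_DIFF]
# For each scan stamp, print "scan_stamp pose_stamp" using the closest pose
# stamp, or "scan_stamp none" if it is more than MAX_DIFF seconds away.
# POSES_FILE must not be empty (the NR == FNR idiom relies on it).
match_stamps() {
  LC_ALL=C awk -v maxd="${3:-0.02}" '
    NR == FNR { pose[n++] = $1 ""; next }   # first file: keep stamps as strings
    {
      found = 0
      for (i = 0; i < n; i++) {             # linear search, fine for a sketch
        d = $1 - pose[i]
        if (d < 0) d = -d
        if (!found || d < best_d) { found = 1; best_d = d; best = pose[i] }
      }
      if (found && best_d <= maxd + 0) printf "%s %s\n", $1, best
      else printf "%s none\n", $1
    }' "$1" "$2"
}
```

With sorted stamps, a two-pointer walk over both files would avoid the quadratic search; the linear version is kept here for readability.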

Hi,

If I understand correctly, a lidar doesn't need an IMU or a camera to work with RTAB-Map and can be used alone for mapping? Does the camera only provide color for the point cloud, and is it not used for loop closure? We already have the M8 lidar from Quanergy. It's an 8-layer 360° lidar. Do you think this device can be used for mapping?

Thanks,
Stephane

Administrator

Hi,

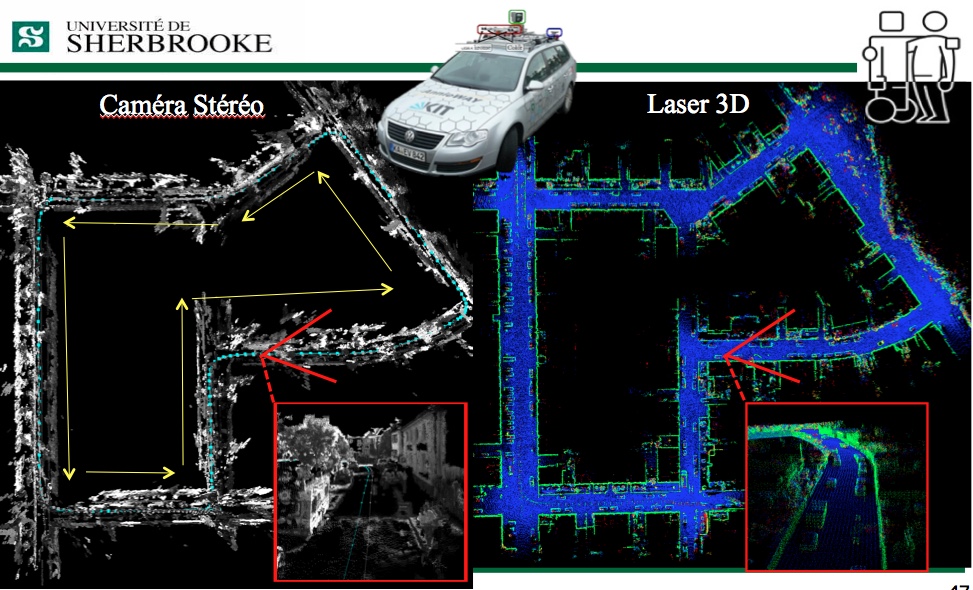

It is quite recent, but we can indeed use rtabmap without a camera, with only a lidar for mapping. In this mode, however, visual loop closure detection is disabled, but proximity detection can work (to find small loop closures). If we want to add a camera only for coloring point clouds or texturing a mesh generated from the lidar, there is some work to do on the export dialog (this has never been tried yet). Yes, 3D lidars like Quanergy/Ouster/Velodyne can be used in rtabmap. In this paper, we evaluated RTAB-Map with a Velodyne for autonomous cars (KITTI dataset).

Hand-held 3D lidar scanning is still in development. I recently got my hands on a Velodyne PUCK, so some examples may appear in the coming months.

Cheers,
Mathieu

Hi,

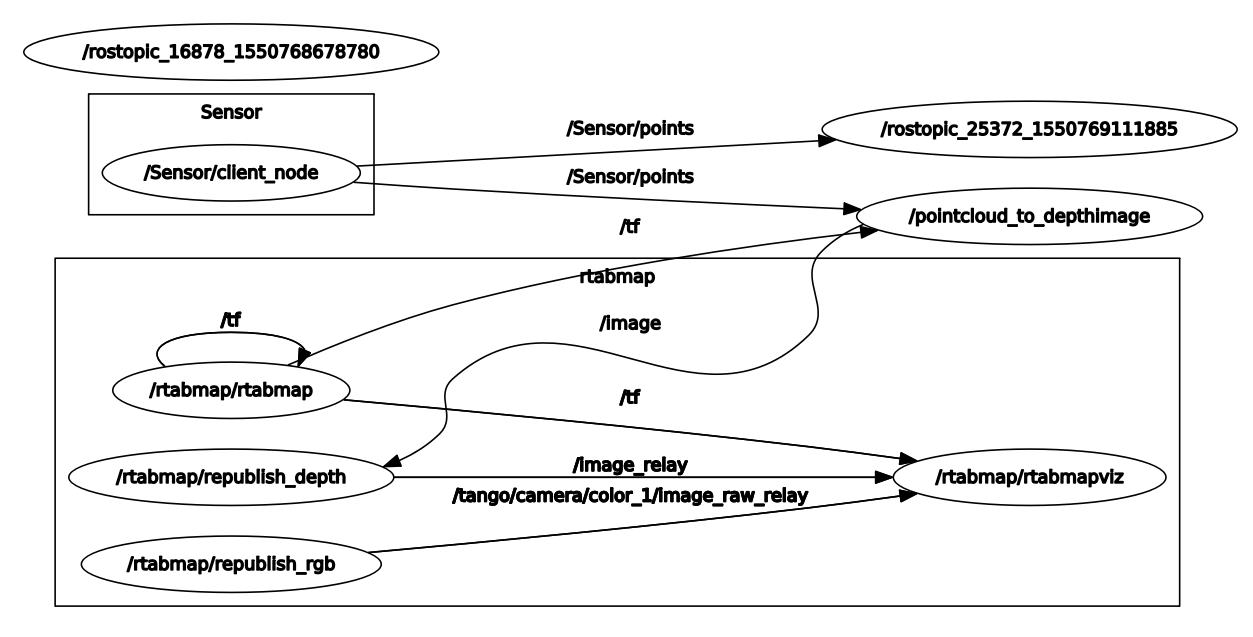

I'm currently having some trouble setting up the Quanergy lidar with rtabmap and rtabmap_ros. As far as I understand, the quanergy_client_ros node I have publishes its point data on a topic named `/Sensor/points`. However, I can't see any points in rtabmap. Here are the steps I followed:

First, I launch quanergy_client_ros with a basic launch file: `roslaunch quanergy_client_ros client.launch`

client.launch:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<launch>
  <arg name="host" default="10.10.1.66"/>
  <arg name="ns" default="Sensor" />
  <arg name="return" default="0" />
  <arg name="maxCloudSize" default="-1" />
  <group ns="$(arg ns)">
    <node name="client_node" pkg="quanergy_client_ros" type="client_node" args="--host $(arg host) --settings $(find quanergy_client_ros)/settings/client.xml --frame $(arg ns) --return $(arg return) --max-cloud $(arg maxCloudSize)" required="true" output="screen"/>
  </group>
</launch>
```

Then I listen to the `/Sensor/points` topic to make sure that the lidar and ROS are communicating on this particular topic: `rostopic hz /Sensor/points`

Then I run another ROS node, `pointcloud_to_depthimage`, and remap its `cloud` input to the `/Sensor/points` topic so that rtabmap can get the point cloud from the lidar and display it. This step may be where I'm going wrong: `rosrun rtabmap_ros pointcloud_to_depthimage cloud:=/Sensor/point _fixed_frame_id:=start_of_service _decimation:=8 _fill_holes_size:=5`

Finally, I launch rtabmap_ros with the `rtabmap.launch` file. It opens rtabmap, but the point cloud doesn't appear: `roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2" visual_odometry:=false odom_frame_id:="start_of_service" frame_id:="device" rgb_topic:=/tango/camera/color_1/image_raw depth_topic:=/image camera_info_topic:=/tango/camera/color_1/camera_info compressed:=true depth_image_transport:=raw approx_sync:=false`

Do you have any idea where the problem is?
Thank you.
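For context on the `pointcloud_to_depthimage` step: conceptually, that node expresses each cloud point in the camera's optical frame and projects it through the pinhole intrinsics from `camera_info` to build a depth image registered to the RGB camera. The pure-Python sketch below illustrates only that projection. It assumes the points are already in the camera frame (x right, y down, z forward), that `decimation` divides both the image size and the intrinsics, and that the nearest point wins when several land on the same pixel. The function name `project_to_depth` is hypothetical; this is not rtabmap_ros's implementation, and it skips the TF lookup and hole filling that the real node's `fixed_frame_id` and `fill_holes_size` parameters relate to.

```python
from typing import Iterable, List, Tuple


def project_to_depth(
    points: Iterable[Tuple[float, float, float]],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int, decimation: int = 1,
) -> List[List[float]]:
    """Project camera-frame points into a (height//decimation) x (width//decimation)
    depth image in meters; 0.0 marks pixels that received no point."""
    w, h = width // decimation, height // decimation
    # Decimating the image scales the intrinsics by the same factor.
    fx, fy, cx, cy = fx / decimation, fy / decimation, cx / decimation, cy / decimation
    depth = [[0.0] * w for _ in range(h)]
    for x, y, z in points:
        if z <= 0.0:
            continue  # behind the camera, cannot be seen
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < w and 0 <= v < h:
            # Keep the closest surface when several points hit one pixel.
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z
    return depth
```

Because the projection needs intrinsics, the node presumably needs a `camera_info` input in addition to `cloud`; the command in Mathieu's reply below remaps both.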


Hi Mathieu

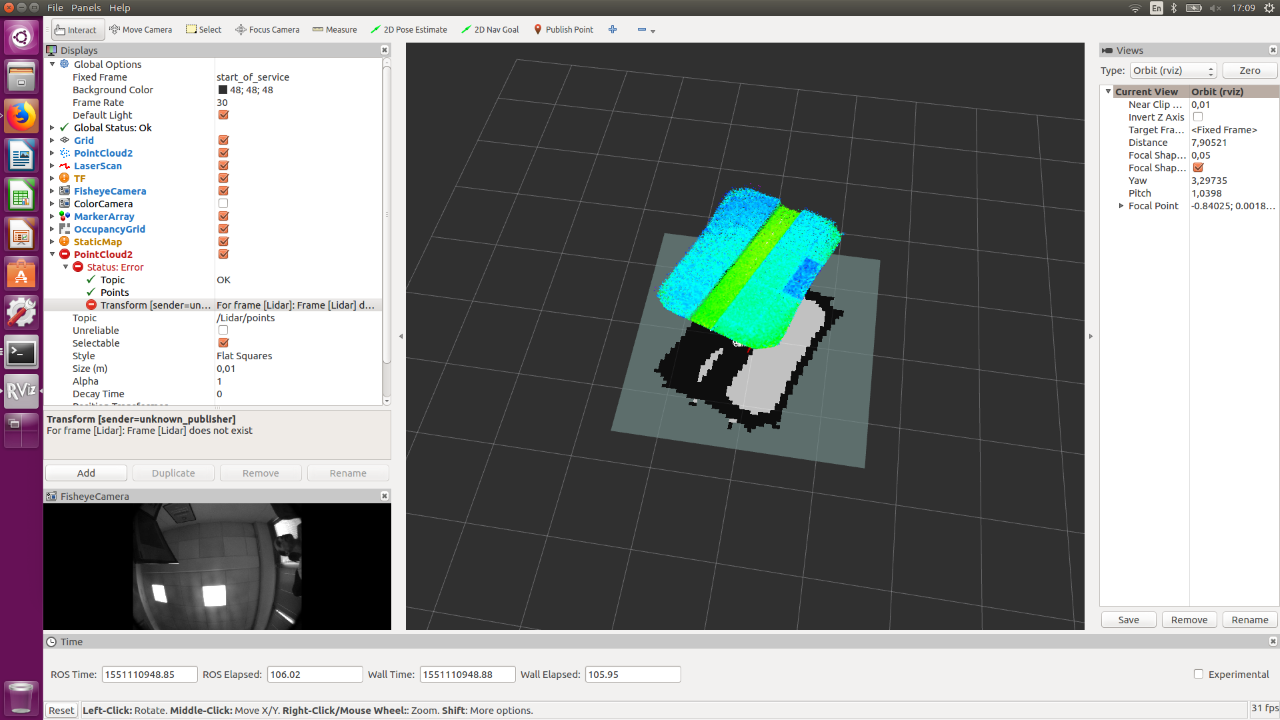

I tried a lot of things, but I think I don't really understand how all of this works. I'm able to connect Tango ROS Streamer to RViz and actually see the point cloud. We also use a Quanergy lidar. Its only published topic is `/Lidar/points`, which carries PointCloud2 data. I'm able to add a second PointCloud2 display in RViz and link the `/Lidar/points` topic to it, but I get a transform error (see the image), so I don't see the lidar points. I assume the lidar needs a TF to work with RViz. How can I provide a TF for my lidar if I want to use it alone?

This is my lidar launch file:

`<?xml version="1.0" encoding="UTF-8"?> <launch> <arg name="host" /> <arg name="ns" default="Sensor" /> <arg name="return" default="0" /> <arg name="maxCloudSize" default="-1" /> <group ns="$(arg ns)"> <node name="client_node" pkg="quanergy_client_ros" type="client_node" args="--host $(arg host) --settings $(find quanergy_client_ros)/settings/client.xml --frame $(arg ns) --return $(arg return) --max-cloud $(arg maxCloudSize)" required="true" output="screen"/> </group> </launch>`

but that doesn't work. Then I tried this command: `rosrun tf static_transform_publisher 0 0 0 0 0 0 1 map TestName 10`. After that, I'm able to change the Fixed Frame (Global Options > Fixed Frame) from `map` to `TestName`, but I get the same error message. What did I miss?

My final goal here is to use both the lidar and Tango, and make a 3D map in RTAB-Map. Is there a way to connect Tango's TF to this second PointCloud2? How can I transfer all that data to RTAB-Map to make a 3D map?

Thank you
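RViz can only draw a cloud if TF links the display's Fixed Frame to the cloud's `header.frame_id`. In the launch file above, that frame_id appears to come from `--frame $(arg ns)`, so a `map -> TestName` transform does not involve it at all. The sketch below is a hypothetical helper (`frames_connected`, not part of tf) that checks whether two frames are joined by a chain of published transforms. The frame name `Lidar` in the example is an assumption based on the `/Lidar/points` topic.

```python
from collections import defaultdict, deque
from typing import Iterable, Tuple


def frames_connected(edges: Iterable[Tuple[str, str]], a: str, b: str) -> bool:
    """Return True if frames a and b are linked by a chain of TF edges.

    edges: (parent, child) pairs, e.g. one per static_transform_publisher.
    TF can look up a transform in either direction along the tree, so the
    edges are treated as undirected here.
    """
    graph = defaultdict(set)
    for parent, child in edges:
        graph[parent].add(child)
        graph[child].add(parent)
    seen = {a}
    queue = deque([a])
    while queue:  # breadth-first search from a
        frame = queue.popleft()
        if frame == b:
            return True
        for nxt in graph[frame]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False
```

With only `map -> TestName` published, `frames_connected([("map", "TestName")], "TestName", "Lidar")` is `False`; adding a transform whose child is the cloud's own frame makes it `True`.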


Administrator


Hi,

I would first try to use the Tango ROS Streamer example "as is" while adding the lidar input. To add the lidar point clouds, we have to pre-sync the Tango image topics together. To do that, rtabmap.launch has, for convenience, an argument called `rgbd_sync`:

`$ rosrun rtabmap_ros pointcloud_to_depthimage cloud:=/tango/point_cloud camera_info:=/tango/camera/color_1/camera_info _fixed_frame_id:=start_of_service _decimation:=8 _fill_holes_size:=5`

`$ roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start --Mem/ImagePreDecimation 2 --Mem/ImagePostDecimation 2" visual_odometry:=false odom_frame_id:="start_of_service" frame_id:="device" rgb_topic:=/tango/camera/color_1/image_raw depth_topic:=/image camera_info_topic:=/tango/camera/color_1/camera_info compressed:=true depth_image_transport:=raw approx_sync:=true rgbd_sync:=true approx_rgbd_sync:=false subscribe_rgbd:=true subscribe_scan_cloud:=true scan_cloud_topic:=/Lidar/points`

We should adjust the pose of the lidar relative to the device frame ("x y z yaw pitch roll"):

`$ rosrun tf static_transform_publisher 0 0 0 0 0 0 device lidar 100`

The database will then contain both the Tango point cloud and the lidar point cloud.

cheers,
Mathieu
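`static_transform_publisher` accepts the rotation either as "yaw pitch roll" (6 numbers in total with x y z) or as a quaternion "qx qy qz qw" (7 numbers), so both `0 0 0 0 0 0 1 map TestName 10` from the earlier post and `0 0 0 0 0 0 device lidar 100` here describe an identity rotation. The sketch converts yaw/pitch/roll (radians) to a quaternion, assuming the usual ROS convention R = Rz(yaw)·Ry(pitch)·Rx(roll); the function name is illustrative only.

```python
import math


def ypr_to_quaternion(yaw: float, pitch: float, roll: float):
    """Return (qx, qy, qz, qw) for R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    qx = sr * cp * cy - cr * sp * sy
    qy = cr * sp * cy + sr * cp * sy
    qz = cr * cp * sy - sr * sp * cy
    qw = cr * cp * cy + sr * sp * sy
    return qx, qy, qz, qw


# Zero yaw/pitch/roll gives the identity quaternion, i.e. the same rotation
# as the 7-number form "0 0 0 0 0 0 1 ...".
print(ypr_to_quaternion(0.0, 0.0, 0.0))  # (0.0, 0.0, 0.0, 1.0)
```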


I tried the Tango ROS Streamer example with my last .ini from my train station test; it works very well in RTAB-Map.

If I understand correctly, the transform is `$ rosrun tf static_transform_publisher 0 0 0 0 0 0 device lidar 100` because of `frame_id:="device"` and `scan_cloud_topic:=/Lidar/points`. I can't try it right now, but I have no doubt it will work. As always :) Many thanks


Hi Mathieu

Thank you, that works. Not as well as I expected, but I see progress. I set up my lidar like this: `$ rosrun tf static_transform_publisher 0 0 0 1.57079632679 3.14159265359 0 device Lidar 100`

When I start a new map, the points from the lidar and Tango are aligned. Tango is facing forward, while the lidar returns points on the side, so there are no corresponding points between the devices: the two devices see completely different things. Is that a problem? The problem seems to appear during rotation. When I show only the Tango points, everything looks OK, but the lidar points don't always match. How can I improve the correspondence between the two devices?

Link to db: https://wetransfer.com/downloads/c6f762647bbbdedf5f001ca90818b88720190226175916/865adbf51e8f0a4102324af746b4b7fd20190226175916/a407cb

Thank you
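One way to sanity-check a rotation like `1.57079632679 3.14159265359 0` is to see where each lidar axis ends up in the device frame and compare that with how the lidar is physically mounted. The sketch assumes the same yaw-about-Z, pitch-about-Y, roll-about-X convention, R = Rz(yaw)·Ry(pitch)·Rx(roll), and that the published transform maps points from the child frame (`Lidar`) into the parent frame (`device`). The helper names are hypothetical.

```python
import math


def rotation_matrix(yaw: float, pitch: float, roll: float):
    """3x3 rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists (radians)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def axis_in_parent(R, axis: int):
    """Column of R: where the child's unit axis (0=x, 1=y, 2=z) points in the parent frame.
    Rounded to hide floating-point noise; "+ 0.0" turns -0.0 into 0.0."""
    return tuple(round(R[row][axis], 6) + 0.0 for row in range(3))


R = rotation_matrix(1.57079632679, 3.14159265359, 0.0)  # yaw, pitch, roll from the post above
for name, axis in (("x", 0), ("y", 1), ("z", 2)):
    print(f"lidar {name} -> device {axis_in_parent(R, axis)}")
# lidar x -> device (0.0, -1.0, 0.0)
# lidar y -> device (-1.0, 0.0, 0.0)
# lidar z -> device (0.0, 0.0, -1.0)
```

With these values the lidar's z axis points along the device's -z and its x axis along the device's -y; if that does not match the physical mounting, the angles are worth revisiting before the time synchronization.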


Administrator


Hi,

Thanks for the db. There is indeed a TF problem between the device frame and the lidar frame. The lidar cloud is not required to overlap Tango's cloud, but overlap would make the transform easier to debug. In your transform, xyz = "0 0 0"; I'm not sure the lidar is exactly at the device frame origin. For example, if the phone is 10 cm above the lidar, it could be something like "0 0 0.1". Another problem could be the time synchronization between the lidar stamps and the Tango pose stamps. If you move very slowly, does the lidar look less out of sync with Tango?
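To get a feel for why moving slowly is a useful test: if a lidar stamp is off by some offset from the Tango pose used to place it, a point at range r is displaced by roughly the chord swept by the rotation during that offset, plus the distance travelled. This is a back-of-the-envelope sketch, assuming constant angular and linear speed and the lidar at the rotation center, with the two terms simply added as a worst case. The 50 ms offset and the speeds in the example are hypothetical numbers, not measurements from this database.

```python
import math


def sync_error(range_m: float, angular_speed_rad_s: float,
               linear_speed_m_s: float, time_offset_s: float) -> float:
    """Rough upper bound (meters) on the displacement of a lidar point whose
    stamp is off by time_offset_s from the pose used to place it."""
    angle = angular_speed_rad_s * abs(time_offset_s)
    rotational = 2.0 * range_m * math.sin(angle / 2.0)  # chord swept at that range
    translational = linear_speed_m_s * abs(time_offset_s)
    return rotational + translational  # worst case: both errors point the same way


# Hypothetical 50 ms offset, point 10 m away.
print(f"fast turn: {sync_error(10.0, math.radians(90), 0.5, 0.05):.2f} m")  # fast turn: 0.81 m
print(f"slow turn: {sync_error(10.0, math.radians(10), 0.1, 0.05):.2f} m")  # slow turn: 0.09 m
```

The rotational term grows with range and angular speed, which is consistent with the mismatch showing up mainly during rotation.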


Return to Official RTAB-Map Forum
