Hesai QT128 with Zed2i and external fused Odometry

123456

123456

|

This post was updated on .

|

|

Hi Mathieu,

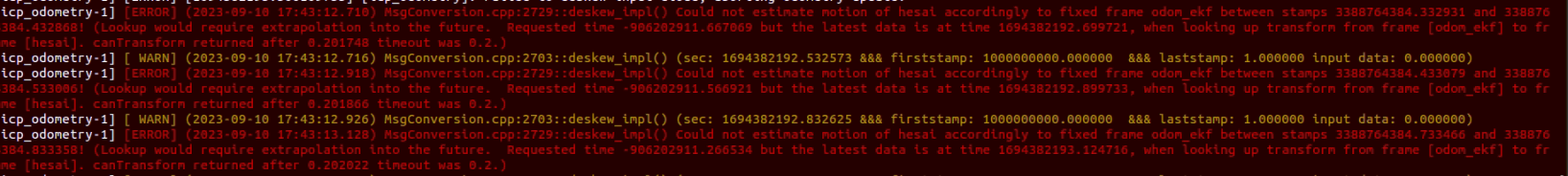

Quick question on Hesai Lidar, This below error is because in theory timestamp = stamp.header.sec - first_stamp_sec ?

|

|

Administrator

|

For the ouster, I fixed the issue in this commit. For some reason, on the "t" channel of the point cloud, the time offset can reset in the middle of a scan. It seems that the ros2 driver of ouster doesn't assure that the first point has the smallest time offset. It means that the driver is assembling a Nx32 point cloud where the offset stamp can be reset in middle of N.

For Hesai, I am not sure what timestamps you are actually printing. I added more logs in commit above. Normally, the firstStamp <= Header.stamp <= lastStamp. |

|

Thank you Mathieu, I appreciate you. I will apply this commit soon and let you know but that makes sense.

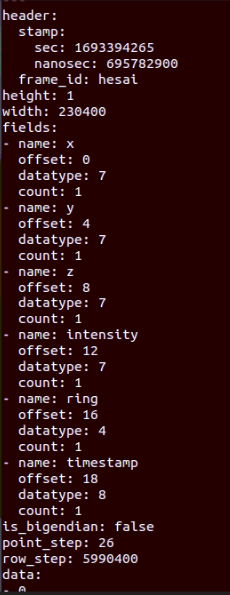

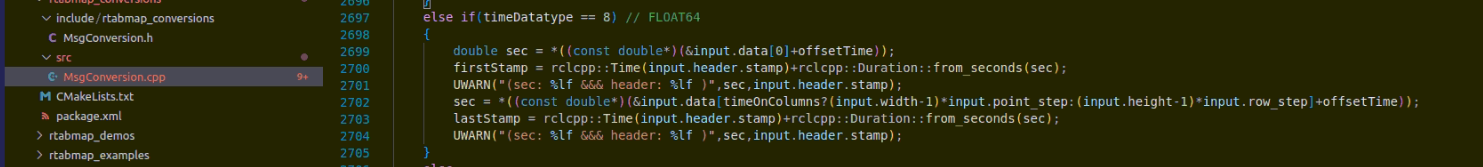

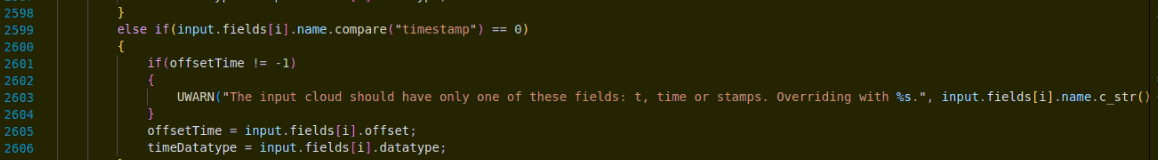

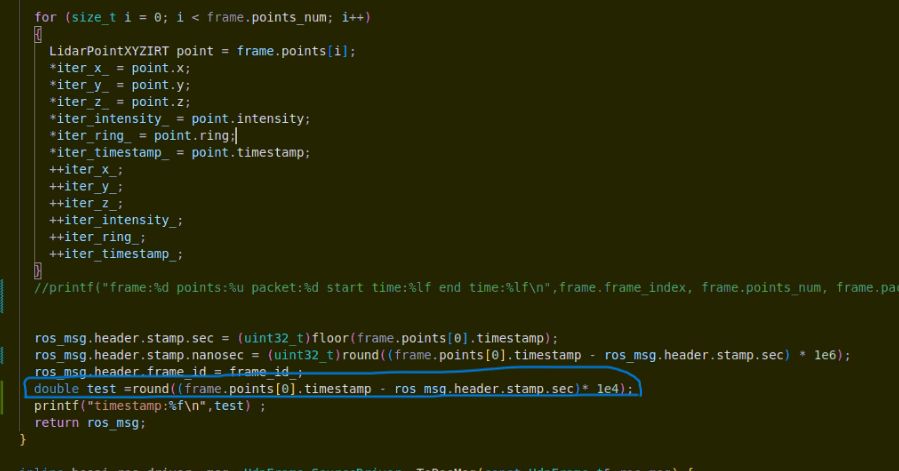

For Hesai, I have added this code in MsgConversion.cpp, where I am capturing the field "timestamp" as Hesai channel has "timestamp" instead of "t" or "time" field.    I tried printing this on SDK of Hesai and looks like this is the issue, as they are sending "timestamp" of stamped cloud on this "timestamp" channel of pointcloud and its taking the time negative in this function above on the deskewing side of Rtabmap because of timestamp instead of offset time. So what I wanted to confirm was is in current code of deskewing for channel "t" or "time" at input side, is it needing a offset time from the header.stamp.sec in seconds (current_header_stamp_sec - timestamp_in_secs) ? from starting of 0,0.1,0.1233.........N time in seconds.nanosenconds format ? I am still trying to fix there SDK driver, like below and have'nt implemented yet !  |

|

This post was updated on .

In reply to this post by matlabbe

Hi Mathieu,

This commit is working as expected for the ouster (as per test). Thank you again. Just when you have a chance please let me know about my above question for Hesai. Also what is the use of using separate rtabmap_util to deskewing VS just using rtabmap_odom to deskew and compute odom at the same time ? Also if using rtabmap_util to deskew, then does in rtabmap_odom, does deskew parameter needs to be False ?

|

|

Administrator

|

For the timestamp channel, rtabmap is expecting the time offset from the header's stamp. If the timestamp is an actual timestamp (independently of the header stamp), then the code would need to be updated to not use it like a time offset.

The deskewing in rtabmap_util or rtabmap_odom is using the same code. You don't need to enable it for rtabmap_odom if it has been already done externally with rtabmap_util (and the deskewed cloud is used for rtabmap_odom). The reason we provide both is that one could want the raw deskewed point cloud as input to another node, for this rtabmap_util would be used. In another scenario, one could do it in rtabmap_odom if the raw deskewed cloud is not required by any other node. For rtabmap node, you would either subscribe to output of rtabmap_util or the post-processed cloud from rtabmap_odom. By default rtabmap_odom deskew parameter is false. |

|

Thank you Mathieu, I appreciate you and your response.

Apologies for asking questions but just trying to understand more on the exact point timestamp and trying to make it work, point timestamp = (current_frame_firing_time_stamp - header.stamp.timestamp[0] ? OR if I use the SDK actual timestamp, can I make it work somehow on Rtabmap by altering code in a tightly coupled way ? Or do you have a velodyne ros2 bag in archive which I can use for reference purposes as that should be close to float64 and should answer my questions and clear my doubts on this pointstamp ? Also, is it better to start with ICP odometry from 128 beam LIDAR or use Zed2i with VINS-Fusion or ORB-SLAM3 and feed it to slam for accuracy purposes, if you have tested them prior or have any datasets ? Also, this will be for mapping launch file, giving grid map and 3d point cloud, for final localization, do I just need to add localization = true, in the same launch file by adding Nav2 bt navigator tree ? Thanks Ani |

|

Administrator

|

I don't have bag on hand to share, but the time set in the channel "t" or "time" or "stamps" of the point cloud is assumed to be the elapsed time since the timestamp in header of the msg. To get the actual timestamp of one point, you have to sum its "t" with the timestamp of the header. For example, you could have timestamp in header like "1694981342.142" (epoch time in sec) and for one point its "t" channel is 0.01, meaning it has been fired 10 ms after the timestamp of first point in the cloud. Its timestamp would be "1694981342.152". If your lidar is sending full timestamp for each point in a "timestamp" channel in 64Float, the code could use it directly instead of summing with the header stamp. Feel free to share a rosbag of the output point cloud you get from QT128. I could make the change on rtabmap_ros side to support "timestamp" channel format (float64bits) It really depends on the kind of environment you are. Not that deskewing is optional if the lidar is not doing fast rotation motions. Well, it depends what "localization" argument does in your launch file. If it sets "Mem/IncrementalMemory" to false, then yes. |

|

This post was updated on .

Hi Mathieu,

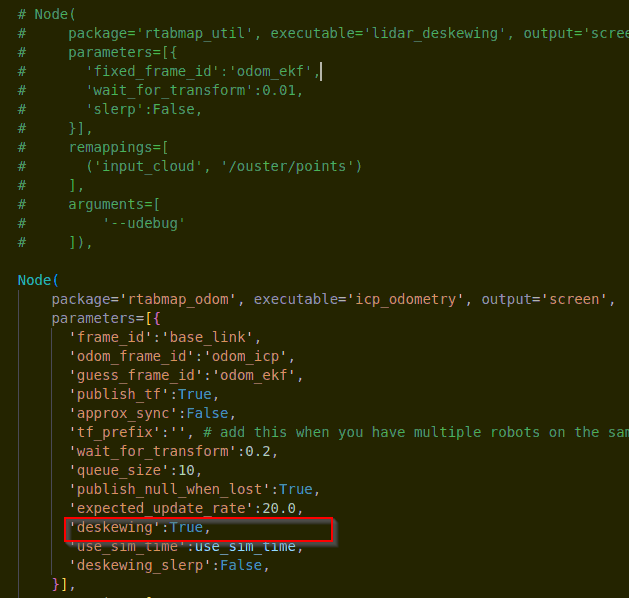

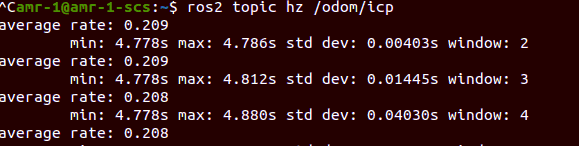

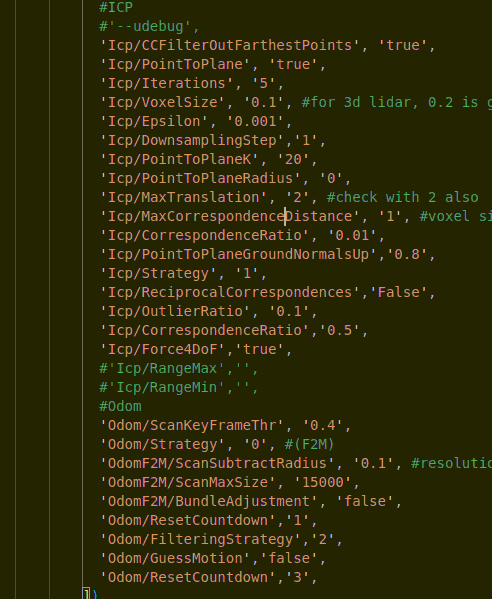

Thank you for the explanation, I appreciate you. I have made changes to the file link below and looks like ICP odometry is working fine, just when deskewing is set to True, I get "deskewing failed, aborting odometry" at 10Hz Lidar, but when Lidar is at 20Hz, it works but the rate is 0.2Hz of the resulting /odom/icp node which is very slow and my guess towards the addition of "for" loop computation where the width of Hesai cloud is too large, can you please take a look at it.  I tried opening a PR request but could not as I am not a collaborator. https://github.com/introlab/rtabmap_ros/issues/1035 https://easyupload.io/m/awgm2t I am trying to achieve dolly docking application in lab (pick from location and place to different location), Nav2 still has a long standing ticket for the same so other alternate I was thinking was to use reflectors behind cart and use intensity of LIDAR to drive under using Nav2 plugin but have not come to conclusion yet on this part as Nav2 will still detect object as obstacle (still need reading how to avoid obstacle avoidance at that pose). Environment is complex, have enough details like lab equipment.  I did try to go through most of the parameters and found this based on relevance, can you please suggest any improvements or changes to this ? Also RangeMax and Min are for robot hitting laser scans in units of meters ? Or what is the purpose of that ? Also is F2F better for match ? |

|

This post was updated on .

Hi Mathieu,

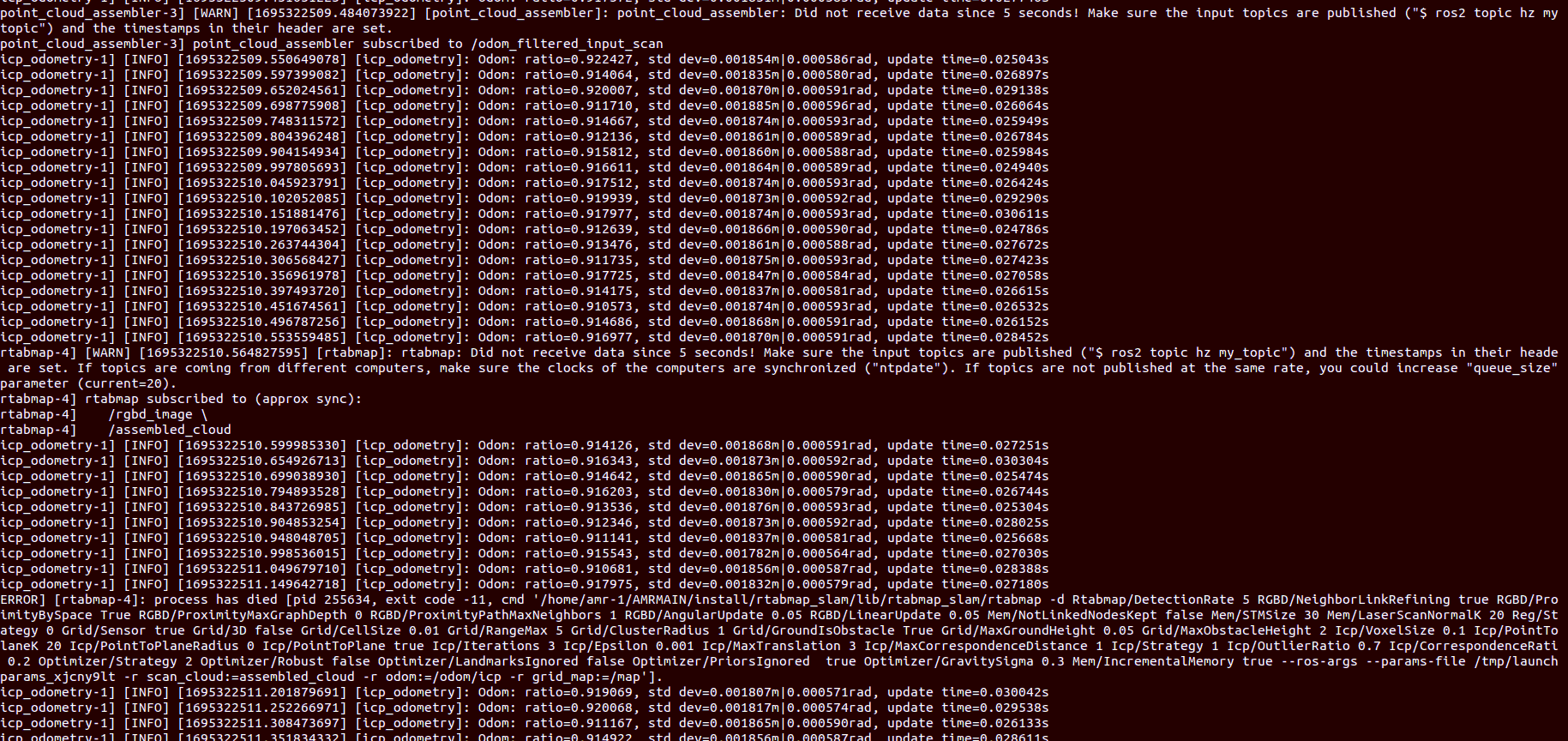

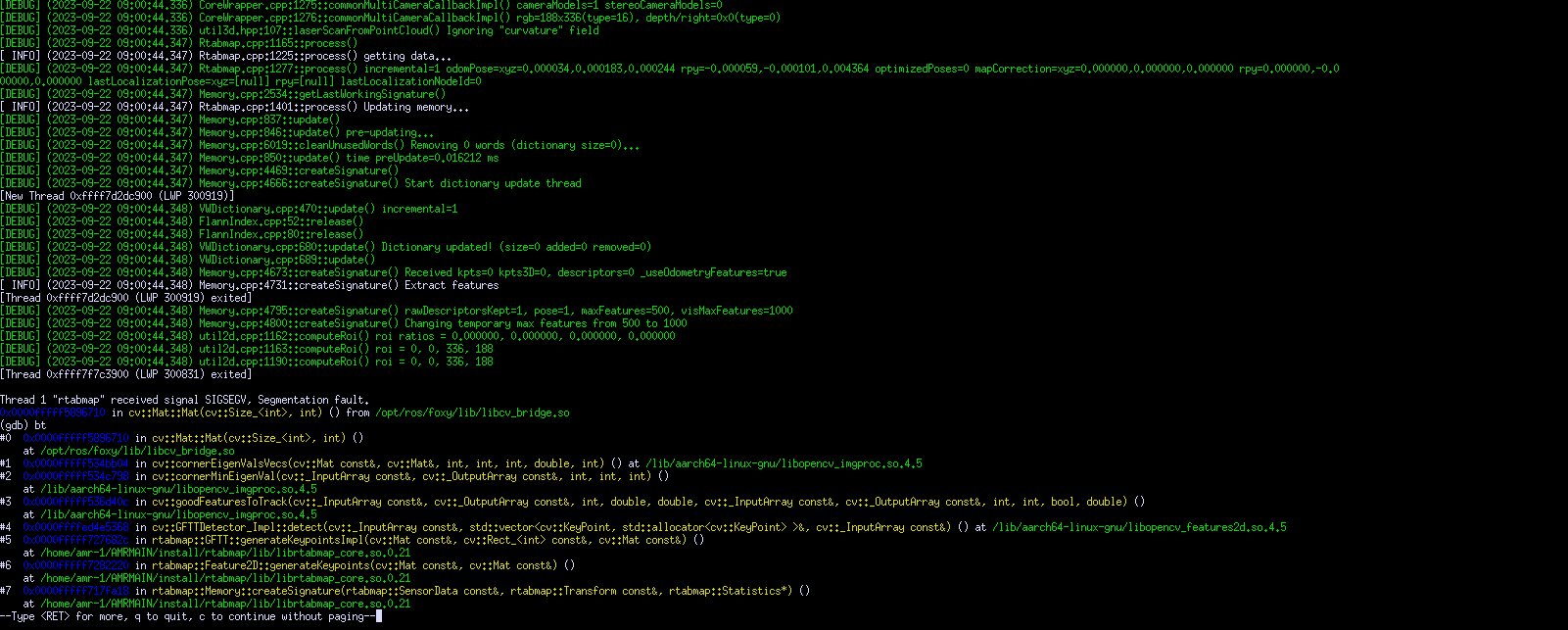

I tried the ICP_odom node with deskewing, cannot get more than 05Hz (incoming robot_localization running at 100hz and ros2_control at 150hz) where as on the odom generated by this node but with deskewing false I can get 15hz at LIDAR = 20Hz (Is deskewing required if Lidar is running at 20 Hz? and what is the minimum Hz the deskewing is required for LIDAR, is it when LIDAR is slower than Robot travel speed) After adding camera to SLAM node and feeding odom, Rtabmap complains about no data at 5sec, and get error message for node crashing, ICP odometry still computes. This is my launch file, can you please suggest: https://pastebin.com/AD9CQf08  But when I turn that subscribe rgb to false, it works fine. Using gdb debugging, is it because of opencv version ? I am on Jetpack 5.1

|

|

Administrator

|

For the OpenCV error, don't remember in the thread, but do you use a jetson? In jetpack, they rebuilt optimized opencv version for nvidia but ros cvbridge is still using system opencv. Rtabmap looks building against nvidia opencv, then rtabmap_ros breaks on runtime as cvbridge (used in rtabmap_ros) will use opencv build from nvidia, which is not the same opencv version it has been built with. I'll suggest to rebuild to uninstall ros-foxy-cv-bridge package and rebuild it from source so it is built with nvidia opencv. Other option is to use docker approach with "introlab3it/rtabmap_ros:humble-latest" image (support aarch64). You may want to wait for this build to get latest commit below. For deskewing, thx for sharing your patch and a bag, I added support for Hesai lidar in this commit. For the framerate, make sure you build colcon in Release mode. You can get also more efficient deskewing by setting slerp parameter to true. I tested with (note in this example a fake odom TF is used, but should be better to be an IMU TF like in this ros1 example): ros2 run tf2_ros static_transform_publisher 0 0 0 0 0 0 odom hesai ros2 run rtabmap_util lidar_deskewing --ros-args -p fixed_frame_id:=odom -r input_cloud:=/hesai/points -p slerp:=false ros2 bag play rosbag2_2023_09_18-07_35_27_0.db3 ros2 topic hz /hesai/points ros2 topic hz /hesai/points/deskewed The lidar framerate in the bag is around 8-9 Hz, and I can get deskewed lidar at 7-8 Hz with slerp=true, and 5-6 Hz with slerp=false. Deskewing would be more important to do if you expect to do fast rotations, otherwise you may not see any difference. cheers, Mathieu |

|

This post was updated on .

Hi Mathieu,

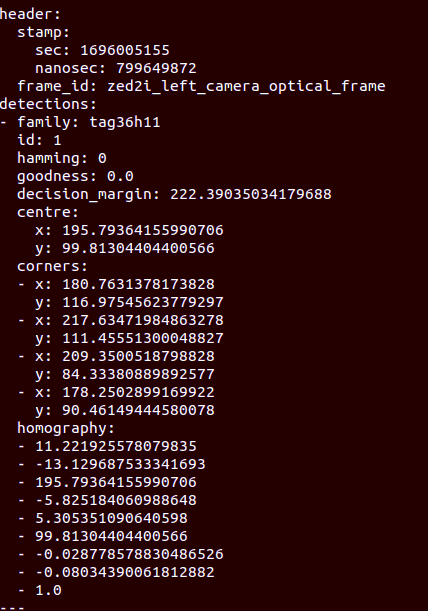

Firstly thank you, it worked as you mentioned (with slerp I got ~7hz but without slerp I still get max ~3Hz with lidar at 8 hz) . Just had to re-image the controller and build opencv bridge as you stated. Yes, I am using Jetson AGX Orin controller. I will appreciate if you can please glance my launch file for any further parameter optimizations which I would have missed from the parameter list. I do not have any bag or issue, just hypothetical case consideration. Also in localization mode, can most of the arguments be ported over from mapping ones which I have currently in setup for setting localization/mapping modes ? https://pastebin.com/guFNx3tV While using external Apriltag_ros library externally, does Marker detection parameters play any role ? Also how does these tags be saved as labels in RTAB-Map map, Meaning while sending pose to go to, can it be referred to that landmark location for sending goal pose like area1, area2 etc ?  Also Can I use Kp/DetectorStrategy = 10 and Vis/FeatureType=10 for loop closures with my current setup as I am using stereo mode for better accuracy on loop closure ? question: Why would we need octomap vs occupancy grid for navigation stack (Nav2) (is it better to use octomap in 2D planar environment for moving people so it can clear objects in localization phase, meaning mapping with static environment ) ? For saving map, is it just terminating the rtabmap and its saved to database and then when in localization mode, remapping map topic should get populated from database and project map ? Can you please share your views on this constantly moving environment. Ultimately I want to integrate with full Nav2 stack and Rtab-map. |

|

In reply to this post by matlabbe

Hi Mathieu,

Any input on previous post will be appreciated. Thanks Ani |

|

Administrator

|

I cannot suggest parameters if the application is not explicit. yes no they appear as a negative id node. Note that tag 0 is ignored. Tag 1 will have node id -1 and so on. You can use any features In localization mode, the map from rtabmap will be static. Not sure about nav2, but in nav1, you could have a global costmap to handle dynamic obstacles over the static map. yes Do you mean that there are dynamic objects around that move over time/days? In dynamic environment, you may need remapping at some point, as localization performance would decrease over time. There is an example here: https://github.com/introlab/rtabmap_ros/tree/ros2#example-with-turtlebot3 If you want to be one of the first message I take time to answer, please ask 1 question at a time, and be specific and with enough context to answer, otherwise I'll put the question in the backlog and answer someday when I have more time... |

|

Hi Mathieu,

Thank you for the response and apologies for not being explicit. My application is in warehouse environment, where there are multiple stations for pick and common area to drop where forklift unloads. Stations look the same but at different locations in the warehouse as below, marked on box is the tag where I am planning to put Apriltags for location landmarks.  Current Launch File Robot gets a signal when the cart is in place, OR will be already at station if open for charge in below picture, but once cart in place the robot drives under the cart and comes out on other side by 1 meters to dock with pin up and drives to unload area, once unloaded Robot gets signal using REST API to drive and repeat.

Based on this if you can please advise parameter or see my file, I will appreciate it, with any input on driving under the cart disabling the obstacle layer ? With dynamic objects I meant, was moving people in the warehouse but not moving mapped environment but I will definitely review global costmap layer as I am starting to go through Nav2 literature. |

|

Administrator

|

So your main issues are about ICP odometry accuracy, localization accuracy (or not able to localize), or mapping issues?

On mapping part, you could enable Grid/RayTracing=true to get unknown cells better filled. I would use also Grid/NormalsSegmentation=false with lidar like this to get better segmentation between the floor and obstacles. After mapping, you can also edit the occupancy grid in rtabmap-databaseViewer (File->edit optimized 2d map...) to remove dynamic obstacles and keep only the static ones in the final map. On runtime, before going under the cart, you may disable (/clear) the obstacle layer in the costmap. When you say "the robot drives under the cart" is the lidar also going under the cart? that could indeed affect icp odometry if the lidar is completely occluded. I can speculate more about the best parameters, but if you can provide a database with an issue in it you want to address, it will be easier to give more specific suggestions. cheers, Mathieu |

|

Thank you Mathieu, appreciate your response. I will get there soon to provide you with database in next 2 to 3 weeks at the latest with updates on localization while going under the cart.

|

|

Hi Mathieu,

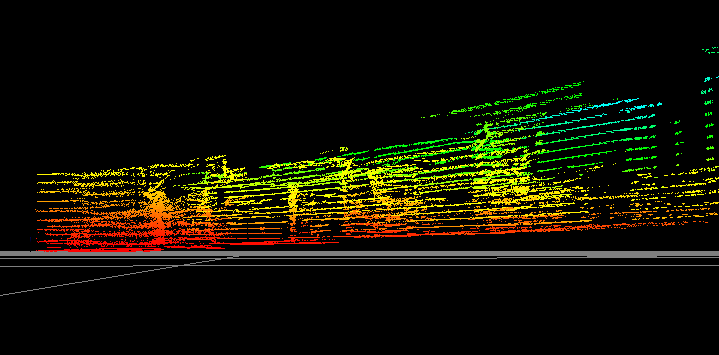

Thank you for continuingly answering questions, I did create map and its attached below, if I asked too many questions in this post, I apologize. 1. I would appreciate if you can advise any more optimization so map could be more better ? Databse and launch file I did notice, when I switch to stereo mode for ZED2i, the map does not display in rviz, I have to export using rtabmap-databaseviewer after mapping session is over. 2. I would like to export posses from your databaseviewer which I saw option for, and save it my own database against other parameters to send it to Nav2 based on current pose, is that the right way to do this ? I see to add label in rtabmapviz for current location in mapping mode but is there any other way to add labels to map in rviz or after map is created because a pose could be nearby but not as accurate ? 3. To go under the cart I was thinking to use lane follower using opencv and clear global costmap and disable obstacle layer on Nav2, is that a good approach in your experience ?

|

|

Administrator

|

Hi,

I am surprised that the lidar occupancy grids look relatively good, because the TF between base_link and the lidar frame seems wrong. The lidar looks tilted:  For the stereo, you need to use stereo_sync node if you want to input both image and scan data to rtabmap. When setting rtabmap node in stereo mode, it will ignore scan_cloud input. Because your grid parameter Grid/Sensor=0, hen no occupancy grids are generated because there is no lidar. To generate from stereo, you would need to set Grid/Sensor=1. For grid generation, as the robot is working in 2D, use a fast passthrough filter instead: 'Grid/NormalsSegmentation': 'false', 'Grid/MaxGroundHeight': '0.05', 'Grid/MaxObstacleHeight': '1', 'Grid/MinGroundHeight': '0', 'Grid/NoiseFilteringRadius': '0', 'Grid/VoxelSize': '0.05', A Rtabmap/DetectionRate of 7 Hz is pretty high, I would start with default 1 Hz. For 3D lidar (assuming 360 FOV): 'RGBD/ProximityPathMaxNeighbors': '1', 'RGBD/ProximityPathFilteringRadius': '0', 'RGBD/ProximityAngle': '0' For your last question, if the robot has to navigate under it, maybe reduce `Grid/MaxObstacleHeight` so that it will only see the legs/wheels as obstacle. Otherwise, if it should see the whole cart as obstacle most of the time, but only when it has to go under the cart, you may keep obstacle map like this, and bypass nav2 with some control node specific to dock under the cart (like you said, maybe a line follower, or without using the map, try to detect the wheel and navigate in the middle). cheers, Mathieu |

|

Hi Mathieu,

Thank you for taking time in looking into my files, I did not even realize that LIDAR was tilted, thank you so much. I will make the necessary adjustments as you suggested to see the results, but one quick thing I have 180 FOV with 3D lidar due to height of robot and docking part, can these parameters as you suggested be still true, I have doubt on "ProximityAngle", should that be in my case 0 and ProximityPathMaxNeighbors be a higher value for better loop closure like 2 or 3 ? 'RGBD/ProximityPathMaxNeighbors': '1', 'RGBD/ProximityPathFilteringRadius': '0', 'RGBD/ProximityAngle': '0' |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |