Hesai QT128 with Zed2i and external fused Odometry

123456

123456

|

Administrator

|

I tested with turtebot3 demo, and I could not reproduce the GTSAM + apriltag issue (or g2o jumping like crazy) if the odometry covariance is not to much over or under estimated. I had underestimated constraints GTSAM error when I switched to Reg/Force3DoF = false. That was because the odometry from turtlebot simulator sets very large covariance (500000) to z, roll and pitch values. With Reg/Force3DoF=true it was "fine" when I set more appropriate covariance for x/y and yaw (otherwise got many RGBD/OptimizeMaxError error even when standing close to the tag. In other situations, I still get often localizations rejected because optimization error is over RGBD/OptimizeMaxError threshold, but these rejections look appropriate as when it happens, as expected, tags are detected far away (very poor orientation estimation).

Note that all my tests were using default landmark covariance (landmark_angular_variance and landmark_linear_variance). If GTSAM is not working on your setup and g2o is, maybe just switch to g2o. Otherwise if you find why GTSAM is rejecting or poorly optimize with the provided tag constraints and it is a bug in RTAB-Map side, we could add the fix. If RGBD/OptimizeFromGraphEnd is true, not sure why you feel it is acting weird, as the map->base_link should never jump (with or without memory management). The occupancy grid could move though, which could affect the global planner, but should not affect the local planner. With RGBD/OptimizeFromGraphEnd=false, the robot will jump on map frame. If goal sent to nav2 are using map frame, then when the robot jumps (relocalization), it would indeed affect where the global planner thinks the robot is. |

|

This post was updated on .

Thank you for the reply, you are right there is no bug, it was my odometry covariance which was causing that behavior.

1. Can you please look at my database and let me know if I did memory management timing right or are there any parameters which could be tweaked for optimum performance, Database link 'Mem/RehearsalSimilarity','0.30', 'Rtabmap/TimeThr','700', 'RGBD/OptimizeFromGraphEnd','False' 2. Will it better to create service on rtabmap side (lifecycle msg ?) when the localization is true for Nav2 stack blocking function instead of using when /info topic is published ? localizer |

|

Administrator

|

You may be able to set Icp/VoxelSize=0.2 to reduce ICP time when doing proximity detection.

I also see that you disabled rotation optimization on the tags: [0.001, 0, 0, 0, 0, 0; 0, 0.001, 0, 0, 0, 0; 0, 0, 0.001, 0, 0, 0; 0, 0, 0, 9999, 0, 0; 0, 0, 0, 0, 9999, 0; 0, 0, 0, 0, 0, 9999]If rotation estimation of the tags is bad, that can help to get better optimization. Setting 'Mem/RehearsalSimilarity','0.20' maybe better for that kind of environment to keep more uniformly distributed nodes in WM.  For integration with nav2, it seems that we would need to put more effort to add services to rtabmap to be compatible with nav2 state machine. Currently to know if rtabmap is localized, you would need to look at the /rtabmap/localization_pose covariance published, large covariance means lost. In ros diagnostic, we can also see the status of the node, localized or not. |

|

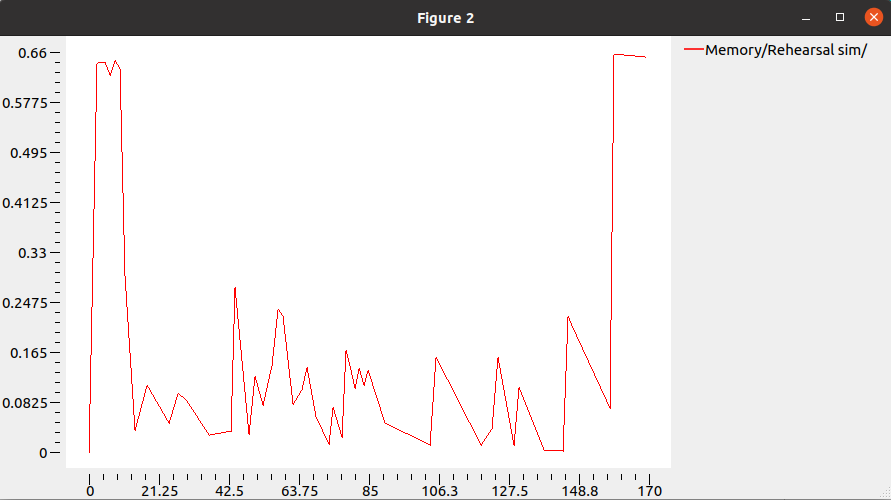

Thank you for the reply, I was going through your paper for localization methods and implementing superpoint + superglue but the Rehearsel_sim was high which is probably expected due to python code being called from c++ side, but is there any optimizations which could be done apart from your last post ?

Localization Methods  Database |

|

Administrator

|

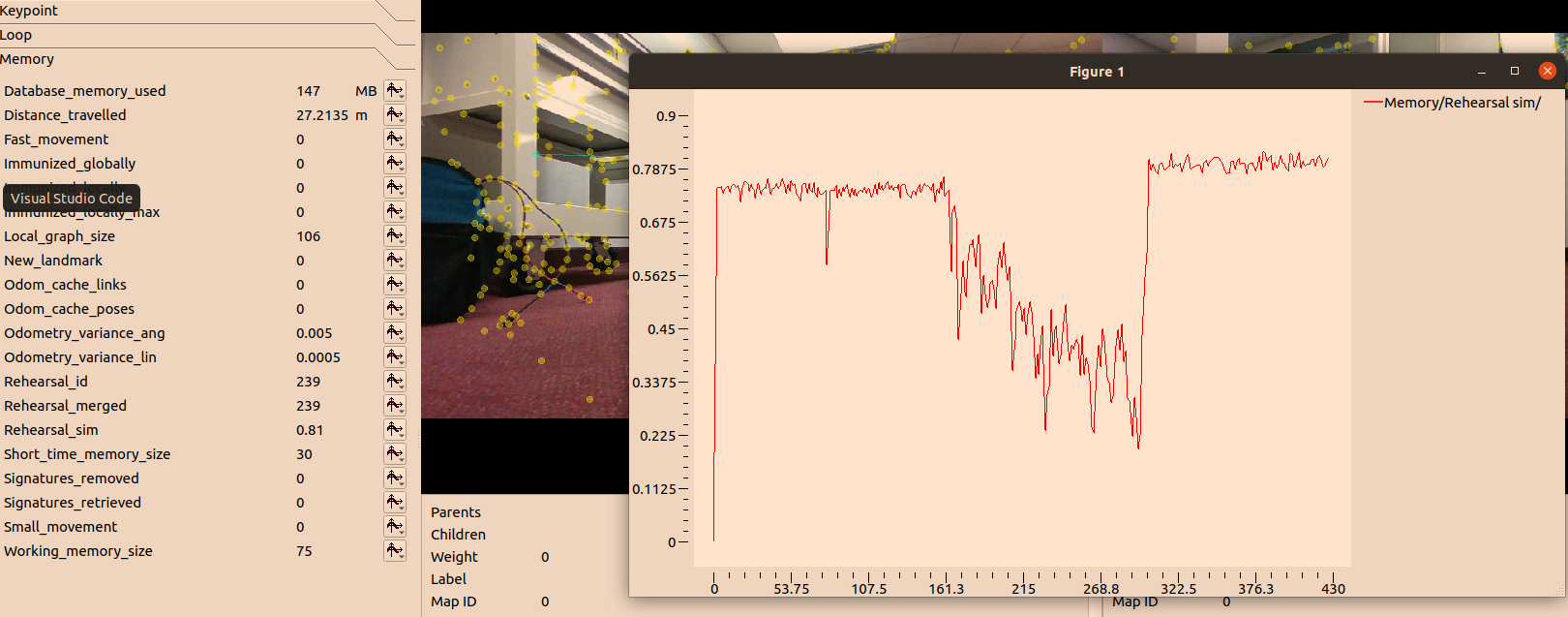

The rehearsal similarity will be high (as expected) if the scene is similar between consecutive images (for example we would expect in theory a 100% similarity when the camera is not moving and the scene is static). With SuperPoint, as features are generally more discriminating and easy to match, the similarity could be higher for the same trajectory than with other descriptors. The Mem/RehearsalSimilarity (default 0.6) would need to be adjusted if needed.

In your graph, was the robot still between 0-161 and 322-430 frames? Thus default 0.6 may be okay, however, to set it correctly, I generally do a longer trajectory and look at that graph again. Right-click on the curve name in plot legend, show mean/std/max values, then similarity threshold could be set to average+std. cheers, Mathieu |

|

This post was updated on .

Hi Mathieu,

Thank you for your reply, I did set rehearsal similarity as you mentioned and everything works good, but as soon as I turn on 'RGBD/OptimizeFromGraphEnd':'true', I do not see any map being published. I tried see the tags and still the map never gets published. My Parameters are below with Database, parameters = { 'frame_id': 'base_link', 'map_frame_id':'map', 'publish_tf':True, 'subscribe_depth':False, 'subscribe_scan ':False, 'subscribe_stereo ':False, 'subscribe_scan_cloud':True, 'subscribe_rgb':True, 'subscribe_rgbd':False, 'approx_sync':True, 'queue_size':5, 'wait_for_transform':0.2, 'odom_frame_id':'odom_icp', 'tf_prefix':'', 'use_sim_time':use_sim_time, 'qos_image':qos, 'qos_camera_info':qos, 'qos_odom':qos, 'wait_imu_to_init': False, #'odom_tf_linear_variance':0.0005, 'odom_tf_linear_variance':0.001, #'odom_tf_angular_variance':0.005, 'odom_tf_angular_variance':0.01, #RGBD 'RGBD/MarkerDetection':'false', 'RGBD/NeighborLinkRefining':'false', 'RGBD/ProximityBySpace':'True', 'RGBD/ProximityMaxGraphDepth': '0', 'RGBD/ProximityPathMaxNeighbors': '3', 'RGBD/AngularUpdate': '0.01', 'RGBD/LinearUpdate':'0.01', 'RGBD/CreateOccupancyGrid':'true', 'RGBD/Enabled':'true', 'RGBD/OptimizeMaxError':'0.1', 'RGBD/ProximityPathFilteringRadius':'0', 'RGBD/MaxOdomCacheSize':'30', 'RGBD/StartAtOrigin':'false', 'RGBD/LocalizationPriorError':'0.01', #Grid for 2D 'Reg/Strategy':'1', 'Reg/Force3DoF':'true', 'Reg/RepeatOnce':'False', 'Grid/Sensor':'0', 'Grid/3D':'false', 'Grid/CellSize':'0.05', 'Grid/RangeMin':'0.1', 'Grid/RangeMax':'5', 'Grid/ClusterRadius':'1', 'Grid/MinGroundHeight':'0.01', 'Grid/MaxGroundHeight':'0.03', 'Grid/MaxObstacleHeight':'2', 'Grid/RayTracing':'true', 'Grid/NoiseFilteringMinNeighbors':'2', 'Grid/NoiseFilteringRadius':'0.05', 'Grid/NormalsSegmentation':'false', 'Grid/FootprintHeight':'0.171', 'Grid/FootprintLength':'0.6604', 'Grid/FootprintWidth':'0.6096', 'Grid/VoxelSize':'0.05', #optimizer 'Optimizer/GravitySigma':'0.1', 'Optimizer/Strategy':'2', #'Optimizer/Robust':'True', 'GTSAM/Optimizer':'2', #ICP 'Icp/VoxelSize':'0.1', 'Icp/PointToPlaneK':'20', 'Icp/PointToPlaneRadius':'0', 'Icp/PointToPlane':'true', 'Icp/Epsilon':'0.001', 'Icp/MaxTranslation':'0', 'Icp/MaxCorrespondenceDistance':'0.3', 'Icp/Strategy':'1', 'Icp/OutlierRatio':'0.85', 'Icp/CorrespondenceRatio':'0.1', 'Icp/PointToPlaneGroundNormalsUp':'0.8', 'Icp/Iterations':'10', 'Icp/PMConfig':'', #memory 'Mem/STMSize':'30', 'Mem/LaserScanNormalK':'20', 'Mem/NotLinkedNodesKept':'false', #loop closures 'Kp/DetectorStrategy':'11', 'Vis/FeatureType':'11', 'Vis/CorGuessWinSize':'0', 'Vis/MaxFeatures':'500', #localization 'SuperPoint/ModelPath':'/home/amr/AMRMAIN/src/ROS_DRIVERS_Readme/rtabmap/archive/2022-IlluminationInvariant/scripts/superpoint_v1.pt', #'PyMatcher/Path':'/home/amr/AMRMAIN/src/ROS_DRIVERS_Readme/rtabmap/archive/2022-IlluminationInvariant/scripts/SuperGluePretrainedNetwork/rtabmap_superglue.py', #'PyMatcher/Cuda':'true', 'SuperPoint/Cuda':'true', #'Mem/UseOdomFeatures':'True', 'PyMatcher/Iterations':'5', } remappings = [ ('scan_cloud', 'assembled_cloud'), ('tag_detections','/apriltag/detections'), ('grid_map','/map'), ('rgb/image','/slam_lidar_camera/color/image_raw'), ('rgb/camera_info','/slam_lidar_camera/color/camera_info')] return LaunchDescription([ # Launch arguments DeclareLaunchArgument( 'use_sim_time', default_value='false', description='Simulation purposes only'), DeclareLaunchArgument( 'deskewing', default_value='false', description='lidar slicing'), DeclareLaunchArgument( 'deskewing_slerp', default_value='true', description='lidar slicing'), DeclareLaunchArgument( 'localization', default_value='true', description='same file for mapping and localization'), DeclareLaunchArgument( 'qos', default_value='1', description='qos 1 = reliable, 2 = best effort'), Node( package='rtabmap_odom', executable='icp_odometry', output='screen', #prefix="xterm -e gdb -ex run --args", parameters=[{ 'frame_id':'base_link', 'odom_frame_id':'odom_icp', 'guess_frame_id':'odom_ekf', 'publish_tf':True, 'approx_sync':False, 'tf_prefix':'', 'wait_for_transform':0.2, 'queue_size':3, 'publish_null_when_lost':False, 'expected_update_rate':30.0, 'use_sim_time':use_sim_time, 'qos':qos, 'deskewing':deskewing, 'deskewing_slerp':deskewing_slerp, 'wait_imu_to_init': False, 'Icp/CCFilterOutFarthestPoints':'true', 'Icp/PointToPlane':'true', 'Icp/VoxelSize':'0.1', 'Icp/Epsilon':'0.001', 'Icp/DownsamplingStep':'1', 'Icp/PointToPlaneK':'20', 'Icp/PointToPlaneRadius':'0', 'Icp/MaxTranslation':'0', 'Icp/Iterations':'10', 'Icp/MaxCorrespondenceDistance':'0.3', 'Icp/CorrespondenceRatio':'0.1', 'Icp/PointToPlaneGroundNormalsUp':'0.8', 'Icp/Strategy':'1', 'Icp/ReciprocalCorrespondences':'False', 'Icp/OutlierRatio':'0.85', 'Icp/PMConfig':'', #Odom 'Odom/ScanKeyFrameThr':'0.4', 'OdomF2M/ScanSubtractRadius':'0.1', #same as voxel size 'OdomF2M/ScanMaxSize':'20000', 'OdomF2M/BundleAdjustment':'false', 'Odom/ResetCountdown':'10', #optimizer 'Optimizer/Strategy':'2', 'Optimizer/GravitySigma':'0.1', 'Reg/Strategy':'1', 'Reg/Force3DoF':'true', }], remappings=[ ('scan_cloud', '/hesai/points'), ('odom','/odom/icp') ], arguments=[ #ICP '--uwarn' ]), Node( package='rtabmap_util', executable='point_cloud_assembler', output='screen', parameters=[{ 'max_clouds':10, 'fixed_frame_id':'', 'use_sim_time':use_sim_time, 'qos':qos, 'qos_odom':qos, }], remappings=[ ('cloud', 'odom_filtered_input_scan'), ('odom', '/odom/icp') ]), #this is for mapping node only! Node( condition=UnlessCondition(localization), package='rtabmap_slam', executable='rtabmap', output='screen', #prefix="xterm -e gdb -ex run --args", parameters=[parameters, { 'Rtabmap/DetectionRate':'0', 'Rtabmap/TimeThr':'700', }], remappings=remappings, arguments=['-d','--udebug',] ), #this is for localization node only ! Node( condition=IfCondition(localization), package='rtabmap_slam', executable='rtabmap', output='screen', #prefix="xterm -e gdb -ex run --args", parameters=[parameters, { 'Rtabmap/DetectionRate':'1', 'Rtabmap/StartNewMapOnLoopClosure':'true', 'Rtabmap/TimeThr':'800', 'RGBD/OptimizeFromGraphEnd':'true', 'Optimizer/Iterations':'10', 'Optimizer/PriorsIgnored':'true', 'Optimizer/Epsilon':'0.01', #'GTSAM/Incremental':'true', #memory 'Mem/IncrementalMemory':'false', 'Mem/InitWMWithAllNodes':'true', 'Mem/RehearsalSimilarity':'0.58', 'Mem/BinDataKept':'false', #apriltags 'Marker/Priors':'"1 3.33 -0.64 0.47 0.02 -0.02 -1.65|2 0.96 -10.26 0.60 0.00 -0.02 -3.09"', 'landmark_angular_variance':float(9999), 'landmark_linear_variance':0.01, }], remappings=remappings, arguments=[ #rtabmap '--udebug', #'--uwarn', ]), Database Can you please advise on my mistake here to why I do not see the map ? I was trying this parameter 'RGBD/OptimizeMaxError':'0.1' and after using this the robot does not jump when it sees the tag that much while rotating or while driving as it eliminates excessive deformation based on your parameter, I wanted to know if the optimization still takes place considering landmark positions and drift in the loop when the robot is at optimum position from the tag to correct it ? What could be ideal number considering external apriltag_ros library for landmarks ? |

|

Administrator

|

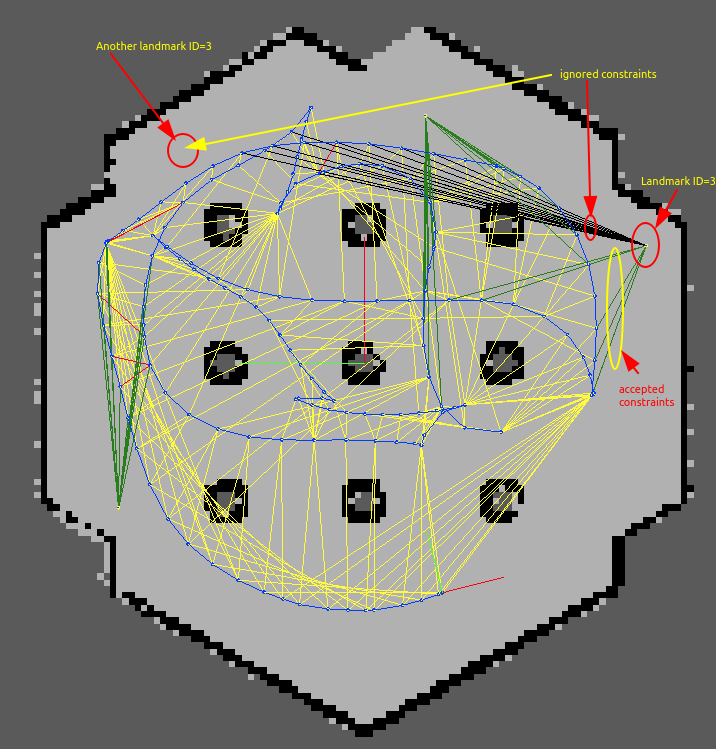

This post was updated on .

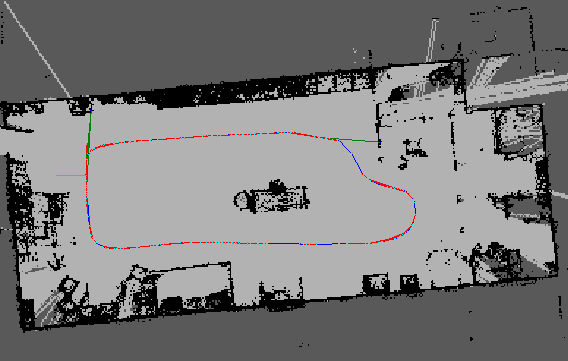

Hi,

There was indeed a bug causing the map to be cleared on start when RGBD/OptimizeFromGraphEnd is true. Should be fixed in https://github.com/introlab/rtabmap/commit/92edae35fca2b93ef7982f539ddb7664b893ceaa RGBD/OptimizeMaxError=0.1 is quite low. I set it generally between 2 and 4. I experimented a little with this demo (it is ros1 but the following could be translated to ros2 demo): <param name="RGBD/MarkerDetection" type="string" value="20"/> <param name="Marker/Length" type="string" value="0.08"/> <param name="Marker/MaxRange" type="string" value="2"/> <param name="Marker/CornerRefinementMethod" type="string" value="3"/> <param name="Marker/Dictionary" type="string" value="20"/> <param name="Marker/VarianceLinear" type="string" value="0.1"/> <param name="Marker/VarianceAngular" type="string" value="0.05"/>It seems that lower covariance on the landmark constraint will make rtabmap reject proximity detections with the laser scan with optimization error ratio > 3 (default) on landmark constraints. Setting a smaller Marker/MaxRange helps to avoid landmark detection with very bad orientation estimation (that then cause rtabmap to reject almost all new loop closures). There was no mechanism in rtabmap to reject bad landmark constraints, so I added support in this commit (note that it will work correctly only if the first time the landmark has been observed the constraint was good). Note also that I updated Vertigo robust graph (OptimizeMaxGraphError=0 and Optimizer/Robust=true) integration to also reject landmarks if constraints are poor (currently working only on landmark constraints with orientation). Contrary to OptimizeMaxGraphError>0 approach to reject the bad landmark constraints, with Vertigo it doesn't need to have the first constraint good (i.e., the first detection of a landmark can be ignored eventually if a new better estimation is detected afterwards). Here is an example where I put the same landmark at two different locations. One of the landmark ID=3 has been observed first on the right side of the map, then eventually observed again on the left side of the map, which constraints are then rejected (black) as the landmark 3 is expected to be on the right side of the map.   cheers, Mathieu |

|

Hi Mathieu,

Thank you for replying and the fix commit, and it makes sense. Quick question, I am using currently external apriltag_ros , can I still use these basic parameters without dictionary declaration for tags filtering or I do I need to build Rtabmap with Aruco module, because I read in this post ? "Marker/Length" :"0.08", "Marker/MaxRange":2", "Marker/CornerRefinementMethod":"3", "Marker/VarianceLinear":"0.1", "Marker/VarianceAngular":"0.05", |

|

Administrator

|

If you are using external apiltag_ros, the "Marker/" parameters are not used. These parameters are used only when we detect tags internally in rtabmap when "RGBD/MarkerDetection" is enabled (to work, rtabmap should be built against opencv built with aruco module, like explained in that post).

You would have to use ros parameters "landmark_angular_variance" (default 0.001) and "landmark_angular_variance" (default 0.001) to adjust how good the detections are, this will affect graph optimization and loop closure rejections. In comparison with my test above, with external apriltag_ros, I would set "landmark_angular_variance" to 0.1 and "landmark_angular_variance" to 0.05. For the max detection range, that would need to be done externally. |

|

Hi Mathieu,

Thank you for the reply, building Opencv with aruco module seems better option For Rtabmap and it works better for graph optimization and rejection of loop closure, just the only thing which I noticed is increased of queue size on the rtabmap side from 5 to 12 size as RGBD/MarkerDetection came into code and tried to reduce the resolution on camera but did not help (640x480x60, D455 running on CUDA with superpoint too), is it worth to do pre and post image decimation ? or this queue size is good enough ? . Also the RGBD/OptimizeFromGraphEnd worked too as soon as it found the first loop closure. |

|

Administrator

|

The queue size is used to synchronize input topics. Having to increase it means frame rate is different on some topics than before.

For these parameters:

Param: Mem/ImagePreDecimation = "1" [Decimation of the RGB image before

visual feature detection. If depth size is larger than

decimated RGB size, depth is decimated to be always at

most equal to RGB size. If Mem/DepthAsMask is true

and if depth is smaller than decimated RGB, depth may

be interpolated to match RGB size for feature detection.]

Param: Mem/ImagePostDecimation = "1" [Decimation of the RGB image before

saving it to database. If depth size is larger than decimated

RGB size, depth is decimated to be always at most equal to

RGB size. Decimation is done from the original image. If set

to same value than Mem/ImagePreDecimation, data already

decimated is saved (no need to re-decimate the image).]

Mem/ImagePreDecimation would affect feature detection but not marker detection / occupancy grid creation / database image resolution. Mem/ImagePostDecimation would affect occupancy grid creation / database image resolution, not feature detection / marker detection. cheers, Mathieu |

|

This post was updated on .

Hi Mathieu,

Thank you so much for the reply and for answering all the questions. I have been running for over 2 weeks now, and did not see any weird error or warning except sometimes when I change the light intensity in test lab to see localization performance with superpoint, just get warnings about features in image are 0 than stored and loop closure rejected (left it to default Vis/MinInliers) but still performance is good. So far everything works as expected in terms of tags utilization internally, Memory, superpoint, loop closures --> Nav2. I would like close this topic and just ask for one last guidance or any lookouts in parameters or anything could be enhanced for faster processing (ICP/localization), towards my latest database for if there is any parameters which could be tweaked for application in warehouse for pick and drop missions. (it is complex manufacturing environment with machines and workflow conveyor where this application will be running) Database and launch File For multiple robots, just namespacing with same shared map frame is the approach on rtabmap side ? |

|

Administrator

|

Hi,

Either: 1) you start the robots with their own roscore and not change anything in your launch files and use some other mechanism than ROS to communicate between robots, or 2) namespace all nodes, topics and TF frames if all robots are using the same roscore. See example here and look how "prefix" is used. This assumes that the URDF is also using same prefix. You can see here that we added an argument to URDF so that we can prefix all TF frames of the robot. For your database, the computation time seems reasonable. Not sure if you really need `point_cloud_assembler` for your application, you may feed the `icp_odometry` output scan to rtabmap node directly, or feeding original scan to rtabmap node. cheers, Mathieu |

|

Hi Mathieu,

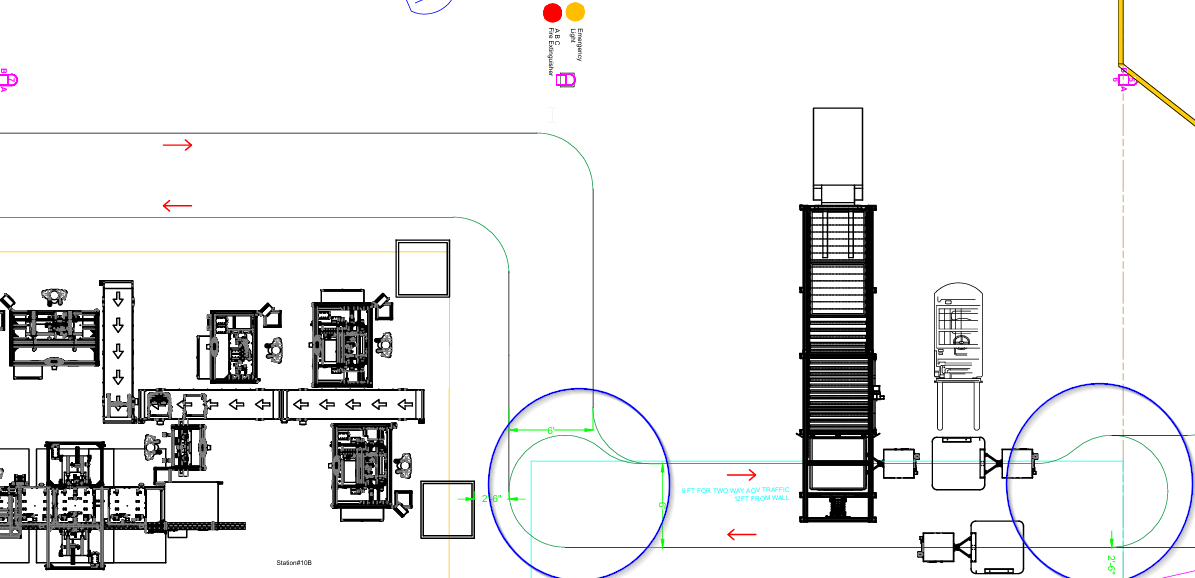

Thank you for replying and for those links, it helped setting up the multi robot configuration so far and still in process to upgrade to Humble, but as I go I did not think about as you mentioned to feed icp_odometry directly to rtabmap node as I was more likely following example of yours for vlp16 with d435 and reading along documentation from docs. I can try both methods but can you provide some input please, like how can I benchmark for both ways. Is `point_cloud_assembler` used more for drone applications or painting pointcloud application ? When you say feed directly, currently I have ekf (imu + encoders) as guess to icp_odometry, so use directly ekf into rtabmap node, wouldn't there be more drift introduced in this method vs icp_odometry method going into rtabmap node ? One application question based on looking into your racecar repo, currently I had ROS based magnetic tape and RFID based robots programmed in production for 2 years now. Upgrading to SLAM based currently, what would you have done for intersection traffic, (Apriltags or OpenRMF (this seems little pain without documentation available today)) or any another method. On Nav2 side, I am able to cancel goals and save path in database as needed and find euclidean distance if lost depending on localization_pose coming from SLAM wrt to saved poses and continue (the BT I designed is very good and working respecting re-plan based on distance before goal and stuff).  |

|

Administrator

|

The test_velodyne_***.launch examples are for handheld scanning, in which we want to record all scan points for 3D reconstruction/surveying.

For robot, I would refer you to husky demo instead, in which we care more about localization than 3D reconstruction. I use point_cloud_assembler in applications I want to record all lidar points. This obviously generates bigger databases. By "feeding directly", I was refering to filtered output scans from icp_odometry. I don't remember exactly your odometry setup, but feeding EKF (imu+encoder) to icp_odometry as guess_frame_id, then feed odomety output from icp_odometry to rtabmap sounds good. Not sure at which decision level you mean... Examples: are robot decisions based only on current sensor readings? can robots detect other robots? are there preferred lanes or general rules (always pass on right...) in case of traffic? can robots communicate with other robots nearby? are they all on same network? is there a fleet management software that can know where all the robots are at anytime so it can plan routes for them to avoid traffic? |

|

This post was updated on .

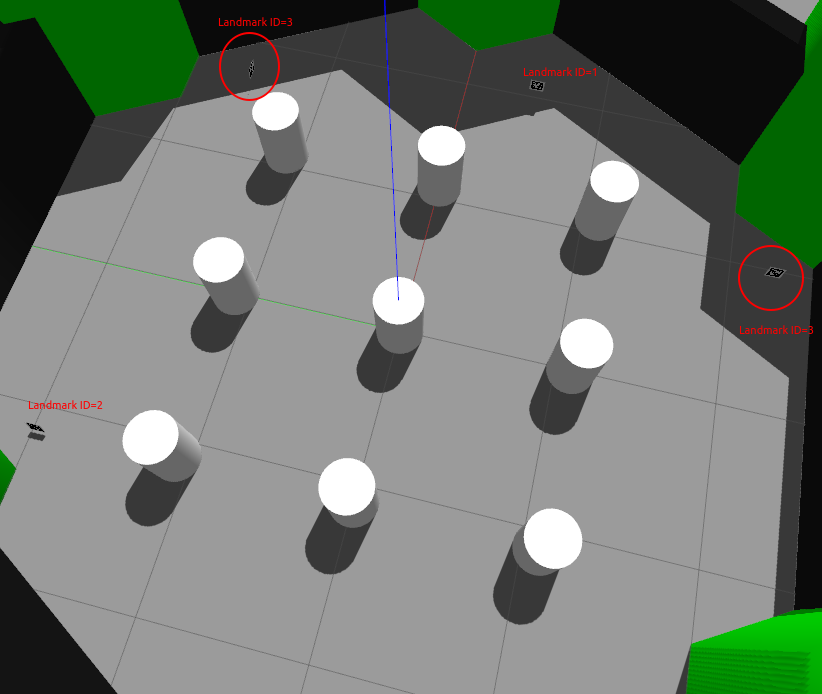

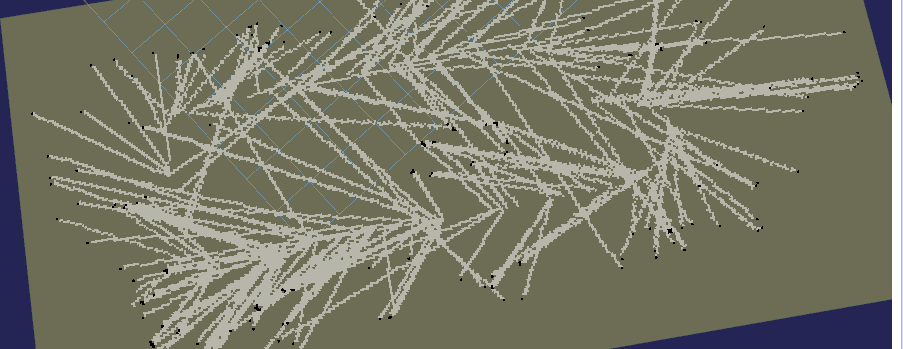

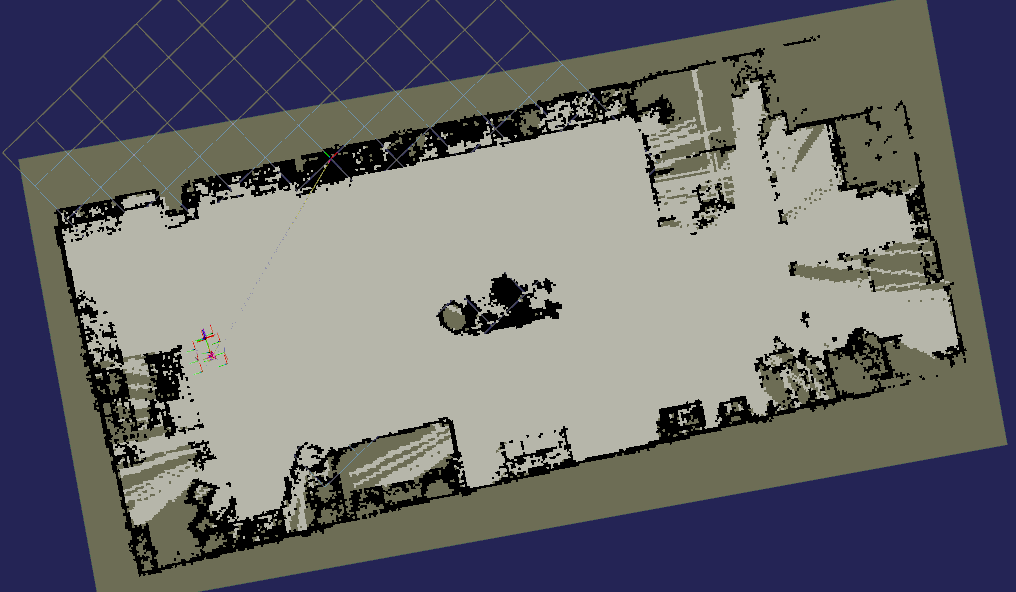

Hi Mathieu,

I tried your Husky demo configuration and map generated is strange like below after first pass, feeding icp_odom (EKF+wheels) --> /odom_filtered_input_scan --> rtabmap node directly   Launch file with DB VS using point_cloud_assembler, the map looks very good to room scale.

|

|

Administrator

|

Can you share database of the "husky" config? Is it the same environment? or some empty space?

|

|

This post was updated on .

Please see the link for Database and my launch file with config, its same environment.

Link Something I have noticed is, using Apriltags internally and using below parameters, the robot only corrects its position once during first localization and not the second time OR is it better to use MaxOptimize error without Robust parameter and increase the odom cache size ? 'Marker/Dictionary':'20', 'Marker/CornerRefinementMethod':'3', 'Marker/VarianceLinear':'0.1', 'Marker/VarianceAngular':'9999', #c 0.05 'Marker/Length':'0.1', 'Marker/MaxRange':'2.0', 'Marker/PriorsVarianceAngular':'9999', 'Marker/PriorsVarianceLinear':'0.1', 'Optimizer/GravitySigma':'0.0', 'Optimizer/Strategy':'2', 'Optimizer/Robust':'True', 'Optimizer/PriorsIgnored':'false', |

|

Administrator

|

Set Grid/NoiseFilteringRadius to 0 (to disable the noise filter).

You may tune it again afterwards. |

|

Thank you Mathieu, it worked. My bad, as I missed this parameter to unset after transitioning from handheld to husky config.

|

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |