Rtabmap mapping with two cameras

1234

1234

|

Hello everyone!

I am trying to launch rtabmap to map with two cameras, but i do not know how to merge the information given by them from the launch file. The cameras topics are camera_front and camera_back and my launch file is attached. rtabmap.launch As you can see the file is similar to the default one but i tried to add the two cameras with the param: < param name="depth_cameras" type="int" value="2"/> However, when it comes to merge them, I do not know how to do it, I just have the rgb topics remapped like this: <remap from="rgb0/image" to="/camera_front/rgb/image_raw"/> <remap from="depth0/image" to="/camera_front/depth/image_raw"/> <remap from="rgb0/camera_info" to="/camera_front/rgb/camera_info"/> <remap from="rgb1/image" to="/camera_back/rgb/image_raw"/> <remap from="depth1/image" to="/camera_back/depth/image_raw"/> <remap from="rgb1/camera_info" to="/camera_back/rgb/camera_info"/> but i do not know how to proceed now. Any help is appreciated! |

|

Administrator

|

Hi,

you may take a look at this example launch file: https://github.com/introlab/rtabmap_ros/blob/master/launch/demo/demo_two_kinects.launch If you are on older rtabmap version (e.g. Indigo), see this version instead: https://github.com/introlab/rtabmap_ros/blob/indigo-devel/launch/demo/demo_two_kinects.launch (Well, look in your rtabmap_ros/launch/demo installation to see which version you have) cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Thanks for the answer,

I tried to follow the version for Indigo, and i came up with this new launch file: rtabmap.launch However, when i try to run rtabmap with rviz I have the error on global status: "Fixed frame map does not exist". I tried to map eveything as in the default rtabmap.launch file for just 1 camera but I still have this problem. Any ideas where this problem comes from? Cheers, Adrian |

|

Administrator

|

Hi Adrian,

If you are using robot odometry: /rb1/mobile_base_controller/odom, you don't need rgbd_odometry node. In the launch file, rtabmap is subscribed to "/rb1/mobile_base_controller/odom" to get odometry, not the output of rgbd_odometry (which would be /rtabmap/odom). If any one of the input topics (rgbd cameras and odom) is not published, rtabmap will not work (/map -> /odom won't be published). Do you see on the terminal rtabmap status info at 1 Hz? If not, there is a missing input or rtabmap cannot synchronize them (e.g., timestamps are not correctly set in all topics). Can you show TF tree and rqt_graph? $ rosrun tf view_frames $ rqt_graph cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

This post was updated on .

Thanks again for the answer.

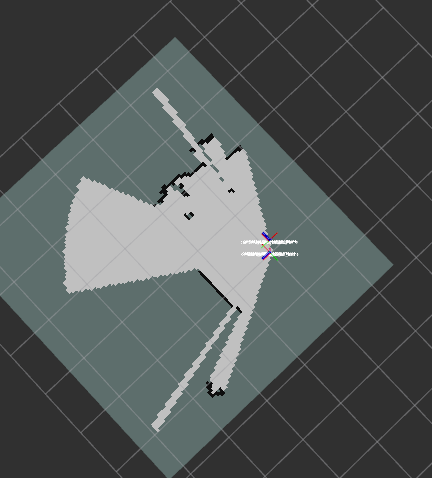

I actually found the same problem myself, those two nodes were disturbing each other so the rtabmap was going crazy. Also some of the parameters were not configured properly, as you have said. I leave attached my last launch file in case anyone runs into the same problem since it is working now: rtabmap.launch However I still have some trouble with the mapping since it seems that the laser is in the other way as it should be, I mean, the grid map that rtabmap does around the robot points to the back of the robot instead of the front. I do not know why it is happening. Cheers, Adrian [EDIT] I have added the parameter: param name="map_negative_poses_ignored" type="bool" value="true"/> and now the grid map looks like this  but it appears a black line in front of the robot and I do not know what this is. Besides I have the warning [pcl::IterativeClosestPoint::computeTransformation] Not enough correspondences found. Relax your threshold parameters every time I move the robot. but it appears a black line in front of the robot and I do not know what this is. Besides I have the warning [pcl::IterativeClosestPoint::computeTransformation] Not enough correspondences found. Relax your threshold parameters every time I move the robot.

|

|

Administrator

|

Hi,

I see you are using "gen_scan" and is the sensor tilted?. The sensor should be parallel to ground to work properly as it uses the middle line of the depth image, similar to depthimage_to_laserscan node. You may want to generate the laser scan outside rtabmap node if the camera is tilted using pointcloud_to_laserscan node. For the ray tracing behind the sensor, is it similar to this? If so, I'll check if there is a bug with "gen_scan" about that. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

I think it is that, the sensor is a bit tilted. However it can be also the case that it (camera) is somehow in a higher or lower position than the software thinks it is and part of it collapses with the floor. Actually if I see the color spectrum with one of my cameras it looks like it is like a bit mixed with the floor (I will edit this post later with some pictures). Meanwhile I will also try to run the node separatedly as you said.

For the ray tracing it is actually that, I found that post previously and it looks like it is fixed with the param "map_negative_poses_ignored" or, at least, does not map a circular space behind the robot. Again i will post a picture later on. Cheers, Adrian |

Re: Rtabmap mapping with two cameras

|

This post was updated on .

As I said, I leave here some pictures of the simulation:

This first is with the param "map_negative_poses_ignored" set to false, which makes the robot's sensor think that it should map to the back of the robot:  This one is with the param set to true:  As you can see, it looks like there is an object or something blocking the view, but there is actually nothing there. These two images correspond to one of the cameras color representation. It looks like the cameras sensor is somehow mixed with the floor, or at least, that is what i think, since the line of intersection is in the same position as the line in the previous pictures with the grid map:   I also tried taking out the sensor as a different node, but I get this error: SensorData.cpp:594::uncompressDataConst() Requested laser scan data, but the sensor data (1) doesn't have laser scan. And there is no grid map on rviz to see. Cheers, Adrian |

|

Administrator

|

This post was updated on .

Hi,

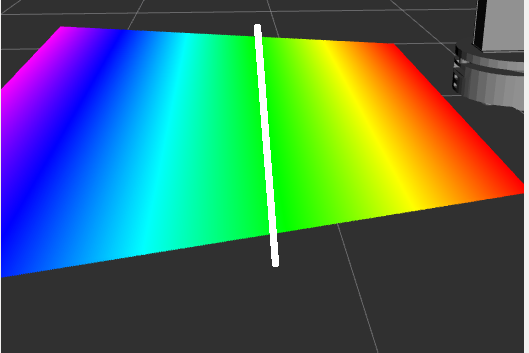

There was a bug in the laser scan generation from the depth images (in rtabmap node). It is fixed in this commit. Before:  With the fix:  For convenience, I added "map_negative_scan_empty_ray_tracing" to avoid filling space between the two kinects (as in the second screenshot above), while being able to keep "map_negative_poses_ignored" to update grid map with latest data even if we are not moving. Note that demo_two_kinects.launch example assumes that the cameras are parallel to ground, like in this post: http://official-rtab-map-forum.206.s1.nabble.com/demo-two-kinect-data-error-td1231.html#a1251, so the middle lines in the cameras simulate directly a laser scan. In your setup, the kinect is tilted toward the ground. With "gen_scan" (which works exactly like depthimage_to_laserscan), the scan is generated like in the image below (middle line of the depth image):  Can you show TF in RVIZ to better visualize how the cameras are configured? Here is an example with pointcloud_to_laserscan and a single camera tilted 45 degrees toward the ground:

<launch>

<!-- The fake scan will be 20 cm over /base_link -->

<node pkg="tf" type="static_transform_publisher" name="base_link"

args="0 0 0.2 0 0 0 base_link base_scan_link 100" />

<!-- Camera is tilted 45 degrees toward the ground, 80 cm over base_scan_link -->

<node pkg="tf" type="static_transform_publisher" name="base_scan_link"

args="0 0 0.8 0 0.785398163 0 base_scan_link camera_link 100" />

<!-- Camera -->

<include file="$(find freenect_launch)/launch/freenect.launch">

<arg name="depth_registration" value="True" />

</include>

<group ns="camera">

<!-- Run a VoxelGrid filter to clean NaNs and downsample the data -->

<node pkg="nodelet" type="nodelet" name="voxel_grid" args="load pcl/VoxelGrid camera_nodelet_manager" output="screen">

<remap from="~input" to="/camera/depth_registered/points" />

<remap from="~output" to="/camera/depth_registered/points_downsampled" />

<rosparam>

filter_field_name: z

filter_limit_min: 0.01

filter_limit_max: 10

filter_limit_negative: False

leaf_size: 0.01

</rosparam>

</node>

<node pkg="nodelet" type="nodelet" name="pointcloud_to_laserscan" args="load pointcloud_to_laserscan/pointcloud_to_laserscan_nodelet camera_nodelet_manager" output="screen">

<remap from="cloud_in" to="/camera/depth_registered/points_downsampled"/>

<!-- in base_scan_link referential -->

<param name="min_height" type="double" value="-0.05"/>

<param name="max_height" type="double" value="0.05"/>

<param name="target_frame" type="string" value="base_scan_link"/>

<param name="angle_min" type="double" value="-0.65"/>

<param name="angle_max" type="double" value="0.65"/>

<param name="angle_increment" type="double" value="0.006"/>

<param name="range_min" type="double" value="0"/>

<param name="range_max" type="double" value="4"/>

</node>

</group>

</launch>

For rtabmap node: <node pkg="rtabmap_ros" type="rtabmap" name="rtabmap"> ... <param name="subscribe_scan" type="bool" value="true"/> <remap from="scan" to="/camera/scan"/> <!-- output of pointcloud_to_laserscan --> </node> In red the computed laser scan with the corresponding grid map:  cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

This post was updated on .

Thanks for the correction and commit.

But is it for indigo? Because I actually do not have the file util3d.cpp in my rtabmap version. In addition, if I try to add the laser node and suscribe to it with: param name="subscribe_scan" type="bool" value="true"/> I run into the error that this is not supported, it says that the conditions are that there is only one camera, and I am using two. Cheers |

|

Administrator

|

Hi,

I cannot reproduce the error. What exactly is it? For util3d.cpp, that file is in rtabmap project, not rtabmap_ros project. You should update rtabmap libraries. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Sorry I actually made that error.

I am updating the rtabmap and trying to run everything. I will update the post with news if it works or not, thank you. Cheers |

Re: Rtabmap mapping with two cameras

|

In reply to this post by matlabbe

Hello again,

apparently in this new indigo version (downloaded from github) the remapping has changed. I mean, for using 2 cameras before I had to use: <param name="depth_cameras" type="int" value="2"/> <remap from="/rgb0/image" to="/camera_front/rgb/image_raw"/> <remap from="/depth0/image" to="/camera_front/depth/image_raw"/> <remap from="/rgb0/camera_info" to="/camera_front/rgb/camera_info"/> <remap from="/rgb1/image" to="/camera_back/rgb/image_raw"/> <remap from="/depth1/image" to="/camera_back/depth/image_raw"/> <remap from="/rgb1/camera_info" to="/camera_back/rgb/camera_info"/> But know when I launch the rtabmap with this it says that I must use <param name="rgb_cameras" type="int" value="1"/> <remap from="/rtabmap/rgb/image" to="/camera_front/rgb/image_raw"/> <remap from="/rtabmap/depth/image" to="/camera_front/depth/image_raw"/> <remap from="/rtabmap/rgb/camera_info" to="/camera_front/rgb/camera_info"/>So when I try to add two it doesnt work: <remap from="/rtabmap/rgb0/image" to="/camera_front/rgb/image_raw"/> <remap from="/rtabmap/depth0/image" to="/camera_front/depth/image_raw"/> <remap from="/rtabmap/rgb0/camera_info" to="/camera_front/rgb/camera_info"/> <remap from="/rtabmap/rgb1/image" to="/camera_back/rgb/image_raw"/> <remap from="/rtabmap/depth1/image" to="/camera_back/depth/image_raw"/> <remap from="/rtabmap/rgb1/camera_info" to="/camera_back/rgb/camera_info"/>What is the remapping to use? In addition, I would like to ask you if you have published some paper regarding the topic on how rtabmap buils the maps (not about the loop closure detection) since I will try to make a comparison between some mapping softwares and it is important to know the concept behind the mapping. Cheers |

|

Administrator

|

Hi,

You should use the remapping found in your demo_two_kinects.launch version. In particular this on the latest version: <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_rgbd" type="bool" value="true"/> <param name="rgbd_cameras" type="int" value="2"/> <remap from="rgbd_image0" to="/camera1/rgbd_image"/> <remap from="rgbd_image1" to="/camera2/rgbd_image"/> For how the occupancy grid maps are created, I'm currently writing a paper which will include this. That paper does standard 2D ray tracing with laser scans, but rtabmap_ros has more options (more or less robust to dynamic environments) depending on the sensor used and computing power available. cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hi Mathieu, I am trying to install rtabmap_ros from github in the indigo-devel version in order to apply the changes that you made for the laser, but catkin_make gives a lot of errors:

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp: In member function ‘void rtabmap_ros::OdometryROS::processData(const rtabmap::SensorData&, const ros::Time&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:396:38: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(0) = info.variance*2; // xx

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:397:38: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(7) = info.variance*2; // yy

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:398:39: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(14) = info.variance*2; // zz

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:399:39: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(21) = info.variance*2; // rr

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:400:39: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(28) = info.variance*2; // pp

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:401:39: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.pose.covariance.at(35) = info.variance*2; // yawyaw

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:417:48: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(0) = setTwist?info.variance:BAD_COVARIANCE; // xx

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:418:48: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(7) = setTwist?info.variance:BAD_COVARIANCE; // yy

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:419:49: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(14) = setTwist?info.variance:BAD_COVARIANCE; // zz

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:420:49: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(21) = setTwist?info.variance:BAD_COVARIANCE; // rr

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:421:49: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(28) = setTwist?info.variance:BAD_COVARIANCE; // pp

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:422:49: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

odom.twist.covariance.at(35) = setTwist?info.variance:BAD_COVARIANCE; // yawyaw

^

In file included from /opt/ros/indigo/include/ros/ros.h:40:0,

from /home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.h:31,

from /home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:28:

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:547:114: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

NODELET_INFO( "Odom: quality=%d, std dev=%fm, update time=%fs", info.inliers, pose.isNull()?0.0f:std::sqrt(info.variance), (ros::WallTime::now()-time).toSec());

^

/opt/ros/indigo/include/ros/console.h:342:165: note: in definition of macro ‘ROSCONSOLE_PRINT_AT_LOCATION_WITH_FILTER’

::ros::console::print(filter, __rosconsole_define_location__loc.logger_, __rosconsole_define_location__loc.level_, __FILE__, __LINE__, __ROSCONSOLE_FUNCTION__, __VA_ARGS__)

^

/opt/ros/indigo/include/ros/console.h:375:7: note: in expansion of macro ‘ROSCONSOLE_PRINT_AT_LOCATION’

ROSCONSOLE_PRINT_AT_LOCATION(__VA_ARGS__); \

^

/opt/ros/indigo/include/ros/console.h:557:35: note: in expansion of macro ‘ROS_LOG_COND’

#define ROS_LOG(level, name, ...) ROS_LOG_COND(true, level, name, __VA_ARGS__)

^

/opt/ros/indigo/include/rosconsole/macros_generated.h:112:35: note: in expansion of macro ‘ROS_LOG’

#define ROS_INFO_NAMED(name, ...) ROS_LOG(::ros::console::levels::Info, std::string(ROSCONSOLE_NAME_PREFIX) + "." + name, __VA_ARGS__)

^

/opt/ros/indigo/include/nodelet/nodelet.h:59:27: note: in expansion of macro ‘ROS_INFO_NAMED’

#define NODELET_INFO(...) ROS_INFO_NAMED(getName(), __VA_ARGS__)

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/nodelets/OdometryROS.cpp:547:2: note: in expansion of macro ‘NODELET_INFO’

NODELET_INFO( "Odom: quality=%d, std dev=%fm, update time=%fs", info.inliers, pose.isNull()?0.0f:std::sqrt(info.variance), (ros::WallTime::now()-time).toSec());

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp: In function ‘rtabmap::Signature rtabmap_ros::nodeDataFromROS(const NodeData&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:589:42: error: no matching function for call to ‘rtabmap::SensorData::SensorData(cv::Mat, const _laserScanMaxPts_type&, const _laserScanMaxRange_type&, cv::Mat, cv::Mat, rtabmap::StereoCameraModel&, const _id_type&, const _stamp_type&, cv::Mat)’

compressedMatFromBytes(msg.userData)):

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:589:42: note: candidates are:

In file included from /usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/Signature.h:42:0,

from /home/adrian/catkin_ws/src/rtabmap_ros/include/rtabmap_ros/MsgConversion.h:41,

from /home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:28:

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:117:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const rtabmap::StereoCameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:117:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:108:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const rtabmap::StereoCameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:108:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:97:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const std::vector<rtabmap::CameraModel>&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:97:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:88:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const std::vector<rtabmap::CameraModel>&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:88:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:77:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:77:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:68:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:68:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:60:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:60:2: note: candidate expects 5 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:53:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:53:2: note: candidate expects 4 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:50:2: note: rtabmap::SensorData::SensorData()

SensorData();

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:50:2: note: candidate expects 0 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:46:19: note: rtabmap::SensorData::SensorData(const rtabmap::SensorData&)

class RTABMAP_EXP SensorData

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:46:19: note: candidate expects 1 argument, 9 provided

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:599:42: error: no matching function for call to ‘rtabmap::SensorData::SensorData(cv::Mat, const _laserScanMaxPts_type&, const _laserScanMaxRange_type&, cv::Mat, cv::Mat, std::vector<rtabmap::CameraModel>&, const _id_type&, const _stamp_type&, cv::Mat)’

compressedMatFromBytes(msg.userData)));

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:599:42: note: candidates are:

In file included from /usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/Signature.h:42:0,

from /home/adrian/catkin_ws/src/rtabmap_ros/include/rtabmap_ros/MsgConversion.h:41,

from /home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:28:

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:117:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const rtabmap::StereoCameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:117:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:108:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const rtabmap::StereoCameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:108:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:97:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const std::vector<rtabmap::CameraModel>&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:97:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:88:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const std::vector<rtabmap::CameraModel>&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:88:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:77:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::LaserScanInfo&, const cv::Mat&, const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:77:2: note: candidate expects 8 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:68:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:68:2: note: candidate expects 6 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:60:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, const rtabmap::CameraModel&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:60:2: note: candidate expects 5 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:53:2: note: rtabmap::SensorData::SensorData(const cv::Mat&, int, double, const cv::Mat&)

SensorData(

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:53:2: note: candidate expects 4 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:50:2: note: rtabmap::SensorData::SensorData()

SensorData();

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:50:2: note: candidate expects 0 arguments, 9 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:46:19: note: rtabmap::SensorData::SensorData(const rtabmap::SensorData&)

class RTABMAP_EXP SensorData

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/SensorData.h:46:19: note: candidate expects 1 argument, 9 provided

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp: In function ‘void rtabmap_ros::nodeDataToROS(const rtabmap::Signature&, rtabmap_ros::NodeData&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:618:47: error: ‘const class rtabmap::SensorData’ has no member named ‘laserScanMaxPts’

msg.laserScanMaxPts = signature.sensorData().laserScanMaxPts();

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:619:49: error: ‘const class rtabmap::SensorData’ has no member named ‘laserScanMaxRange’

msg.laserScanMaxRange = signature.sensorData().laserScanMaxRange();

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp: In function ‘rtabmap::OdometryInfo rtabmap_ros::odomInfoFromROS(const OdomInfo&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:715:7: error: ‘class rtabmap::OdometryInfo’ has no member named ‘variance’

info.variance = msg.variance;

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp: In function ‘void rtabmap_ros::odomInfoToROS(const rtabmap::OdometryInfo&, rtabmap_ros::OdomInfo&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/MsgConversion.cpp:756:22: error: ‘const class rtabmap::OdometryInfo’ has no member named ‘variance’

msg.variance = info.variance;

^

make[2]: *** [rtabmap_ros/CMakeFiles/rtabmap_ros.dir/src/nodelets/OdometryROS.cpp.o] Error 1

make[2]: *** Se espera a que terminen otras tareas....

/home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp: In member function ‘std::map<int, rtabmap::Transform> MapsManager::updateMapCaches(const std::map<int, rtabmap::Transform>&, const rtabmap::Memory*, bool, bool, bool, bool, bool, const std::map<int, rtabmap::Signature>&)’:

/home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp:592:72: error: no matching function for call to ‘rtabmap::OctoMap::addToCache(const int&, pcl::PointCloud<pcl::PointXYZRGB>::Ptr&, pcl::PointCloud<pcl::PointXYZRGB>::Ptr&)’

octomap_->addToCache(iter->first, groundCloud, obstaclesCloud);

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp:592:72: note: candidates are:

In file included from /home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp:50:0:

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/OctoMap.h:63:7: note: void rtabmap::OctoMap::addToCache(int, pcl::PointCloud<pcl::PointXYZRGB>::Ptr&, pcl::PointCloud<pcl::PointXYZRGB>::Ptr&, const pcl::PointXYZ&)

void addToCache(int nodeId,

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/OctoMap.h:63:7: note: candidate expects 4 arguments, 3 provided

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/OctoMap.h:67:7: note: void rtabmap::OctoMap::addToCache(int, const cv::Mat&, const cv::Mat&, const Point3f&)

void addToCache(int nodeId,

^

/usr/local/lib/rtabmap-0.12/../../include/rtabmap-0.12/rtabmap/core/OctoMap.h:67:7: note: candidate expects 4 arguments, 3 provided

/home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp:673:16: error: ‘class rtabmap::SensorData’ has no member named ‘laserScanMaxRange’

data.laserScanMaxRange()>gridMaxUnknownSpaceFilledRange_?gridMaxUnknownSpaceFilledRange_:data.laserScanMaxRange());

^

/home/adrian/catkin_ws/src/rtabmap_ros/src/MapsManager.cpp:673:105: error: ‘class rtabmap::SensorData’ has no member named ‘laserScanMaxRange’

data.laserScanMaxRange()>gridMaxUnknownSpaceFilledRange_?gridMaxUnknownSpaceFilledRange_:data.laserScanMaxRange());

^

make[2]: *** [rtabmap_ros/CMakeFiles/rtabmap_ros.dir/src/MsgConversion.cpp.o] Error 1

make[2]: *** [rtabmap_ros/CMakeFiles/rtabmap_ros.dir/src/MapsManager.cpp.o] Error 1

make[1]: *** [rtabmap_ros/CMakeFiles/rtabmap_ros.dir/all] Error 2

make: *** [all] Error 2

Invoking "make -j8 -l8" failed

Is it that the github version has some mistakes or that i dont have the proper version of rtabmap (installed from github following tutorial indigo-devel)? Cheers |

Re: Rtabmap mapping with two cameras

|

Hello again,

I am starting to think that the previous error is coming from several different ones in the installation of ros. I'd better just reinstall everything and start over again. Another point I am looking into is the realization of the maps as I asked you before. The paper you sent me does not talk a lot about the facts I am more interested in, which can be reduced to the question: How is the relation between the 2D map and the 3D map? About this I have several questions: how does rtabmap use the laser for the mapping? Is it mandatory to use or is it possible to use only a camera? does rtabmap perform a localization of the 2D map and then translates it to the 3D map? does it get a 2D map from a camera and a 3D map from a different source and then combines them or does it combine them on the road from the same sources? What is the difference between using one camera or two; is one of them used for the mapping and the other as laser or are both used for mapping and combined their images? is the laser independent of the cameras? Sorry for the long post, I hope you can help me. Cheers |

|

Administrator

|

Hi,

For your errors above, it seems related to multiple rtabmap installations with different versions. 1- Make sure the rtabmap/rtabmap_ros binaries are not installed 2- Update both rtabmap and rtabmap_ros projects (git pull) 3- Make sure there are no rtabmap installed from source anywhere: # If installed in /usr/local $ rm /usr/local/lib/librtabmap* $ rm -r /usr/local/lib/rtabmap* $ rm -r /usr/local/include/rtabmap* $ rm /usr/local/bin/rtabmap* # If installed in ~/catkin_ws/devel $ rm ~/catkin_ws/devel/lib/librtabmap* $ rm -r ~/catkin_ws/devel/lib/rtabmap* $ rm -r ~/catkin_ws/devel/include/rtabmap* $ rm ~/catkin_ws/devel/bin/rtabmap*4- In rtabmap project, delete everything in build folder, and remake: $ rm -r rtabmap/build/* $ cd rtabmap/build $ cmake -DCMAKE_INSTALL_PREFIX=~/catkin_ws/devel .. $ make install5- catkin_make For your questions: They both generated with sensor data contained in the map's graph. When the graph changes (e.g., loop closure correction), the maps are regenerated/updated according to each new poses. It uses it to 1) create the 2D occupancy grid map (Grid/FromDepth=false), and/or 2) refine graph's links with ICP (Reg/Strategy=1, RGBD/NeighborLinkRefining=true), and/or 3) add proximity links, similar to loop closure detection but only by matching nearby scans (RGBD/ProximityBySpace=true) A LiDAR is not required, while it can add precision in some environments. The minimum requirement is a RGB-D camera or a stereo camera. Localization is done by matching visual features and by scan matching (if subscribed to a scan topic and RGBD/ProximityBySpace=true) using directly the nodes in the map's graph. Thus 2D map and the 3D map are not used for localization, they are the results of the optimized graph/pose correction. It may be complicated because of the choice of the sensors, but to make it simple you must choose a single sensor to create the map. Here some examples (note that some examples can produce both 2D and 3D maps): 1) 2d scan input (/scan + subscribe_scan=true) + Grid/FromDepth=false -----> 2D grid map 2) 3d point cloud input (/scan_cloud + subscribe_scan_cloud=true) + Grid/FromDepth=false + Grid/3D=true -----> 3D grid map and 2D grid map 3) 3d point cloud input (/scan_cloud + subscribe_scan_cloud=true) + Grid/FromDepth=false + Grid/3D=false -----> 2D grid map 4) Grid/FromDepth=true + Grid/3D=true -----> 3D grid map and 2D grid map from camera's depth 5) Grid/FromDepth=true + Grid/3D=false -----> 2D grid map from camera's depth There is no way to combine multiple sensors to create the map (multi-camera is considered one sensor). If you want to combine multiple sensor data, you have to merge them together into a single point cloud per acquisition before rtabmap node, then use /scan_cloud input topic. They are both combined into a single image. The mapping doesn't know that there are two or more cameras. The advantage is the extended FOV (better visual odometry and localization). If laser scans are from a different sensor, yes. In some examples (like this one), we create a fake laser scan from the kinect for convenience to get a fast 2D grid map created like with a real LiDAR (2D ray tracing), so in these cases they are dependent (same source). cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Hello Mathieu, awesome answer, thank you so much for your work!

Just one more question, before I start mapping in real-world, about the odometry; in which odometry do you relay on more? I assume it is on the visual odometry (cameras) but, is it on the robot's wheels (robot's odometry)? Or is it that you take on account both? In that case, how important is each part and how do you combine them? Cheers, Adrian PD: I modified part of the code to correct the laser for the indigo version like you did in your commit. I leave the file util3d.cpp here in case you want to upload it on github or anyone needs it. |

|

Administrator

|

Hi,

I prefer using only robot wheel odometry in our environment, as there are a lot of white walls (no visual features to track) and its odometry is okay. You have choice to use visual odometry (rtabmap_ros/rgbd_odometry or any other visual odometry packages) or odometry from the robot. To combine them, I will refer you to robot_localization package, which uses an Extended Kalman Filter. For code modifications, it will be easier to see the changes if you fork the github project and change it on your side (even send a pull request). Note that you can use master branch (all latest changes) on Indigo. The indigo-devel branch is updated only when there is a new ROS binary release (so the indigo-devel branch matches the current released ros-indigo-rtabmap). cheers, Mathieu |

Re: Rtabmap mapping with two cameras

|

Ok thanks for the answer; I will start working on that.

Another question is about the pointclouds. I would like to use the configuration with a 3D grid map and a 2D grid map but with 2 cameras and a laser as I would like to maximize the precision and details of the maps. I already know how to mix the pointcoulds generated by each camera but, how do I make rtabmap use of this pointcloud for creating the maps? Is there a way to remap from this to scan_cloud or there exist a determined parameter to remap? (if there is where can i find it to avoid repeatdly questioning) Note that the point cloud has format /camera_merged/depth/points Cheers |

«

Return to Official RTAB-Map Forum

|

1 view|%1 views

| Free forum by Nabble | Edit this page |